Abhishek Sinha

On the Benefits of Attributional Robustness

Dec 10, 2019

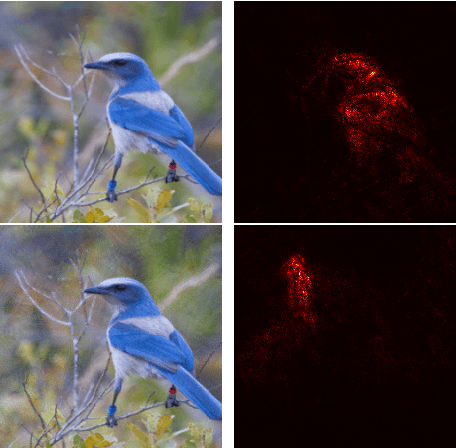

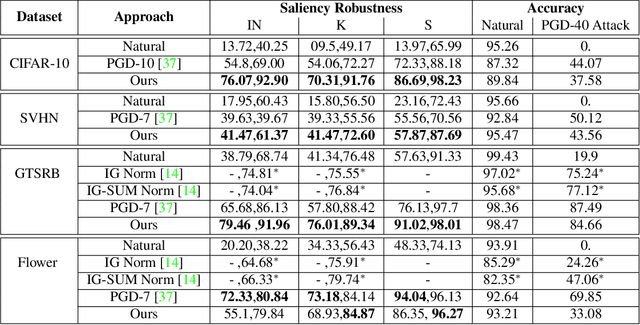

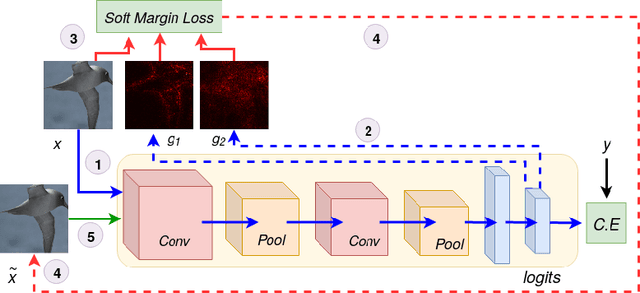

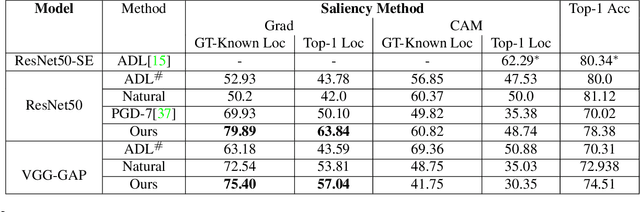

Abstract: Interpretability is an emerging area of research in trustworthy machine learning. Safe deployment of machine learning systems requires that both the prediction and its explanation be reliable and robust. Recently, it was shown that one can craft perturbations that produce perceptually indistinguishable inputs with the same prediction yet very different interpretations. We tackle the problem of attributional robustness (i.e., models having robust explanations) by maximizing the alignment between the input image and its saliency map using a soft-margin triplet loss. We propose a robust attribution training methodology that improves on the state-of-the-art attributional robustness measure by a margin of approximately 6-18% on several standard datasets, i.e., SVHN, CIFAR-10 and GTSRB. We further show the utility of the proposed robust model in the domain of weakly supervised object localization and segmentation. Our proposed robust model also achieves a new state-of-the-art object localization accuracy on the CUB-200 dataset.
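As a rough illustration of the soft-margin triplet loss mentioned in the abstract, the sketch below computes `log(1 + exp(d_pos - d_neg))` with a cosine distance. The choice of triplet is an assumption made here for illustration (anchor = flattened image, positive = saliency map for the true class, negative = saliency map for another class), as are the function names; the abstract does not specify these details.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

def soft_margin_triplet_loss(anchor, positive, negative):
    """Soft-margin triplet loss: log(1 + exp(d(a, p) - d(a, n))).

    Hypothetical roles (an assumption, not taken from the abstract):
    anchor = image, positive = true-class saliency, negative = other-class saliency.
    """
    d_pos = cosine_distance(anchor, positive)
    d_neg = cosine_distance(anchor, negative)
    return math.log1p(math.exp(d_pos - d_neg))

# Toy vectors: the loss is smaller when the positive is aligned with the anchor.
image = [1.0, 0.0]
aligned_saliency = [1.0, 0.0]
misaligned_saliency = [0.0, 1.0]
loss_aligned = soft_margin_triplet_loss(image, aligned_saliency, misaligned_saliency)
loss_swapped = soft_margin_triplet_loss(image, misaligned_saliency, aligned_saliency)
```

Minimizing this quantity pulls the anchor toward the positive and away from the negative without a hard margin hyper-parameter, which is the usual motivation for the soft-margin form.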

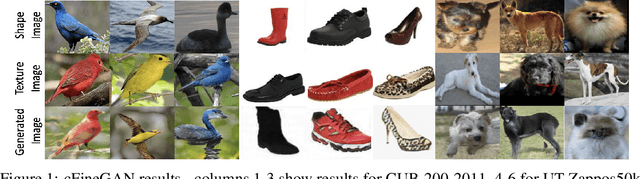

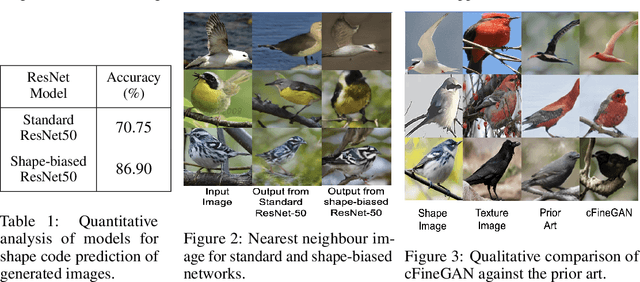

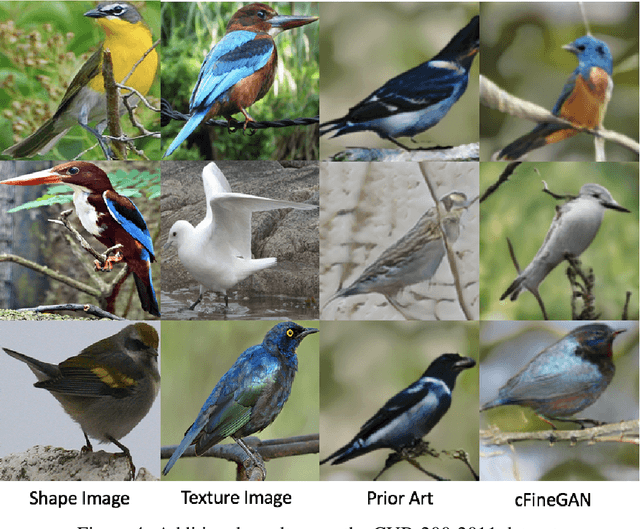

cFineGAN: Unsupervised multi-conditional fine-grained image generation

Dec 06, 2019

Abstract: We propose cFineGAN, an unsupervised multi-conditional image generation pipeline that generates an image conditioned on two input images such that the generated image preserves the texture of one input and the shape of the other. To achieve this goal, we extend the recently proposed FineGAN (Singh et al., 2018) and make use of standard as well as shape-biased pre-trained ImageNet models. We demonstrate, both qualitatively and quantitatively, the benefit of using the shape-biased network. We present our image generation results across three benchmark datasets: CUB-200-2011, Stanford Dogs and UT Zappos50k.

A Method for Computing Class-wise Universal Adversarial Perturbations

Dec 01, 2019

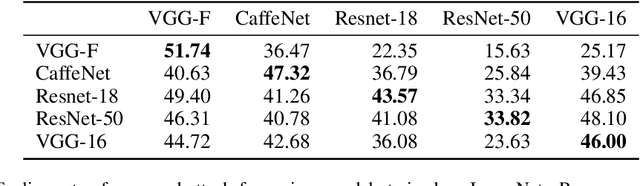

Abstract: We present an algorithm for computing class-specific universal adversarial perturbations for deep neural networks. Such perturbations can induce misclassification in a large fraction of images of a specific class. Unlike previous methods that use iterative optimization to compute a universal perturbation, the proposed method employs a perturbation that is a linear function of the weights of the neural network and hence can be computed much faster. The method does not require any training data and has no hyper-parameters. The attack obtains a fooling rate of 34% to 51% on state-of-the-art deep neural networks on ImageNet and transfers across models. We also study the characteristics of the decision boundaries learned by standard and adversarially trained models to understand the universal adversarial perturbations.

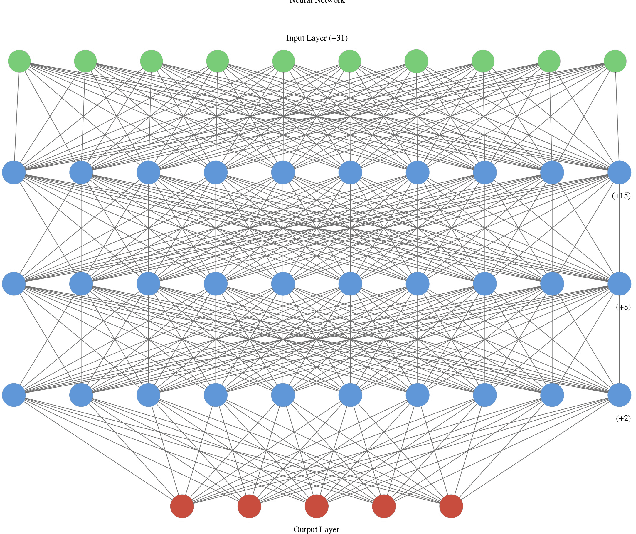

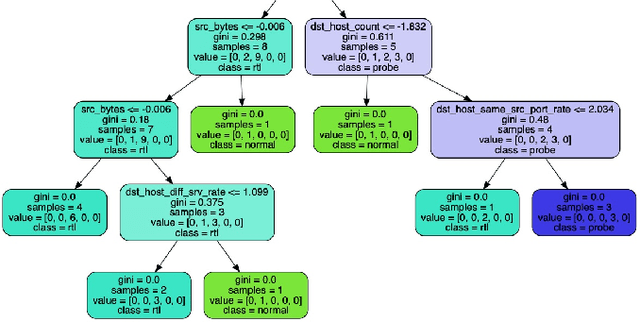

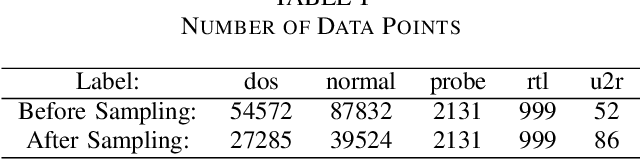

Intrusion Detection using Sequential Hybrid Model

Oct 29, 2019

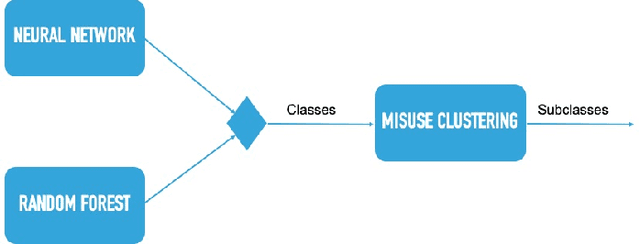

Abstract: A large amount of work has been done on the KDD 99 dataset, most of which uses a hybrid model in which anomaly detection and misuse detection run in parallel. In order to further classify the intrusions, our approach to network intrusion detection uses two different anomaly detection models, followed by misuse detection applied to the combined output of the previous step. The end goal is to verify the anomalies flagged by the anomaly detection algorithms and clarify whether they are actual intrusions or random outliers relative to the trained normal behavior (and thus to try to reduce the number of false positives). We aim to detect a pattern in this novel intrusion technique itself, not to handle such intrusions. The intrusions were detected to a very high degree of accuracy.

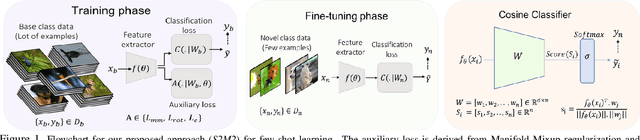

Charting the Right Manifold: Manifold Mixup for Few-shot Learning

Aug 07, 2019

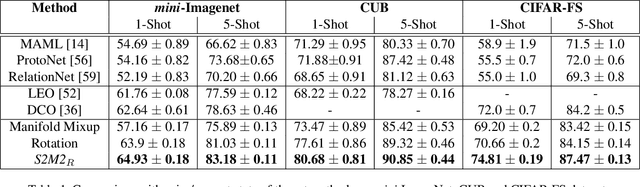

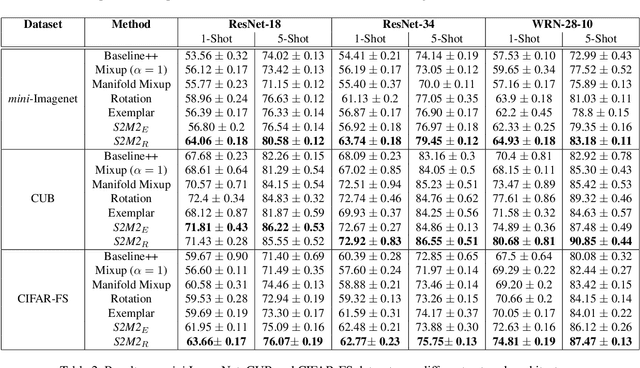

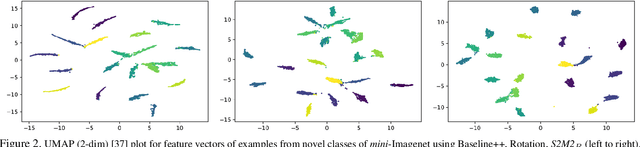

Abstract: Few-shot learning algorithms aim to learn model parameters capable of adapting to unseen classes with the help of only a few labeled examples. A recent regularization technique, Manifold Mixup, focuses on learning a general-purpose representation that is robust to small changes in the data distribution. Since the goal of few-shot learning is closely linked to robust representation learning, we study Manifold Mixup in this problem setting. Self-supervised learning is another technique that learns semantically meaningful features using only the inherent structure of the data. This work investigates the role of learning a relevant feature manifold for few-shot tasks using self-supervision and regularization techniques. We observe that regularizing the feature manifold, enriched via self-supervised techniques, with Manifold Mixup significantly improves few-shot learning performance. We show that our proposed method S2M2 beats the current state-of-the-art accuracy on standard few-shot learning datasets like CIFAR-FS, CUB and mini-ImageNet by 3-8%. Through extensive experimentation, we show that the features learned using our approach generalize to complex few-shot evaluation tasks and cross-domain scenarios, and are robust against slight changes to the data distribution.
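For readers unfamiliar with Manifold Mixup, the core mixing step can be sketched as follows: the hidden representations of two examples are linearly interpolated with a coefficient drawn from a Beta distribution, and their one-hot labels are interpolated with the same coefficient. This is only a minimal illustration of that step, not the S2M2 training procedure; the function name, the `alpha` default, and the toy vectors are assumptions. In a full model the mixing would happen at a randomly chosen layer and the forward pass would continue from the mixed activations.

```python
import random

def manifold_mixup(h_a, h_b, y_a, y_b, alpha=2.0, rng=None):
    """Interpolate two hidden vectors and their one-hot labels.

    lam ~ Beta(alpha, alpha); alpha=2.0 is an illustrative default,
    not a value taken from the abstract.
    """
    rng = rng or random.Random()
    lam = rng.betavariate(alpha, alpha)
    h_mix = [lam * a + (1.0 - lam) * b for a, b in zip(h_a, h_b)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return h_mix, y_mix, lam

# Toy usage: two 2-dimensional hidden states from different classes.
rng = random.Random(0)  # fixed seed so the sketch is reproducible
h_mix, y_mix, lam = manifold_mixup([1.0, 0.0], [0.0, 1.0],
                                   [1.0, 0.0], [0.0, 1.0], rng=rng)
```

The mixed label remains a valid probability vector, so it can be used directly as a soft target for a cross-entropy loss.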

Harnessing the Vulnerability of Latent Layers in Adversarially Trained Models

May 13, 2019

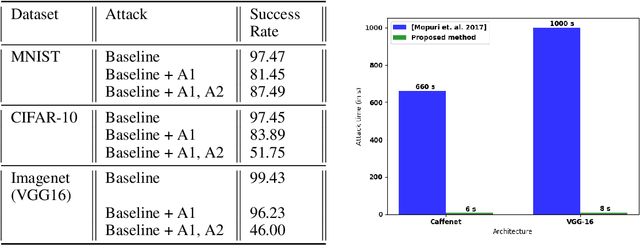

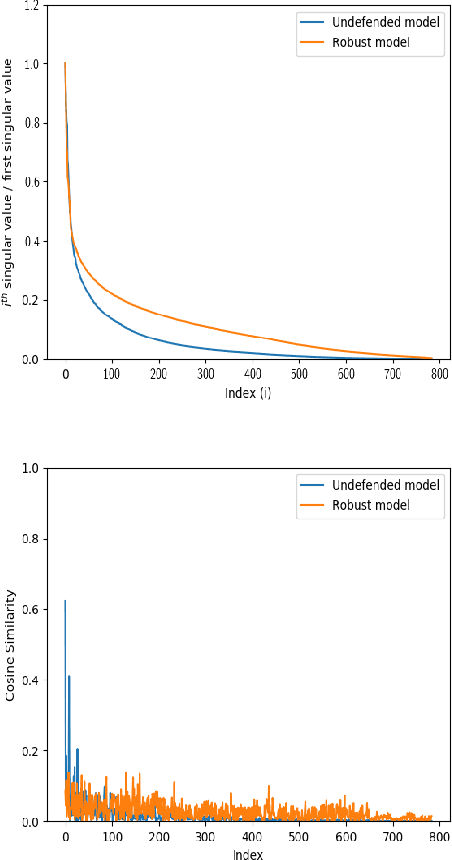

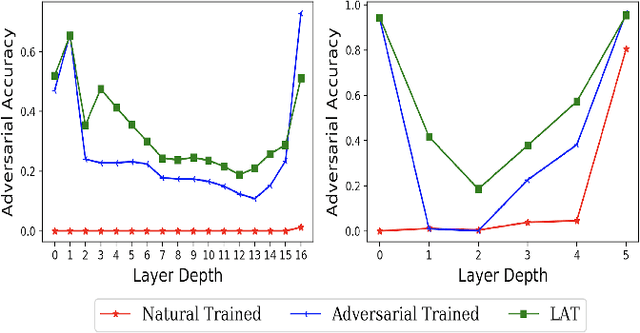

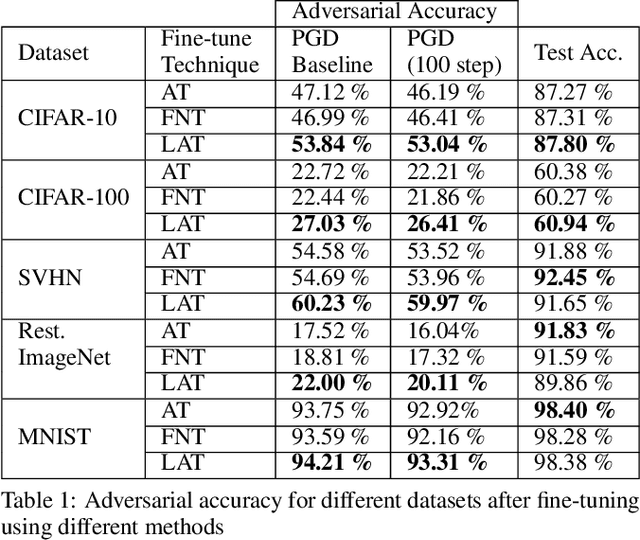

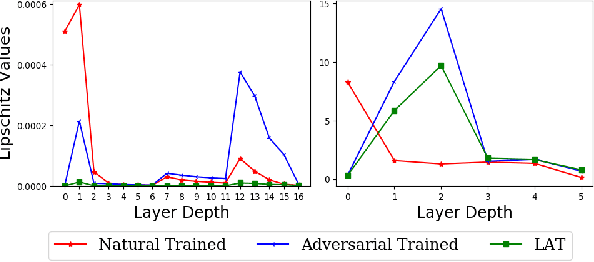

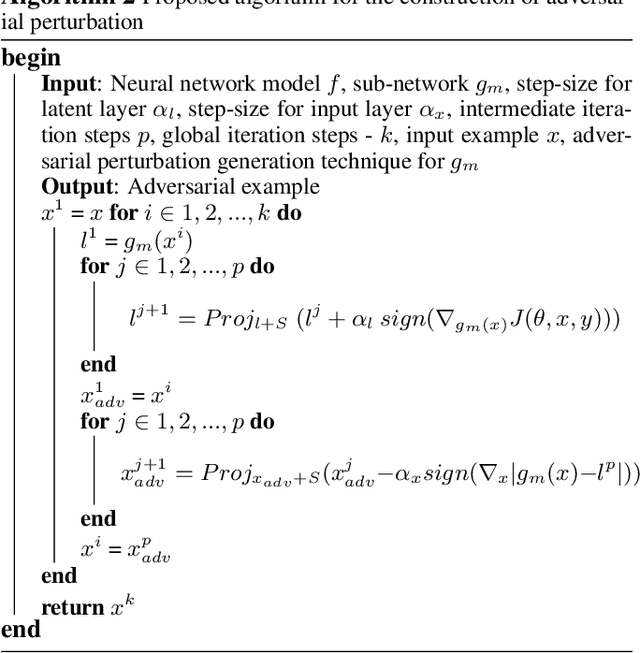

Abstract: Neural networks are vulnerable to adversarial attacks: small, visually imperceptible, crafted noise which, when added to the input, drastically changes the output. The most effective method of defending against these adversarial attacks is adversarial training. We analyze adversarially trained robust models to study their vulnerability to adversarial attacks at the level of the latent layers. Our analysis reveals that, in contrast to the input layer, which is robust to adversarial attack, the latent layers of these robust models are highly susceptible to adversarial perturbations of small magnitude. Leveraging this information, we introduce a new technique, Latent Adversarial Training (LAT), which consists of fine-tuning the adversarially trained models to ensure robustness at the feature layers. We also propose Latent Attack (LA), a novel algorithm for the construction of adversarial examples. LAT results in a minor improvement in test accuracy and leads to state-of-the-art adversarial accuracy against the universal first-order adversarial PGD attack, which is shown for the MNIST, CIFAR-10 and CIFAR-100 datasets.
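The PGD attack referred to above can be illustrated on a toy problem. The sketch below runs L-infinity projected gradient ascent on the logistic loss of a fixed linear classifier; it is not LAT or the Latent Attack, and the model, step size, radius `eps`, and all names are assumptions chosen for illustration.

```python
import math

def logistic_loss(w, b, x, y):
    """Binary cross-entropy of a linear model with label y in {0, 1}."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def input_gradient(w, b, x, y):
    """Gradient of the logistic loss with respect to the input x: (p - y) * w."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def pgd_attack(w, b, x, y, eps=0.5, step=0.1, iters=10):
    """Take signed-gradient ascent steps, projecting back into the
    L-infinity ball of radius eps around the clean input x."""
    x_adv = list(x)
    for _ in range(iters):
        grad = input_gradient(w, b, x_adv, y)
        x_adv = [xa + step * sign(g) for xa, g in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

# Toy usage with a hypothetical 2-feature classifier.
w, b = [2.0, -1.0], 0.0
x_clean, label = [1.0, 1.0], 1
x_adv = pgd_attack(w, b, x_clean, label)
```

Adversarial training, in this picture, would train the model on `x_adv` rather than `x_clean`; the abstract's observation is that the analogous perturbation applied to latent activations remains effective even after such training.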

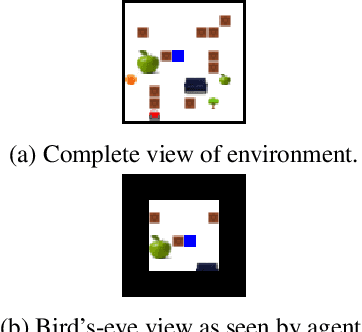

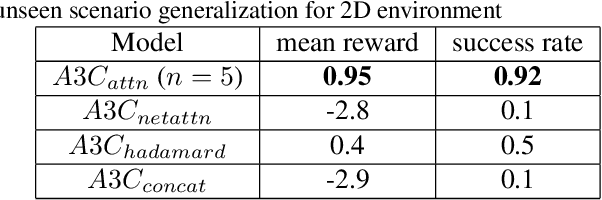

Attention Based Natural Language Grounding by Navigating Virtual Environment

Apr 23, 2018

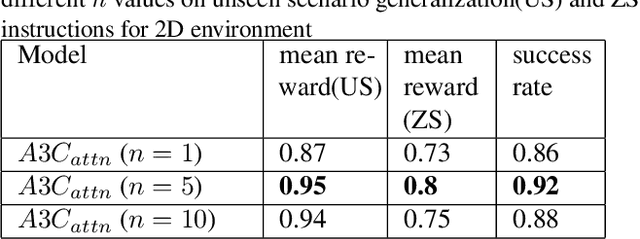

Abstract: In this work, we focus on the problem of grounding language by training an agent to follow a set of natural language instructions and navigate to a target object in an environment. The agent receives visual information through raw pixels and a natural language instruction describing the task to be achieved. Other than these two sources of information, our model has no prior information about either the visual or the textual modality and is end-to-end trainable. We develop an attention mechanism for multi-modal fusion of visual and textual modalities that allows the agent to learn to complete the task and also achieve language grounding. Our experimental results show that our attention mechanism outperforms the existing multi-modal fusion mechanisms proposed for both 2D and 3D environments for solving this task. We show that the learnt textual representations are semantically meaningful, as they follow vector arithmetic, and are also consistent enough to induce translation between instructions in different natural languages. We also show that our model generalizes effectively to unseen scenarios and exhibits zero-shot generalization capabilities in both 2D and 3D environments. The code for our 2D environment, as well as the models that we developed for both 2D and 3D, are available at https://github.com/rl-lang-grounding/rl-lang-ground

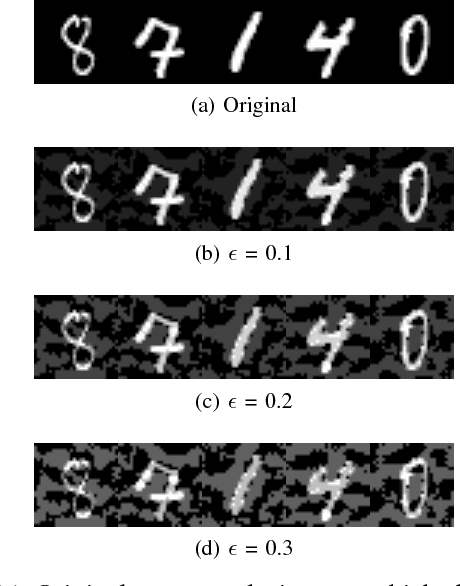

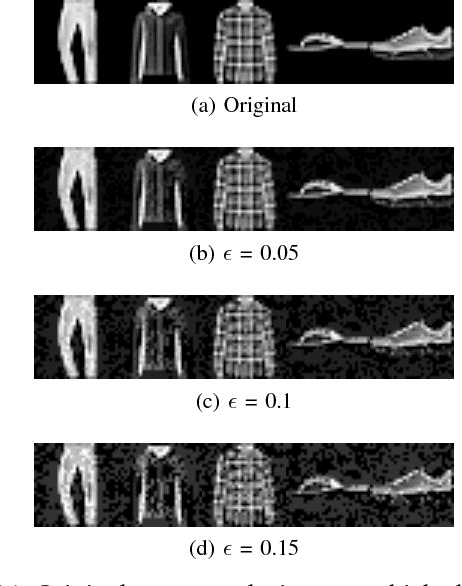

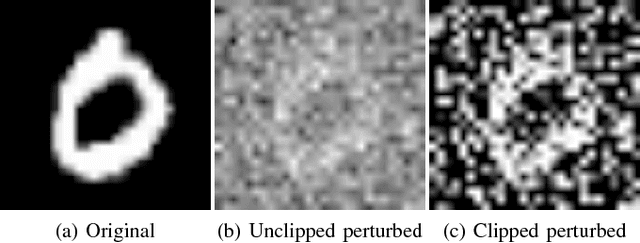

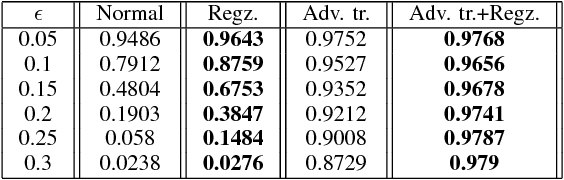

Neural Networks in Adversarial Setting and Ill-Conditioned Weight Space

Jan 03, 2018

Abstract: Recently, neural networks have seen a huge surge in adoption due to their ability to provide high accuracy on various tasks. On the other hand, the existence of adversarial examples has raised suspicions regarding the generalization capabilities of neural networks. In this work, we focus on the weight matrix learnt by neural networks and hypothesize that an ill-conditioned weight matrix is one of the contributing factors in a neural network's susceptibility to adversarial examples. To ensure that the condition number of the learnt weight matrix remains sufficiently low, we suggest using an orthogonal regularizer. We show that this indeed helps in increasing the adversarial accuracy on the MNIST and F-MNIST datasets.
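One common form of orthogonal regularizer penalizes the squared Frobenius norm of `W^T W - I`, which is zero exactly when the columns of `W` are orthonormal (and such a matrix has condition number 1). The sketch below computes that penalty for a small matrix given as a list of rows. Whether the paper uses exactly this form is an assumption, as are the function name and the toy matrices.

```python
def orthogonal_penalty(W):
    """Squared Frobenius norm of (W^T W - I) for a matrix given as a list of rows."""
    rows, cols = len(W), len(W[0])
    penalty = 0.0
    for i in range(cols):
        for j in range(cols):
            # (W^T W)[i][j] is the dot product of columns i and j of W.
            gram_ij = sum(W[k][i] * W[k][j] for k in range(rows))
            target = 1.0 if i == j else 0.0
            penalty += (gram_ij - target) ** 2
    return penalty

# Toy matrices: an orthogonal one and an ill-conditioned one.
identity = [[1.0, 0.0], [0.0, 1.0]]
ill_conditioned = [[1.0, 0.0], [0.0, 0.001]]  # singular values 1 and 0.001
penalty_identity = orthogonal_penalty(identity)
penalty_ill = orthogonal_penalty(ill_conditioned)
```

In training, a term like this would typically be scaled by a coefficient and added to the task loss for each weight matrix, nudging the singular values toward 1.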

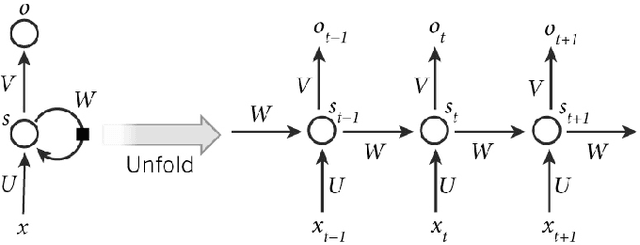

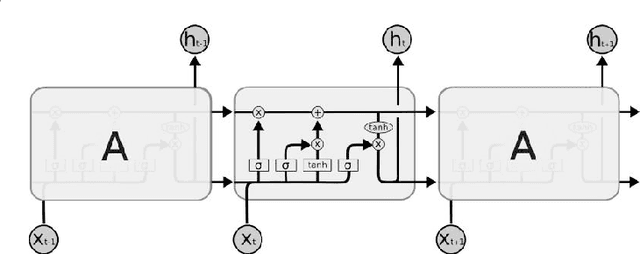

Intelligent Fault Analysis in Electrical Power Grids

Nov 08, 2017

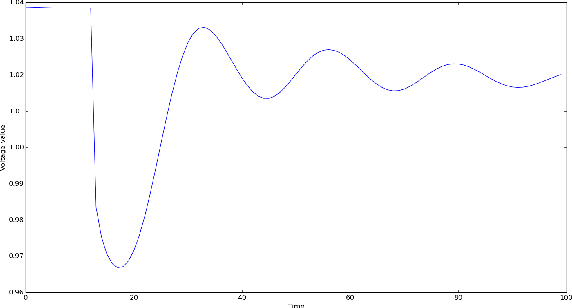

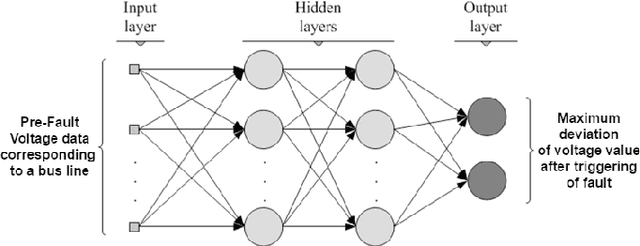

Abstract: Power grids are one of the most important components of infrastructure in today's world. Every nation depends on the security and stability of its own power grid to provide electricity to households and industries. A malfunction of even a small part of a power grid can cause loss of productivity, revenue and, in some cases, even life. Thus, it is imperative to design a system which can detect the health of the power grid and take protective measures accordingly, even before a serious anomaly takes place. To achieve this objective, we have set out to create an artificially intelligent system which can analyze the grid information at any given time and determine the health of the grid through the use of sophisticated formal models and novel machine learning techniques like recurrent neural networks. Our system simulates grid conditions, including stimuli like faults, generator output fluctuations and load fluctuations, using Siemens PSS/E software; various classifiers such as SVM and LSTM are trained on this data and subsequently tested. Our methods achieve very high accuracy on this data. The model can easily be scaled to handle larger and more complex grid architectures.

Deep Fault Analysis and Subset Selection in Solar Power Grids

Nov 08, 2017

Abstract: Non-availability of reliable and sustainable electric power is a major problem in the developing world. Renewable energy sources like solar are not very lucrative at the current stage due to various uncertainties such as weather, storage and land use, among others. There also exist various other issues like mis-commitment of power, absence of intelligent fault analysis, congestion, etc. In this paper, we propose a novel deep learning-based system for predicting faults and selecting power generators optimally so as to reduce costs and ensure higher reliability in solar power systems. The results are highly encouraging and suggest that the approaches proposed in this paper have the potential to be applied successfully in the developing world.
