BirdCLEF

Papers and Code

Transfer Learning with Pseudo Multi-Label Birdcall Classification for DS@GT BirdCLEF 2024

Jul 08, 2024

We present working notes for the DS@GT team on transfer learning with pseudo multi-label birdcall classification for the BirdCLEF 2024 competition, focused on identifying Indian bird species in recorded soundscapes. Our approach utilizes production-grade models such as the Google Bird Vocalization Classifier, BirdNET, and EnCodec to address representation and labeling challenges in the competition. We explore the distributional shift between this year's edition of unlabeled soundscapes representative of the hidden test set and propose a pseudo multi-label classification strategy to leverage the unlabeled data. Our highest post-competition public leaderboard score is 0.63 using BirdNET embeddings with Bird Vocalization pseudo-labels. Our code is available at https://github.com/dsgt-kaggle-clef/birdclef-2024
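As a rough illustration of the pseudo multi-label idea, the sketch below turns a teacher model's per-species scores for one audio chunk into a multi-hot label. It assumes the teacher emits one probability per species and that a fixed cutoff is applied; the function name, the placeholder species names, and the 0.5 threshold are hypothetical and are not taken from the paper or its repository.

```python
def pseudo_multilabel(probabilities, species, threshold=0.5):
    """Map per-species scores for one audio chunk to a multi-hot pseudo-label.

    `threshold` is an illustrative assumption, not a value from the paper.
    """
    if len(probabilities) != len(species):
        raise ValueError("expected one probability per species")
    # A species is marked present (1) when its score reaches the threshold.
    return {name: int(p >= threshold) for name, p in zip(species, probabilities)}


# Hypothetical teacher scores for a single chunk with three candidate species.
labels = pseudo_multilabel([0.9, 0.2, 0.6], ["species_a", "species_b", "species_c"])
print(labels)
```

Because several species can pass the threshold at once, each chunk can carry more than one positive label, which is what distinguishes this from assigning a single pseudo-label per chunk.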

Quantum Embedding with Transformer for High-dimensional Data

Feb 20, 2024

Quantum embedding with transformers is a novel and promising architecture for quantum machine learning, intended to deliver strong capability on near-term devices or simulators. The research incorporates a vision transformer (ViT) to significantly advance quantum embedding ability, improving the results of a single-qubit classifier by around 3 percent in median F1 score on BirdCLEF-2021, a challenging high-dimensional dataset. The study presents and analyzes empirical evidence that the transformer-based architecture is a versatile and practical approach to modern quantum machine learning problems.

Transfer Learning with Semi-Supervised Dataset Annotation for Birdcall Classification

Jun 29, 2023

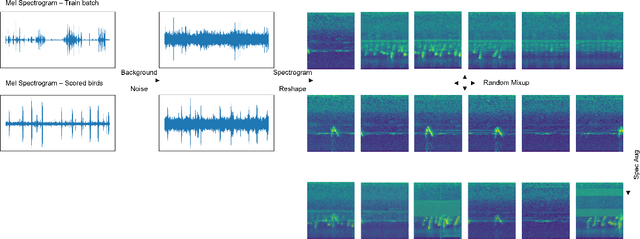

We present working notes on transfer learning with semi-supervised dataset annotation for the BirdCLEF 2023 competition, focused on identifying African bird species in recorded soundscapes. Our approach utilizes existing off-the-shelf models, BirdNET and MixIT, to address representation and labeling challenges in the competition. We explore the embedding space learned by BirdNET and propose a process to derive an annotated dataset for supervised learning. Our experiments involve various models and feature engineering approaches to maximize performance on the competition leaderboard. The results demonstrate the effectiveness of our approach in classifying bird species and highlight the potential of transfer learning and semi-supervised dataset annotation in similar tasks.

The Birds Need Attention Too: Analysing usage of Self Attention in identifying bird calls in soundscapes

Nov 14, 2022

Birds are vital parts of ecosystems across the world and are an excellent measure of the quality of life on earth. Many bird species are endangered, while others are already extinct. Ecological efforts to understand and monitor bird populations are important for conserving their habitats and species, but they mostly rely on manual methods in rough terrain. Recent advances in Machine Learning and Deep Learning have made automatic bird recognition in diverse environments possible. Until now, birdcall recognition has been performed using convolutional neural networks. In this work, we try to understand how self-attention can aid in this endeavor. To that end, we build a pre-trained Attention-based Spectrogram Transformer baseline for BirdCLEF 2022 and compare its results against a pre-trained Convolution-based baseline. Our results show that the transformer models outperformed the convolutional model, and we further validate our results by building baselines and analyzing the results for the previous year's BirdCLEF 2021 challenge. Source code is available at https://github.com/ck090/BirdCLEF-22

Few-shot Long-Tailed Bird Audio Recognition

Jul 04, 2022

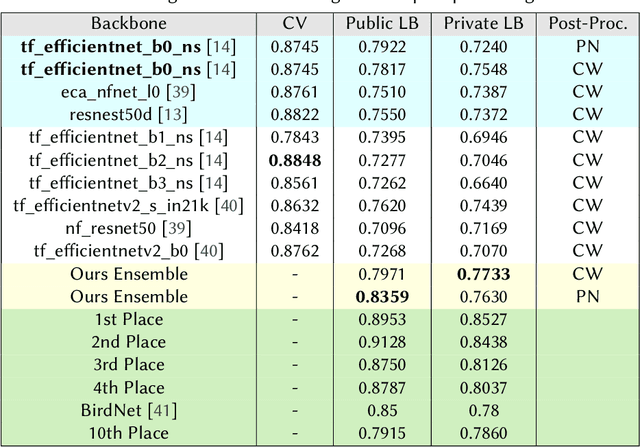

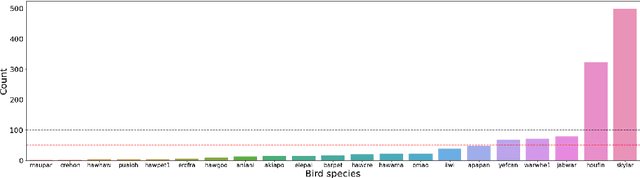

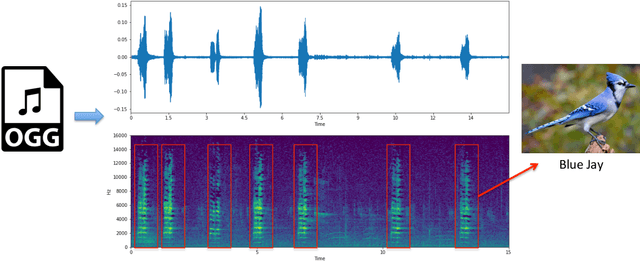

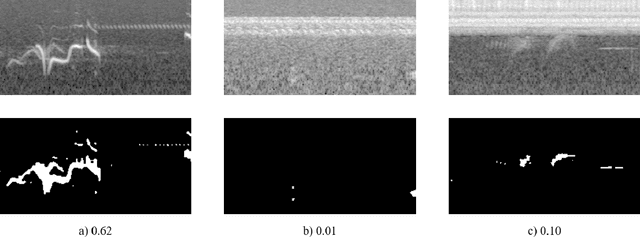

It is easier to hear birds than see them. However, they still play an essential role in nature and are excellent indicators of deteriorating environmental quality and pollution. Recent advances in Deep Neural Networks allow us to process audio data to detect and classify birds. This technology can assist researchers in monitoring bird populations and biodiversity. We propose a sound detection and classification pipeline to analyze complex soundscape recordings and identify birdcalls in the background. Our method learns from weak labels and limited data and recognizes bird species acoustically. Our solution achieved 18th place out of 807 teams at the BirdCLEF 2022 Challenge hosted on Kaggle.

Motif Mining and Unsupervised Representation Learning for BirdCLEF 2022

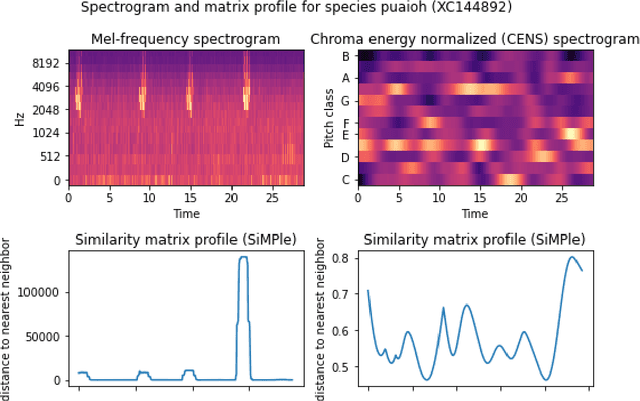

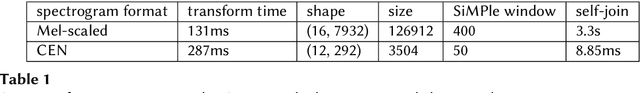

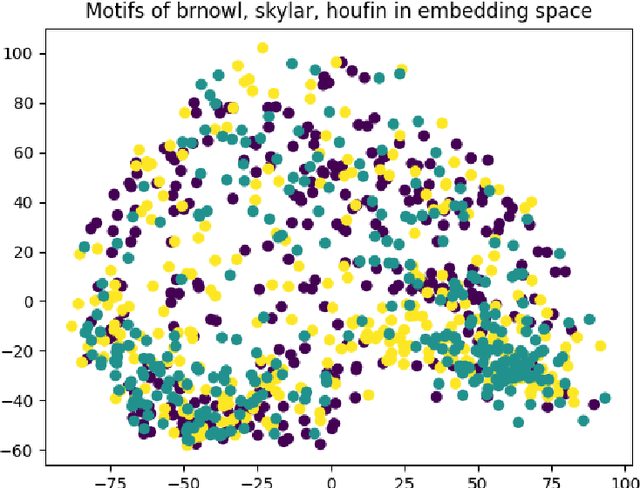

Jun 08, 2022

We build a classification model for the BirdCLEF 2022 challenge using unsupervised methods. We learn an unsupervised representation of the training dataset using a triplet loss on spectrogram representations of audio motifs. Our best model achieves a score of 0.48 on the public leaderboard.
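The abstract does not give the exact loss formulation, so the following is only a minimal sketch of the standard triplet margin loss, assuming Euclidean distance between embedding vectors. The function names, the margin of 1.0, and the toy two-dimensional embeddings are illustrative assumptions rather than the authors' settings.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin).

    The loss is zero once the negative is at least `margin` farther
    from the anchor than the positive is.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy embeddings (hypothetical): the positive is close to the anchor, the negative is far.
well_separated = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
# Swapping positive and negative violates the margin and is penalized.
violated = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])
print(well_separated, violated)
```

In a motif-based setup, the anchor and positive would plausibly be embeddings of spectrogram motifs that should be similar, and the negative an embedding of a dissimilar motif; how those triplets are chosen is not stated in the abstract.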

Weakly-Supervised Classification and Detection of Bird Sounds in the Wild. A BirdCLEF 2021 Solution

Jul 10, 2021

It is easier to hear birds than see them. However, they still play an essential role in nature and are excellent indicators of deteriorating environmental quality and pollution. Recent advances in Machine Learning and Convolutional Neural Networks allow us to detect and classify bird sounds. By doing this, we can assist researchers in monitoring the status and trends of bird populations and biodiversity in ecosystems. We propose a sound detection and classification pipeline for analyzing complex soundscape recordings and identifying birdcalls in the background. Our pipeline learns from weak labels, classifies fine-grained bird vocalizations in the wild, and is robust against background sounds (e.g., airplanes and rain). Our solution achieved 10th place out of 816 teams at the BirdCLEF 2021 Challenge hosted on Kaggle.

Recognizing Birds from Sound - The 2018 BirdCLEF Baseline System

Apr 19, 2018

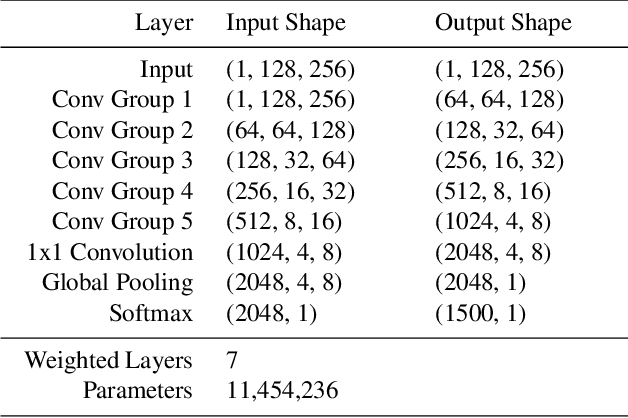

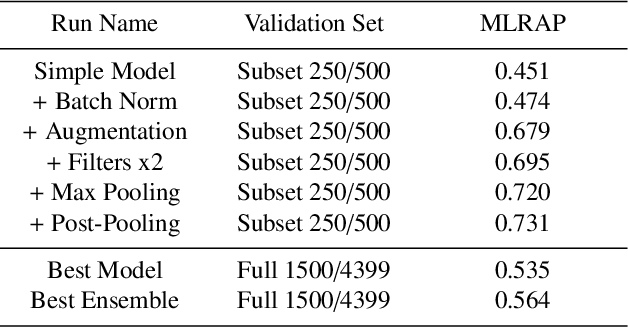

Reliable identification of bird species in recorded audio files would be a transformative tool for researchers, conservation biologists, and birders. In recent years, artificial neural networks have greatly improved the detection quality of machine learning systems for bird species recognition. We present a baseline system using convolutional neural networks. We publish our code base as a reference for participants in the 2018 LifeCLEF bird identification task and discuss our experiments and potential improvements.
