The Case for Claim Difficulty Assessment in Automatic Fact Checking

Sep 20, 2021

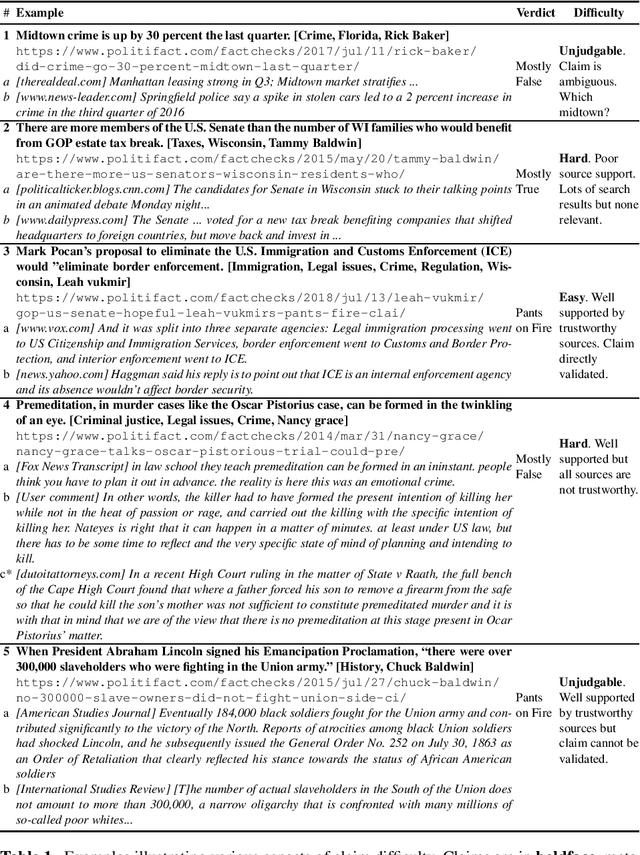

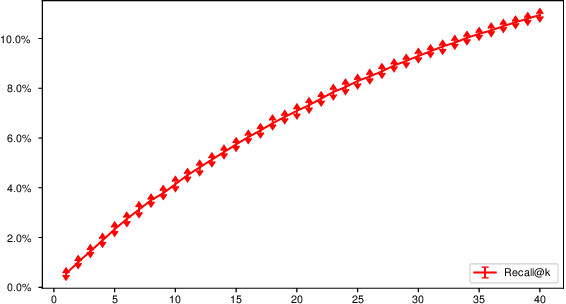

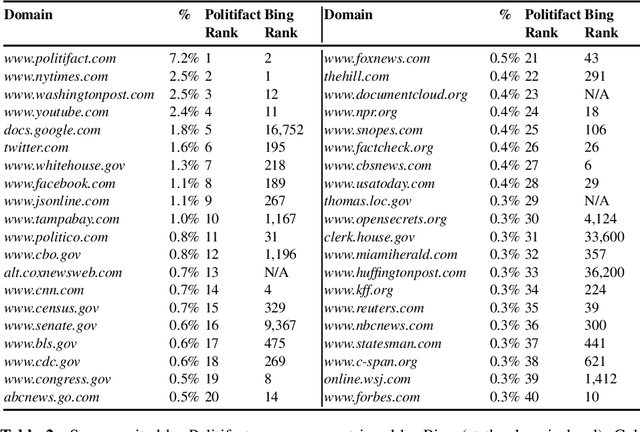

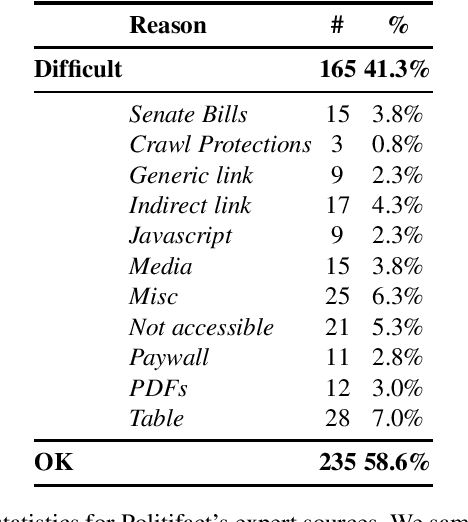

Fact-checking is the process (human, automated, or hybrid) by which claims (i.e., purported facts) are evaluated for veracity. In this article, we raise an issue that has received little attention in prior work: some claims are far more difficult to fact-check than others. We discuss the implications of this for both practical fact-checking and research on automated fact-checking, including task formulation and dataset design. We report a manual analysis undertaken to explore the factors underlying varying claim difficulty, and we categorize several distinct types of difficulty. We argue that prediction of claim difficulty is a missing component of today's automated fact-checking architectures, and we describe how this difficulty prediction task might be split into a set of distinct subtasks.
