PixelNav: Towards Model-based Vision-Only Navigation with Topological Graphs

Paper and Code

Jul 28, 2025

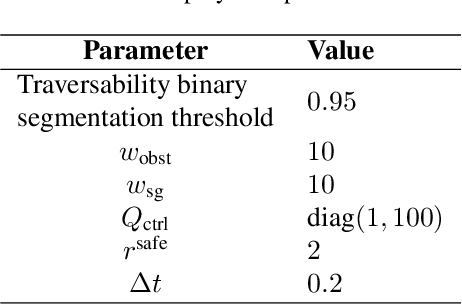

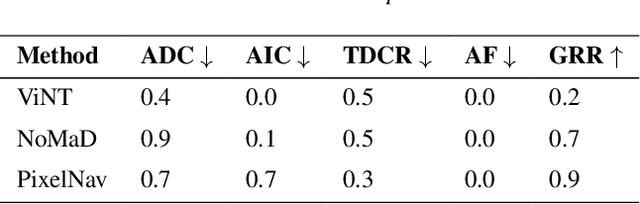

This work proposes a novel hybrid approach for vision-only navigation of mobile robots, which combines advances of both deep learning approaches and classical model-based planning algorithms. Today, purely data-driven end-to-end models are dominant solutions to this problem. Despite advantages such as flexibility and adaptability, the requirement of a large amount of training data and limited interpretability are the main bottlenecks for their practical applications. To address these limitations, we propose a hierarchical system that utilizes recent advances in model predictive control, traversability estimation, visual place recognition, and pose estimation, employing topological graphs as a representation of the target environment. Using such a combination, we provide a scalable system with a higher level of interpretability compared to end-to-end approaches. Extensive real-world experiments show the efficiency of the proposed method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge