Learning-Based Phase Shift Optimization of Liquid Crystal RIS in Dynamic mmWave Networks

Dec 17, 2025

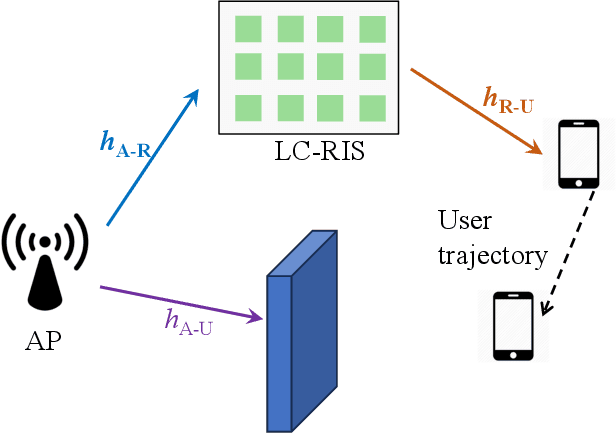

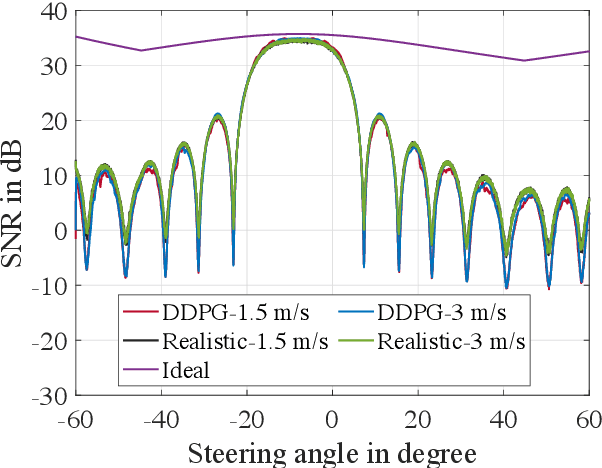

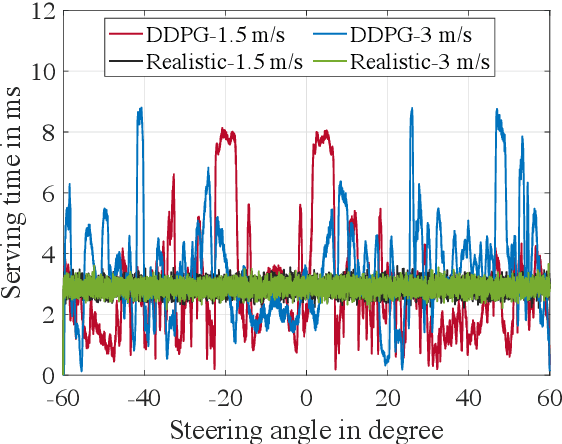

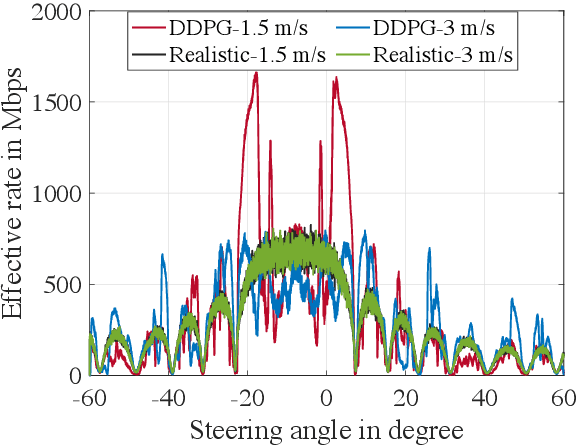

To enhance coverage and signal quality at millimeter-wave (mmWave) frequencies, reconfigurable intelligent surfaces (RISs) have emerged as a promising means of manipulating the wireless environment. Traditional semiconductor-based RISs face scalability issues due to high power consumption. In contrast, liquid crystal-based RISs (LC-RISs) offer energy-efficient and cost-effective operation even for large arrays. However, this promise has a caveat: LC-RISs suffer from long reconfiguration times, on the order of tens of milliseconds, which limits their applicability in dynamic scenarios. Prior works have addressed this limitation through hardware design or have considered only static scenarios, and little attention has been paid to optimization solutions for dynamic settings. This paper fills that gap by proposing a reinforcement learning-based optimization framework that dynamically controls the phase shifts of an LC-RIS to maximize the data rate of a moving user. Specifically, we propose a Deep Deterministic Policy Gradient (DDPG) algorithm that adapts the LC-RIS phase shifts without requiring perfect channel state information and balances the tradeoff between signal-to-noise ratio (SNR) and reconfiguration time. We validate our approach through high-fidelity ray tracing simulations that leverage measurement data from an LC-RIS prototype. Our results demonstrate the potential of our solution to bring adaptive control to dynamic LC-RIS-assisted mmWave systems.
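The tradeoff between SNR and reconfiguration time mentioned in the abstract can be illustrated with a small sketch. This is not the reward function used in the paper, which the abstract does not specify. It assumes a common simplification in which no data is sent while the surface is reconfiguring, so the achievable spectral efficiency is scaled by the fraction of the time slot that remains. The function name `effective_rate`, the 100 ms slot length, and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def effective_rate(snr_linear, reconfig_time_ms, slot_ms=100.0):
    """Spectral efficiency (bit/s/Hz) scaled by the usable fraction of a slot.

    Assumption (not from the paper): transmission is idle during the
    LC-RIS reconfiguration, so only (1 - reconfig_time / slot) of the
    slot carries data at the post-reconfiguration SNR.
    """
    if not 0.0 <= reconfig_time_ms <= slot_ms:
        raise ValueError("reconfig_time_ms must lie within [0, slot_ms]")
    if snr_linear < 0.0:
        raise ValueError("snr_linear must be non-negative")
    useful_fraction = 1.0 - reconfig_time_ms / slot_ms
    return useful_fraction * math.log2(1.0 + snr_linear)


# Hypothetical comparison: a full reconfiguration reaches a higher SNR but
# takes 50 ms, while a smaller phase update reaches a lower SNR in 10 ms.
full_update = effective_rate(snr_linear=15.0, reconfig_time_ms=50.0)
small_update = effective_rate(snr_linear=7.0, reconfig_time_ms=10.0)

print(f"full update:  {full_update:.2f} bit/s/Hz")
print(f"small update: {small_update:.2f} bit/s/Hz")
```

Under these illustrative numbers, the smaller update yields the higher effective rate despite its lower SNR, which is the kind of tradeoff a learning agent would have to weigh when choosing phase shifts for a moving user.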
