Zilai Wang

STACodec: Semantic Token Assignment for Balancing Acoustic Fidelity and Semantic Information in Audio Codecs

Feb 05, 2026

Abstract: Neural audio codecs are widely used for audio compression and can be integrated into token-based language models. Traditional codecs preserve acoustic details well but lack semantic information. Recent hybrid codecs attempt to incorporate semantic information through distillation, but this often degrades reconstruction performance, making it difficult to achieve both. To address this limitation, we introduce STACodec, a unified codec that integrates semantic information from self-supervised learning (SSL) models into the first layer of residual vector quantization (RVQ-1) via semantic token assignment (STA). To further eliminate reliance on SSL-based semantic tokenizers and improve efficiency during inference, we propose a semantic pre-distillation (SPD) module, which predicts semantic tokens directly for assignment to the first RVQ layer during inference. Experimental results show that STACodec outperforms existing hybrid codecs in both audio reconstruction and downstream semantic tasks, demonstrating a better balance between acoustic fidelity and semantic capability.
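As a rough illustration of the idea of assigning a semantic token to the first RVQ layer, the sketch below shows a generic residual vector quantizer in which the first-layer index can be supplied externally instead of being found by nearest-neighbour search. This is not the STACodec implementation: the function names (`nearest`, `rvq_encode`, `rvq_decode`), the toy codebooks, and the squared-L2 search are all assumptions made for illustration.

```python
def nearest(codebook, vec):
    """Return the index of the codebook entry closest to vec (squared L2)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(vec, codebooks, semantic_token=None):
    """Quantize vec layer by layer, each layer coding the previous residual.

    If semantic_token is given, it is used as the first-layer index
    (hypothetical stand-in for semantic token assignment); the remaining
    layers then quantize whatever residual is left.
    """
    residual = list(vec)
    indices = []
    for layer, codebook in enumerate(codebooks):
        if layer == 0 and semantic_token is not None:
            idx = semantic_token  # assigned, not searched
        else:
            idx = nearest(codebook, residual)
        indices.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return indices, residual


def rvq_decode(indices, codebooks):
    """Reconstruct a vector by summing the selected entry of every layer."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(indices, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Toy two-layer codebooks (made-up values).
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.5, -0.5]],
]
searched, _ = rvq_encode([1.5, 0.5], codebooks)
assigned, leftover = rvq_encode([1.5, 0.5], codebooks, semantic_token=0)
```

In this toy setup, forcing the first-layer index leaves a larger residual for the later layers to absorb, which is one way to picture the tension between semantic content and reconstruction quality that the abstract describes.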

Mind the Shift: Using Delta SSL Embeddings to Enhance Child ASR

Jan 28, 2026

Abstract: Self-supervised learning (SSL) models have achieved impressive results across many speech tasks, yet child automatic speech recognition (ASR) remains challenging due to limited data and pretraining domain mismatch. Fine-tuning SSL models on child speech induces shifts in the representation space. We hypothesize that delta SSL embeddings, defined as the differences between embeddings from a finetuned model and those from its pretrained counterpart, encode task-specific information that complements finetuned features from another SSL model. We evaluate multiple fusion strategies on the MyST children's corpus using different models. Results show that delta embedding fusion with WavLM yields up to a 10 percent relative WER reduction for HuBERT and a 4.4 percent reduction for W2V2, compared to finetuned embedding fusion. Notably, fusing WavLM with delta W2V2 embeddings achieves a WER of 9.64, setting a new state of the art among SSL models on the MyST corpus. These findings demonstrate the effectiveness of delta embeddings and highlight feature fusion as a promising direction for advancing child ASR.
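The definition of delta embeddings given in the abstract (finetuned minus pretrained, frame by frame) can be sketched in a few lines. The abstract does not say which fusion strategies were tested, so the concatenation shown here is only one plausible choice, and the function names and toy values are hypothetical.

```python
def delta_embeddings(finetuned, pretrained):
    """Frame-wise difference between finetuned and pretrained embeddings.

    Both inputs are lists of frames; each frame is a list of floats of the
    same dimension, produced by the same architecture before and after
    fine-tuning.
    """
    return [
        [f - p for f, p in zip(ft_frame, pt_frame)]
        for ft_frame, pt_frame in zip(finetuned, pretrained)
    ]


def concat_fusion(features_a, features_b):
    """Fuse two frame-aligned feature sequences by concatenating per frame.

    Concatenation is an assumption for illustration; other fusion schemes
    (weighted sums, attention-based fusion, etc.) are equally possible.
    """
    return [a + b for a, b in zip(features_a, features_b)]


# Toy example: two frames, two dimensions per model (made-up values).
model_b_pretrained = [[1.0, 2.0], [3.0, 4.0]]
model_b_finetuned = [[1.5, 2.0], [2.0, 6.0]]
model_a_finetuned = [[0.0, 1.0], [1.0, 0.0]]

delta_b = delta_embeddings(model_b_finetuned, model_b_pretrained)
fused = concat_fusion(model_a_finetuned, delta_b)
```

Here `fused` carries the finetuned features of one model alongside the shift that fine-tuning induced in the other, which is the complementarity the abstract hypothesizes.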

Fractional Differential Equation Physics-Informed Neural Network and Its Application in Battery State Estimation

Dec 13, 2025

Abstract: Accurate estimation of the State of Charge (SOC) is critical for ensuring the safety, reliability, and performance optimization of lithium-ion battery systems. Conventional data-driven neural network models often struggle to fully characterize the inherent complex nonlinearities and memory-dependent dynamics of electrochemical processes, significantly limiting their predictive accuracy and physical interpretability under dynamic operating conditions. To address this challenge, this study proposes a novel neural architecture termed the Fractional Differential Equation Physics-Informed Neural Network (FDIFF-PINN), which integrates fractional calculus with deep learning. The main contributions of this paper include: (1) Based on a fractional-order equivalent circuit model, a discretized fractional-order partial differential equation is constructed. (2) Comparative experiments were conducted using a dynamic charge/discharge dataset of Panasonic 18650PF batteries under multi-temperature conditions (from -10$^{\circ}$C to 20$^{\circ}$C).
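The abstract does not state how the fractional-order equation is discretized. As general background on why fractional derivatives capture memory-dependent dynamics, the sketch below uses the standard Grünwald-Letnikov approximation, in which the derivative at the latest sample is a weighted sum over the entire signal history. This is a swapped-in textbook scheme, not the paper's method, and the function names are hypothetical.

```python
def gl_weights(alpha, n):
    """Grünwald-Letnikov weights w_0..w_n for fractional order alpha.

    Uses the recurrence w_0 = 1, w_k = w_{k-1} * (1 - (alpha + 1) / k).
    """
    weights = [1.0]
    for k in range(1, n + 1):
        weights.append(weights[-1] * (1.0 - (alpha + 1.0) / k))
    return weights


def gl_derivative(samples, alpha, h):
    """Approximate the order-alpha derivative at the last sample.

    samples: uniformly spaced signal values, oldest first.
    h: sampling step. Every past sample contributes, which is the
    'memory' property of fractional operators.
    """
    n = len(samples) - 1
    weights = gl_weights(alpha, n)
    total = sum(w * samples[n - k] for k, w in enumerate(weights))
    return total / (h ** alpha)


# Sanity checks on f(t) = t sampled with step 0.5 (toy data).
ramp = [0.0, 0.5, 1.0]
first_order = gl_derivative(ramp, alpha=1.0, h=0.5)   # backward difference
zeroth_order = gl_derivative(ramp, alpha=0.0, h=0.5)  # identity
```

For integer order 1 the weights collapse to a backward difference, and for order 0 they return the signal itself; non-integer orders fall between these cases, with slowly decaying weights over the whole history.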

CHSER: A Dataset and Case Study on Generative Speech Error Correction for Child ASR

May 24, 2025

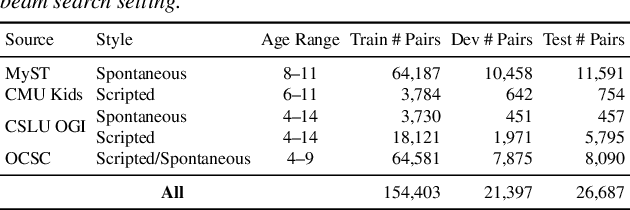

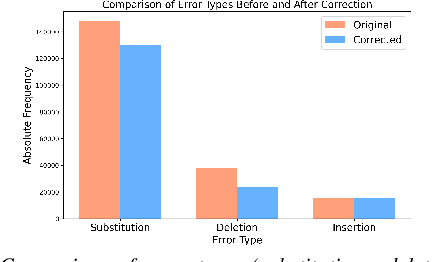

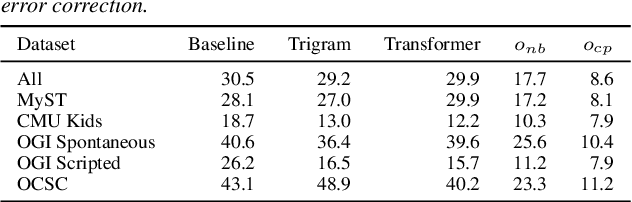

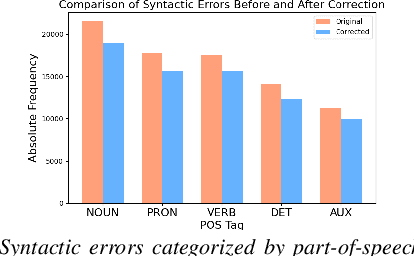

Abstract: Automatic Speech Recognition (ASR) systems struggle with child speech due to its distinct acoustic and linguistic variability and the limited availability of child speech datasets, leading to high transcription error rates. While ASR error correction (AEC) methods have improved adult speech transcription, their effectiveness on child speech remains largely unexplored. To address this, we introduce CHSER, a Generative Speech Error Correction (GenSEC) dataset for child speech, comprising 200K hypothesis-transcription pairs spanning diverse age groups and speaking styles. Results demonstrate that fine-tuning on the CHSER dataset achieves up to a 28.5% relative WER reduction in a zero-shot setting and a 13.3% reduction when applied to fine-tuned ASR systems. Additionally, our error analysis reveals that while GenSEC improves substitution and deletion errors, it struggles with insertions and child-specific disfluencies. These findings highlight the potential of GenSEC for improving child ASR.
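For readers unfamiliar with the metric, a relative WER reduction is the drop in WER expressed as a fraction of the baseline WER, not an absolute difference in percentage points. The helper below is a minimal sketch; the function name and the example WER values are made up and are not results from the paper.

```python
def relative_wer_reduction(baseline_wer, new_wer):
    """Return the relative WER reduction as a percentage of the baseline."""
    if baseline_wer <= 0:
        raise ValueError("baseline WER must be positive")
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


# Hypothetical numbers: a baseline WER of 10.0 improved to 8.0.
reduction = relative_wer_reduction(10.0, 8.0)
```

With these made-up values the absolute gain is 2.0 WER points, while the relative reduction is 20%.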

Selective Attention Merging for low resource tasks: A case study of Child ASR

Jan 14, 2025

Abstract: While Speech Foundation Models (SFMs) excel in various speech tasks, their performance for low-resource tasks such as child Automatic Speech Recognition (ASR) is hampered by limited pretraining data. To address this, we explore different model merging techniques to leverage knowledge from models trained on larger, more diverse speech corpora. This paper also introduces Selective Attention (SA) Merge, a novel method that selectively merges task vectors from attention matrices to enhance SFM performance on low-resource tasks. Experiments on the MyST database show significant reductions in relative word error rate of up to 14%, outperforming existing model merging and data augmentation techniques. By combining data augmentation techniques with SA Merge, we achieve a new state-of-the-art WER of 8.69 on the MyST database for the Whisper-small model, highlighting the potential of SA Merge for improving low-resource ASR.
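To make the notion of merging task vectors from attention matrices more concrete, the sketch below computes task vectors (finetuned weights minus base weights) for two finetuned models and blends them only for parameters whose names mark them as attention weights. The abstract does not give the actual merging rule, so the interpolation with `lam`, the `"attn"` name filter, and all function and parameter names are assumptions for illustration, not the SA Merge algorithm.

```python
def task_vector(finetuned, base):
    """Per-parameter difference between finetuned and base weights."""
    return {
        name: [f - b for f, b in zip(finetuned[name], base[name])]
        for name in base
    }


def merge_attention_only(base, target_ft, donor_ft, lam=0.5,
                         is_attention=lambda name: "attn" in name):
    """Blend task vectors for attention parameters; keep the rest as-is.

    Attention parameters become base + lam * tv_target + (1 - lam) * tv_donor.
    All other parameters are copied from the target finetuned model.
    The blending rule and the name filter are hypothetical.
    """
    tv_target = task_vector(target_ft, base)
    tv_donor = task_vector(donor_ft, base)
    merged = {}
    for name in base:
        if is_attention(name):
            merged[name] = [
                b + lam * t + (1.0 - lam) * d
                for b, t, d in zip(base[name], tv_target[name], tv_donor[name])
            ]
        else:
            merged[name] = list(target_ft[name])
    return merged


# Toy flattened weights (made-up values).
base = {"attn.q": [0.0, 0.0], "mlp.w": [1.0]}
child_ft = {"attn.q": [2.0, 0.0], "mlp.w": [3.0]}
adult_ft = {"attn.q": [0.0, 4.0], "mlp.w": [5.0]}

merged = merge_attention_only(base, child_ft, adult_ft, lam=0.5)
```

In this toy case only `attn.q` mixes information from both finetuned models, while `mlp.w` stays identical to the target model's weights.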
