Yvan Petillot

Heriot-Watt University

RadarSLAM: Radar based Large-Scale SLAM in All Weathers

May 05, 2020

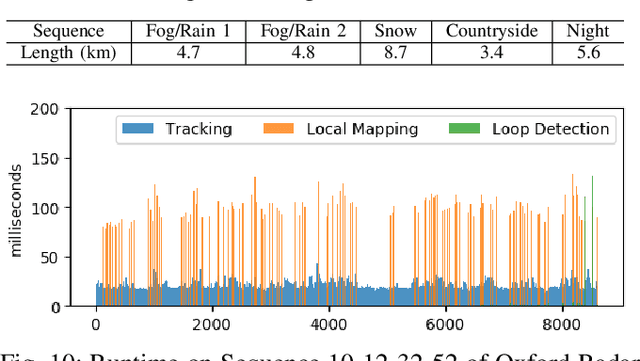

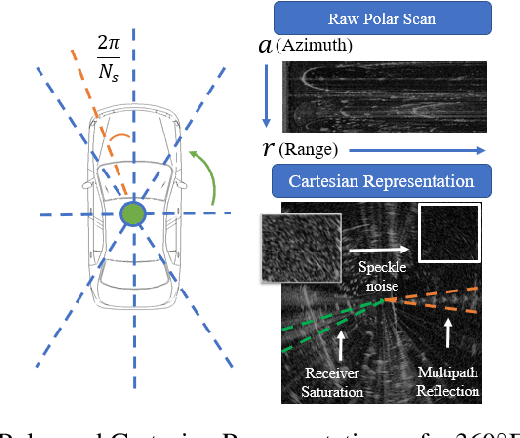

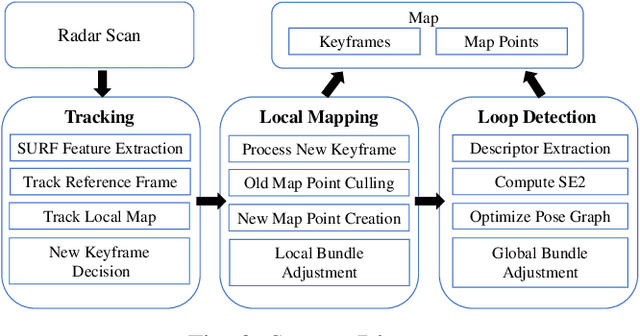

Abstract: Numerous Simultaneous Localization and Mapping (SLAM) algorithms using different sensor modalities have been presented in the last decade. However, robust SLAM in extreme weather conditions is still an open research problem. In this paper, RadarSLAM, a full radar-based graph SLAM system, is proposed for reliable localization and mapping in large-scale environments. It is composed of pose tracking, local mapping, loop closure detection and pose graph optimization, enhanced by novel feature matching and probabilistic point cloud generation on radar images. Extensive experiments are conducted on a public radar dataset and several self-collected radar sequences, demonstrating state-of-the-art reliability and localization accuracy in various adverse conditions, such as dark night, dense fog and heavy snowfall.
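Pose graph optimization is one of the components listed above. As a rough illustration only, and not RadarSLAM's implementation, the sketch below solves a toy one-dimensional pose graph by linear least squares. Each edge `(i, j, z, w)` is an assumed relative measurement "x_j - x_i = z" with weight w, and a strong prior on the first pose fixes the global offset. Real systems optimise full vehicle poses with nonlinear solvers; the function names, weights and measurements here are hypothetical and chosen for readability.

```python
def solve_linear(a, b):
    """Solve a dense linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def optimize_pose_graph(num_poses, edges, prior_weight=1e6):
    """Hypothetical helper: least-squares 1-D pose graph.

    edges: (i, j, z, w) meaning "x_j - x_i was measured as z with weight w".
    The prior on x_0 fixes the gauge; otherwise the graph is only defined
    up to a global translation.
    """
    h = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, w in edges:
        # Residual r = (x_j - x_i) - z has Jacobian -1 at i and +1 at j,
        # so each edge adds w * J^T J to h and w * J^T z to b.
        h[i][i] += w
        h[j][j] += w
        h[i][j] -= w
        h[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    h[0][0] += prior_weight  # prior: x_0 = 0
    return solve_linear(h, b)


# Toy example: odometry reports three steps of 1.0 each, while a
# higher-weight loop-closure-style constraint says x_3 - x_0 = 2.7.
edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0), (0, 3, 2.7, 10.0)]
poses = optimize_pose_graph(4, edges)
print(poses)  # x_3 comes out at 84/31, roughly 2.7097
```

With the loop constraint weighted ten times more than each odometry edge, the solver spreads the 0.3 discrepancy evenly over the three steps (each becomes 28/31, about 0.903) instead of leaving all of the drift at the last pose.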

Learning Generalisable Coupling Terms for Obstacle Avoidance via Low-dimensional Geometric Descriptors

Jun 24, 2019

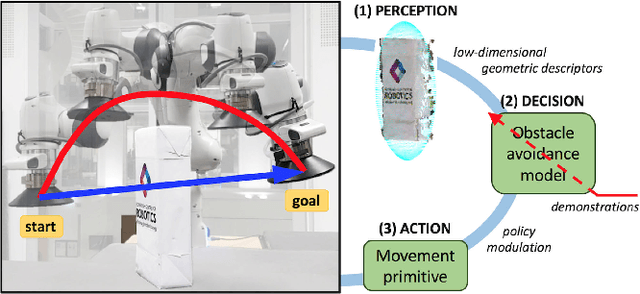

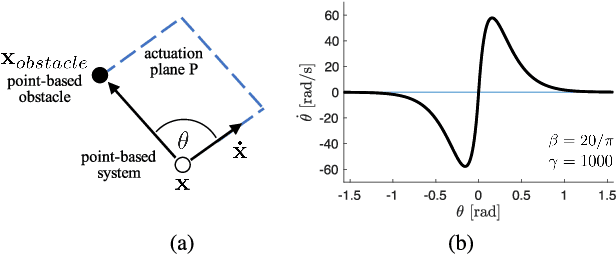

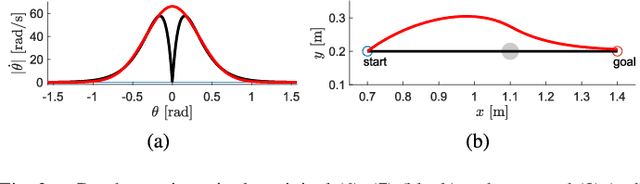

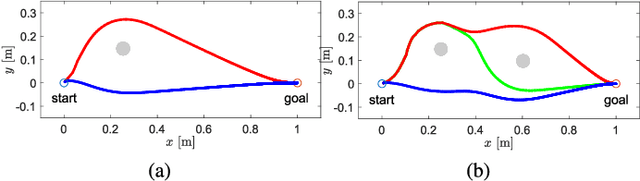

Abstract: Unforeseen events are frequent in the real-world environments where robots are expected to assist, raising the need for fast replanning of the policy being executed to guarantee the safety of the system and its environment. Inspired by human behavioural studies of obstacle avoidance and route selection, this paper presents a hierarchical framework that generates reactive yet bounded obstacle avoidance behaviours through a multi-layered analysis. The framework leverages the strengths of learning techniques and the versatility of dynamic movement primitives to efficiently unify the perception, decision and action levels via low-dimensional geometric descriptors of the environment. Experimental evaluation on synthetic environments and a real anthropomorphic manipulator shows that the proposed approach is robust and generalises regardless of the obstacle avoidance scenario, making it suitable for robotic systems in real-world environments.
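The framework above builds on dynamic movement primitives (DMPs) modulated by coupling terms. As a minimal sketch, assuming the commonly used discrete DMP formulation (a critically damped spring-damper pulled towards the goal, plus a forcing term that fades with a decaying phase variable), the rollout below exposes a generic additive `coupling` hook where an obstacle-avoidance term would enter. The function name, gains, basis-width heuristic and the example coupling are illustrative assumptions; the paper's learned coupling terms and geometric descriptors are not reproduced here.

```python
import math


def rollout_dmp(y0, goal, weights=(), tau=1.0, dt=0.001, duration=2.0,
                alpha_z=25.0, beta_z=6.25, alpha_x=3.0, coupling=None):
    """Euler-integrate a 1-D discrete DMP and return the sampled positions.

    coupling: optional callable (t, y, dy) -> float added to the
    transformation system (hypothetical hook for e.g. obstacle avoidance).
    """
    n = len(weights)
    # Gaussian basis centres spread along the phase variable x in (0, 1].
    centres = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    width = float(n * n) if n else 1.0  # simple width heuristic (an assumption)
    y, z, x, t = y0, 0.0, 1.0, 0.0
    path = [y]
    while t < duration:
        psi = [math.exp(-width * (x - c) ** 2) for c in centres]
        denom = sum(psi) or 1.0
        # Forcing term shapes the motion; scaling by x makes it vanish over time.
        f = sum(p * w for p, w in zip(psi, weights)) / denom * x * (goal - y0)
        c_t = coupling(t, y, z / tau) if coupling is not None else 0.0
        dz = (alpha_z * (beta_z * (goal - y) - z) + f + c_t) / tau
        dy = z / tau
        dx = -alpha_x * x / tau  # canonical system: phase decays towards 0
        z += dz * dt
        y += dy * dt
        x += dx * dt
        t += dt
        path.append(y)
    return path


plain = rollout_dmp(0.0, 1.0)
# Hypothetical coupling: a push that is only active during the first 0.3 s.
pushed = rollout_dmp(0.0, 1.0, coupling=lambda t, y, dy: 50.0 if t < 0.3 else 0.0)
print(plain[-1], pushed[-1])
```

Because the forcing term is scaled by the decaying phase and the example coupling switches off after 0.3 s, both trajectories still settle at the goal; the coupling only reshapes the transient.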

Learning and Composing Primitive Skills for Dual-arm Manipulation

May 25, 2019

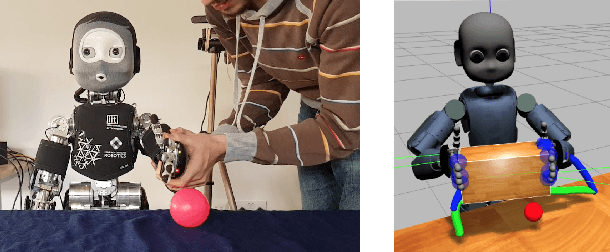

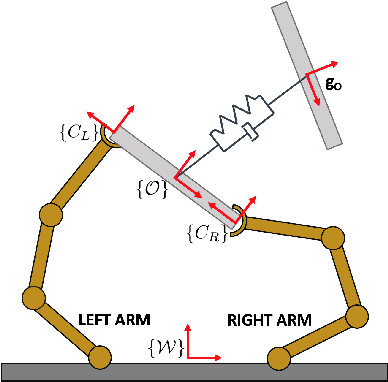

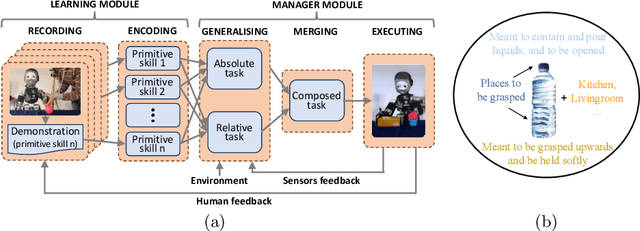

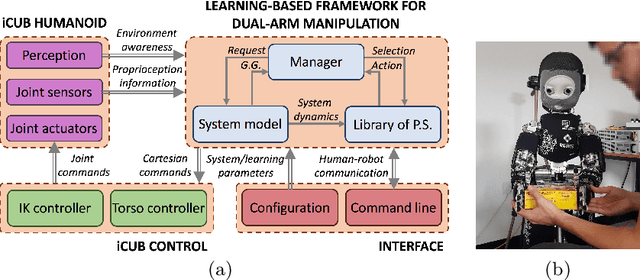

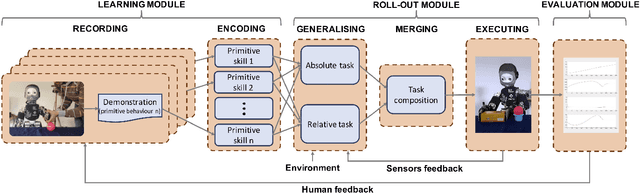

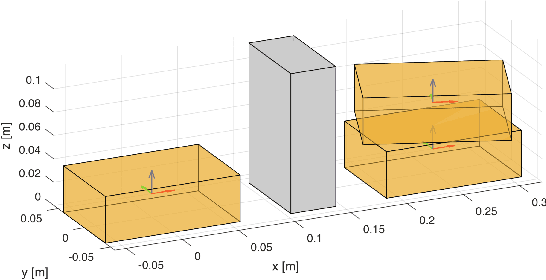

Abstract: In an attempt to endow robots with complex manipulation capabilities, dual-arm anthropomorphic systems have become an important research topic in the robotics community. Most approaches in the literature rely on a thorough understanding of the dynamics underlying the system's behaviour, yet offer limited autonomous generalisation capabilities. To address these limitations, this work proposes a model for dual-arm manipulators based on dynamic movement primitives lying in two orthogonal spaces. The modularity and learning capabilities of this model are leveraged to formulate a novel end-to-end learning-based framework which (i) learns a library of primitive skills from human demonstrations, and (ii) composes this knowledge simultaneously and sequentially to confront novel scenarios. The feasibility of the proposal is evaluated by teaching the iCub humanoid the basic skills needed to succeed in simulated dual-arm pick-and-place tasks. The results suggest that the learning and generalisation capabilities of the proposed framework extend to autonomously conducting undemonstrated dual-arm manipulation tasks.

Learning and Generalisation of Primitives Skills Towards Robust Dual-arm Manipulation

Apr 02, 2019

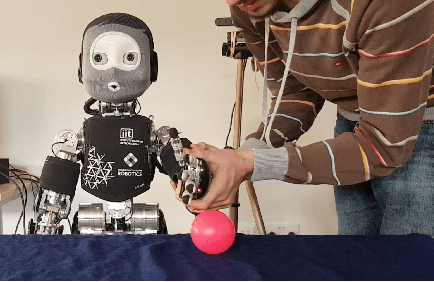

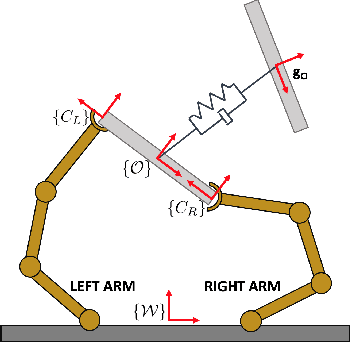

Abstract: Robots are becoming a vital ingredient in society. Some of their daily tasks require dual-arm manipulation skills in the rapidly changing, dynamic and unpredictable real-world environments where they have to operate. Given humans' expertise in these activities, it is natural to study human motion and use the resulting knowledge in robotic control. With this in mind, this work leverages human knowledge to formulate a more general, real-time and less task-specific framework for dual-arm manipulation. The proposed framework is evaluated on the iCub humanoid robot and in several synthetic experiments on a dual-arm pick-and-place task of a parcel in the presence of unexpected obstacles. The results suggest that the method is suitable for robust and generalisable dual-arm manipulation.

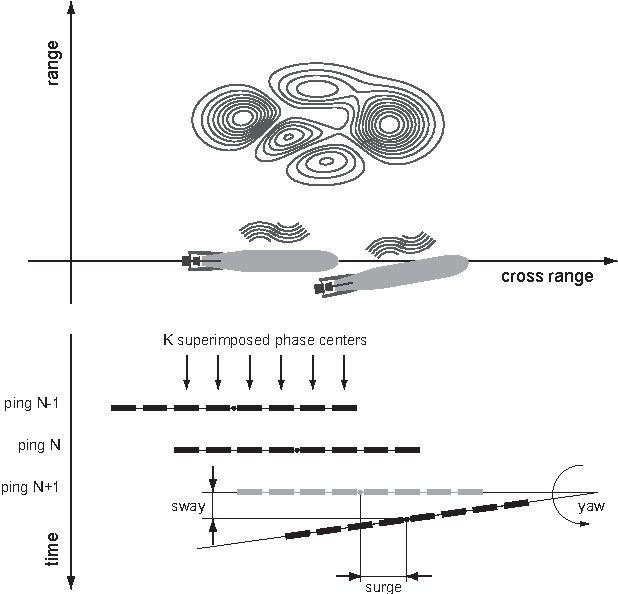

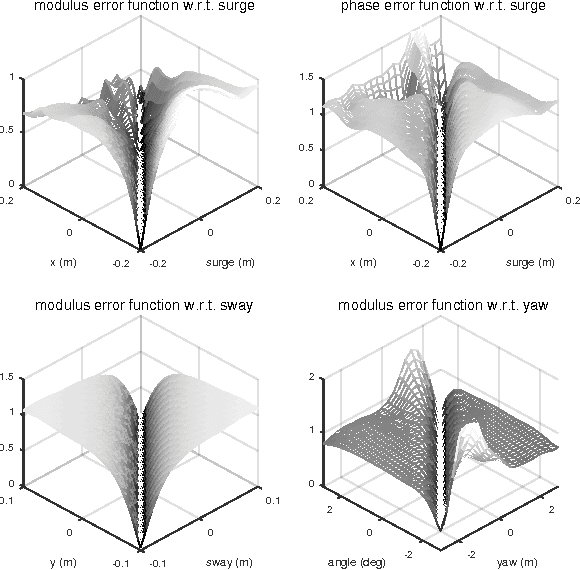

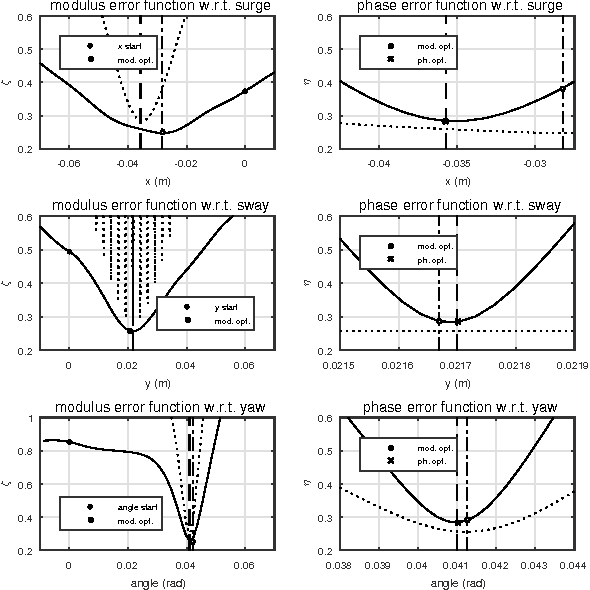

A New Framework for Synthetic Aperture Sonar Micronavigation

Jul 26, 2017

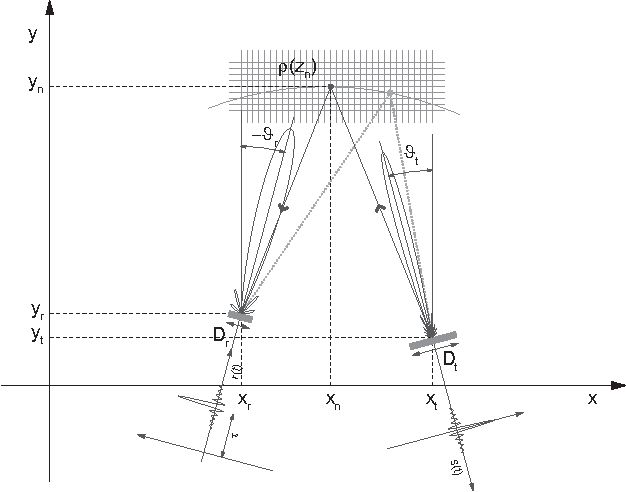

Abstract: Synthetic aperture imaging systems achieve constant azimuth resolution by coherently summing the observations acquired along the aperture path. To this end, the locations at which these observations are acquired must be known with subwavelength accuracy. In underwater Synthetic Aperture Sonar (SAS), the nature of propagation and navigation in water makes retrieving this information challenging. Inertial sensors therefore have to be combined with signal processing techniques, usually referred to as micronavigation. In this paper we propose a novel micronavigation approach based on minimizing an error function between two contiguous pings that share some mutual information. This error is obtained by comparing the vector space intersections between the pings' orthogonal projectors. The effectiveness and generality of the proposed approach are demonstrated through simulations and an experiment performed in a controlled environment.
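The error function itself is not spelled out in the abstract, so the following is only a hedged toy illustration of comparing pings through orthogonal projectors. The projector onto span{v} is P = v v^T / (v^T v), and for two such rank-one projectors the squared Frobenius distance between them equals 2 sin^2(theta), where theta is the angle between the two vectors. The hypothetical `estimate_shift` helper slides a window over a second, delayed and rescaled real-valued "ping" and picks the integer shift whose projector best matches the first. Real SAS data, array geometry and the paper's vector-space-intersection criterion are not modelled.

```python
import math


def projector(v):
    """Orthogonal projector onto span{v}: P = v v^T / (v^T v)."""
    norm2 = sum(a * a for a in v)
    if norm2 == 0.0:
        raise ValueError("cannot project onto the span of a zero vector")
    return [[a * b / norm2 for b in v] for a in v]


def projector_error(u, v):
    """Squared Frobenius distance between the projectors onto span{u} and span{v}."""
    pu, pv = projector(u), projector(v)
    n = len(u)
    return sum((pu[i][j] - pv[i][j]) ** 2 for i in range(n) for j in range(n))


def estimate_shift(ping_a, ping_b, window, max_shift):
    """Hypothetical helper: return the integer shift of ping_b that best matches ping_a."""
    reference = ping_a[:window]
    errors = {s: projector_error(reference, ping_b[s:s + window])
              for s in range(max_shift + 1)}
    return min(errors, key=errors.get)


# Toy "pings": a real-valued chirp, and a copy delayed by 3 samples and scaled by 0.8.
ping_a = [math.sin(0.01 * k * k) for k in range(64)]
ping_b = [0.0] * 3 + [0.8 * s for s in ping_a]
print(estimate_shift(ping_a, ping_b, window=40, max_shift=8))  # expected: 3
```

Because a projector depends only on the subspace, the 0.8 amplitude change does not affect the match; only the delay does.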
