Yunli Chen

A Practical Large-Scale Roadside Multi-View Multi-Sensor Spatial Synchronization Framework for Intelligent Transportation Systems

Nov 04, 2023

Abstract: Spatial synchronization in roadside scenarios is essential for integrating data from multiple sensors at different locations. Current methods based on cascading spatial transformation (CST) often accumulate errors in large-scale deployments. Manual camera calibration is insufficient and labor-intensive, and existing methods are limited to controlled or single-view scenarios. To address these challenges, our research introduces a parallel spatial transformation (PST)-based framework for large-scale, multi-view, multi-sensor scenarios. PST parallelizes the transformation of sensor coordinate systems, which reduces cumulative errors. We incorporate deep learning for precise roadside monocular global localization, reducing manual work. Additionally, we use geolocation cues and an optimization algorithm to improve synchronization accuracy. Our framework has been tested in real-world scenarios, where it outperforms CST-based methods. It significantly enhances large-scale roadside multi-perspective, multi-sensor spatial synchronization and reduces deployment costs.
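To illustrate why cascading can accumulate error while parallel transformation need not, here is a minimal 2D sketch. It is not the paper's method: it assumes a worst-case constant heading bias `eps` in every estimated transform, and the helper names (`rot`, `heading_error`, `cascaded_errors`, `parallel_errors`) are hypothetical. In the cascaded case each sensor is registered to its neighbor and the estimates are chained; in the parallel case each sensor is registered directly to the global frame.

```python
import numpy as np

def rot(theta):
    """2D rotation matrix for angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def heading_error(r_est, r_true):
    """Absolute angle between an estimated and a true rotation."""
    d = r_est @ r_true.T
    return abs(np.arctan2(d[1, 0], d[0, 0]))

def cascaded_errors(n, step, eps):
    """CST-style: chain n neighbor-to-neighbor estimates, each biased by eps."""
    est, true, errs = np.eye(2), np.eye(2), []
    for _ in range(n):
        est = est @ rot(step + eps)   # biased relative transform
        true = true @ rot(step)       # ground-truth relative transform
        errs.append(heading_error(est, true))
    return errs

def parallel_errors(n, step, eps):
    """PST-style: estimate each sensor-to-global transform directly, each biased by eps."""
    true, errs = np.eye(2), []
    for _ in range(n):
        true = true @ rot(step)
        est = true @ rot(eps)         # one biased estimate per sensor, no chaining
        errs.append(heading_error(est, true))
    return errs

n, step, eps = 10, np.deg2rad(3.0), np.deg2rad(0.5)
cst = cascaded_errors(n, step, eps)
pst = parallel_errors(n, step, eps)
print("last-sensor error (deg): cascaded", np.rad2deg(cst[-1]), "parallel", np.rad2deg(pst[-1]))
```

Under this assumed bias model the cascaded error grows linearly with the number of hops, while the parallel error stays at the single-estimate level. With zero-mean random noise the growth would be slower, but the chained estimate would still drift.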

Optimizing Memory Efficiency of Graph Neural Networks on Edge Computing Platforms

Apr 12, 2021

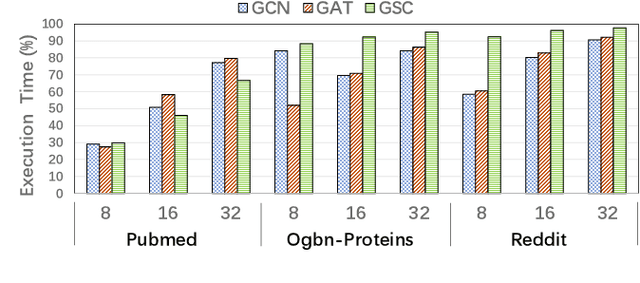

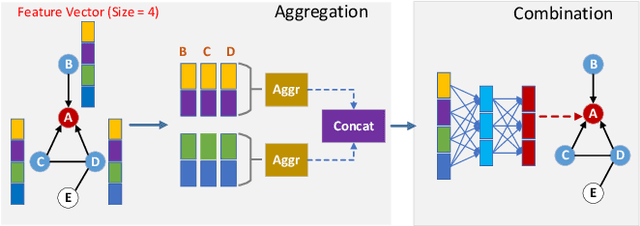

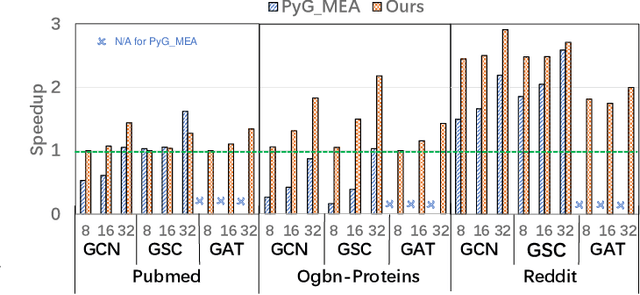

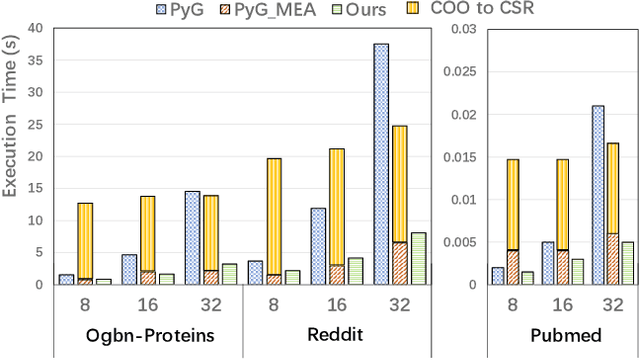

Abstract: Graph neural networks (GNNs) have achieved state-of-the-art performance on various industrial tasks. However, the poor efficiency of GNN inference and frequent Out-Of-Memory (OOM) problems limit the successful application of GNNs on edge computing platforms. To tackle these problems, a feature decomposition approach is proposed to optimize the memory efficiency of GNN inference. The approach is effective across various GNN models and a wide range of datasets, speeding up inference by up to 3x. Furthermore, feature decomposition significantly reduces peak memory usage (up to 5x improvement in memory efficiency) and mitigates OOM problems during GNN inference.
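The abstract does not spell out how the decomposition works, so the following is only one plausible reading, sketched under stated assumptions: neighbor aggregation is done as gather-then-scatter over an edge list, and the feature matrix is split along the feature dimension so that the per-edge message buffer holds one chunk of columns at a time. The function names and the `chunk` parameter are hypothetical.

```python
import numpy as np

def aggregate_full(x, src, dst):
    """Sum-aggregate neighbor features; materializes an (E, d) message buffer."""
    out = np.zeros_like(x)
    np.add.at(out, dst, x[src])       # x[src] has shape (num_edges, d)
    return out

def aggregate_decomposed(x, src, dst, chunk):
    """Same result, but the message buffer is only (E, chunk) at any time."""
    out = np.zeros_like(x)
    for start in range(0, x.shape[1], chunk):
        stop = min(start + chunk, x.shape[1])
        messages = x[src, start:stop]                 # (num_edges, stop - start)
        part = np.zeros((x.shape[0], stop - start), dtype=x.dtype)
        np.add.at(part, dst, messages)                # scatter-add into destination nodes
        out[:, start:stop] = part
    return out

rng = np.random.default_rng(0)
num_nodes, num_edges, dim = 50, 400, 64
x = rng.standard_normal((num_nodes, dim))
src = rng.integers(0, num_nodes, size=num_edges)      # edge source node ids
dst = rng.integers(0, num_nodes, size=num_edges)      # edge destination node ids

full = aggregate_full(x, src, dst)
split = aggregate_decomposed(x, src, dst, chunk=16)
print("results match:", np.allclose(full, split))
```

In this sketch the peak size of the intermediate message buffer shrinks from `num_edges * dim` to `num_edges * chunk` values, which is where a memory saving would come from; the speedup and memory figures quoted in the abstract are the authors' measurements and are not reproduced by this example.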
