Yuichiro Tanaka

Reservoir Computing inspired Matrix Multiplication-free Language Model

Dec 29, 2025

Abstract:Large language models (LLMs) have achieved state-of-the-art performance in natural language processing; however, their high computational cost remains a major bottleneck. In this study, we target computational efficiency by focusing on a matrix multiplication free language model (MatMul-free LM) and further reducing the training cost through an architecture inspired by reservoir computing. Specifically, we partially fix and share the weights of selected layers in the MatMul-free LM and insert reservoir layers to obtain rich dynamic representations without additional training overhead. Additionally, several operations are combined to reduce memory accesses. Experimental results show that the proposed architecture reduces the number of parameters by up to 19%, training time by 9.9%, and inference time by 8.0%, while maintaining comparable performance to the baseline model.

Sign Language Recognition using Parallel Bidirectional Reservoir Computing

Dec 22, 2025

Abstract:Sign language recognition (SLR) facilitates communication between deaf and hearing communities. Deep learning based SLR models are commonly used but require extensive computational resources, making them unsuitable for deployment on edge devices. To address these limitations, we propose a lightweight SLR system that combines parallel bidirectional reservoir computing (PBRC) with MediaPipe. MediaPipe enables real-time hand tracking and precise extraction of hand joint coordinates, which serve as input features for the PBRC architecture. The proposed PBRC architecture consists of two echo state network (ESN) based bidirectional reservoir computing (BRC) modules arranged in parallel to capture temporal dependencies, thereby creating a rich feature representation for classification. We trained our PBRC-based SLR system on the Word-Level American Sign Language (WLASL) video dataset, achieving top-1, top-5, and top-10 accuracies of 60.85%, 85.86%, and 91.74%, respectively. Training time was significantly reduced to 18.67 seconds due to the intrinsic properties of reservoir computing, compared to over 55 minutes for deep learning based methods such as Bi-GRU. This approach offers a lightweight, cost-effective solution for real-time SLR on edge devices.
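The feature-extraction stage described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the class and function names, the reservoir size, the leak rates, the row-sum weight scaling, and the choice to reuse one reservoir for both time directions are all assumptions made for illustration. The sketch shows only the general idea that reservoir weights stay fixed and random, so a linear readout on the resulting features would be the only trained part.

```python
import math
import random


class Reservoir:
    """Fixed random echo state network; its weights are never trained."""

    def __init__(self, n_in, n_res, leak=0.5, norm_bound=0.9, seed=0):
        rng = random.Random(seed)
        self.n_res = n_res
        self.leak = leak
        self.w_in = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_res)]
        w = [[rng.uniform(-1, 1) for _ in range(n_res)] for _ in range(n_res)]
        # Assumption: scale so the largest absolute row sum equals norm_bound.
        # This keeps the spectral radius at or below norm_bound.
        max_row = max(sum(abs(v) for v in row) for row in w)
        self.w = [[v * norm_bound / max_row for v in row] for row in w]

    def final_state(self, seq):
        """Drive the reservoir with a sequence of frames; return the last state."""
        x = [0.0] * self.n_res
        for u in seq:
            pre = [
                sum(wi * ui for wi, ui in zip(self.w_in[i], u))
                + sum(wr * xj for wr, xj in zip(self.w[i], x))
                for i in range(self.n_res)
            ]
            # Leaky-integrator state update.
            x = [(1 - self.leak) * xi + self.leak * math.tanh(p) for xi, p in zip(x, pre)]
        return x


def brc_features(res, seq):
    """Bidirectional pass: final states for the forward and the reversed sequence."""
    return res.final_state(seq) + res.final_state(seq[::-1])


def pbrc_features(modules, seq):
    """Parallel arrangement: concatenate the features of every bidirectional module."""
    feats = []
    for res in modules:
        feats.extend(brc_features(res, seq))
    return feats


if __name__ == "__main__":
    # Hypothetical input: 5 frames with 4 coordinates each
    # (real hand-landmark input would have more coordinates per frame).
    seq = [[0.1 * t, 0.2, -0.1 * t, 0.05] for t in range(5)]
    modules = [Reservoir(4, 8, leak=0.3, seed=1), Reservoir(4, 8, leak=0.9, seed=2)]
    features = pbrc_features(modules, seq)
    print(len(features))  # 32 = 2 modules x 2 directions x 8 units
```

Because no gradient passes through the reservoirs, training reduces to fitting a linear classifier on `features`, which is consistent with the short training time reported in the abstract.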

Hibikino-Musashi@Home 2024 Team Description Paper

Oct 08, 2024

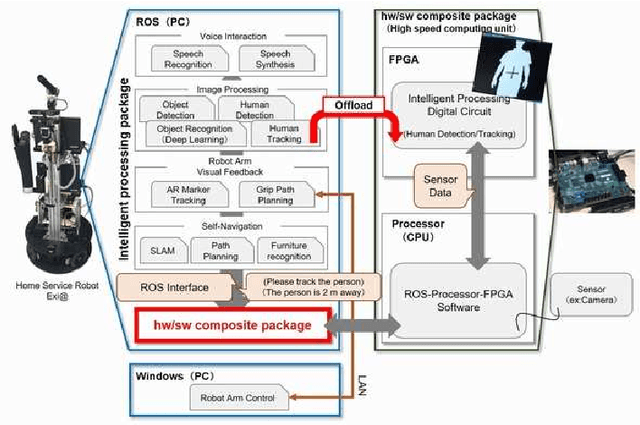

Abstract:This paper provides an overview of the techniques employed by Hibikino-Musashi@Home, which intends to participate in the domestic standard platform league. The team has developed a dataset generator for training a robot vision system and an open-source development environment running on a Human Support Robot simulator. The large language model powered task planner selects appropriate primitive skills to perform the task requested by users. The team aims to design a home service robot that can assist humans in their homes and continuously attends competitions to evaluate and improve the developed system.

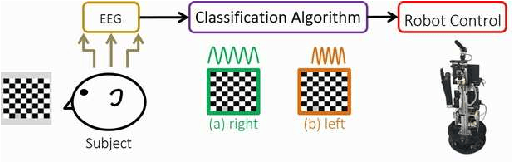

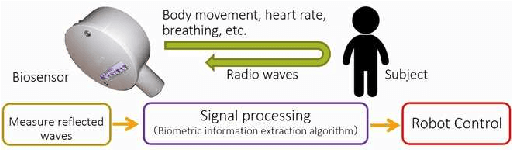

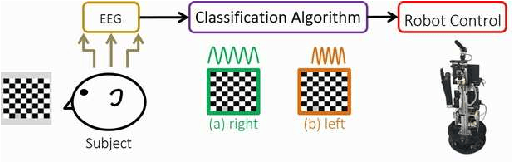

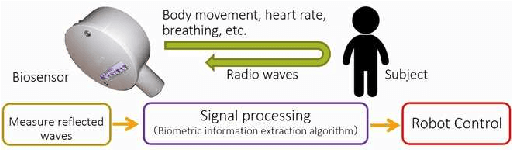

Hibikino-Musashi@Home 2023 Team Description Paper

Oct 19, 2023

Abstract:This paper gives an overview of the techniques used by Hibikino-Musashi@Home, which intends to participate in the domestic standard platform league. The team has developed a dataset generator for training a robot vision system and an open-source development environment running on a Human Support Robot simulator. The robot system comprises self-developed libraries, including those for motion synthesis, and open-source software that runs on the Robot Operating System. The team aims to realize a home service robot that assists humans at home, and continuously attends the competition to evaluate the developed system. A brain-inspired artificial intelligence system is also proposed for service robots, which are expected to work in real home environments.

Hibikino-Musashi@Home 2022 Team Description Paper

Nov 12, 2022

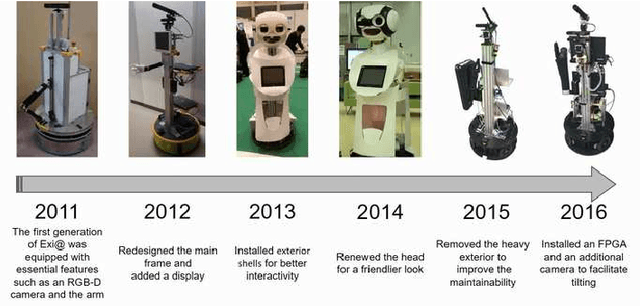

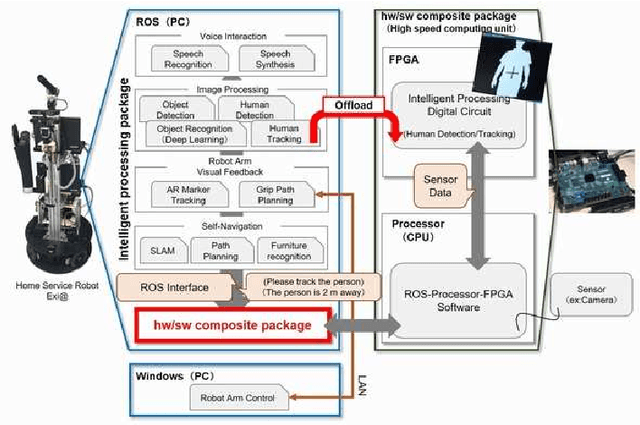

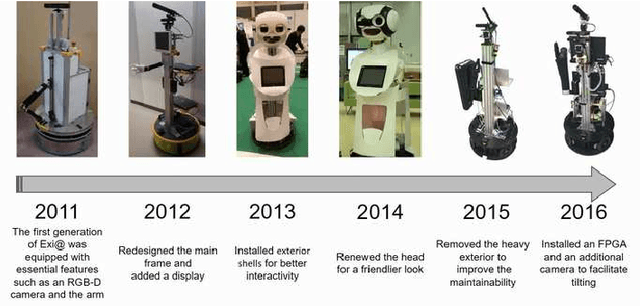

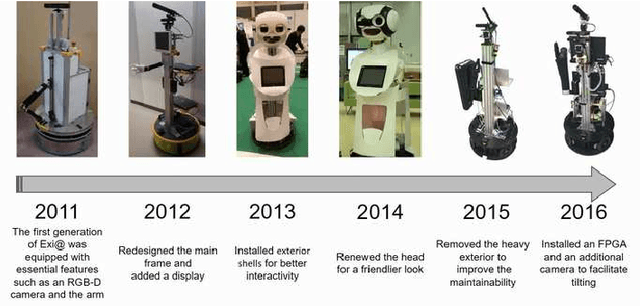

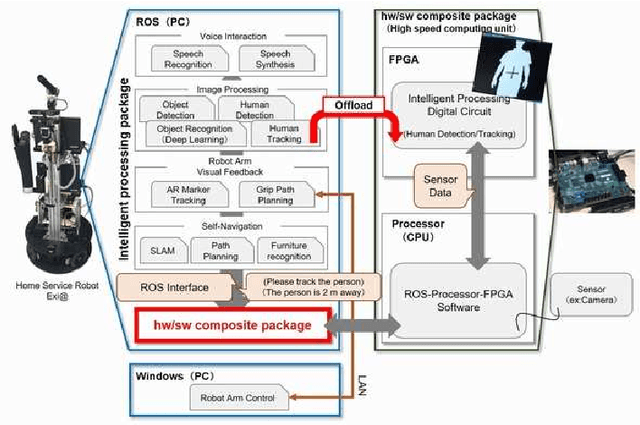

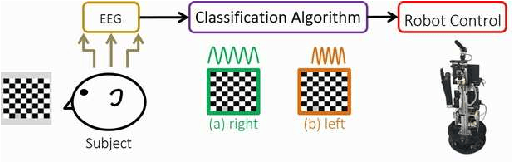

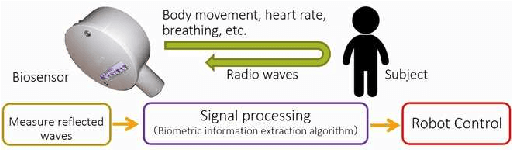

Abstract:Our team, Hibikino-Musashi@Home (HMA), was founded in 2010. It is based in Japan in the Kitakyushu Science and Research Park. Since 2010, we have annually participated in the RoboCup@Home Japan Open competition in the open platform league (OPL). We participated as an open platform league team in the 2017 Nagoya RoboCup competition and as a domestic standard platform league (DSPL) team in the 2017 Nagoya, 2018 Montreal, 2019 Sydney, and 2021 Worldwide RoboCup competitions. We also participated in the World Robot Challenge (WRC) 2018 in the service-robotics category of the partner-robot challenge (real space) and won first place. Currently, we have 27 members from nine different laboratories within the Kyushu Institute of Technology and the University of Kitakyushu. In this paper, we introduce the activities that have been performed by our team and the technologies that we use.

Hibikino-Musashi@Home 2018 Team Description Paper

Nov 09, 2022

Abstract:Our team, Hibikino-Musashi@Home (HMA for short), was founded in 2010. It is based in the Kitakyushu Science and Research Park, Japan. We have participated in the open platform league of the RoboCup@Home Japan Open competition every year since 2010. Moreover, we participated in RoboCup 2017 Nagoya as both an open platform league team and a domestic standard platform league team. Currently, the Hibikino-Musashi@Home team has 20 members from seven different laboratories based in the Kyushu Institute of Technology. In this paper, we introduce the activities of our team and the technologies that we use.

Hibikino-Musashi@Home 2019 Team Description Paper

May 29, 2020

Abstract:Our team, Hibikino-Musashi@Home (HMA), was founded in 2010. It is based in the Kitakyushu Science and Research Park, Japan. Since 2010, we have participated annually in the open platform league of the RoboCup@Home Japan Open competition. We have also participated in RoboCup 2017 Nagoya as both an open platform league team and a domestic standard platform league team, and in RoboCup 2018 Montreal as a domestic standard platform league team. Currently, we have 23 members from seven different laboratories based in the Kyushu Institute of Technology. This paper introduces the activities performed by our team and the technologies that we use.

Hibikino-Musashi@Home 2020 Team Description Paper

May 29, 2020

Abstract:Our team, Hibikino-Musashi@Home (HMA), was founded in 2010. It is based in Japan in the Kitakyushu Science and Research Park. Since 2010, we have annually participated in the RoboCup@Home Japan Open competition in the open platform league (OPL). We participated as an open platform league team in the 2017 Nagoya RoboCup competition and as a domestic standard platform league (DSPL) team in the 2017 Nagoya, 2018 Montreal, and 2019 Sydney RoboCup competitions. We also participated in the World Robot Challenge (WRC) 2018 in the service-robotics category of the partner-robot challenge (real space) and won first place. Currently, we have 20 members from eight different laboratories within the Kyushu Institute of Technology. In this paper, we introduce the activities that have been performed by our team and the technologies that we use.

Hibikino-Musashi@Home 2017 Team Description Paper

Nov 15, 2017

Abstract:Our team, Hibikino-Musashi@Home, was founded in 2010. It is based in the Kitakyushu Science and Research Park, Japan. Since 2010, we have participated in the open platform league of the RoboCup@Home Japan Open competition every year. Currently, the Hibikino-Musashi@Home team has 24 members from seven different laboratories based in the Kyushu Institute of Technology. Our home-service robots are used as platforms for both education and implementation of our research outcomes. In this paper, we introduce our team and the technologies that we have implemented in our robots.
