Yu-Ching Shih

Improved Evidence Extraction for Document Inconsistency Detection with LLMs

Jan 06, 2026Abstract:Large language models (LLMs) are becoming useful in many domains due to their impressive abilities that arise from large training datasets and large model sizes. However, research on LLM-based approaches to document inconsistency detection is relatively limited. There are two key aspects of document inconsistency detection: (i) classification of whether there exists any inconsistency, and (ii) providing evidence of the inconsistent sentences. We focus on the latter, and introduce new comprehensive evidence-extraction metrics and a redact-and-retry framework with constrained filtering that substantially improves LLM-based document inconsistency detection over direct prompting. We back our claims with promising experimental results.

Improved LLM Agents for Financial Document Question Answering

Jun 10, 2025

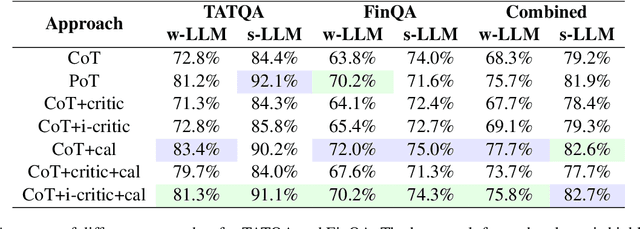

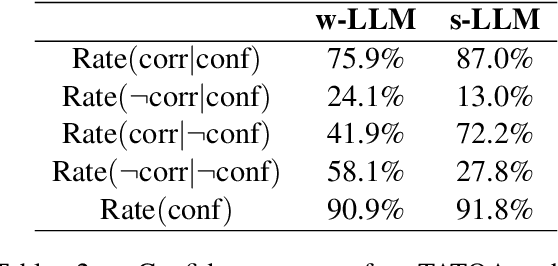

Abstract:Large language models (LLMs) have shown impressive capabilities on numerous natural language processing tasks. However, LLMs still struggle with numerical question answering for financial documents that include tabular and textual data. Recent works have showed the effectiveness of critic agents (i.e., self-correction) for this task given oracle labels. Building upon this framework, this paper examines the effectiveness of the traditional critic agent when oracle labels are not available, and show, through experiments, that this critic agent's performance deteriorates in this scenario. With this in mind, we present an improved critic agent, along with the calculator agent which outperforms the previous state-of-the-art approach (program-of-thought) and is safer. Furthermore, we investigate how our agents interact with each other, and how this interaction affects their performance.

Flexible and Efficient Drift Detection without Labels

Jun 10, 2025

Abstract:Machine learning models are being increasingly used to automate decisions in almost every domain, and ensuring the performance of these models is crucial for ensuring high quality machine learning enabled services. Ensuring concept drift is detected early is thus of the highest importance. A lot of research on concept drift has focused on the supervised case that assumes the true labels of supervised tasks are available immediately after making predictions. Controlling for false positives while monitoring the performance of predictive models used to make inference from extremely large datasets periodically, where the true labels are not instantly available, becomes extremely challenging. We propose a flexible and efficient concept drift detection algorithm that uses classical statistical process control in a label-less setting to accurately detect concept drifts. We shown empirically that under computational constraints, our approach has better statistical power than previous known methods. Furthermore, we introduce a new drift detection framework to model the scenario of detecting drift (without labels) given prior detections, and show our how our drift detection algorithm can be incorporated effectively into this framework. We demonstrate promising performance via numerical simulations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge