Yichao Yuan

MoE-Lens: Towards the Hardware Limit of High-Throughput MoE LLM Serving Under Resource Constraints

Apr 12, 2025

Abstract: Mixture of Experts (MoE) LLMs, characterized by their sparse activation patterns, offer a promising approach to scaling language models while avoiding proportionally increasing the inference cost. However, their large parameter sizes present deployment challenges in resource-constrained environments with limited GPU memory capacity, as GPU memory is often insufficient to accommodate the full set of model weights. Consequently, typical deployments rely on CPU-GPU hybrid execution: the GPU handles compute-intensive GEMM operations, while the CPU processes the relatively lightweight attention mechanism. This setup introduces a key challenge: how to effectively optimize resource utilization across CPU and GPU? Prior work has designed system optimizations based on performance models with limited scope. Specifically, such models do not capture the complex interactions between hardware properties and system execution mechanisms. Therefore, previous approaches neither identify nor achieve the hardware limit. This paper presents MoE-Lens, a high-throughput MoE LLM inference system designed through holistic performance modeling for resource-constrained environments. Our performance model thoroughly analyzes various fundamental system components, including CPU memory capacity, GPU compute power, and workload characteristics, to understand the theoretical performance upper bound of MoE inference. Furthermore, it captures the system execution mechanisms to identify the key hardware bottlenecks and accurately predict the achievable throughput. Informed by our performance model, MoE-Lens introduces an inference system approaching hardware limits. Evaluated on diverse MoE models and datasets, MoE-Lens outperforms the state-of-the-art solution by 4.6x on average (up to 25.5x), with our theoretical model predicting performance with an average 94% accuracy.

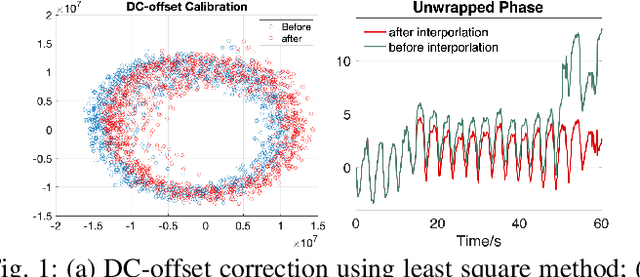

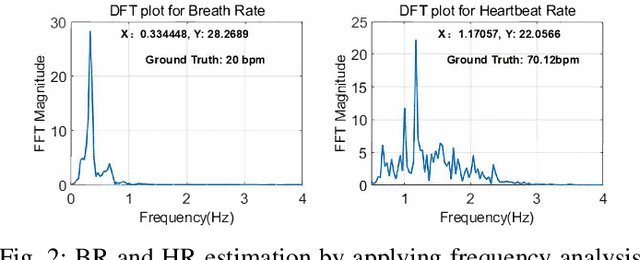

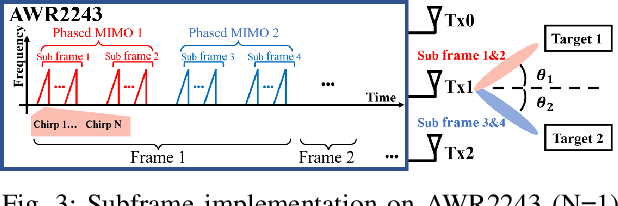

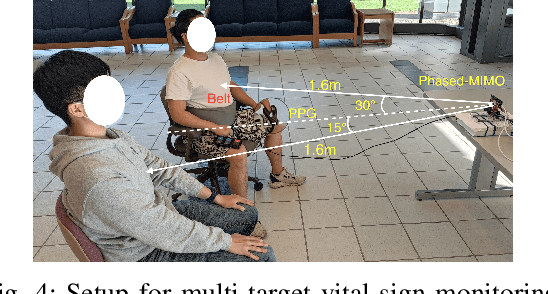

Simultaneous Monitoring of Multiple People's Vital Sign Leveraging a Single Phased-MIMO Radar

Oct 15, 2021

Abstract: Vital sign monitoring plays a critical role in tracking the physiological state of people and enabling various health-related applications (e.g., recommending a change of lifestyle, examining the risk of diseases). Traditional approaches rely on hospitalization or body-attached instruments, which are costly and intrusive. Therefore, researchers have been exploring contactless vital sign monitoring with radio frequency signals in recent years. Early studies with continuous-wave radars or WiFi devices detect the vital signs of a single individual, but it remains challenging to simultaneously monitor the vital signs of multiple subjects, especially those located in close proximity. In this paper, we design and implement a time-division multiplexing (TDM) phased-MIMO radar sensing scheme for high-precision vital sign monitoring of multiple people. Our phased-MIMO radar can steer the mmWave beam towards different directions with a microsecond delay, which enables capturing the vital signs of multiple individuals at the same radial distance to the radar. Furthermore, we develop a TDM-MIMO technique to fully utilize all transmitting antenna (TX)-receiving antenna (RX) pairs, thereby significantly boosting the signal-to-noise ratio. Based on the designed TDM phased-MIMO radar, we develop a system to automatically localize multiple human subjects and estimate their vital signs. Extensive evaluations show that under two-subject scenarios, our system can achieve an error of less than 1 beat per minute (BPM) and 3 BPM for breathing rate (BR) and heartbeat rate (HR) estimations, respectively, at a subject-to-radar distance of $1.6~\mathrm{m}$. The minimal subject-to-subject angle separation is $40^{\circ}$, corresponding to a close distance of $0.5~\mathrm{m}$ between two subjects, which outperforms the state-of-the-art.
