Yesid Fonseca

World Models Unlock Optimal Foraging Strategies in Reinforcement Learning Agents

Dec 14, 2025

Abstract: Patch foraging involves the deliberate and planned process of determining the optimal time to depart from a resource-rich region and investigate potentially more beneficial alternatives. The Marginal Value Theorem (MVT) is frequently used to characterize this process, offering an optimality model for such foraging behaviors. Although this model has been widely used to make predictions in behavioral ecology, discovering the computational mechanisms that facilitate the emergence of optimal patch-foraging decisions in biological foragers remains under investigation. Here, we show that artificial foragers equipped with learned world models naturally converge to MVT-aligned strategies. Using a model-based reinforcement learning agent that acquires a parsimonious predictive representation of its environment, we demonstrate that anticipatory capabilities, rather than reward maximization alone, drive efficient patch-leaving behavior. Compared with standard model-free RL agents, these model-based agents exhibit decision patterns similar to many of their biological counterparts, suggesting that predictive world models can serve as a foundation for more explainable and biologically grounded decision-making in AI systems. Overall, our findings highlight the value of ecological optimality principles for advancing interpretable and adaptive AI.
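For readers unfamiliar with the Marginal Value Theorem, the following is a minimal sketch of the rule it states: a forager should leave a patch when the instantaneous intake rate drops to the long-run average rate, travel time included. The saturating gain curve, its parameters (`a`, `tau`), and the function names are illustrative assumptions and are not taken from the paper.

```python
import math

# Hypothetical diminishing-returns gain curve: cumulative reward after
# spending t time units in a patch. The shape and parameters are assumptions.
def gain(t, a=10.0, tau=5.0):
    return a * (1.0 - math.exp(-t / tau))

# Instantaneous intake rate, i.e. the derivative of gain with respect to t.
def marginal_gain(t, a=10.0, tau=5.0):
    return (a / tau) * math.exp(-t / tau)

def optimal_residence_time(travel_time, a=10.0, tau=5.0,
                           t_max=100.0, steps=100_000):
    """Grid search for the residence time that maximizes the long-run
    average intake rate gain(t) / (t + travel_time)."""
    best_t, best_rate = 0.0, 0.0
    for i in range(1, steps + 1):
        t = t_max * i / steps
        rate = gain(t, a, tau) / (t + travel_time)
        if rate > best_rate:
            best_t, best_rate = t, rate
    return best_t, best_rate

t_star, rate_star = optimal_residence_time(travel_time=3.0)
t_far, rate_far = optimal_residence_time(travel_time=6.0)

# At the optimum the marginal gain matches the average rate (the MVT
# condition), and longer travel times favor longer stays in each patch.
print(f"leave after {t_star:.2f} time units, average rate {rate_star:.3f}")
print(f"marginal gain at departure: {marginal_gain(t_star):.3f}")
print(f"with doubled travel time, leave after {t_far:.2f} time units")
```

In this toy setting the optimum is found by brute force; the abstract's claim is that a model-based agent reaches comparable patch-leaving behavior through its learned world model.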

Can LLM-Augmented autonomous agents cooperate?, An evaluation of their cooperative capabilities through Melting Pot

Mar 18, 2024

Abstract: As the field of AI continues to evolve, a significant dimension of this progression is the development of Large Language Models and their potential to enhance multi-agent artificial intelligence systems. This paper explores the cooperative capabilities of Large Language Model-augmented Autonomous Agents (LAAs) using the well-known Melting Pot environments along with reference models such as GPT4 and GPT3.5. Preliminary results suggest that while these agents demonstrate a propensity for cooperation, they still struggle with effective collaboration in given environments, emphasizing the need for more robust architectures. The study's contributions include an abstraction layer to adapt Melting Pot game scenarios for LLMs; the implementation of a reusable architecture for LLM-mediated agent development, which includes short- and long-term memories and different cognitive modules; and the evaluation of cooperation capabilities using a set of metrics tied to Melting Pot's "Commons Harvest" game. The paper closes by discussing the limitations of the current architectural framework and the potential of a new set of modules that fosters better cooperation among LAAs.

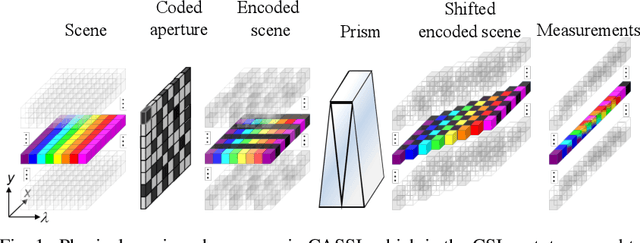

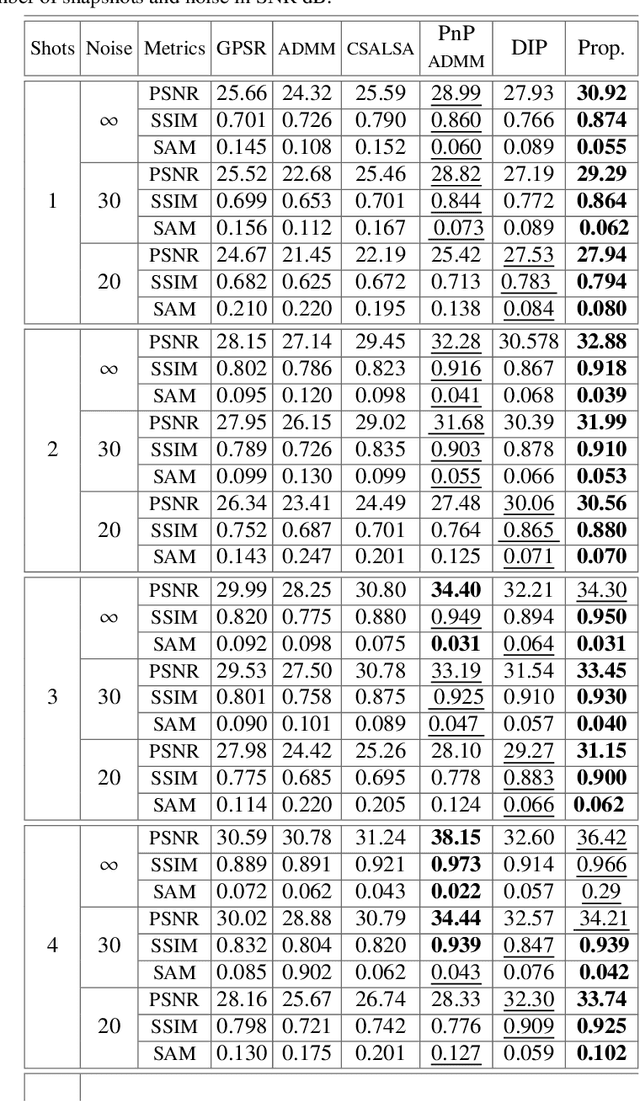

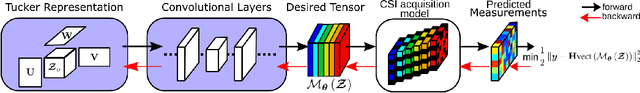

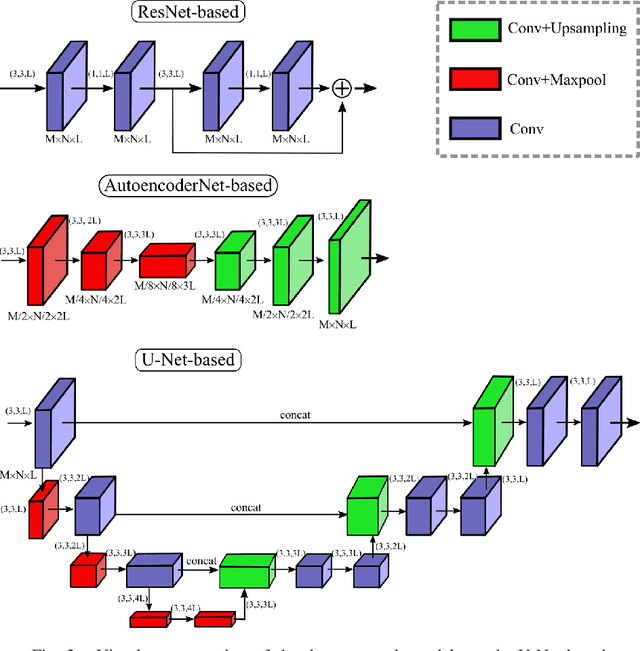

Compressive Spectral Image Reconstruction using Deep Prior and Low-Rank Tensor Representation

Jan 19, 2021

Abstract: Compressive spectral imaging (CSI) has emerged as an alternative spectral image acquisition technology, which reduces the number of measurements at the cost of requiring a recovery process. In general, the reconstruction methods are based on hand-crafted priors used as regularizers in optimization algorithms or on recent deep neural networks employed as image generators to learn a non-linear mapping from the low-dimensional compressed measurements to the image space. However, these data-driven methods need many spectral images to obtain good performance. In this work, a deep recovery framework for CSI without training data is presented. The proposed method is based on the fact that the structure of some deep neural networks, together with an appropriate low-dimensional structure, is sufficient to impose structure on the underlying spectral image from CSI. We analyzed the low-dimensional structure via the Tucker representation, modeled in the first net layer. The proposed scheme is obtained by minimizing the $\ell_2$-norm distance between the compressive measurements and the predicted measurements, and the desired recovered spectral image is formed just before the forward operator. Simulated and experimental results verify the effectiveness of the proposed method.
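One way to read the objective described in this abstract is sketched below. All symbols are assumptions introduced here for illustration and are not the paper's notation: $\mathbf{y}$ denotes the compressive measurements, $\mathbf{H}$ the CSI forward operator, $\mathcal{N}_{\theta}$ the untrained network with weights $\theta$, and $\mathcal{G}$, $\mathbf{U}_1$, $\mathbf{U}_2$, $\mathbf{U}_3$ the core tensor and factor matrices of a Tucker representation placed in the first layer.

$$
\hat{\theta}, \hat{\mathcal{G}}, \{\hat{\mathbf{U}}_i\} \in \arg\min_{\theta,\, \mathcal{G},\, \{\mathbf{U}_i\}} \left\| \mathbf{y} - \mathbf{H}\, \mathrm{vec}\!\left( \mathcal{N}_{\theta}\!\left( \mathcal{G} \times_1 \mathbf{U}_1 \times_2 \mathbf{U}_2 \times_3 \mathbf{U}_3 \right) \right) \right\|_2^2
$$

Under this reading, the recovered spectral image is the network output $\hat{\mathcal{X}} = \mathcal{N}_{\hat{\theta}}( \hat{\mathcal{G}} \times_1 \hat{\mathbf{U}}_1 \times_2 \hat{\mathbf{U}}_2 \times_3 \hat{\mathbf{U}}_3 )$, taken just before $\mathbf{H}$ is applied. The paper itself may define the variables and any additional terms differently.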
