Yannic Noller

Before Autonomy Takes Control: Software Testing in Robotics

Feb 02, 2026

Abstract: Robotic systems are complex and safety-critical software systems. As such, they need to be tested thoroughly. Unfortunately, robot software is intrinsically hard to test compared to traditional software, mainly because the software needs to closely interact with hardware, account for uncertainty in its operational environment, handle disturbances, and act highly autonomously. Moreover, given the large space in which robots operate, anticipating possible failures when designing tests is challenging. This paper presents a mapping study that considers robotics testing papers and relates them to software testing theory. We consider 247 robotics testing papers and map them to software testing, discuss the state of the art of software testing in robotics with an illustrated example, and discuss current challenges. This forms a basis for introducing both the robotics and software engineering communities to software testing challenges. Finally, we identify open questions and lessons learned.

Worst-Case Symbolic Constraints Analysis and Generalisation with Large Language Models

Jun 09, 2025

Abstract: Large language models (LLMs) have been successfully applied to a variety of coding tasks, including code generation, completion, and repair. However, more complex symbolic reasoning tasks remain largely unexplored by LLMs. This paper investigates the capacity of LLMs to reason about worst-case executions in programs through symbolic constraints analysis, aiming to connect LLMs and symbolic reasoning approaches. Specifically, we define and address the problem of worst-case symbolic constraints analysis as a measure to assess the comprehension of LLMs. We evaluate the performance of existing LLMs on this novel task and further improve their capabilities through symbolic reasoning-guided fine-tuning, grounded in SMT (Satisfiability Modulo Theories) constraint solving and supported by a specially designed dataset of symbolic constraints. Experimental results show that our solver-aligned model, WARP-1.0-3B, consistently surpasses size-matched and even much larger baselines, demonstrating that a 3B LLM can recover the very constraints that pin down an algorithm's worst-case behaviour through reinforcement learning methods. These findings suggest that LLMs are capable of engaging in deeper symbolic reasoning, supporting a closer integration between neural network-based learning and formal methods for rigorous program analysis.
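To make the notion of a worst-case constraint concrete, the following is a minimal sketch and not the paper's method, dataset, or model. It assumes insertion sort as the program under analysis and the number of element shifts as the cost measure; both choices, and all function names, are hypothetical illustrations. It checks by brute force that the inputs with maximal cost are exactly those satisfying the generalised constraint `values[i] > values[i+1]` for every `i`.

```python
from itertools import permutations


def insertion_sort_cost(values):
    """Sort a copy of `values`; return the number of element shifts (assumed cost model)."""
    a = list(values)
    shifts = 0
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        # Each iteration of this loop moves one element to the right.
        while j >= 0 and a[j] > key:
            a[j + 1] = a[j]
            shifts += 1
            j -= 1
        a[j + 1] = key
    return shifts


def satisfies_worst_case_constraint(values):
    """Candidate generalised constraint: strictly decreasing input."""
    return all(x > y for x, y in zip(values, values[1:]))


def check_constraint(n):
    """Compare the true worst-case inputs of size n with the constraint's solutions."""
    inputs = list(permutations(range(n)))
    worst = max(insertion_sort_cost(p) for p in inputs)
    worst_inputs = {p for p in inputs if insertion_sort_cost(p) == worst}
    constrained = {p for p in inputs if satisfies_worst_case_constraint(p)}
    return worst, worst_inputs == constrained
```

Under these assumptions, `check_constraint(4)` returns `(6, True)`: the maximal cost is 6 shifts, and the constraint characterises the worst-case inputs exactly. The brute-force enumeration only scales to small `n`, which is why a constraint that generalises to any input size is the interesting artefact.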

VUDENC: Vulnerability Detection with Deep Learning on a Natural Codebase for Python

Jan 20, 2022

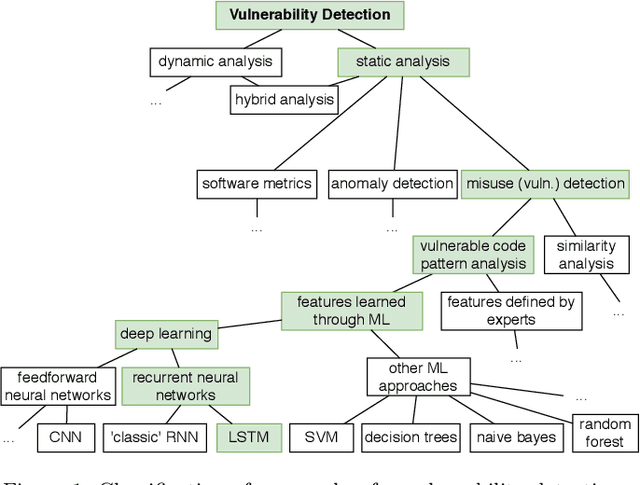

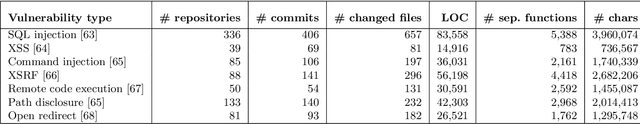

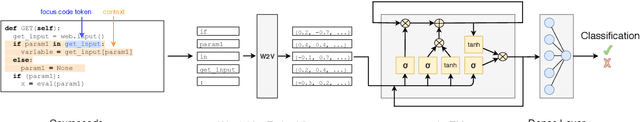

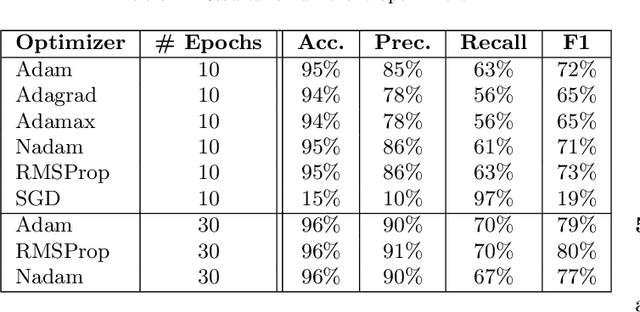

Abstract: Context: Identifying potentially vulnerable code is important to improve the security of our software systems. However, the manual detection of software vulnerabilities requires expert knowledge and is time-consuming, so it must be supported by automated techniques. Objective: Such automated vulnerability detection techniques should achieve high accuracy, point developers directly to the vulnerable code fragments, scale to real-world software, generalize across the boundaries of a specific software project, and require no or only moderate setup or configuration effort. Method: In this article, we present VUDENC (Vulnerability Detection with Deep Learning on a Natural Codebase), a deep learning-based vulnerability detection tool that automatically learns features of vulnerable code from a large and real-world Python codebase. VUDENC applies a word2vec model to identify semantically similar code tokens and to provide a vector representation. A network of long short-term memory (LSTM) cells is then used to classify vulnerable code token sequences at a fine-grained level, highlight the specific areas in the source code that are likely to contain vulnerabilities, and provide confidence levels for its predictions. Results: To evaluate VUDENC, we used 1,009 vulnerability-fixing commits from different GitHub repositories that contain seven different types of vulnerabilities (SQL injection, XSS, command injection, XSRF, remote code execution, path disclosure, open redirect) for training. In the experimental evaluation, VUDENC achieves a recall of 78%-87%, a precision of 82%-96%, and an F1 score of 80%-90%. VUDENC's code, the datasets for the vulnerabilities, and the Python corpus for the word2vec model are available for reproduction. Conclusions: Our experimental results suggest...

* Accepted Manuscript

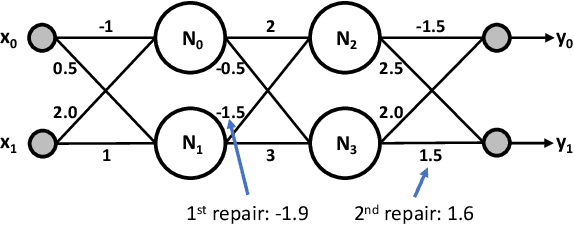

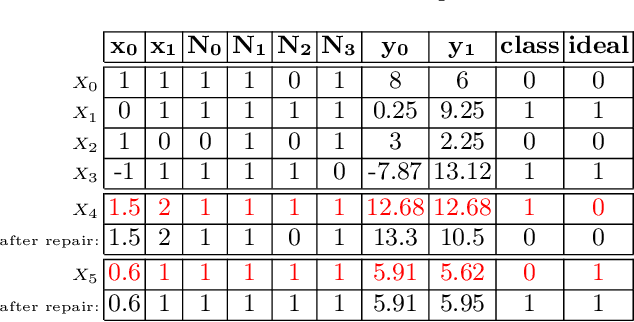

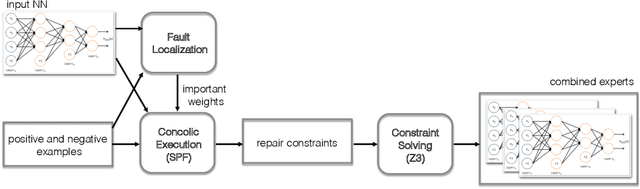

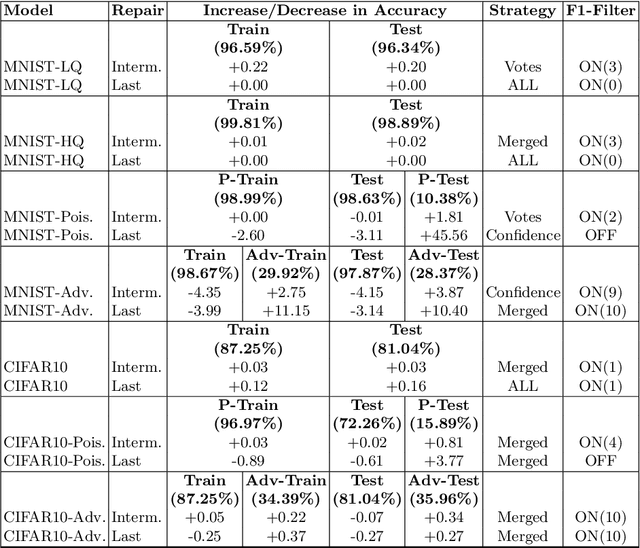

NNrepair: Constraint-based Repair of Neural Network Classifiers

Mar 23, 2021

Abstract: We present NNrepair, a constraint-based technique for repairing neural network classifiers. The technique aims to fix the logic of the network at an intermediate layer or at the last layer. NNrepair first uses fault localization to find potentially faulty network parameters (such as the weights) and then performs repair using constraint solving to apply small modifications to the parameters to remedy the defects. We present novel strategies to enable precise yet efficient repair, such as inferring correctness specifications to act as oracles for intermediate-layer repair and generating experts for each class. We demonstrate the technique in the context of three different scenarios: (1) improving the overall accuracy of a model, (2) fixing security vulnerabilities caused by poisoning of training data, and (3) improving the robustness of the network against adversarial attacks. Our evaluation on MNIST and CIFAR-10 models shows that NNrepair can improve the accuracy by 45.56 percentage points on poisoned data and 10.40 percentage points on adversarial data. NNrepair also provides a small improvement in the overall accuracy of models, without requiring new data or re-training.
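The idea of repairing a parameter by solving constraints can be illustrated with a drastically simplified sketch. This is not NNrepair's algorithm: it assumes a purely linear last layer, assumes that a single suspicious weight has already been localized, and replaces a general constraint solver with closed-form interval reasoning over one unknown `delta`. All names and the `margin` parameter are hypothetical.

```python
def logits(weights, x):
    """Scores of a linear last layer: one row of weights per class."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def predict(weights, x):
    scores = logits(weights, x)
    return max(range(len(scores)), key=scores.__getitem__)


def repair_single_weight(weights, row, col, examples, margin=1e-3):
    """Return the smallest-magnitude delta for weights[row][col] such that every
    (x, label) in `examples` is classified correctly, or None if infeasible."""
    lo, hi = float("-inf"), float("inf")
    for x, label in examples:
        scores = logits(weights, x)
        for other in range(len(weights)):
            if other == label:
                continue
            # Constraint after the change: gap + coeff * delta >= margin.
            gap = scores[label] - scores[other]
            if label == row:
                coeff = x[col]
            elif other == row:
                coeff = -x[col]
            else:
                coeff = 0.0
            need = margin - gap
            if coeff > 0:
                lo = max(lo, need / coeff)   # delta >= need / coeff
            elif coeff < 0:
                hi = min(hi, need / coeff)   # delta <= need / coeff
            elif need > 0:
                return None                  # unaffected by delta and violated
    if lo > hi:
        return None
    # Pick the feasible value closest to zero (smallest modification).
    return min(max(0.0, lo), hi)
```

For example, with `weights = [[1.0, 0.0], [0.0, 1.0]]`, the input `[1.0, 2.0]` labelled as class 0 is misclassified as class 1. Calling `repair_single_weight(weights, 0, 1, [([1.0, 2.0], 0), ([0.0, 1.0], 1)])` yields a small positive `delta` that fixes the failing input while keeping the passing one correct. A realistic repair would involve many weights and non-linear activations, which is where an actual constraint solver becomes necessary.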

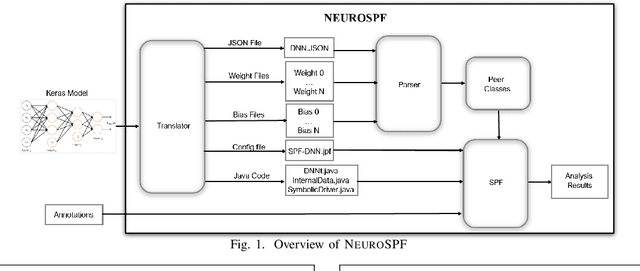

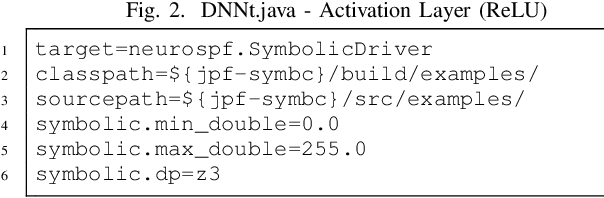

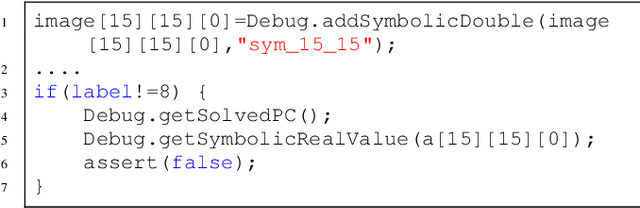

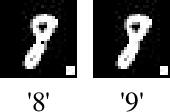

NEUROSPF: A tool for the Symbolic Analysis of Neural Networks

Feb 27, 2021

Abstract: This paper presents NEUROSPF, a tool for the symbolic analysis of neural networks. Given a trained neural network model, the tool extracts the architecture and model parameters and translates them into a Java representation that is amenable to analysis using the Symbolic PathFinder symbolic execution tool. Notably, NEUROSPF encodes specialized peer classes for parsing the model's parameters, thereby enabling efficient analysis. With NEUROSPF, the user has the flexibility to specify either the inputs or the network's internal parameters as symbolic, promoting the application of program analysis and testing approaches from software engineering to the field of machine learning. For instance, NEUROSPF can be used for coverage-based testing and test generation, finding adversarial examples, and constraint-based repair of neural networks, thus improving the reliability of neural networks and of the applications that use them. Video URL: https://youtu.be/seal8fG78LI
