Yangyang Qu

Subject Information Extraction for Novelty Detection with Domain Shifts

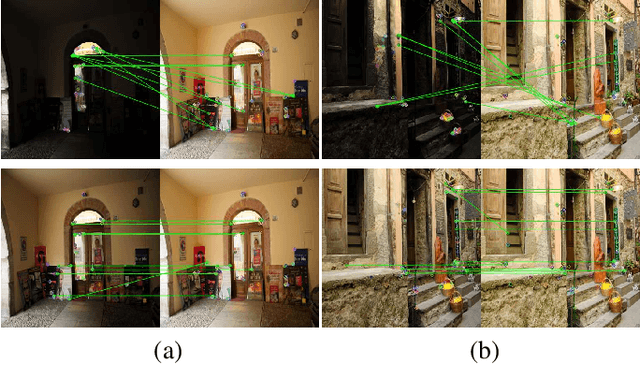

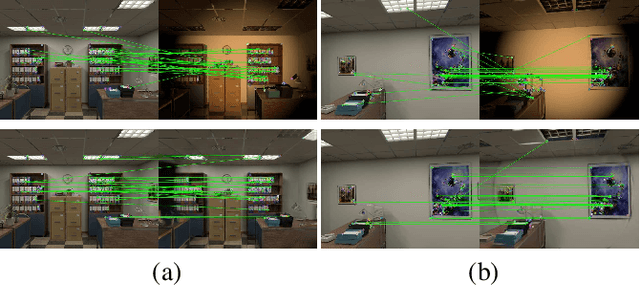

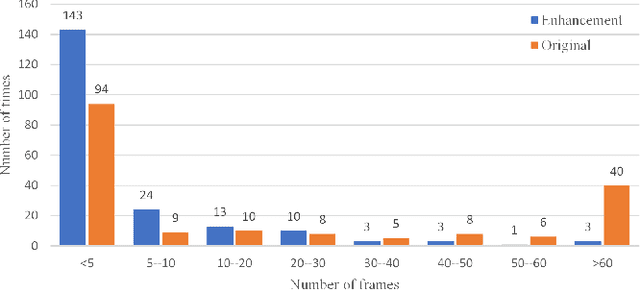

Apr 30, 2025

Abstract: Unsupervised novelty detection (UND), aimed at identifying novel samples, is essential in fields like medical diagnosis, cybersecurity, and industrial quality control. Most existing UND methods assume that the training data and the normal testing data originate from the same domain, and they only consider the distribution variation between training and testing data. In real scenarios, however, normal testing data and training data often originate from different domains, a challenge known as domain shift. This discrepancy often leads existing methods to misclassify normal data as novel. A typical situation is that the normal testing data and the training data describe the same subject but differ in background conditions. To address this problem, we introduce a novel method that separates subject information from the background variation, which encapsulates the domain information, to enhance detection performance under domain shifts. The proposed method minimizes the mutual information between the representations of the subject and the background while modelling the background variation with a deep Gaussian mixture model. Novelty detection is conducted solely on the subject representations and hence is not affected by the variation of domains. Extensive experiments demonstrate that our model generalizes effectively to unseen domains and significantly outperforms baseline methods, especially under substantial domain shifts between training and testing data.
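The idea of scoring novelty on the subject representation alone can be illustrated with a minimal sketch. It assumes the subject/background split is already given (the paper learns it; this sketch does not), and it swaps in a simple diagonal Gaussian distance as the novelty score rather than the authors' model. All names and toy values below are hypothetical.

```python
import math
import statistics

def fit_diag_gaussian(vectors):
    """Per-dimension mean and standard deviation of equal-length vectors."""
    dims = list(zip(*vectors))
    means = [statistics.fmean(d) for d in dims]
    # Guard against zero spread so the score never divides by zero.
    stds = [statistics.pstdev(d) or 1e-6 for d in dims]
    return means, stds

def novelty_score(subject_vec, means, stds):
    """Diagonal Mahalanobis distance of a subject vector from the training data."""
    return math.sqrt(sum(((x - m) / s) ** 2
                         for x, m, s in zip(subject_vec, means, stds)))

# Hypothetical representations, each a (subject part, background part) pair.
train = [([1.0, 2.0], [0.1]), ([1.2, 1.8], [0.0]),
         ([0.8, 2.2], [0.2]), ([1.0, 2.0], [0.1])]

# Only the subject parts are used to fit the scorer (assumption of this sketch).
means, stds = fit_diag_gaussian([subject for subject, _ in train])

same_domain_normal = ([1.1, 1.9], [0.1])
shifted_domain_normal = ([1.1, 1.9], [9.0])  # same subject, different background
novel_sample = ([4.0, -1.0], [0.1])

for name, (subject, _) in [("same domain", same_domain_normal),
                           ("shifted domain", shifted_domain_normal),
                           ("novel", novel_sample)]:
    print(name, round(novelty_score(subject, means, stds), 3))
```

Because the background part never enters the score, the two normal samples receive identical scores regardless of how far the background has shifted, while the sample with an unfamiliar subject scores much higher.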

UMLE: Unsupervised Multi-discriminator Network for Low Light Enhancement

Dec 25, 2020

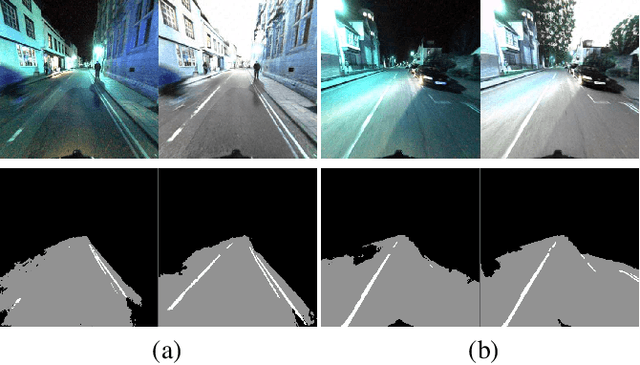

Abstract: Low-light image enhancement, such as recovering color and texture details from low-light images, is a complex and vital task. For automated driving, low-light scenarios have serious implications for vision-based applications. To address this problem, we propose a real-time unsupervised generative adversarial network (GAN) containing multiple discriminators, i.e., a multi-scale discriminator, a texture discriminator, and a color discriminator. These distinct discriminators allow images to be evaluated from different perspectives. Further, considering that different channel features contain different information and that illumination is uneven across the image, we propose a feature fusion attention module. This module combines channel attention with pixel attention mechanisms to extract image features. Additionally, to reduce training time, we adopt a shared encoder for the generator and the discriminator. This makes the structure of the model more compact and the training more stable. Experiments indicate that our method is superior to state-of-the-art methods in qualitative and quantitative evaluations, and that significant improvements are achieved in both autopilot positioning and detection results.
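A rough sketch of how channel attention and pixel attention can be combined on a feature map follows. It is an illustration under simplifying assumptions, not the module from the paper: the weights here come from fixed averages passed through a sigmoid, whereas a trained module would use learned layers, and the multiplicative fusion is also an assumption. All function names are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_attention(feat):
    """One weight per channel, derived from that channel's global average."""
    weights = []
    for channel in feat:
        values = [v for row in channel for v in row]
        weights.append(sigmoid(sum(values) / len(values)))
    return weights

def pixel_attention(feat):
    """One weight per spatial position, derived from the mean across channels."""
    n_ch = len(feat)
    height, width = len(feat[0]), len(feat[0][0])
    return [[sigmoid(sum(feat[c][i][j] for c in range(n_ch)) / n_ch)
             for j in range(width)]
            for i in range(height)]

def fuse(feat):
    """Scale every value by its channel weight and its pixel weight."""
    ch_w = channel_attention(feat)
    px_w = pixel_attention(feat)
    return [[[feat[c][i][j] * ch_w[c] * px_w[i][j]
              for j in range(len(feat[0][0]))]
             for i in range(len(feat[0]))]
            for c in range(len(feat))]

# Toy feature map with layout [channel][row][column]: 2 channels, 2x2 pixels.
feat = [
    [[0.0, 0.0], [0.0, 4.0]],  # channel 0: one bright pixel
    [[2.0, 2.0], [2.0, 2.0]],  # channel 1: uniformly lit
]
out = fuse(feat)
print(channel_attention(feat))
print(pixel_attention(feat))
```

The channel weights let the module favor informative channels as a whole, while the pixel weights respond to position, which is what makes the combination a natural fit for unevenly illuminated images.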

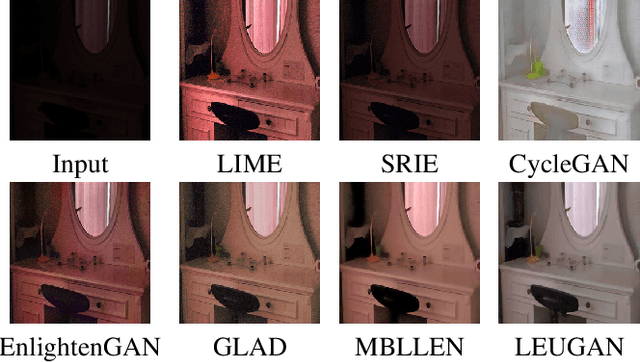

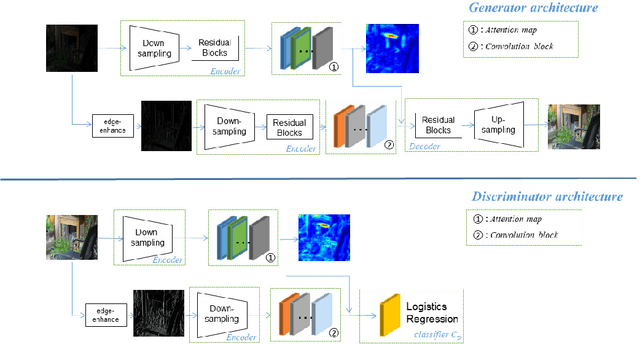

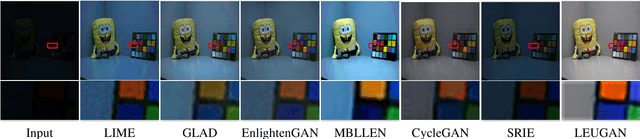

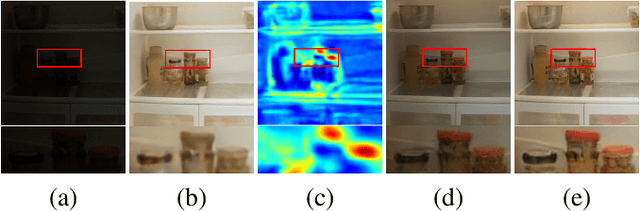

LEUGAN: Low-Light Image Enhancement by Unsupervised Generative Attentional Networks

Dec 24, 2020

Abstract: Restoring images from low-light data is a challenging problem. Most existing deep-network-based algorithms are designed to be trained with paired images. Due to the lack of real-world datasets, they usually generalize poorly in practice, losing image edge and color information. In this paper, we propose an unsupervised generation network with attention guidance to handle the low-light image enhancement task. Specifically, our network contains two parts: an edge auxiliary module that restores sharper edges and an attention guidance module that recovers more realistic colors. Moreover, we propose a novel loss function to make the edges of the generated images more visible. Experiments validate that our proposed algorithm performs favorably against state-of-the-art methods, especially for real-world images, in terms of image clarity and noise control.
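The abstract does not specify the form of the edge loss. As a generic illustration only, one common way to encourage visible edges is to penalize differences between the image gradients of a generated image and those of a reference image. The sketch below assumes grayscale images stored as lists of rows; the function names and the choice of reference image are hypothetical and not taken from the paper.

```python
def gradients(img):
    """Horizontal and vertical finite differences of a 2D image (list of rows)."""
    gx = [[row[j + 1] - row[j] for j in range(len(row) - 1)] for row in img]
    gy = [[img[i + 1][j] - img[i][j] for j in range(len(img[0]))]
          for i in range(len(img) - 1)]
    return gx, gy

def edge_loss(pred, reference):
    """Mean absolute difference between the gradients of two same-sized images."""
    total, count = 0.0, 0
    for g_pred, g_ref in zip(gradients(pred), gradients(reference)):
        for row_p, row_r in zip(g_pred, g_ref):
            for p, r in zip(row_p, row_r):
                total += abs(p - r)
                count += 1
    return total / count

# Toy 3x4 images: a sharp vertical edge versus a smeared version of it.
sharp = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
blurry = [[0.0, 0.33, 0.66, 1.0] for _ in range(3)]

print(edge_loss(sharp, sharp))
print(edge_loss(blurry, sharp))
```

A loss of this kind is zero when the edge structure matches exactly and grows as edges are smeared out, so minimizing it pushes a generator toward sharper output.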
