Xinning Gui

AutoML in The Wild: Obstacles, Workarounds, and Expectations

Feb 21, 2023

Abstract: Automated machine learning (AutoML) is envisioned to make ML techniques accessible to ordinary users. Recent work has investigated the role of humans in enhancing AutoML functionality throughout a standard ML workflow. However, it is also critical to understand how users adopt existing AutoML solutions in complex, real-world settings from a holistic perspective. To fill this gap, this study conducted semi-structured interviews of AutoML users (N = 19) focusing on understanding (1) the limitations of AutoML encountered by users in their real-world practices, (2) the strategies users adopt to cope with such limitations, and (3) how the limitations and workarounds impact their use of AutoML. Our findings reveal that users actively exercise user agency to overcome three major challenges arising from customizability, transparency, and privacy. Furthermore, users make cautious decisions about whether and how to apply AutoML on a case-by-case basis. Finally, we derive design implications for developing future AutoML solutions.

Mediating Community-AI Interaction through Situated Explanation: The Case of AI-Led Moderation

Aug 19, 2020

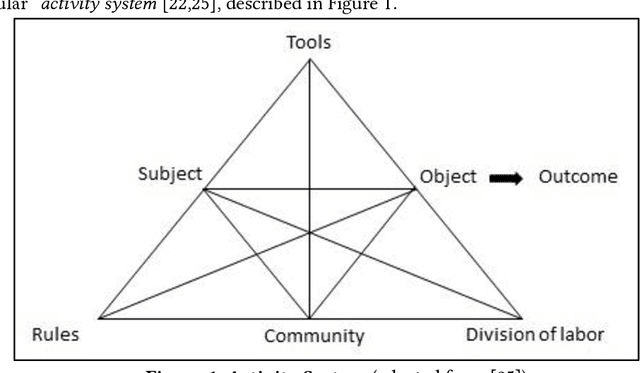

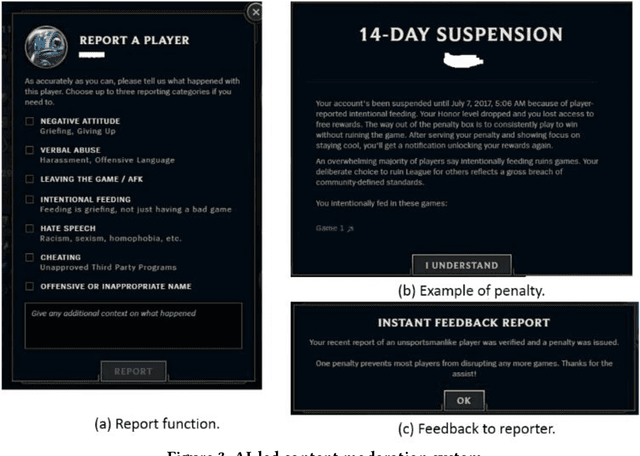

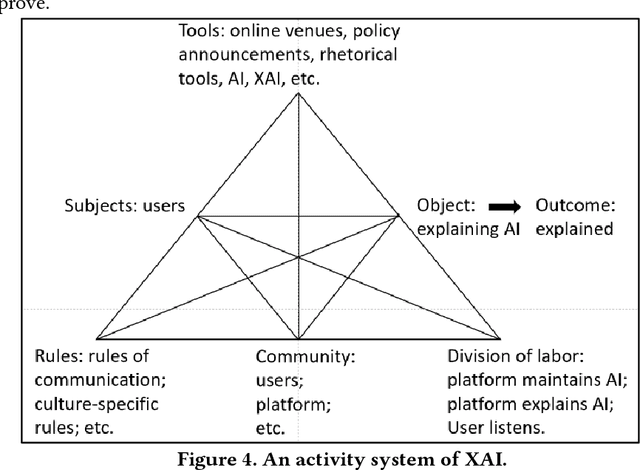

Abstract: Artificial intelligence (AI) has become prevalent in our everyday technologies and impacts both individuals and communities. The explainable AI (XAI) scholarship has explored the philosophical nature of explanation and technical explanations, which are usually driven by experts in lab settings and can be challenging for laypersons to understand. In addition, existing XAI research tends to focus on the individual level. Little is known about how people understand and explain AI-led decisions in the community context. Drawing from XAI and activity theory, a foundational HCI theory, we theorize how explanation is situated in a community's shared values, norms, knowledge, and practices, and how situated explanation mediates community-AI interaction. We then present a case study of AI-led moderation, where community members collectively develop explanations of AI-led decisions, most of which are automated punishments. Lastly, we discuss the implications of this framework at the intersection of CSCW, HCI, and XAI.
