Xie Han

Physics-Driven Local-Whole Elastic Deformation Modeling for Point Cloud Representation Learning

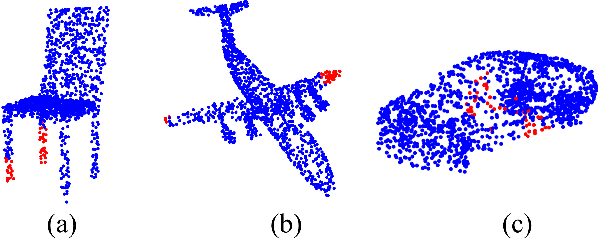

May 20, 2025

Abstract: Existing point cloud representation learning methods tend to learn the geometric distribution of objects through data-driven approaches, emphasizing structural features while overlooking the relationship between local information and the whole structure. Local features reflect the fine-grained variations of an object, while the whole structure is determined by the interaction and combination of these local features, which collectively define the object's shape. In the real world, objects undergo elastic deformation under external forces, and this deformation gradually affects the whole structure as forces propagate from local regions, thereby altering the object's geometric properties. Inspired by this, we propose a physics-driven self-supervised learning method for point cloud representation, which captures the relationship between parts and the whole by constructing a local-whole force propagation mechanism. Specifically, we employ a dual-task encoder-decoder framework that integrates the geometric modeling capability of implicit fields with physics-driven elastic deformation. The encoder extracts features from the point cloud and its tetrahedral mesh representation, capturing both geometric and physical properties. These features are then fed into two decoders: one learns the whole geometric shape of the point cloud through an implicit field, while the other predicts local deformations using two specifically designed physics information loss functions, modeling the deformation relationship between local and whole shapes. Experimental results show that our method outperforms existing approaches in object classification, few-shot learning, and segmentation, demonstrating its effectiveness.

PSNet: Fast Data Structuring for Hierarchical Deep Learning on Point Cloud

May 31, 2022

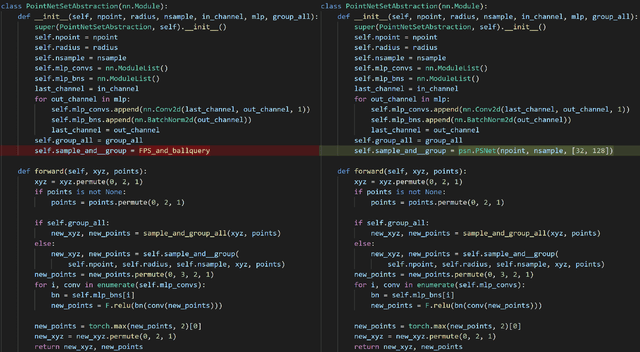

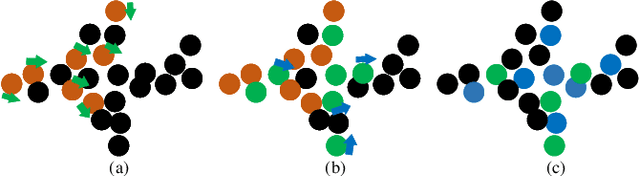

Abstract: To retain more feature information from local areas of a point cloud, local grouping and subsampling are necessary data structuring steps in most hierarchical deep learning models. Because the points in a point cloud are unordered, grouping and subsampling them can incur a significant time cost, which results in poor scalability. This paper proposes a fast data structuring method called PSNet (Point Structuring Net). PSNet transforms the spatial features of the points and matches them to the features of local areas in a point cloud. PSNet achieves grouping and sampling at the same time, whereas existing methods perform sampling and grouping in two separate steps (such as FPS followed by kNN). PSNet performs feature transformation pointwise, whereas existing methods use the spatial relationships among the points as the reference for grouping. Thanks to these features, PSNet has two important advantages: 1) the grouping and sampling results obtained by PSNet are stable and permutation invariant; and 2) PSNet can be easily parallelized. PSNet can replace the data structuring methods in mainstream point cloud deep learning models in a plug-and-play manner. Extensive experiments show that PSNet significantly improves training and inference speed while maintaining model accuracy.
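For context, the two-step baseline that the abstract contrasts against (farthest point sampling followed by k-nearest-neighbor grouping) can be sketched as below. This is a minimal NumPy illustration of that conventional pipeline, not of PSNet itself; the function names `farthest_point_sampling` and `knn_group`, the `start` parameter, and the random test cloud are assumptions made for the example.

```python
import numpy as np

def farthest_point_sampling(points, n_samples, start=0):
    """Greedily pick n_samples indices so the chosen points are mutually far apart."""
    chosen = np.empty(n_samples, dtype=np.int64)
    chosen[0] = start
    # Squared distance from every point to its nearest already-chosen centroid.
    min_d2 = np.sum((points - points[start]) ** 2, axis=1)
    for i in range(1, n_samples):
        # The next centroid is the point farthest from all chosen ones.
        chosen[i] = int(np.argmax(min_d2))
        d2 = np.sum((points - points[chosen[i]]) ** 2, axis=1)
        min_d2 = np.minimum(min_d2, d2)
    return chosen

def knn_group(points, centroid_idx, k):
    """Return an (n_centroids, k) array holding each centroid's k nearest point indices."""
    centroids = points[centroid_idx]
    # Pairwise squared distances, shape (n_centroids, n_points).
    d2 = np.sum((centroids[:, None, :] - points[None, :, :]) ** 2, axis=2)
    return np.argsort(d2, axis=1)[:, :k]

# Hypothetical input: 256 random 3D points.
rng = np.random.default_rng(0)
cloud = rng.random((256, 3))

centroid_idx = farthest_point_sampling(cloud, 16)  # step 1: subsample
groups = knn_group(cloud, centroid_idx, 8)         # step 2: group around each sample
```

In this sketch the sampling loop is sequential, since each new centroid depends on all previously chosen ones, and the selected set depends on the starting index. Both properties relate to the scalability and stability concerns the abstract raises about two-step structuring.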
