Wesam A. Sakla

Interpretable and Steerable Concept Bottleneck Sparse Autoencoders

Dec 11, 2025

Abstract:Sparse autoencoders (SAEs) promise a unified approach for mechanistic interpretability, concept discovery, and model steering in LLMs and LVLMs. However, realizing this potential requires that the learned features be both interpretable and steerable. To that end, we introduce two new computationally inexpensive interpretability and steerability metrics and conduct a systematic analysis on LVLMs. Our analysis uncovers two observations; (i) a majority of SAE neurons exhibit either low interpretability or low steerability or both, rendering them ineffective for downstream use; and (ii) due to the unsupervised nature of SAEs, user-desired concepts are often absent in the learned dictionary, thus limiting their practical utility. To address these limitations, we propose Concept Bottleneck Sparse Autoencoders (CB-SAE) - a novel post-hoc framework that prunes low-utility neurons and augments the latent space with a lightweight concept bottleneck aligned to a user-defined concept set. The resulting CB-SAE improves interpretability by +32.1% and steerability by +14.5% across LVLMs and image generation tasks. We will make our code and model weights available.

A Large Contextual Dataset for Classification, Detection and Counting of Cars with Deep Learning

Sep 14, 2016

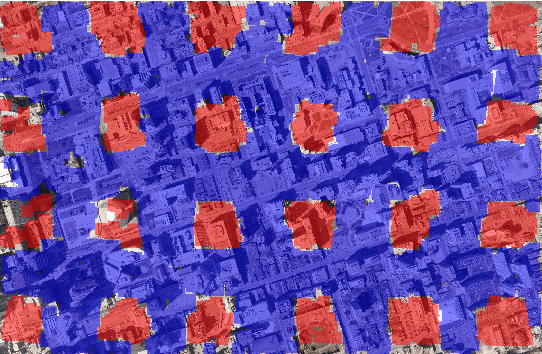

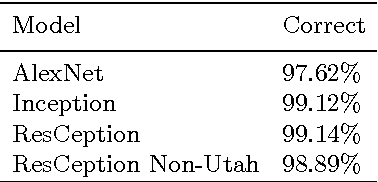

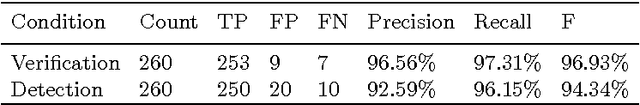

Abstract:We have created a large diverse set of cars from overhead images, which are useful for training a deep learner to binary classify, detect and count them. The dataset and all related material will be made publically available. The set contains contextual matter to aid in identification of difficult targets. We demonstrate classification and detection on this dataset using a neural network we call ResCeption. This network combines residual learning with Inception-style layers and is used to count cars in one look. This is a new way to count objects rather than by localization or density estimation. It is fairly accurate, fast and easy to implement. Additionally, the counting method is not car or scene specific. It would be easy to train this method to count other kinds of objects and counting over new scenes requires no extra set up or assumptions about object locations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge