Vitus J. Leung

UniCoMTE: A Universal Counterfactual Framework for Explaining Time-Series Classifiers on ECG Data

Dec 22, 2025

Abstract: Machine learning models, particularly deep neural networks, have demonstrated strong performance in classifying complex time series data. However, their black-box nature limits trust and adoption, especially in high-stakes domains such as healthcare. To address this challenge, we introduce UniCoMTE, a model-agnostic framework for generating counterfactual explanations for multivariate time series classifiers. The framework identifies the temporal features that most heavily influence a model's prediction by modifying the input sample and assessing the impact of each modification on that prediction. UniCoMTE is compatible with a wide range of model architectures and operates directly on raw time series inputs. In this study, we evaluate UniCoMTE's explanations on a time series ECG classifier. We quantify explanation quality by comparing the comprehensibility of our explanations to that of established techniques (LIME and SHAP) and by assessing their generalizability to similar samples. Furthermore, clinical utility is assessed through a questionnaire completed by medical experts who review counterfactual explanations presented alongside original ECG samples. Results show that our approach produces concise, stable, and human-aligned explanations that outperform existing methods in both clarity and applicability. By linking model predictions to meaningful signal patterns, the framework advances the interpretability of deep learning models for real-world time series applications.

Counterfactual Explanations for Machine Learning on Multivariate Time Series Data

Aug 25, 2020

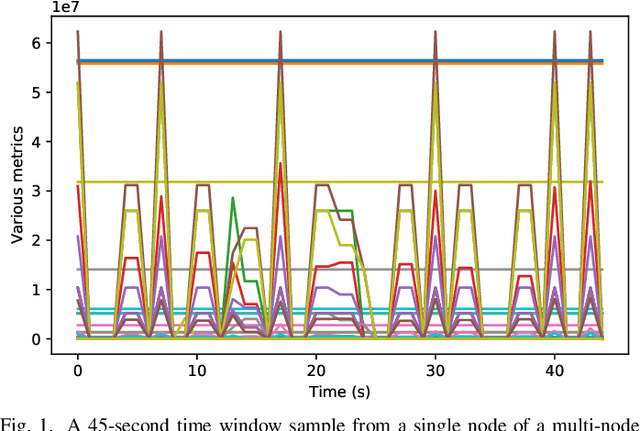

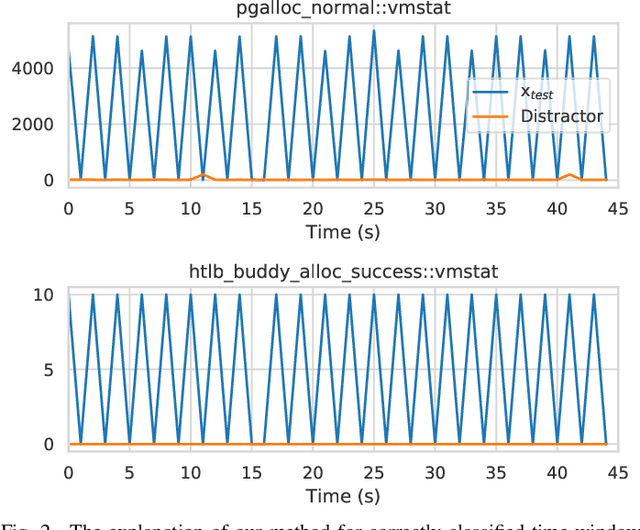

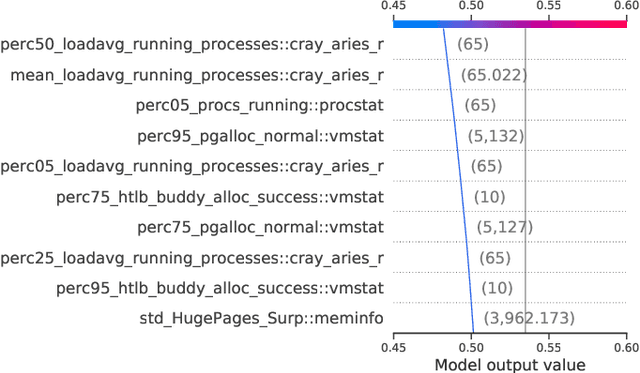

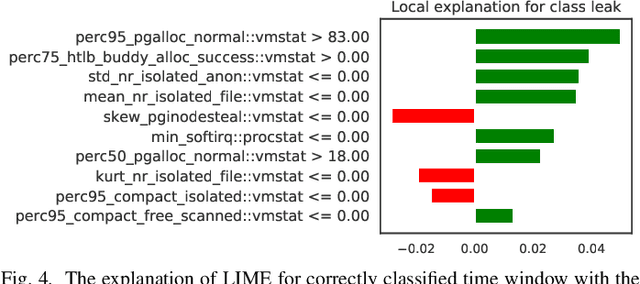

Abstract: Applying machine learning (ML) to multivariate time series data is growing in popularity in many application domains, including computer system management. For example, recent high performance computing (HPC) research proposes a variety of ML frameworks that use system telemetry data in the form of multivariate time series to detect performance variations, perform intelligent scheduling or node allocation, and improve system security. Common barriers to the adoption of these ML frameworks include the lack of user trust and the difficulty of debugging. These barriers need to be overcome to enable the widespread adoption of ML frameworks in production systems. To address this challenge, this paper proposes a novel explainability technique for providing counterfactual explanations for supervised ML frameworks that use multivariate time series data. The proposed method outperforms state-of-the-art explainability methods on several different ML frameworks and data sets in metrics such as faithfulness and robustness. The paper also demonstrates how the proposed method can be used to debug ML frameworks and gain a better understanding of HPC system telemetry data.
