Vinicius Prado da Fonseca

A Comparative Study of EMG- and IMU-based Gesture Recognition at the Wrist and Forearm

Dec 08, 2025

Abstract: Gestures are an integral part of our daily interactions with the environment. Hand gesture recognition (HGR) is the process of interpreting human intent through various input modalities, such as visual data (images and videos) and bio-signals. Bio-signals are widely used in HGR due to their ability to be captured non-invasively via sensors placed on the arm. Among these, surface electromyography (sEMG), which measures the electrical activity of muscles, is the most extensively studied modality. However, less-explored alternatives such as inertial measurement units (IMUs) can provide complementary information on subtle muscle movements, which makes them valuable for gesture recognition. In this study, we investigate the potential of using IMU signals from different muscle groups to capture user intent. Our results demonstrate that IMU signals contain sufficient information to serve as the sole input sensor for static gesture recognition. Moreover, we compare different muscle groups and check the quality of pattern recognition on individual muscle groups. We further found that tendon-induced micro-movement captured by IMUs is a major contributor to static gesture recognition. We believe that leveraging muscle micro-movement information can enhance the usability of prosthetic arms for amputees. This approach also offers new possibilities for hand gesture recognition in fields such as robotics, teleoperation, sign language interpretation, and beyond.

Towards Biosignals-Free Autonomous Prosthetic Hand Control via Imitation Learning

Jun 10, 2025

Abstract: Limb loss affects millions globally, impairing physical function and reducing quality of life. Most traditional surface electromyographic (sEMG) and semi-autonomous methods require users to generate myoelectric signals for each control action, which is physically and mentally taxing. This study aims to develop a fully autonomous control system that enables a prosthetic hand to automatically grasp and release objects of various shapes using only a camera attached to the wrist. When the hand is placed near an object, the system automatically executes a grasp with a proper grip force in response to the hand's movements and the environment. To release a grasped object, the user simply places it close to the table and the system automatically opens the hand. Such a system would provide individuals with limb loss with a very easy-to-use prosthetic control interface and greatly reduce the mental effort of using it. To achieve this goal, we developed a teleoperation system to collect human demonstration data for training the prosthetic hand control model using imitation learning, which mimics the prosthetic hand actions demonstrated by a human. By training the model on data from only a few objects and a single participant, we show that the imitation learning algorithm can achieve high success rates, generalizing to more individuals and to unseen objects of varying weights. The demonstrations are available at https://sites.google.com/view/autonomous-prosthetic-hand

Leveraging Compliant Tactile Perception for Haptic Blind Surface Reconstruction

Feb 28, 2024

Abstract: Non-flat surfaces pose difficulties for robots operating in unstructured environments. Reconstructions of uneven surfaces may only be partially possible due to non-compliant end-effectors and limitations on vision systems such as transparency, reflections, and occlusions. This study achieves blind surface reconstruction by harnessing the robotic manipulator's kinematic data and a compliant tactile sensing module, which incorporates inertial, magnetic, and pressure sensors. The module's flexibility enables us to estimate contact positions and surface normals by analyzing its deformation during interactions with unknown objects. While previous works collect only positional information, we include the local normals in a geometrical approach to estimate curvatures between adjacent contact points. These parameters then guide a spline-based patch generation, which allows us to recreate larger surfaces without an increase in complexity while reducing the time-consuming step of probing the surface. Experimental validation demonstrates that this approach outperforms an off-the-shelf vision system in estimation accuracy. Moreover, this compliant haptic method works effectively even when the manipulator's approach angle is not aligned with the surface normals, which is ideal for unknown non-flat surfaces.

Denoising Opponents Position in Partial Observation Environment

Oct 23, 2023

Abstract: The RoboCup competitions comprise several leagues, and the Soccer Simulation 2D League is one of the major ones. A Soccer Simulation 2D (SS2D) match involves two teams, each with 11 players and a coach, competing against each other. The players can only communicate with the Soccer Simulation Server during the game. Several code bases have been released publicly to simplify team development, so researchers can focus on decision-making and implementing machine learning methods. SS2D actions and behaviors are only partially accurate due to challenges such as noise and partial observation. Therefore, one strategy is to implement alternative denoising methods to tackle observation inaccuracy. Our idea is to use machine learning methods to predict opponent positions during the finite number of cycles in which they have not been seen, so that actions such as passing can be made more accurately. We explain our position prediction idea, powered by Long Short-Term Memory (LSTM) models and Deep Neural Networks (DNN). The results show that the LSTM and DNN predict the opponents' positions more accurately than standard algorithms such as the last-seen method.
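
To make the last-seen baseline named in this abstract concrete, here is a minimal Python sketch under stated assumptions. It is not the authors' code: the function names, the use of `None` for cycles in which the opponent is not observed, and the mean Euclidean error metric are all hypothetical choices for illustration.

```python
import math
from typing import List, Optional, Tuple

Position = Tuple[float, float]


def last_seen_estimates(
    observations: List[Optional[Position]],
) -> List[Optional[Position]]:
    """For each cycle, return the most recently observed position.

    `None` in the input marks a cycle where the opponent was not seen
    (an assumed encoding). The estimate stays `None` until the first sighting.
    """
    estimates: List[Optional[Position]] = []
    last: Optional[Position] = None
    for obs in observations:
        if obs is not None:
            last = obs  # refresh the remembered position on every sighting
        estimates.append(last)
    return estimates


def mean_error(
    estimates: List[Optional[Position]], truth: List[Position]
) -> float:
    """Mean Euclidean distance between estimates and true positions.

    Cycles without any estimate yet are skipped.
    """
    errors = [math.dist(e, t) for e, t in zip(estimates, truth) if e is not None]
    return sum(errors) / len(errors) if errors else float("nan")


# Hypothetical example: the opponent is unseen for two cycles while moving.
observations = [(0.0, 0.0), None, None, (3.0, 4.0)]
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 4.0)]
estimates = last_seen_estimates(observations)
baseline_error = mean_error(estimates, truth)
```

The baseline's error grows with every unseen cycle because the estimate is frozen at the last sighting, which is the gap a learned predictor would aim to close.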

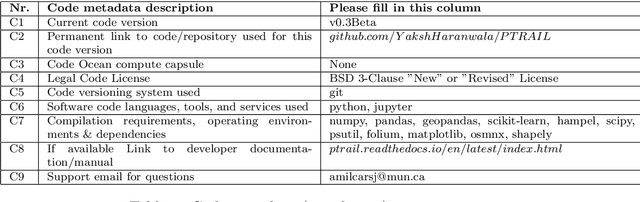

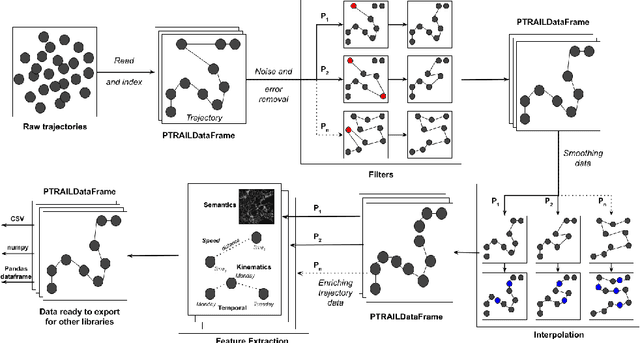

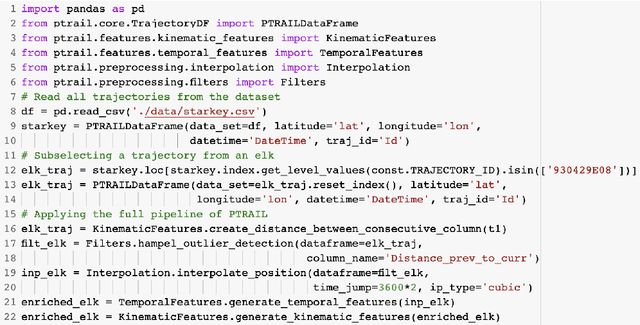

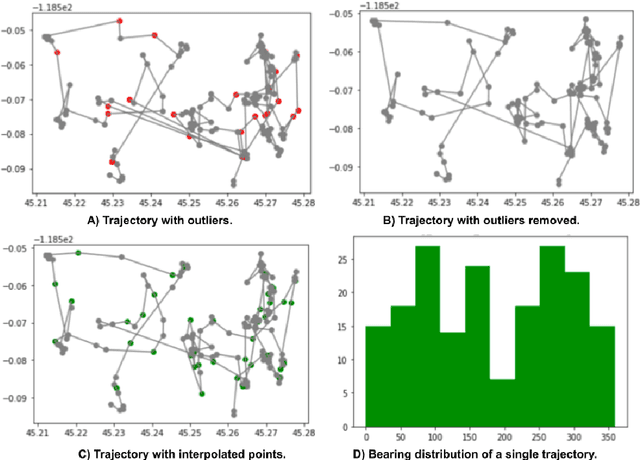

PTRAIL -- A python package for parallel trajectory data preprocessing

Aug 26, 2021

Abstract: Trajectory data represent a trace of an object that changes its position in space over time. This kind of data is complex to handle and analyze, since it is generally produced in huge quantities and is often prone to errors generated by the geolocation device, human mishandling, or area coverage limitation. Therefore, there is a need for software specifically tailored to preprocess trajectory data. In this work we propose PTRAIL, a Python package offering several trajectory preprocessing steps, including filtering, feature extraction, and interpolation. PTRAIL uses parallel computation and vectorization, making it suitable for large datasets and fast compared to other Python libraries.
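
As an illustration of what a vectorized feature-extraction step can look like, the sketch below computes the great-circle distance between consecutive trajectory points with NumPy. It does not use or reproduce PTRAIL's API: the function name, the choice of the haversine formula, and the Earth-radius constant are assumptions made for this example.

```python
import numpy as np

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres (assumed constant)


def consecutive_haversine_m(lat_deg, lon_deg):
    """Distance in metres between each pair of consecutive points.

    Inputs are sequences of latitudes and longitudes in degrees. The result
    has one element fewer than the input. All arithmetic runs on whole
    arrays, with no Python-level loop over points.
    """
    lat = np.radians(np.asarray(lat_deg, dtype=float))
    lon = np.radians(np.asarray(lon_deg, dtype=float))
    dlat = np.diff(lat)
    dlon = np.diff(lon)
    # Haversine formula applied to every consecutive pair at once.
    a = np.sin(dlat / 2.0) ** 2 + np.cos(lat[:-1]) * np.cos(lat[1:]) * np.sin(dlon / 2.0) ** 2
    return 2.0 * EARTH_RADIUS_M * np.arcsin(np.sqrt(a))


# Hypothetical trajectory: one degree of longitude along the equator, then a pause.
distances = consecutive_haversine_m([0.0, 0.0, 0.0], [0.0, 1.0, 1.0])
```

Operating on whole arrays in this way is the general idea behind vectorization; a per-trajectory function like this one could also be distributed across processes for many trajectories.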
