Utsav Rai

SurgPose: Generalisable Surgical Instrument Pose Estimation using Zero-Shot Learning and Stereo Vision

May 16, 2025

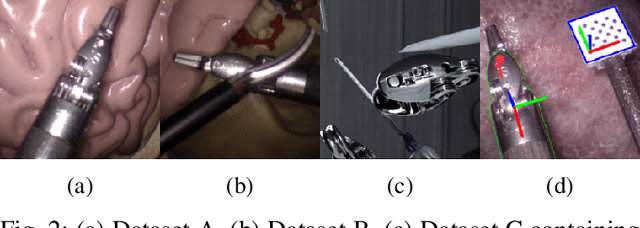

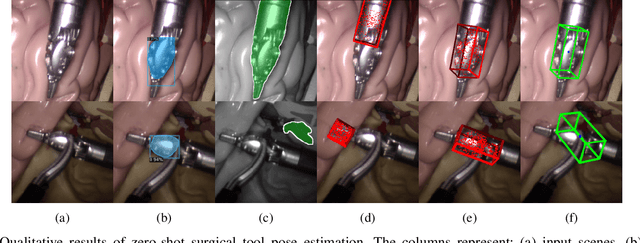

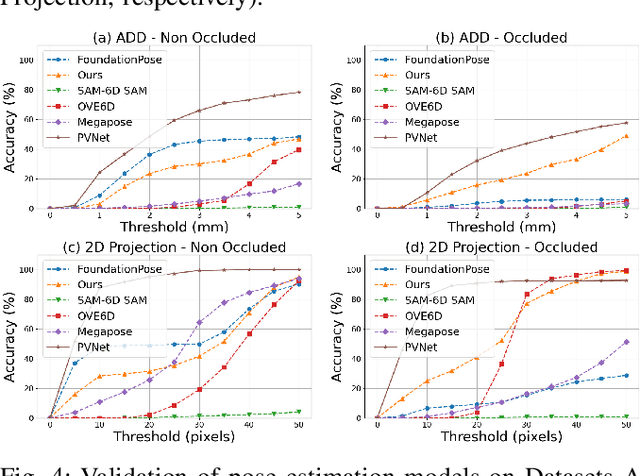

Abstract: Accurate pose estimation of surgical tools in Robot-assisted Minimally Invasive Surgery (RMIS) is essential for surgical navigation and robot control. Traditional marker-based methods are accurate, but they struggle with occlusions, reflections, and tool-specific designs. Supervised learning methods, in turn, require extensive training on annotated datasets, which limits their adaptability to new tools. Despite their success in other domains, zero-shot pose estimation models remain unexplored for surgical instruments in RMIS, leaving a gap in generalising to unseen surgical tools. This paper presents a novel 6 Degrees of Freedom (DoF) pose estimation pipeline for surgical instruments, leveraging state-of-the-art zero-shot RGB-D models such as FoundationPose and SAM-6D. We extend these models with vision-based depth estimation using the RAFT-Stereo method, for robust depth estimation in reflective and textureless environments. Additionally, we enhance SAM-6D by replacing its instance segmentation module, the Segment Anything Model (SAM), with a fine-tuned Mask R-CNN, significantly boosting segmentation accuracy in occluded and complex conditions. Extensive validation reveals that our enhanced SAM-6D surpasses FoundationPose in zero-shot pose estimation of unseen surgical instruments, setting a new benchmark for zero-shot RGB-D pose estimation in RMIS. This work enhances the generalisability of pose estimation for unseen objects and pioneers the application of RGB-D zero-shot methods in RMIS.
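The abstract does not say how the stereo output is handed to the RGB-D pose models. As a rough illustration only: stereo matchers usually produce a disparity map, and for a rectified image pair the standard pinhole relation Z = f·B/d turns it into metric depth. The sketch below assumes that relation. The function name, parameter names, and example values are hypothetical and are not taken from the paper.

```python
import math


def disparity_to_depth(disparity_rows, focal_px, baseline_m, min_disparity=1e-6):
    """Convert a disparity map (pixels) to depth (metres) via Z = f * B / d.

    Assumes a rectified stereo pair. `focal_px` is the focal length in pixels
    and `baseline_m` is the distance between the two camera centres in metres.
    Pixels whose disparity is not above `min_disparity` have no valid depth
    and are returned as NaN.
    """
    return [
        [focal_px * baseline_m / d if d > min_disparity else math.nan for d in row]
        for row in disparity_rows
    ]


# Hypothetical values: 1000 px focal length and a 5 mm baseline.
depth = disparity_to_depth([[10.0, 0.0]], focal_px=1000.0, baseline_m=0.005)
```

Because depth is inversely proportional to disparity, small disparity errors on distant or textureless surfaces cause large depth errors. This is one reason the quality of the stereo matcher matters for the downstream pose estimate.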

An Efficient Method for Accurate Pose Estimation and Error Correction of Cuboidal Objects

May 08, 2025

Abstract: The system outlined in this paper addresses a use case that requires high-precision autonomous picking of cuboidal objects from an organized or unorganized pile. This paper presents an efficient method for precise pose estimation of cuboid-shaped objects, which aims to reduce errors in the target pose in a time-efficient manner. Typical pose estimation methods, such as global point cloud registration, are prone to minor pose errors, and local registration algorithms are generally used to improve pose accuracy. However, due to the execution time overhead and the uncertainty in the error of the final pose, an alternative linear-time approach is proposed for pose error estimation and correction. This paper presents an overview of the solution, followed by a detailed description of the individual modules of the proposed algorithm.
