Toshihiko Yamasaki

Re-identification = Retrieval + Verification: Back to Essence and Forward with a New Metric

Nov 23, 2020

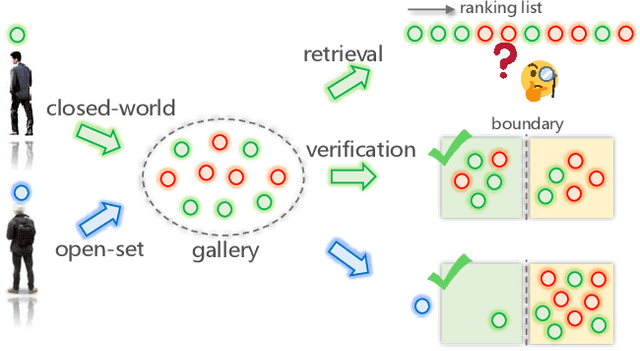

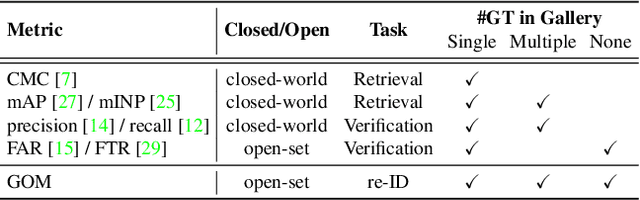

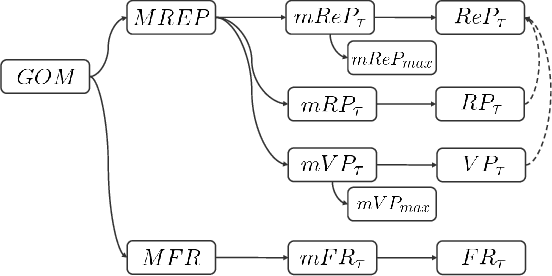

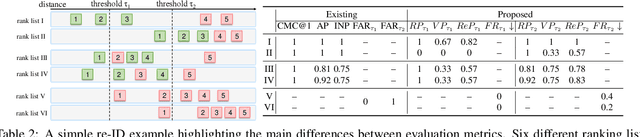

Abstract: Re-identification (re-ID) is currently investigated as a closed-world image retrieval task and evaluated by retrieval-based metrics. The algorithms return ranking lists to users but cannot tell which images are the true target. In essence, current re-ID overemphasizes the importance of retrieval but underemphasizes that of verification, i.e., all returned images are considered as the target. On the other hand, re-ID should also include the scenario in which the query identity does not appear in the gallery. To this end, we go back to the essence of re-ID, i.e., a combination of retrieval and verification in an open-set setting, and put forward a new metric, namely, the Genuine Open-set re-ID Metric (GOM). GOM explicitly balances the effects of retrieval and verification in a single unified metric. It can also be decomposed into a family of sub-metrics, enabling a clear analysis of re-ID performance. We evaluate the effectiveness of GOM on re-ID benchmarks, showing its ability to capture important aspects of re-ID performance that have not been taken into account by established metrics so far. Furthermore, we show that GOM scores align closely with human visual evaluation of re-ID performance. Related code is available at https://github.com/YuanXinCherry/Person-reID-Evaluation
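The distinction between retrieval and verification drawn in the abstract can be illustrated with a minimal sketch. This is not the GOM metric and not the authors' code: the function name `retrieve_and_verify`, the use of cosine similarity, and the fixed `threshold` are all assumptions chosen for illustration. Retrieval produces a ranking of the whole gallery; verification keeps only the entries judged to be the target, and an empty result corresponds to the open-set case where the query identity is absent from the gallery.

```python
import numpy as np

def retrieve_and_verify(query, gallery, threshold):
    """Rank gallery features by cosine similarity, then verify by thresholding.

    Hypothetical helper for illustration only; cosine similarity and a fixed
    threshold are assumptions, not the method described in the abstract.
    """
    query = np.asarray(query, dtype=np.float64)
    gallery = np.asarray(gallery, dtype=np.float64)
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sims = g @ q
    # Retrieval: every gallery item gets a rank, most similar first.
    ranking = np.argsort(-sims)
    # Verification: keep only items similar enough to count as the target.
    accepted = [int(i) for i in ranking if sims[i] >= threshold]
    return ranking, accepted

ranking, accepted = retrieve_and_verify([1.0, 0.0],
                                        [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                                        threshold=0.9)
```

A purely retrieval-based evaluation would look only at `ranking`; an open-set evaluation also has to score whether `accepted` is correct, including when it should be empty.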

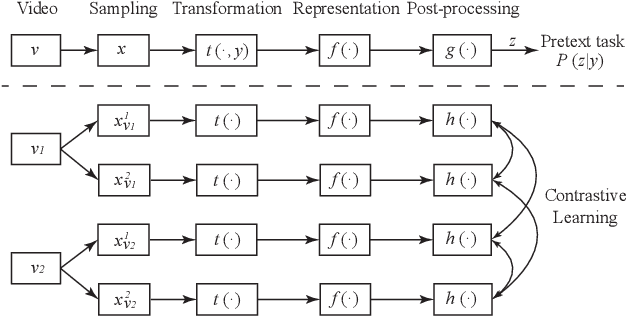

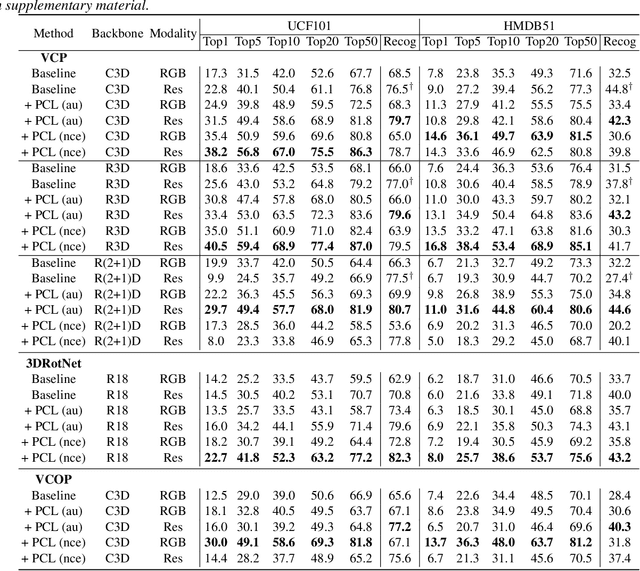

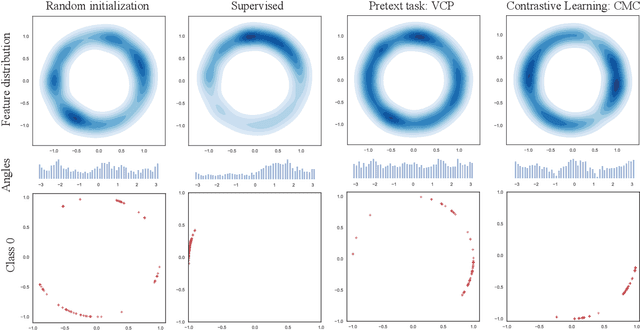

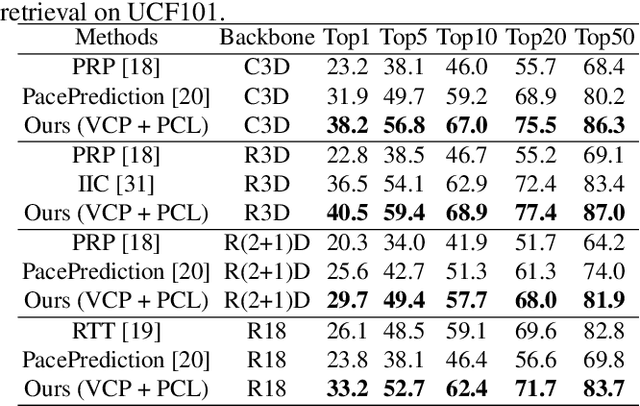

Self-Supervised Video Representation Using Pretext-Contrastive Learning

Oct 29, 2020

Abstract: Pretext tasks and contrastive learning have been successful in self-supervised learning for video retrieval and recognition. In this study, we analyze their optimization targets and utilize the hyper-sphere feature space to explore the connections between them, indicating the compatibility and consistency of these two different learning methods. Based on the analysis, we propose a self-supervised training method, referred to as Pretext-Contrastive Learning (PCL), to learn video representations. Extensive experiments based on different combinations of pretext task baselines and contrastive losses confirm the strong agreement with their self-supervised learning targets, demonstrating the effectiveness and the generality of PCL. The combination of pretext tasks and contrastive losses showed significant improvements in both video retrieval and recognition over the corresponding baselines. We can also outperform current state-of-the-art methods in the same manner. Further, our PCL is flexible and can be applied to almost all existing pretext task methods.

Self-Play Reinforcement Learning for Fast Image Retargeting

Oct 02, 2020

Abstract: In this study, we address image retargeting, which is a task that adjusts input images to arbitrary sizes. In one of the best-performing methods, called MULTIOP, multiple retargeting operators were combined and retargeted images at each stage were generated to find the optimal sequence of operators that minimized the distance between original and retargeted images. The limitation of this method is its tremendous processing time, which severely hinders its practical use. Therefore, the purpose of this study is to find the optimal combination of operators within a reasonable processing time; we propose a method of predicting the optimal operator for each step using a reinforcement learning agent. The technical contributions of this study are as follows. First, we propose a reward based on self-play, which is insensitive to the large variance in the content-dependent distance measured in MULTIOP. Second, we propose to dynamically change the loss weight for each action to prevent the algorithm from falling into a local optimum and from choosing only the most frequently used operator in its training. Our experiments showed that we achieved multi-operator image retargeting with three orders of magnitude less processing time and the same quality as the original multi-operator-based method, which was the best-performing algorithm in retargeting tasks.

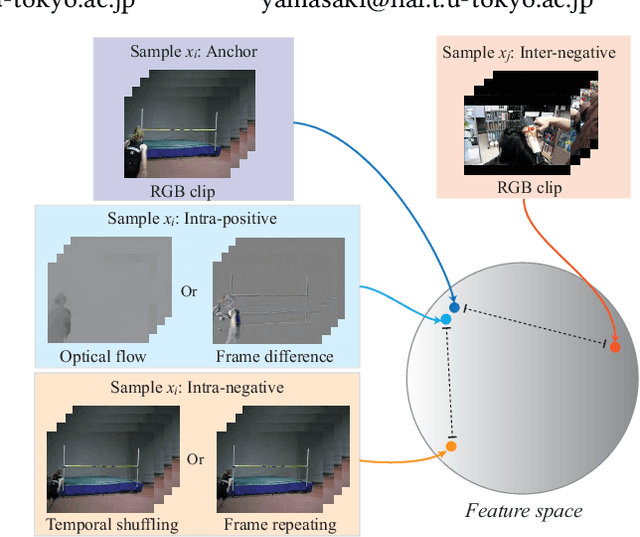

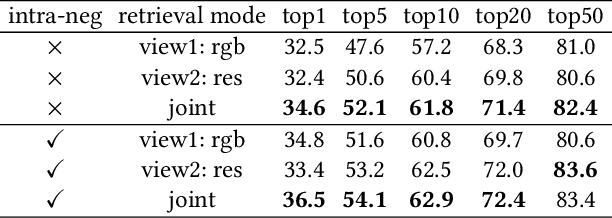

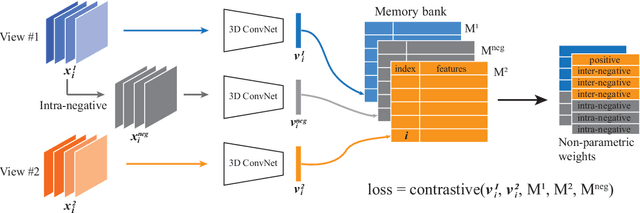

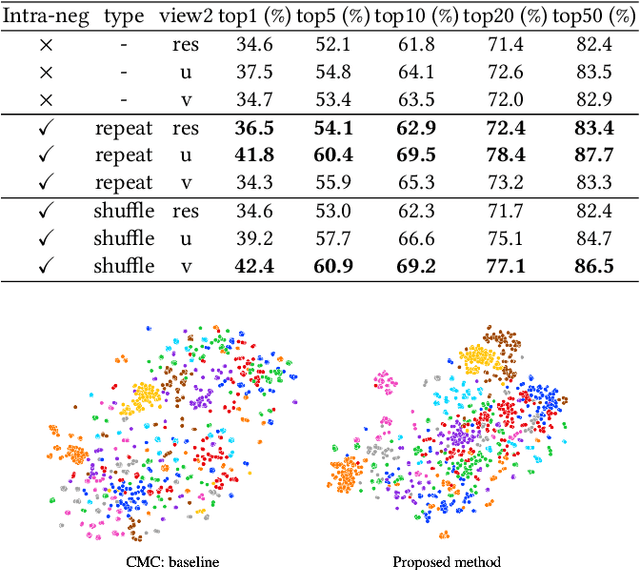

Self-supervised Video Representation Learning Using Inter-intra Contrastive Framework

Aug 12, 2020

Abstract: We propose a self-supervised method to learn feature representations from videos. A standard approach in traditional self-supervised methods uses positive-negative data pairs to train with a contrastive learning strategy. In such a case, different modalities of the same video are treated as positives and video clips from a different video are treated as negatives. Because spatio-temporal information is important for video representation, we extend the negative samples by introducing intra-negative samples, which are transformed from the same anchor video by breaking temporal relations in video clips. With the proposed Inter-Intra Contrastive (IIC) framework, we can train spatio-temporal convolutional networks to learn video representations. There are many flexible options in our IIC framework, and we conduct experiments using several different configurations. Evaluations are conducted on video retrieval and video recognition tasks using the learned video representation. Our proposed IIC outperforms current state-of-the-art results by a large margin, with improvements of 16.7 and 9.5 percentage points in top-1 accuracy on the UCF101 and HMDB51 datasets for video retrieval, respectively. For video recognition, improvements can also be obtained on these two benchmark datasets. Code is available at https://github.com/BestJuly/Inter-intra-video-contrastive-learning.
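As a rough illustration of an intra-negative sample, the sketch below builds one from an anchor clip by breaking its temporal order. The abstract does not say which transformations are used, so the two modes here (`"shuffle"` and `"repeat"`) and the helper name `intra_negative` are assumptions, not the authors' implementation.

```python
import numpy as np

def intra_negative(clip, mode="shuffle", rng=None):
    """Return a clip with the same frames but broken temporal relations.

    clip: array of shape (T, H, W, C). Both modes are illustrative assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    clip = np.asarray(clip)
    t = clip.shape[0]
    if mode == "shuffle":
        perm = rng.permutation(t)
        # Re-draw if the permutation happens to keep the original order.
        while t > 1 and np.array_equal(perm, np.arange(t)):
            perm = rng.permutation(t)
        return clip[perm]
    if mode == "repeat":
        # Pick one frame and repeat it, removing all motion.
        idx = int(rng.integers(t))
        return np.repeat(clip[idx:idx + 1], t, axis=0)
    raise ValueError(f"unknown mode: {mode}")
```

The result keeps the appearance of the anchor video while discarding its temporal structure, which is what makes it usable as a negative drawn from the same video.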

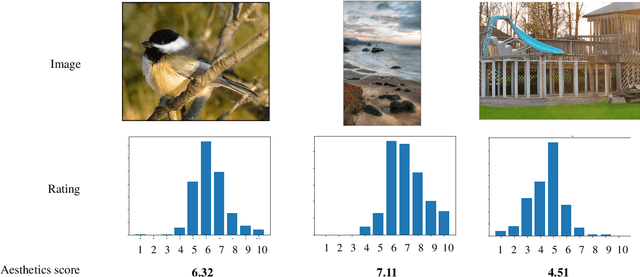

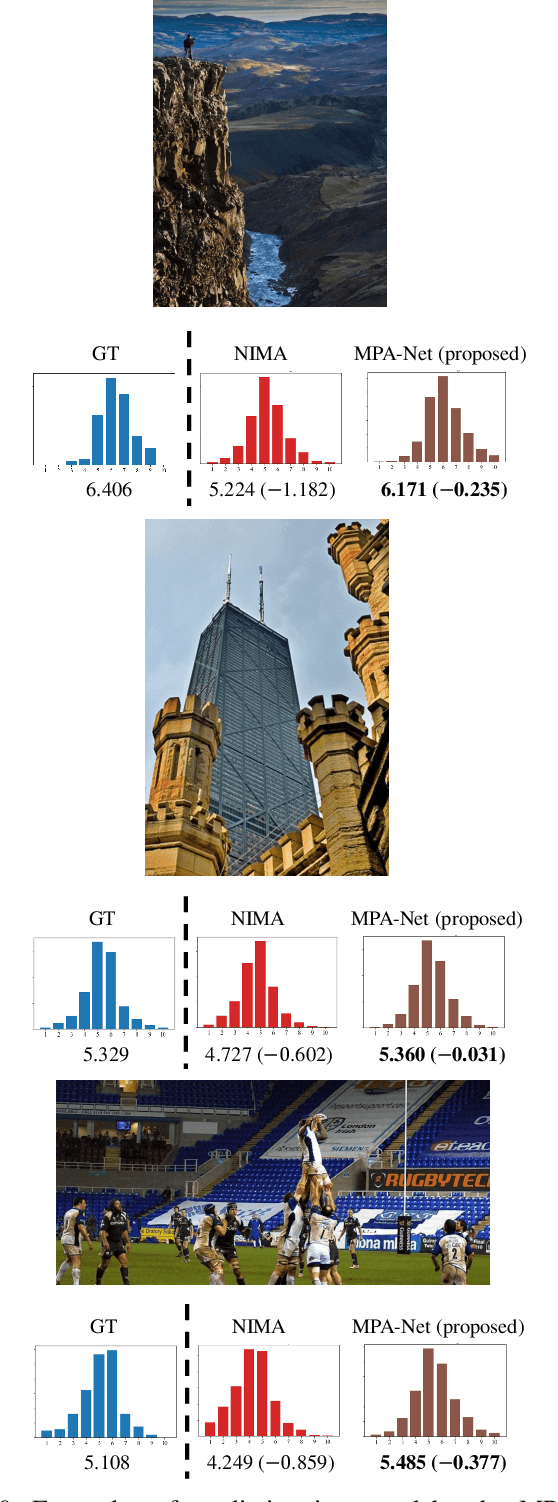

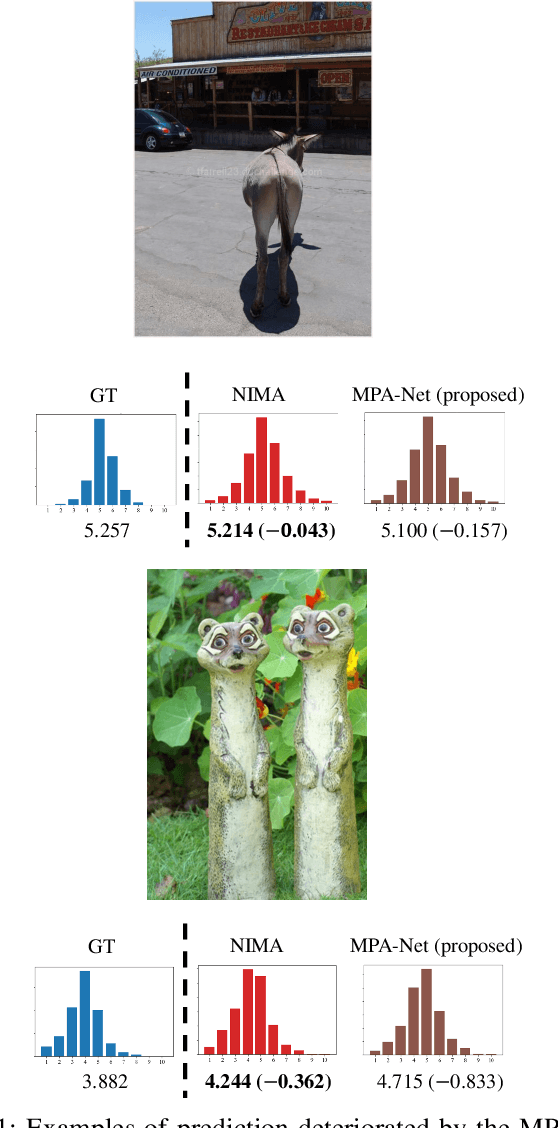

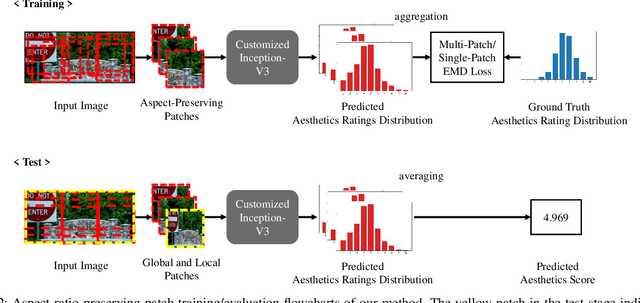

Image Aesthetics Prediction Using Multiple Patches Preserving the Original Aspect Ratio of Contents

Jul 05, 2020

Abstract: The spread of social networking services has created an increasing demand for selecting, editing, and generating impressive images. This trend increases the importance of evaluating image aesthetics as a complementary function of automatic image processing. We propose a multi-patch method, named MPA-Net (Multi-Patch Aggregation Network), to predict image aesthetics scores while maintaining the original aspect ratios of contents in the images. Through an experiment involving the large-scale AVA dataset, which contains 250,000 images, we show that the equal-interval multi-patch selection approach is significantly more effective for aesthetics score prediction than the single-patch prediction and random patch selection approaches. For this dataset, MPA-Net outperforms the neural image assessment algorithm, which was regarded as a baseline method. In particular, MPA-Net yields a 0.073 (11.5%) higher linear correlation coefficient (LCC) of aesthetics scores and a 0.088 (14.4%) higher Spearman's rank correlation coefficient (SRCC). MPA-Net also reduces the mean square error (MSE) by 0.0115 (4.18%) and achieves results for the LCC and SRCC that are comparable to those of the state-of-the-art continuous aesthetics score prediction methods. Most notably, MPA-Net yields a significantly lower MSE, especially for images with aspect ratios far from 1.0, indicating that MPA-Net is useful for a wide range of image aspect ratios. MPA-Net uses only images and does not require external information during either the training or the prediction stage. Therefore, MPA-Net has great potential for applications aside from aesthetics score prediction, such as the prediction of other human subjective judgments.
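One way to picture equal-interval multi-patch selection is the sketch below, which places square crops at evenly spaced offsets along the longer side of an image so that no resizing distorts the content. The abstract does not give the patch geometry, so the square patch shape, the patch side equal to the shorter image side, and the helper name `equal_interval_patches` are all assumptions.

```python
def equal_interval_patches(height, width, n_patches):
    """Return (top, left, patch_h, patch_w) boxes spaced evenly along the long side.

    Illustrative assumption: patches are squares whose side is the shorter
    image side, so each crop preserves the aspect ratio of its content.
    """
    side = min(height, width)
    span = max(height, width) - side  # room to slide along the long side
    if n_patches > 1:
        offsets = [round(i * span / (n_patches - 1)) for i in range(n_patches)]
    else:
        offsets = [span // 2]  # a single patch is centered
    if width >= height:
        return [(0, off, side, side) for off in offsets]
    return [(off, 0, side, side) for off in offsets]

boxes = equal_interval_patches(100, 300, 3)
```

For a 100 x 300 image and three patches, the crops start at columns 0, 100, and 200, covering the whole width without rescaling.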

Motion Representation Using Residual Frames with 3D CNN

Jun 21, 2020

Abstract: Recently, 3D convolutional networks (3D ConvNets) have yielded good performance in action recognition. However, an optical flow stream is still needed to ensure better performance, and its cost is very high. In this paper, we propose a fast but effective way to extract motion features from videos by using residual frames as the input data in 3D ConvNets. By replacing traditional stacked RGB frames with residual ones, improvements of 35.6 and 26.6 percentage points in top-1 accuracy can be obtained on the UCF101 and HMDB51 datasets when ResNet-18 models are trained from scratch. We also achieved state-of-the-art results in this training mode. Analysis shows that better motion features can be extracted using residual frames than with their RGB counterparts. By combining with a simple appearance path, our proposal can be even better than some methods using optical flow streams.
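A minimal sketch of residual frames, assuming they are the differences between adjacent frames of a clip; the abstract does not spell out the exact computation or any normalization, so the helper `residual_frames` and its signed float output are assumptions.

```python
import numpy as np

def residual_frames(clip):
    """Return differences between adjacent frames.

    clip: array of shape (T, H, W, C). The output has shape (T - 1, H, W, C)
    and is signed, so it is cast to float before subtracting to avoid
    unsigned-integer wraparound on uint8 video data.
    """
    clip = np.asarray(clip, dtype=np.float32)
    return clip[1:] - clip[:-1]

# A static clip produces all-zero residuals: no motion, no signal.
static = np.full((4, 2, 2, 3), 128, dtype=np.uint8)
res = residual_frames(static)
```

Static regions cancel out, so what remains is concentrated where pixels change over time, at a far lower cost than computing optical flow.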

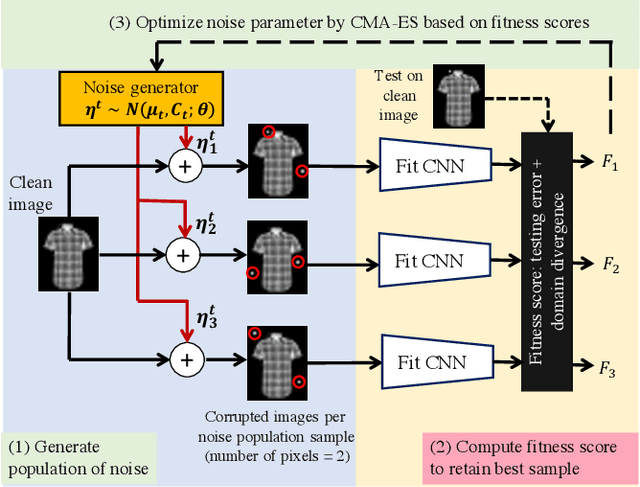

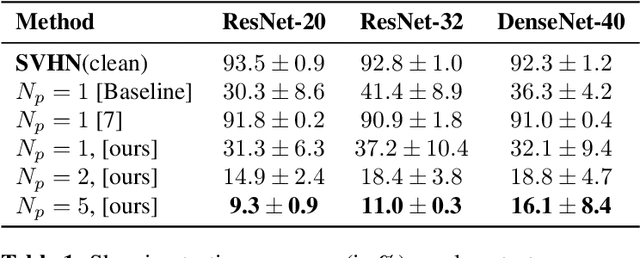

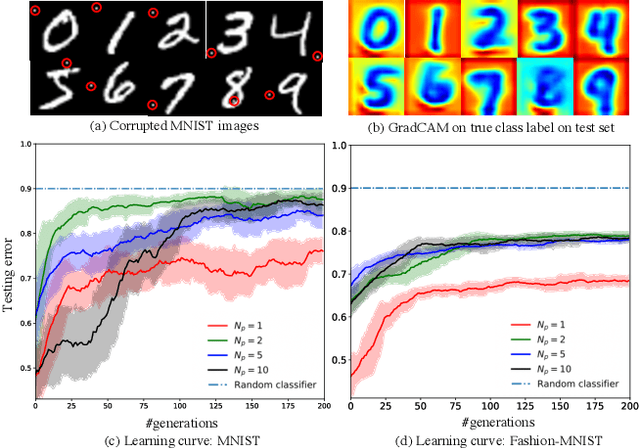

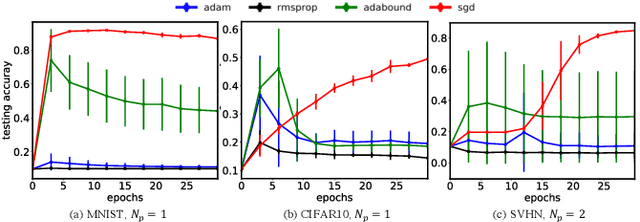

Investigating Generalization in Neural Networks under Optimally Evolved Training Perturbations

Mar 14, 2020

Abstract: In this paper, we study the generalization properties of neural networks under input perturbations and show that minimal training data corruption by a few pixel modifications can cause drastic overfitting. We propose an evolutionary algorithm to search for optimal pixel perturbations using a novel cost function, inspired by the literature on domain adaptation, that explicitly maximizes the generalization gap and domain divergence between clean and corrupted images. Our method outperforms previous pixel-based data distribution shift methods on state-of-the-art Convolutional Neural Network (CNN) architectures. Interestingly, we find that the choice of optimizer plays an important role in generalization robustness: empirically, SGD is resilient to such training data corruption, unlike adaptive optimization techniques (ADAM). Our source code is available at https://github.com/subhajitchaudhury/evo-shift.

DeepSperm: A robust and real-time bull sperm-cell detection in densely populated semen videos

Mar 03, 2020

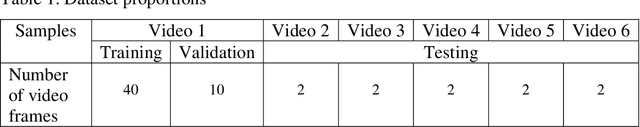

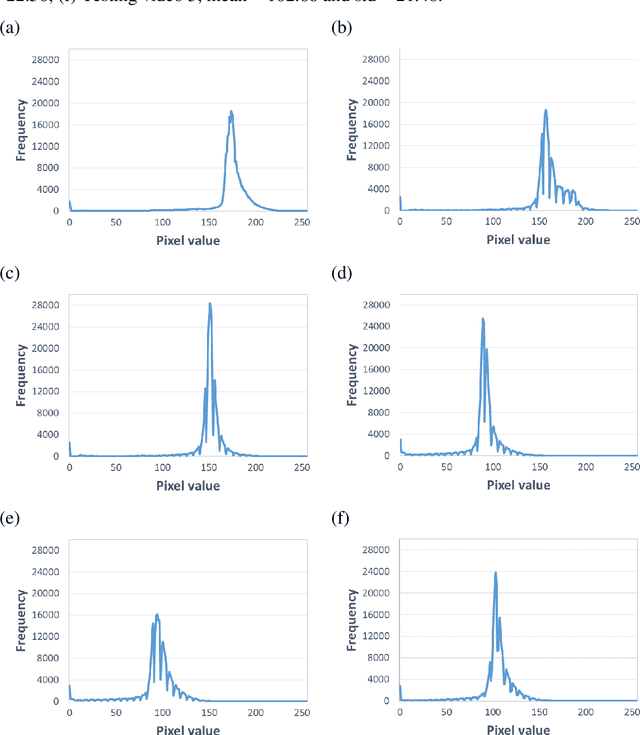

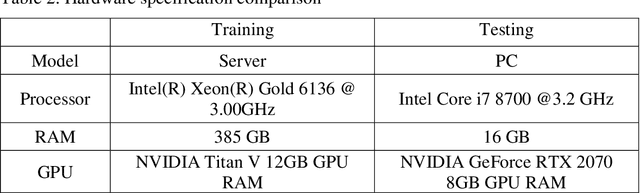

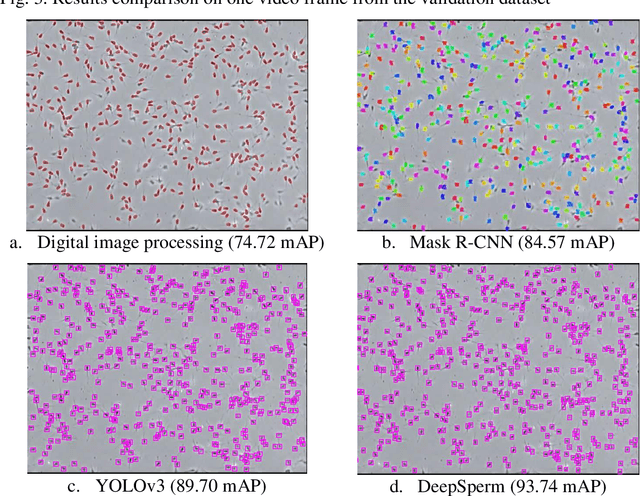

Abstract: Background and Objective: Object detection is a primary research interest in computer vision. Sperm-cell detection in a densely populated bull semen microscopic observation video presents challenges such as partial occlusion, a vast number of objects in a single video frame, the tiny size of the objects, artifacts, low contrast, and blurry objects because of the rapid movement of the sperm cells. This study proposes an architecture, called DeepSperm, that solves the aforementioned challenges and is more accurate and faster than state-of-the-art architectures. Methods: In the proposed architecture, we use only one detection layer, which is specific for small object detection. To handle overfitting and increase accuracy, we set a higher network resolution, use a dropout layer, and perform data augmentation on hue, saturation, and exposure. Several hyper-parameters are tuned to achieve better performance. We compare our proposed method with a conventional image processing-based object-detection method, you only look once (YOLOv3), and mask region-based convolutional neural network (Mask R-CNN). Results: In our experiment, we achieve 86.91 mAP on the test dataset and a processing speed of 50.3 fps. In comparison with YOLOv3, we achieve an increase of 16.66 mAP points, 3.26x faster testing, and 1.4x faster training with a small training dataset, which contains 40 video frames. The weights file size was also reduced significantly, being 16.94x smaller than that of YOLOv3. Moreover, it requires 1.3x less graphical processing unit (GPU) memory than YOLOv3. Conclusions: This study proposes DeepSperm, which is a simple, effective, and efficient architecture with its hyper-parameters and configuration to detect bull sperm cells robustly in real time. In our experiment, we surpass the state of the art in terms of accuracy, speed, and resource needs.

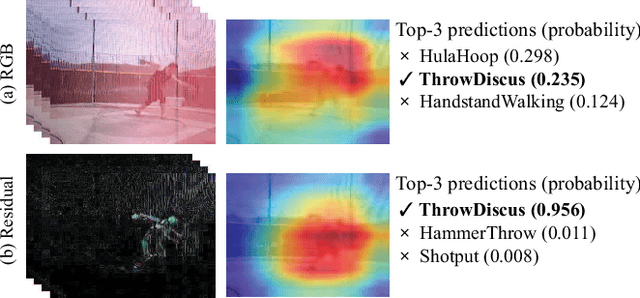

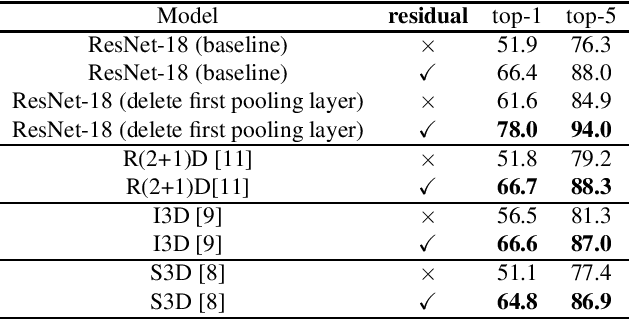

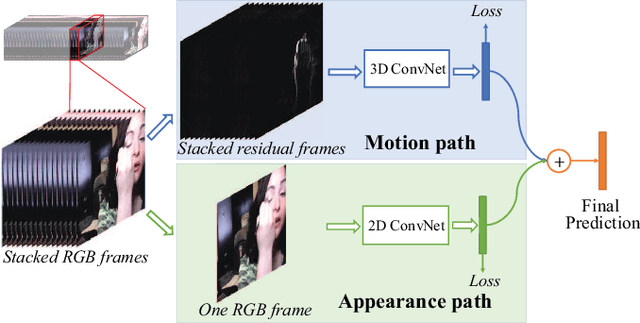

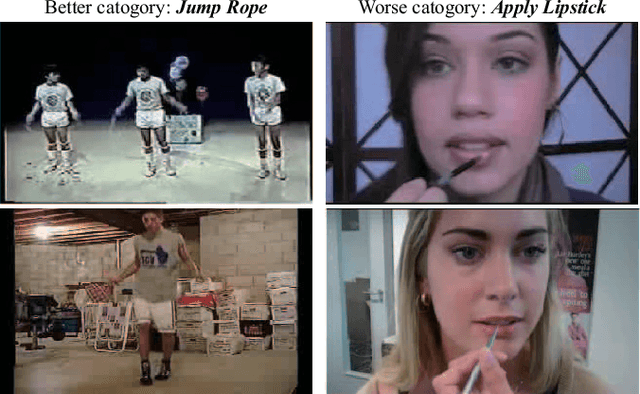

Rethinking Motion Representation: Residual Frames with 3D ConvNets for Better Action Recognition

Jan 16, 2020

Abstract: Recently, 3D convolutional networks have yielded good performance in action recognition. However, an optical flow stream is still needed to ensure better performance, and its cost is very high. In this paper, we propose a fast but effective way to extract motion features from videos by using residual frames as the input data in 3D ConvNets. By replacing traditional stacked RGB frames with residual ones, improvements of 20.5 and 12.5 percentage points in top-1 accuracy can be achieved on the UCF101 and HMDB51 datasets when trained from scratch. Because residual frames contain little information about object appearance, we further use a 2D convolutional network to extract appearance features and combine them with the results from residual frames to form a two-path solution. On three benchmark datasets, our two-path solution achieved performance better than or comparable to that of methods using additional optical flow, and in particular outperformed the state-of-the-art models on the Mini-kinetics dataset. Further analysis indicates that better motion features can be extracted using residual frames with 3D ConvNets, and our residual-frame-input path is a good supplement for existing RGB-frame-input models.

Assessing Robustness of Deep learning Methods in Dermatological Workflow

Jan 15, 2020

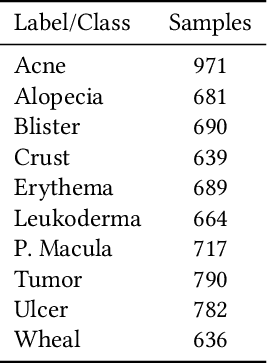

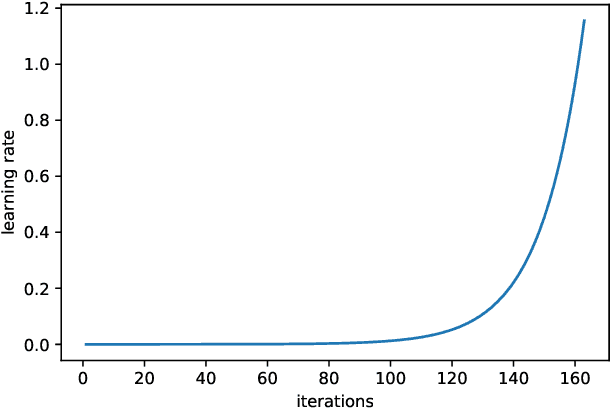

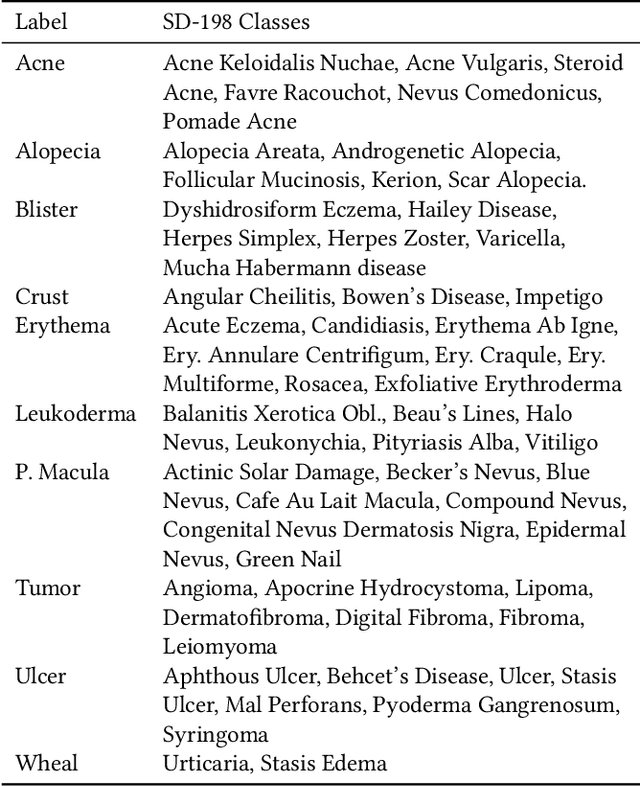

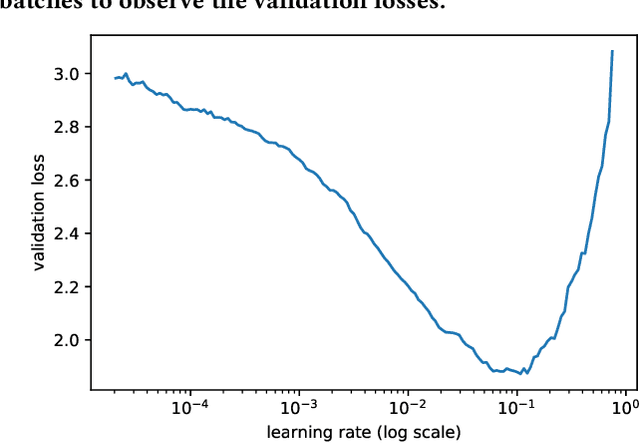

Abstract: This paper aims to evaluate the suitability of current deep learning methods for clinical workflow, focusing on dermatology. Although deep learning methods have been applied to reach dermatologist-level accuracy in several individual conditions, they have not been rigorously tested for common clinical complaints. Most projects involve data acquired in well-controlled laboratory conditions. This may not reflect regular clinical evaluation, where the corresponding image quality is not always ideal. We test the robustness of deep learning methods by simulating non-ideal characteristics on user-submitted images of ten classes of diseases. Assessing under these simulated conditions, we found that the overall accuracy drops and individual predictions change significantly in many cases despite robust training.
