Tolga Çukur

Federated Learning of Generative Image Priors for MRI Reconstruction

Feb 08, 2022

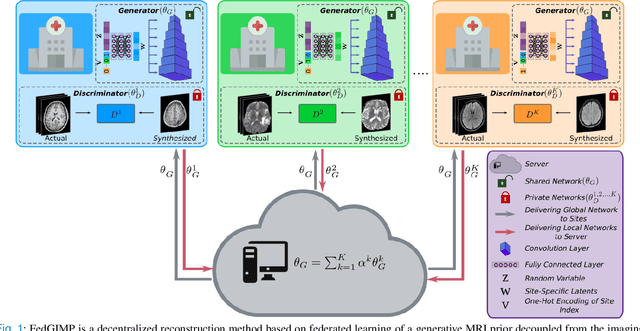

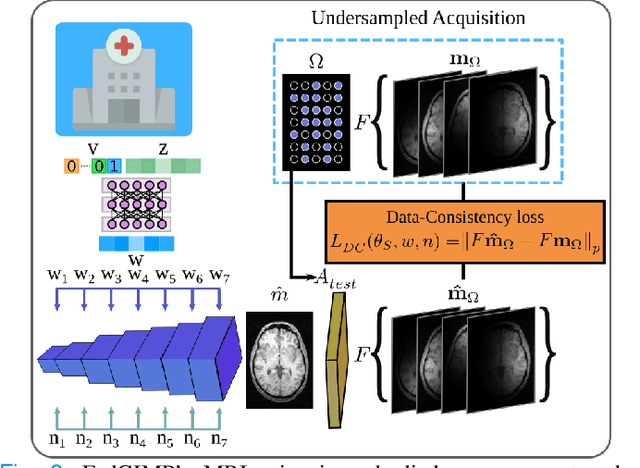

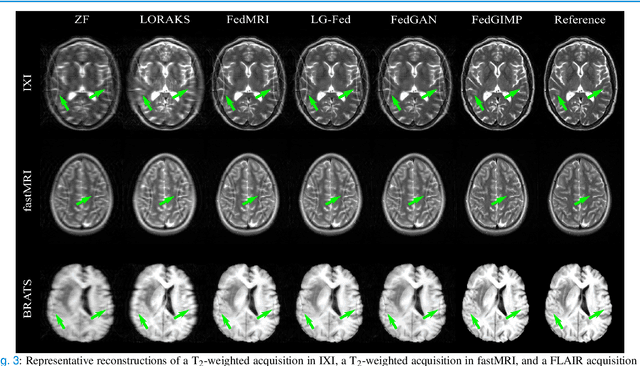

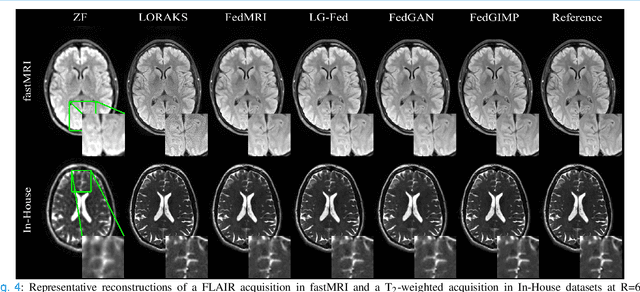

Abstract: Multi-institutional efforts can facilitate training of deep MRI reconstruction models, although privacy risks arise during cross-site sharing of imaging data. Federated learning (FL) has recently been introduced to address privacy concerns by enabling distributed training without transfer of imaging data. Existing FL methods for MRI reconstruction employ conditional models to map from undersampled to fully-sampled acquisitions via explicit knowledge of the imaging operator. Since conditional models generalize poorly across different acceleration rates or sampling densities, imaging operators must be fixed between training and testing, and they are typically matched across sites. To improve generalization and flexibility in multi-institutional collaborations, here we introduce a novel method for MRI reconstruction based on Federated learning of Generative IMage Priors (FedGIMP). FedGIMP leverages a two-stage approach: cross-site learning of a generative MRI prior, and subject-specific injection of the imaging operator. The global MRI prior is learned via an unconditional adversarial model that synthesizes high-quality MR images based on latent variables. Specificity in the prior is preserved via a mapper subnetwork that produces site-specific latents. During inference, the prior is combined with subject-specific imaging operators to enable reconstruction, and further adapted to individual test samples by minimizing data-consistency loss. Comprehensive experiments on multi-institutional datasets clearly demonstrate enhanced generalization performance of FedGIMP against site-specific and federated methods based on conditional models, as well as traditional reconstruction methods.
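The data-consistency loss mentioned in the abstract can be illustrated with a minimal sketch. It assumes a single-coil Cartesian acquisition, so the imaging operator reduces to a 2D Fourier transform followed by a binary sampling mask; the abstract does not specify the operator or the exact loss, and the function and variable names here are hypothetical.

```python
import numpy as np

def data_consistency_loss(image, mask, kspace_measured):
    """Squared error between predicted and acquired k-space samples.

    Assumption: single-coil imaging operator = sampling mask * 2D FFT.
    """
    kspace_pred = np.fft.fft2(image, norm="ortho")
    residual = mask * (kspace_pred - kspace_measured)
    return float(np.sum(np.abs(residual) ** 2))

# Synthetic example: a random "image" and a random 30% sampling mask.
rng = np.random.default_rng(0)
truth = rng.standard_normal((32, 32))
mask = rng.random((32, 32)) < 0.3
kspace_measured = mask * np.fft.fft2(truth, norm="ortho")

# Zero-filled reconstruction: inverse FFT of the undersampled data.
zero_filled = np.fft.ifft2(kspace_measured, norm="ortho")

loss_truth = data_consistency_loss(truth, mask, kspace_measured)
loss_zero_filled = data_consistency_loss(zero_filled, mask, kspace_measured)
loss_blank = data_consistency_loss(np.zeros((32, 32)), mask, kspace_measured)
```

Both the true image and the zero-filled image agree with the acquired samples, so the loss alone cannot tell them apart; this is why a reconstruction needs a prior in addition to data consistency.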

TranSMS: Transformers for Super-Resolution Calibration in Magnetic Particle Imaging

Nov 03, 2021

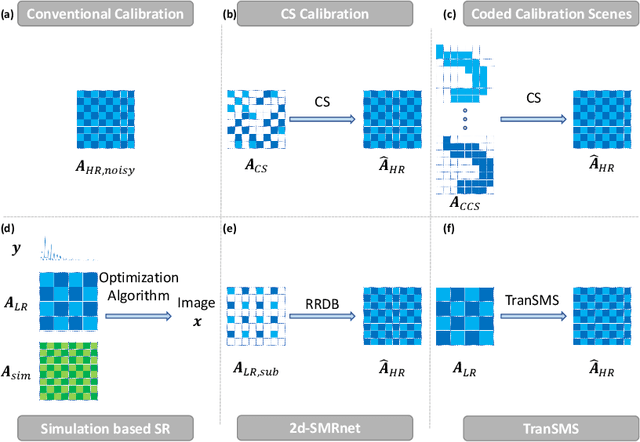

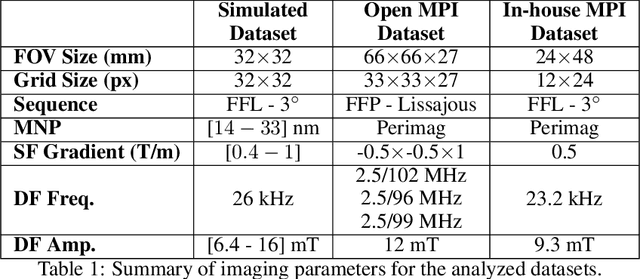

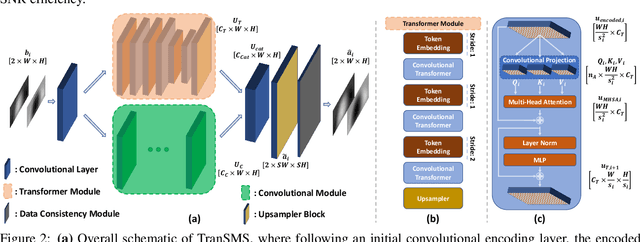

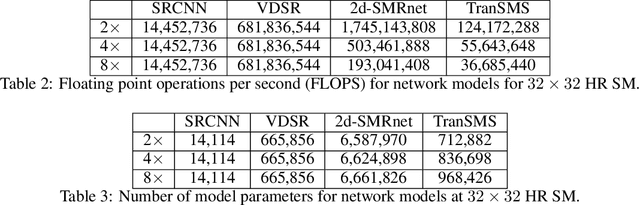

Abstract: Magnetic particle imaging (MPI) is a recent modality that offers exceptional contrast for magnetic nanoparticles (MNP) at high spatio-temporal resolution. A common procedure in MPI starts with a calibration scan to measure the system matrix (SM), which is then used to set up an inverse problem to reconstruct images of the particle distribution during subsequent scans. This calibration enables the reconstruction to sensitively account for various system imperfections. Yet time-consuming SM measurements have to be repeated under notable drifts or changes in system properties. Here, we introduce a novel deep learning approach for accelerated MPI calibration based on transformers for SM super-resolution (TranSMS). Low-resolution SM measurements are performed using large MNP samples for improved signal-to-noise ratio efficiency, and the high-resolution SM is super-resolved via a model-based deep network. TranSMS leverages a vision transformer module to capture contextual relationships in low-resolution input images, a dense convolutional module for localizing high-resolution image features, and a data-consistency module to ensure consistency with measurements. Demonstrations on simulated and experimental data indicate that TranSMS achieves significantly improved SM recovery and image reconstruction in MPI, while enabling acceleration up to 64-fold during two-dimensional calibration.

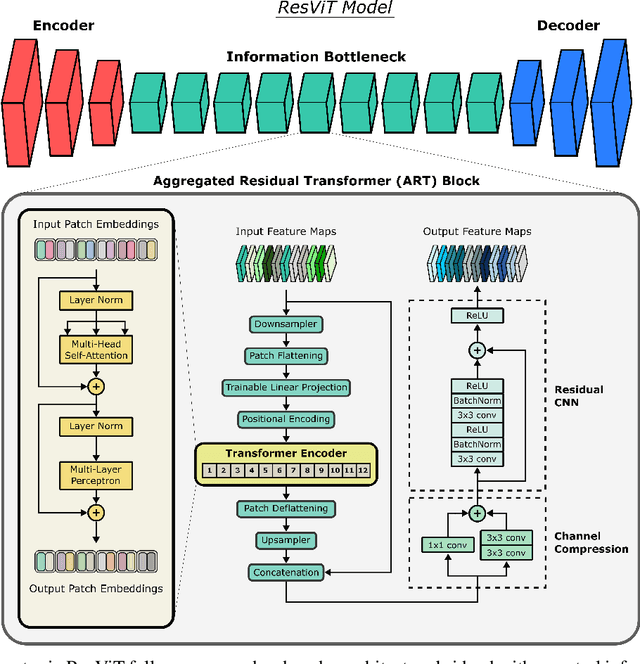

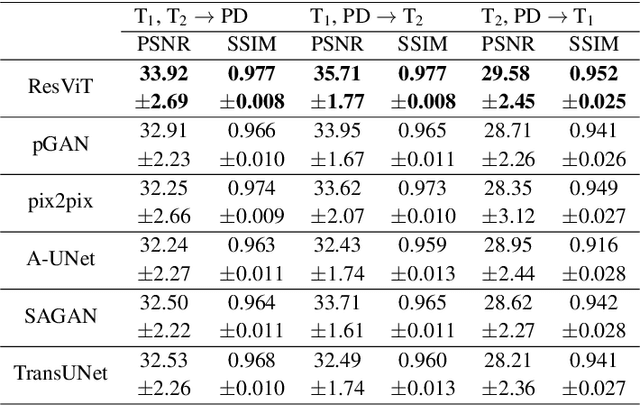

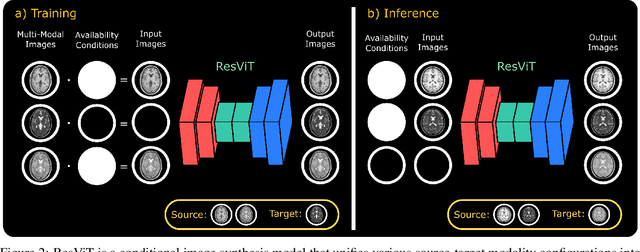

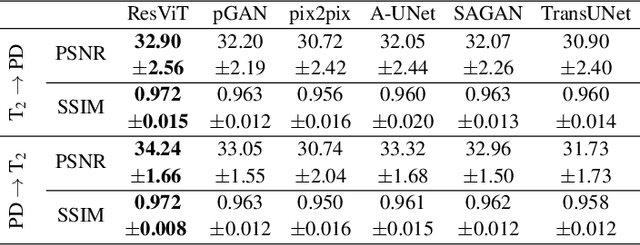

ResViT: Residual vision transformers for multi-modal medical image synthesis

Jun 30, 2021

Abstract: Multi-modal imaging is a key healthcare technology in the diagnosis and management of disease, but it is often underutilized due to costs associated with multiple separate scans. This limitation creates a need to synthesize unacquired modalities from the subset of available modalities. In recent years, generative adversarial network (GAN) models with superior depiction of structural details have been established as state-of-the-art in numerous medical image synthesis tasks. However, GANs are characteristically based on convolutional neural network (CNN) backbones that perform local processing with compact filters. This inductive bias, in turn, compromises learning of long-range spatial dependencies. While attention maps incorporated in GANs can multiplicatively modulate CNN features to emphasize critical image regions, their capture of global context is mostly implicit. Here, we propose a novel generative adversarial approach for medical image synthesis, ResViT, to combine local precision of convolution operators with contextual sensitivity of vision transformers. Based on an encoder-decoder architecture, ResViT employs a central bottleneck comprising novel aggregated residual transformer (ART) blocks that synergistically combine convolutional and transformer modules. Comprehensive demonstrations are performed for synthesizing missing sequences in multi-contrast MRI and CT images from MRI. Our results indicate the superiority of ResViT against competing methods in terms of qualitative observations and quantitative metrics.

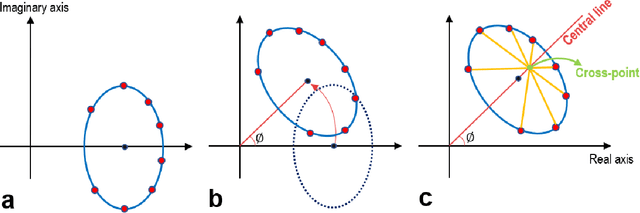

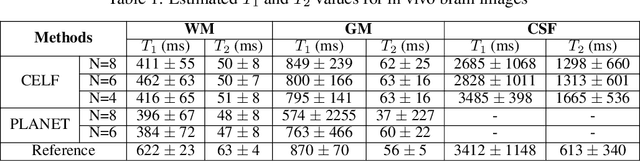

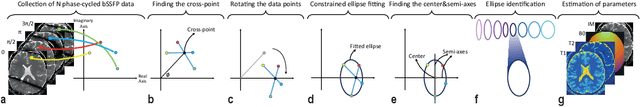

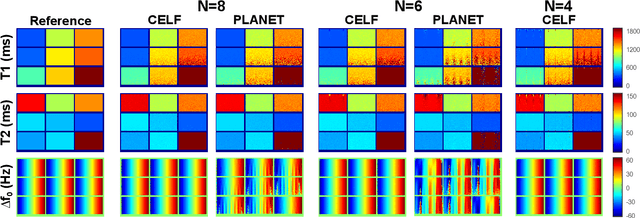

Constrained Ellipse Fitting for Efficient Parameter Mapping with Phase-cycled bSSFP MRI

Jun 06, 2021

Abstract: Balanced steady-state free precession (bSSFP) imaging enables high scan efficiency in MRI, but differs from conventional sequences in terms of elevated sensitivity to main field inhomogeneity and nonstandard T2/T1-weighted tissue contrast. To address these limitations, multiple bSSFP images of the same anatomy are commonly acquired with a set of different RF phase-cycling increments. Joint processing of phase-cycled acquisitions serves to mitigate sensitivity to field inhomogeneity. Recently, phase-cycled bSSFP acquisitions were also leveraged to estimate relaxation parameters based on explicit signal models. While effective, these model-based methods often involve a large number of acquisitions (N~10-16), degrading scan efficiency. Here, we propose a new constrained ellipse fitting method (CELF) for parameter estimation with improved efficiency and accuracy in phase-cycled bSSFP MRI. CELF is based on the elliptical signal model framework for complex bSSFP signals. It introduces geometrical constraints on ellipse properties to improve estimation efficiency, and dictionary-based identification to improve estimation accuracy. Simulated, phantom and in vivo experiments demonstrate that the proposed method enables enhanced parameter estimation with as few as N=4 acquisitions, and thus holds great potential for improving the utility of bSSFP-based parametric mapping.
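To make the elliptical signal model concrete, the sketch below fits a general conic to complex-valued samples by plain unconstrained least squares. This is a generic algebraic fit, not the constrained method (CELF) that the abstract proposes; the geometric constraints and dictionary-based identification are not reproduced. The function names and the synthetic ellipse parameters are hypothetical. A general conic has five degrees of freedom, so this unconstrained fit needs at least five samples.

```python
import numpy as np

def fit_conic(points):
    """Least-squares conic a*x^2 + b*x*y + c*y^2 + d*x + e*y + f = 0.

    `points` is a 1D complex array; real part is x, imaginary part is y.
    Returns the coefficient vector (a, b, c, d, e, f) with unit norm.
    """
    x, y = points.real, points.imag
    design = np.column_stack([x**2, x * y, y**2, x, y, np.ones_like(x)])
    # The right singular vector with the smallest singular value
    # minimizes ||design @ coeffs|| subject to ||coeffs|| = 1.
    _, _, vt = np.linalg.svd(design)
    return vt[-1]

def conic_center(coeffs):
    """Center of the conic: the point where its gradient vanishes."""
    a, b, c, d, e, _ = coeffs
    return np.linalg.solve(np.array([[2 * a, b], [b, 2 * c]]),
                           np.array([-d, -e]))

# Synthetic samples on a rotated ellipse centered at 0.5 + 0.2j
# (illustrative values only, not taken from a bSSFP signal model).
theta = np.linspace(0, 2 * np.pi, 8, endpoint=False)
samples = (0.5 + 0.2j) + np.exp(1j * 0.3) * (
    0.4 * np.cos(theta) + 1j * 0.25 * np.sin(theta))

coeffs = fit_conic(samples)
center = conic_center(coeffs)
# A conic is an ellipse when its discriminant b^2 - 4ac is negative.
is_ellipse = coeffs[1] ** 2 - 4 * coeffs[0] * coeffs[2] < 0
```

With noiseless samples the fit recovers the ellipse center; with noise or very few samples, an unconstrained fit can return a non-elliptical conic, which is where additional constraints become useful.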

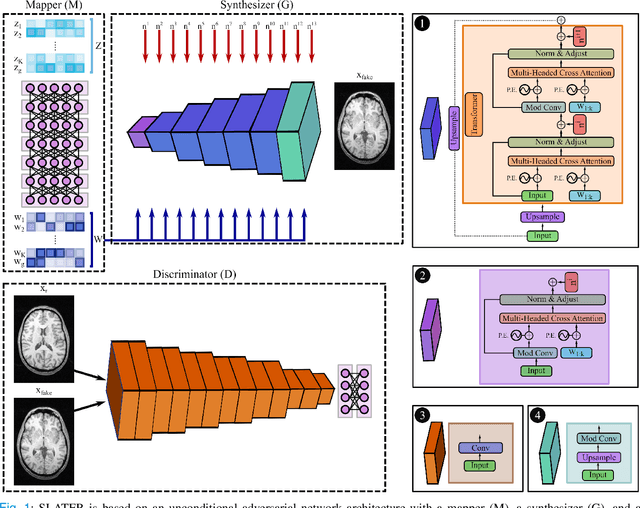

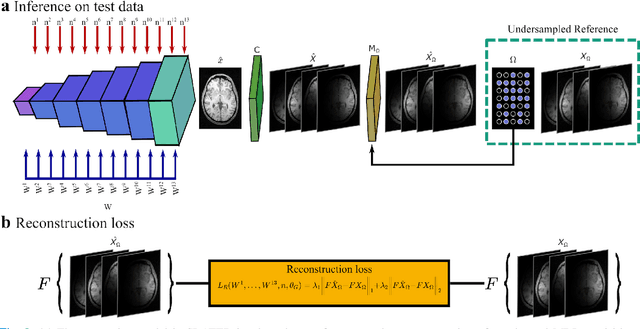

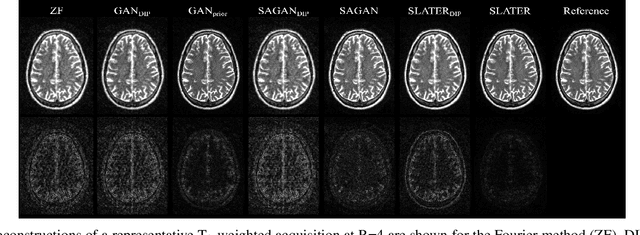

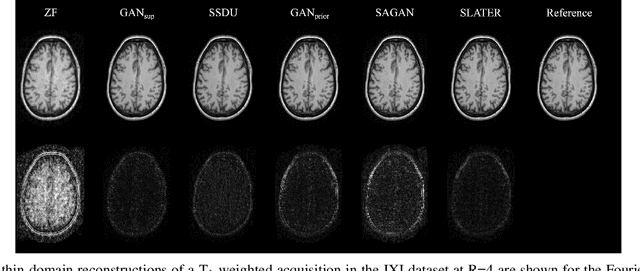

Unsupervised MRI Reconstruction via Zero-Shot Learned Adversarial Transformers

May 21, 2021

Abstract: Supervised deep learning has swiftly become a workhorse for accelerated MRI in recent years, offering state-of-the-art performance in image reconstruction from undersampled acquisitions. Training deep supervised models requires large datasets of undersampled and fully-sampled acquisitions typically from a matching set of subjects. Given scarce access to large medical datasets, this limitation has sparked interest in unsupervised methods that reduce reliance on fully-sampled ground-truth data. A common framework is based on the deep image prior, where network-driven regularization is enforced directly during inference on undersampled acquisitions. Yet, canonical convolutional architectures are suboptimal in capturing long-range relationships, and randomly initialized networks may hamper convergence. To address these limitations, here we introduce a novel unsupervised MRI reconstruction method based on zero-Shot Learned Adversarial TransformERs (SLATER). SLATER embodies a deep adversarial network with cross-attention transformer blocks to map noise and latent variables onto MR images. This unconditional network learns a high-quality MRI prior in a self-supervised encoding task. Zero-shot reconstruction is then performed on undersampled test data by optimizing network parameters, latent and noise variables to ensure maximal consistency with multi-coil MRI data. Comprehensive experiments on brain MRI datasets clearly demonstrate the superior performance of SLATER against several state-of-the-art unsupervised methods.

A Few-Shot Learning Approach for Accelerated MRI via Fusion of Data-Driven and Subject-Driven Priors

Mar 13, 2021

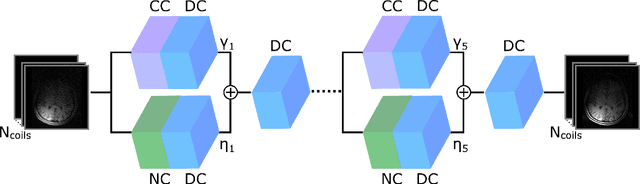

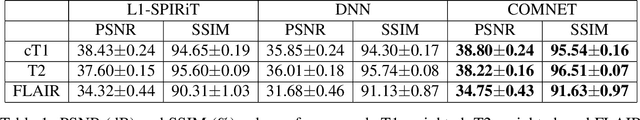

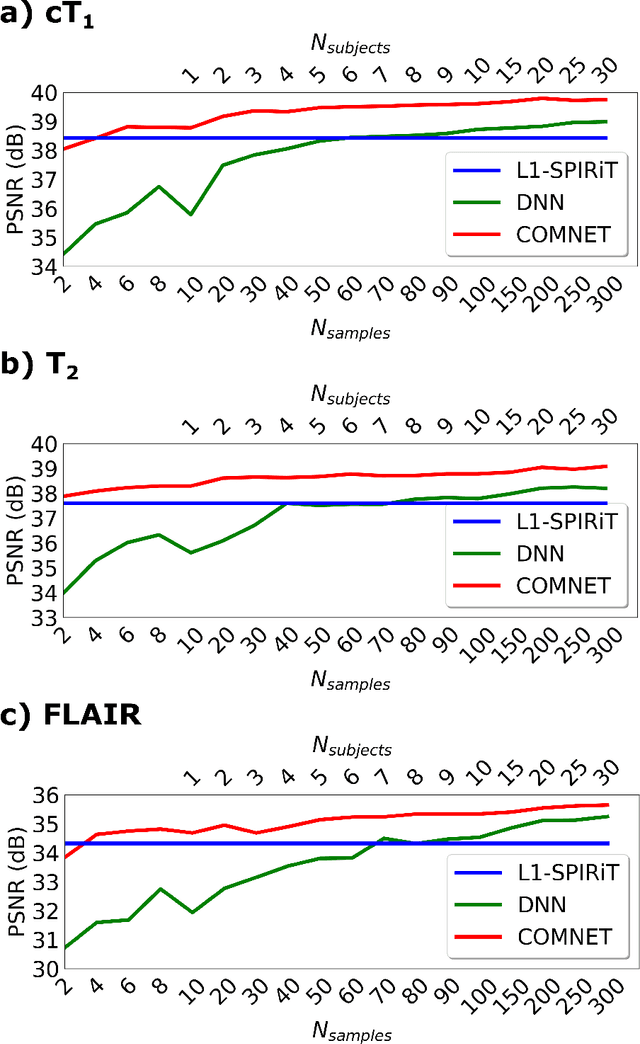

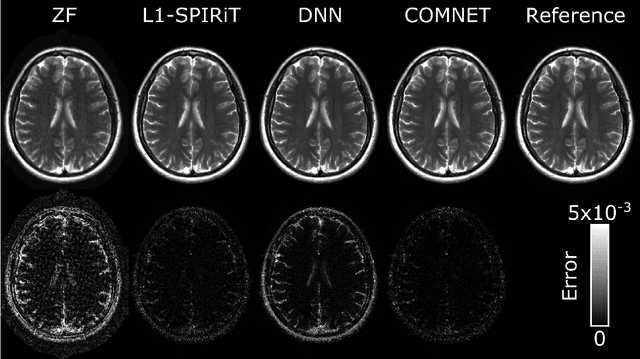

Abstract: Deep neural networks (DNNs) have recently found emerging use in accelerated MRI reconstruction. DNNs typically learn data-driven priors from large datasets comprising pairs of undersampled and fully-sampled acquisitions. Acquiring such large datasets, however, might be impractical. To mitigate this limitation, we propose a few-shot learning approach for accelerated MRI that merges subject-driven priors obtained via physical signal models with data-driven priors obtained from a few training samples. Demonstrations on brain MR images from the NYU fastMRI dataset indicate that the proposed approach requires just a few samples to outperform traditional parallel imaging and DNN algorithms.

Three Dimensional MR Image Synthesis with Progressive Generative Adversarial Networks

Dec 18, 2020

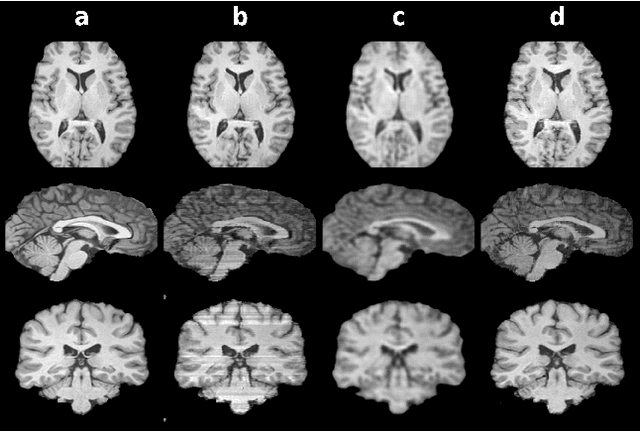

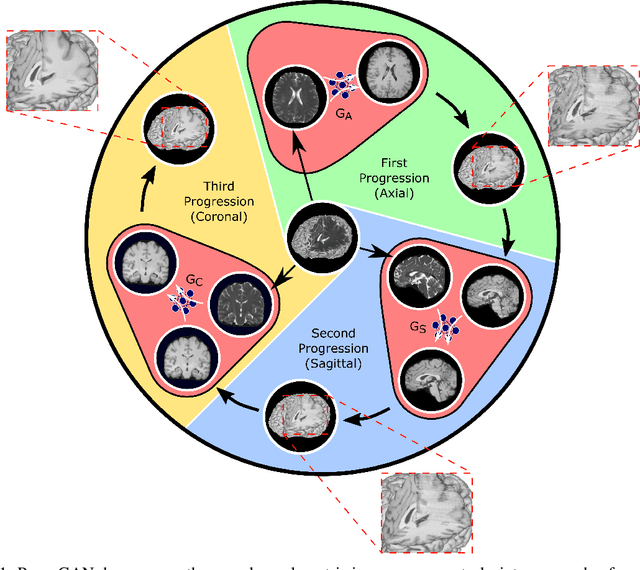

Abstract: Mainstream deep models for three-dimensional MRI synthesis are either cross-sectional or volumetric depending on the input. Cross-sectional models can decrease the model complexity, but they may lead to discontinuity artifacts. On the other hand, volumetric models can alleviate the discontinuity artifacts, but they might suffer from loss of spatial resolution due to increased model complexity coupled with scarce training data. To mitigate the limitations of both approaches, we propose a novel model that progressively recovers the target volume via simpler synthesis tasks across individual orientations.

Progressively Volumetrized Deep Generative Models for Data-Efficient Contextual Learning of MR Image Recovery

Dec 03, 2020

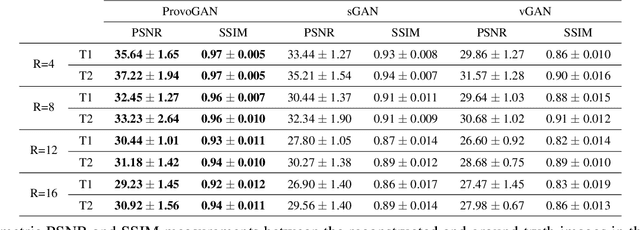

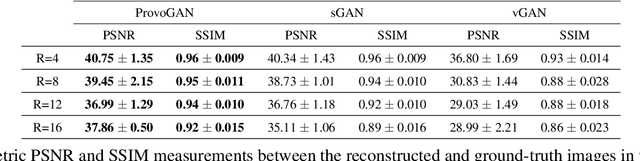

Abstract: Magnetic resonance imaging (MRI) offers the flexibility to image a given anatomic volume under a multitude of tissue contrasts. Yet, scan time considerations put stringent limits on the quality and diversity of MRI data. The gold-standard approach to alleviate this limitation is to recover high-quality images from data undersampled across various dimensions such as the Fourier domain or contrast sets. A central divide among recovery methods is whether the anatomy is processed per volume or per cross-section. Volumetric models offer enhanced capture of global contextual information, but they can suffer from suboptimal learning due to elevated model complexity. Cross-sectional models with lower complexity offer improved learning behavior, yet they ignore contextual information across the longitudinal dimension of the volume. Here, we introduce a novel data-efficient progressively volumetrized generative model (ProvoGAN) that decomposes complex volumetric image recovery tasks into a series of simpler cross-sectional tasks across individual rectilinear dimensions. ProvoGAN effectively captures global context and recovers fine-structural details across all dimensions, while maintaining low model complexity and data-efficiency advantages of cross-sectional models. Comprehensive demonstrations on mainstream MRI reconstruction and synthesis tasks show that ProvoGAN yields superior performance to state-of-the-art volumetric and cross-sectional models.
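The decomposition of a volumetric task into cross-sectional tasks along each rectilinear dimension can be sketched as follows. This is only a structural illustration: the 2D "models" are stand-in functions rather than trained networks, and feeding each stage's output directly into the next stage is an assumption, since the abstract does not say how the stages are chained. All names are hypothetical.

```python
import numpy as np

def apply_slicewise(volume, model_2d, axis):
    """Run a 2D model on every cross-section taken along `axis`."""
    moved = np.moveaxis(volume, axis, 0)
    processed = np.stack([model_2d(section) for section in moved])
    return np.moveaxis(processed, 0, axis)

def progressive_recovery(volume, models_2d):
    """Apply one 2D model per orientation, in sequence.

    Assumption: the output of each stage is the input of the next.
    """
    estimate = volume
    for axis, model_2d in enumerate(models_2d):
        estimate = apply_slicewise(estimate, model_2d, axis)
    return estimate

def smooth_2d(section):
    """Stand-in 2D model: 5-point average with wraparound borders."""
    return (section
            + np.roll(section, 1, axis=0) + np.roll(section, -1, axis=0)
            + np.roll(section, 1, axis=1) + np.roll(section, -1, axis=1)) / 5.0

volume = np.random.default_rng(0).standard_normal((8, 10, 12))
recovered = progressive_recovery(volume, [smooth_2d] * 3)
identity_out = progressive_recovery(volume, [lambda s: s] * 3)
```

Each stage only ever sees 2D inputs, which keeps the per-stage model small, while the sequence of three orientations lets information propagate along every dimension of the volume.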

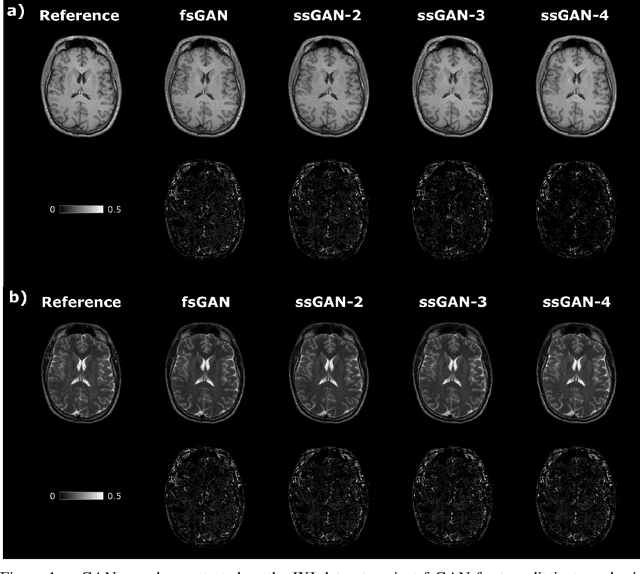

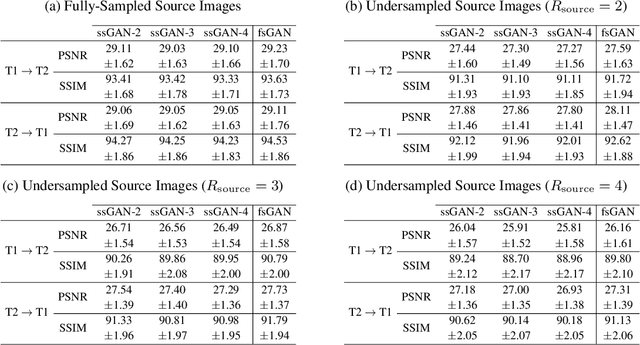

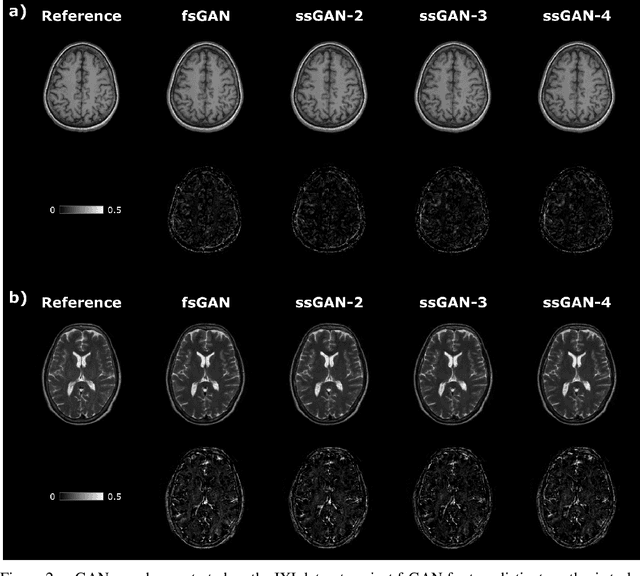

Semi-Supervised Learning of Mutually Accelerated Multi-Contrast MRI Synthesis without Fully-Sampled Ground-Truths

Nov 29, 2020

Abstract: This study proposes a novel semi-supervised learning framework for mutually accelerated multi-contrast MRI synthesis that recovers high-quality images without demanding large training sets of costly fully-sampled source or ground-truth target images. The proposed method presents a selective loss function expressed only on a subset of the acquired k-space coefficients and further leverages randomized sampling patterns across training subjects to effectively learn relationships among acquired and nonacquired k-space coefficients at all locations. Comprehensive experiments performed on multi-contrast brain images clearly demonstrate that the proposed method maintains equivalent performance to the gold-standard method based on fully-supervised training while alleviating undesirable reliance of the current synthesis methods on large-scale fully-sampled MRI acquisitions.
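A loss expressed only on acquired k-space coefficients can be sketched as below. The mean absolute error, the single-coil Fourier model, and all names are assumptions made for illustration; the abstract does not give the loss formula.

```python
import numpy as np

def selective_kspace_loss(predicted_image, target_kspace, acquired_mask):
    """Mean absolute k-space error, restricted to acquired coefficients.

    Assumption: single-coil data and an L1-type penalty.
    """
    predicted_kspace = np.fft.fft2(predicted_image, norm="ortho")
    diff = (predicted_kspace - target_kspace)[acquired_mask]
    return float(np.mean(np.abs(diff)))

rng = np.random.default_rng(0)
truth = rng.standard_normal((32, 32))
truth_kspace = np.fft.fft2(truth, norm="ortho")

# Hypothetical per-subject random sampling pattern (about 30% acquired).
acquired_mask = rng.random((32, 32)) < 0.3
target_kspace = acquired_mask * truth_kspace

# A prediction that is wrong only at non-acquired locations ...
noise_kspace = rng.standard_normal((32, 32)) + 1j * rng.standard_normal((32, 32))
hidden_error = np.fft.ifft2(truth_kspace + (~acquired_mask) * noise_kspace,
                            norm="ortho")
# ... and one that is wrong everywhere.
visible_error = np.fft.ifft2(truth_kspace + noise_kspace, norm="ortho")

loss_hidden = selective_kspace_loss(hidden_error, target_kspace, acquired_mask)
loss_visible = selective_kspace_loss(visible_error, target_kspace, acquired_mask)
```

Errors confined to non-acquired locations are invisible to the loss for a single subject, which suggests why varying the sampling pattern across subjects matters: over the training set, every k-space location is supervised for some subject.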

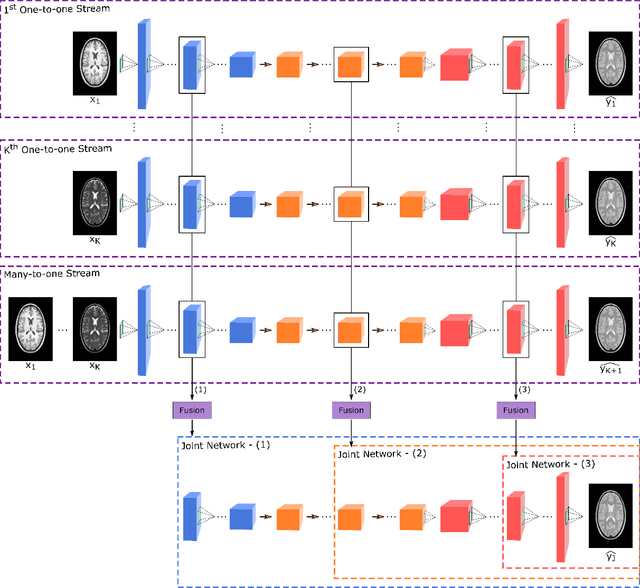

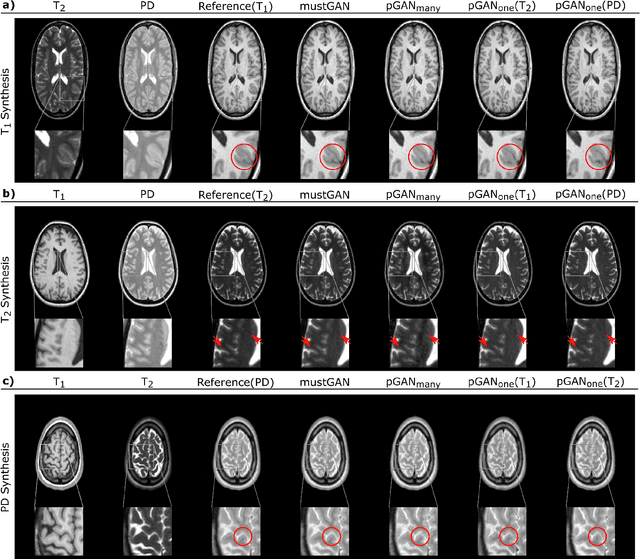

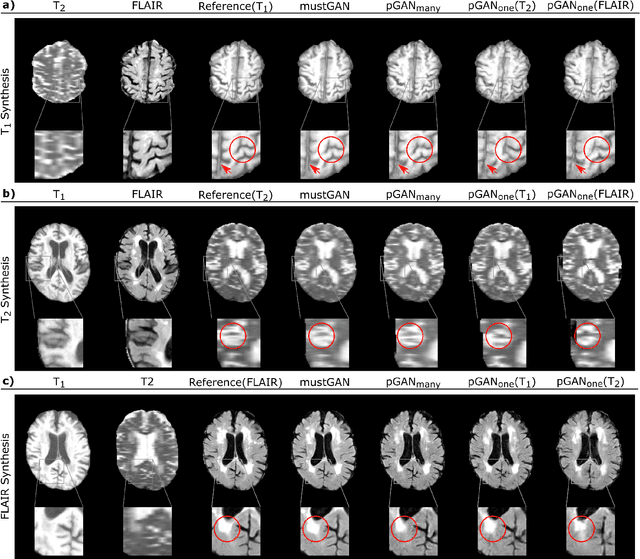

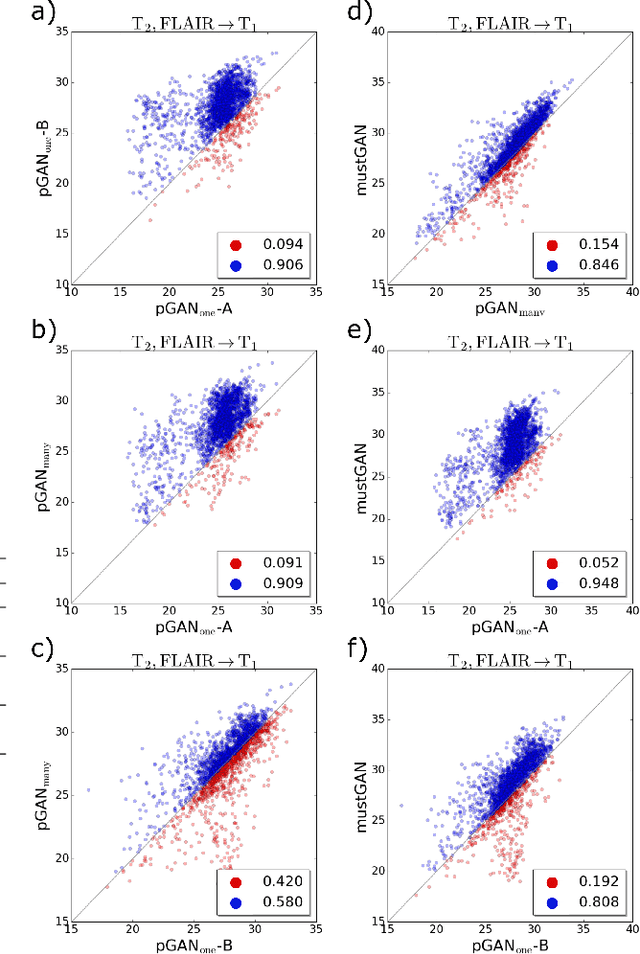

mustGAN: Multi-Stream Generative Adversarial Networks for MR Image Synthesis

Sep 25, 2019

Abstract: Multi-contrast MRI protocols increase the level of morphological information available for diagnosis. Yet, the number and quality of contrasts are limited in practice by various factors including scan time and patient motion. Synthesis of missing or corrupted contrasts can alleviate this limitation to improve clinical utility. Common approaches for multi-contrast MRI involve either one-to-one or many-to-one synthesis methods. One-to-one methods take as input a single source contrast, and they learn a latent representation sensitive to unique features of the source. Meanwhile, many-to-one methods receive multiple distinct sources, and they learn a shared latent representation more sensitive to common features across sources. For enhanced image synthesis, here we propose a multi-stream approach that aggregates information across multiple source images via a mixture of multiple one-to-one streams and a joint many-to-one stream. The shared feature maps generated in the many-to-one stream and the complementary feature maps generated in the one-to-one streams are combined with a fusion block. The location of the fusion block is adaptively modified to maximize task-specific performance. Qualitative and quantitative assessments on T1-, T2-, PD-weighted and FLAIR images clearly demonstrate the superior performance of the proposed method compared to previous state-of-the-art one-to-one and many-to-one methods.
