Todd Murphey

Ergodic Specifications for Flexible Swarm Control: From User Commands to Persistent Adaptation

Jun 10, 2020

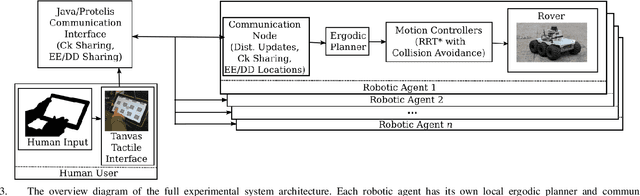

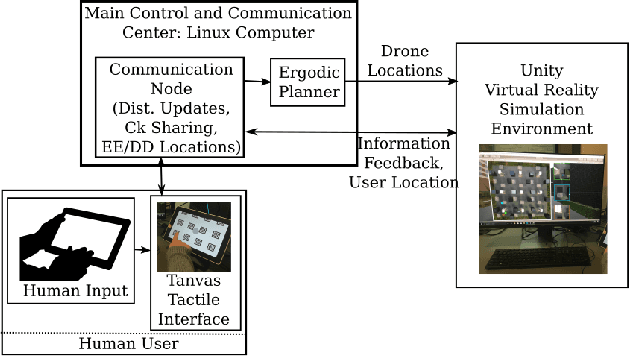

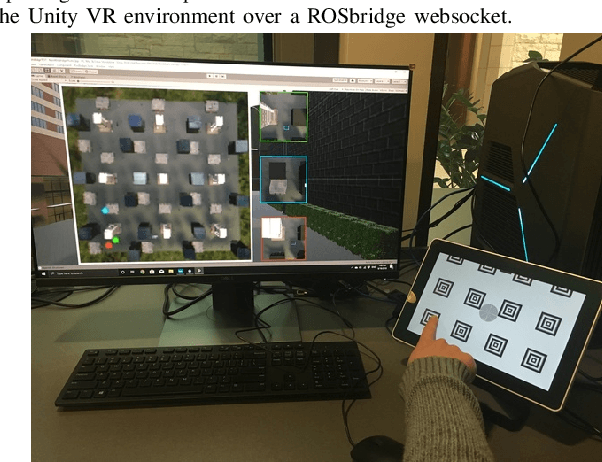

Abstract: This paper presents a formulation for swarm control and high-level task planning that is dynamically responsive to user commands and adaptable to environmental changes. We design an end-to-end pipeline from a tactile tablet interface for user commands to onboard control of robotic agents based on decentralized ergodic coverage. Our approach demonstrates reliable and dynamic control of a swarm collective through the use of ergodic specifications for planning and executing agent trajectories as well as responding to user and external inputs. We validate our approach in a virtual reality simulation environment and in real-world experiments at the DARPA OFFSET Urban Swarm Challenge FX3 field tests with a robotic swarm where user-based control of the swarm and mission-based tasks require a dynamic and flexible response to changing conditions and objectives in real time.

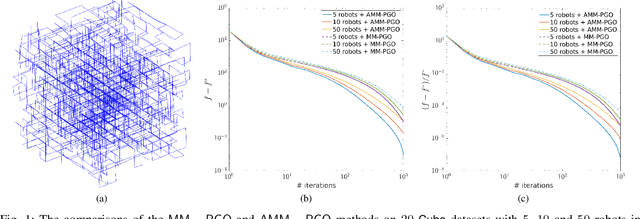

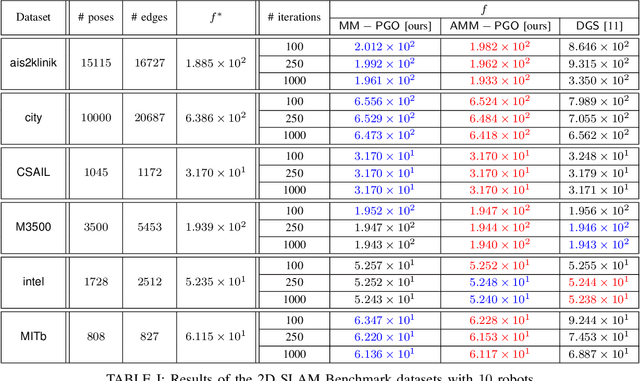

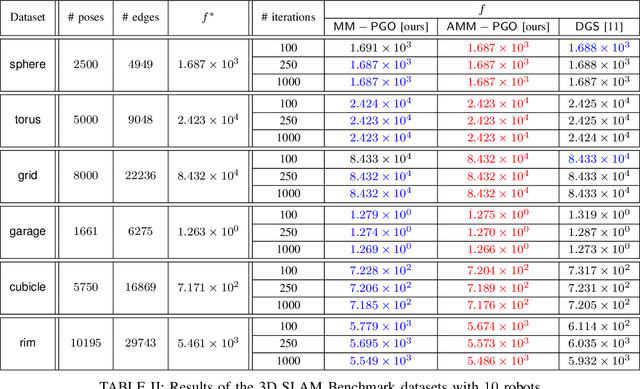

Majorization Minimization Methods to Distributed Pose Graph Optimization with Convergence Guarantees

Mar 11, 2020

Abstract: In this paper, we consider the problem of distributed pose graph optimization (PGO), which has extensive applications in multi-robot simultaneous localization and mapping (SLAM). We propose majorization minimization methods for distributed PGO and show that our proposed methods are guaranteed to converge to first-order critical points under mild conditions. Furthermore, since our proposed methods rely on a proximal operator of distributed PGO, the convergence rate can be significantly accelerated with Nesterov's method, and more importantly, the acceleration induces no compromise of theoretical guarantees. In addition, we also present accelerated majorization minimization methods for the distributed chordal initialization that have quadratic convergence, which can be used to compute an initial guess for distributed PGO. The efficacy of this work is validated through applications on a number of 2D and 3D SLAM datasets and comparisons with existing state-of-the-art methods, which indicate that our proposed methods have faster convergence and result in better solutions to distributed PGO.

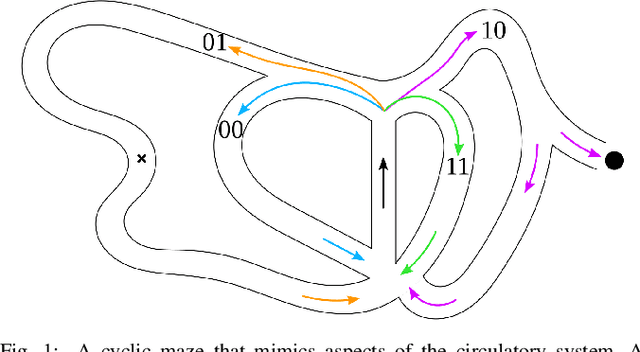

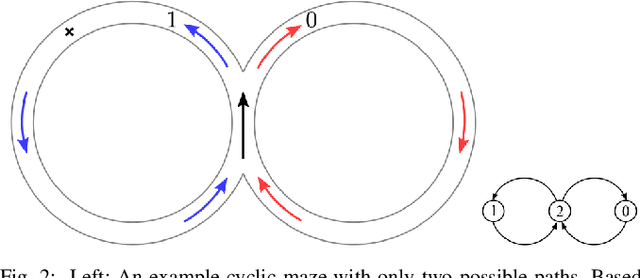

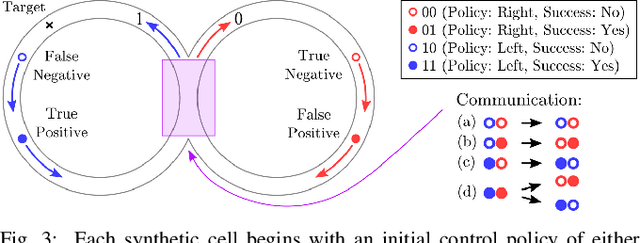

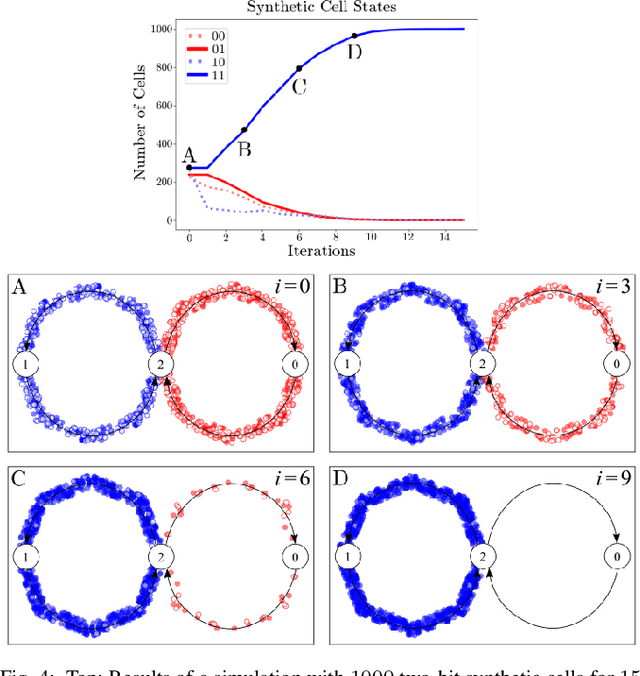

Bayesian Particles on Cyclic Graphs

Mar 08, 2020

Abstract: We consider the problem of designing synthetic cells to achieve a complex goal (e.g., mimicking the immune system by seeking invaders) in a complex environment (e.g., the circulatory system), where they might have to change their control policy, communicate with each other, and deal with stochasticity including false positives and negatives, all with minimal capabilities and only a few bits of memory. We simulate the immune response using cyclic, maze-like environments and use targets at unknown locations to represent invading cells. Using only a few bits of memory, the synthetic cells are programmed to perform a reinforcement learning-type algorithm with which they update their control policy based on randomized encounters with other cells. As the synthetic cells work together to find the target, their interactions as an ensemble function as a physical implementation of a Bayesian update. That is, the particles act as a particle filter. This result provides formal properties about the behavior of the synthetic cell ensemble that can be used to ensure robustness and safety. This method of simplified reinforcement learning is evaluated in simulations, and applied to an actual model of the human circulatory system.

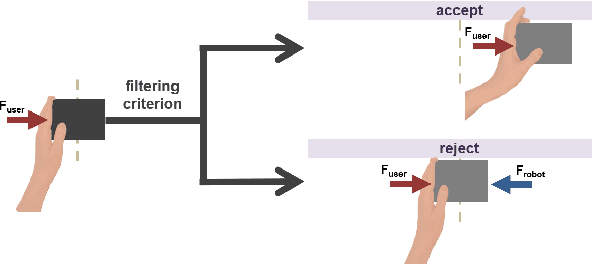

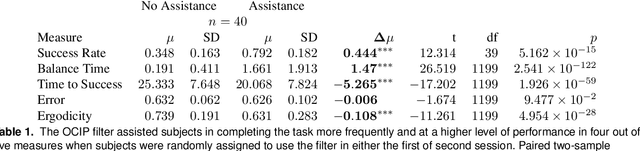

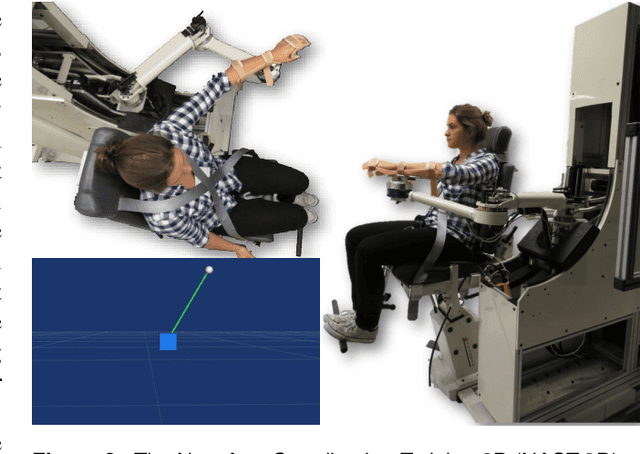

Task-Based Hybrid Shared Control for Training Through Forceful Interaction

Nov 18, 2019

Abstract: Despite the fact that robotic platforms can provide both consistent practice and objective assessments of users over the course of their training, there are relatively few instances where physical human-robot interaction has been significantly more effective than unassisted practice or human-mediated training. This paper describes a hybrid shared control robot, which enhances task learning through kinesthetic feedback. The assistance assesses user actions using a task-specific evaluation criterion and selectively accepts or rejects them at each time instant. Through two human subject studies (total n=68), we show that this hybrid approach of switching between full transparency and full rejection of user inputs leads to increased skill acquisition and short-term retention compared to unassisted practice. Moreover, we show that the shared control paradigm exhibits features previously shown to promote successful training. It avoids user passivity by only rejecting user actions and allowing failure at the task. It improves performance during assistance, providing meaningful task-specific feedback. It is sensitive to the initial skill of the user and behaves as an 'assist-as-needed' control scheme, adapting its engagement in real time based on the performance and needs of the user. Unlike other successful algorithms, it does not require explicit modulation of the level of impedance or error amplification during training, and it is permissive to a range of strategies because of its evaluation criterion. We demonstrate that the proposed hybrid shared control paradigm with a task-based minimal intervention criterion significantly enhances task-specific training.
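The accept-or-reject switching described in this abstract can be illustrated with a minimal sketch. The abstract does not state the evaluation criterion, so the one used here is an assumption made only for illustration: a user input is accepted when it does not oppose a hypothetical reference input `reference_u`, and is otherwise replaced with zero. The function name `filter_user_input` is likewise hypothetical.

```python
def filter_user_input(user_u, reference_u):
    """Pass the user's input through unchanged or reject it entirely.

    Assumed criterion (not taken from the paper): accept when the inner
    product of the user input and a reference input is non-negative.
    """
    alignment = sum(u * r for u, r in zip(user_u, reference_u))
    if alignment >= 0.0:
        return list(user_u)        # full transparency: input is untouched
    return [0.0] * len(user_u)     # full rejection: input is zeroed out


# One input roughly aligned with the reference, one opposing it.
accepted = filter_user_input([1.0, 0.5], [1.0, 0.0])
rejected = filter_user_input([-1.0, 0.5], [1.0, 0.0])
```

Because the filter never adds input of its own, a user who does nothing gets no help, which matches the abstract's point about avoiding user passivity.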

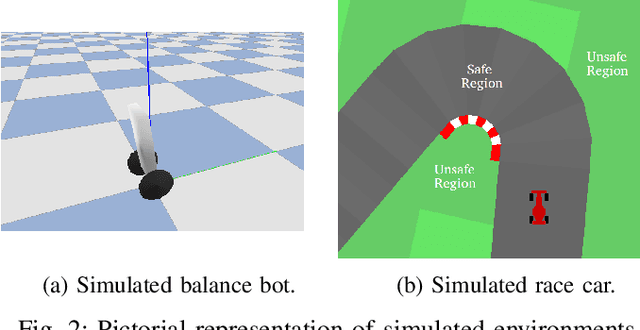

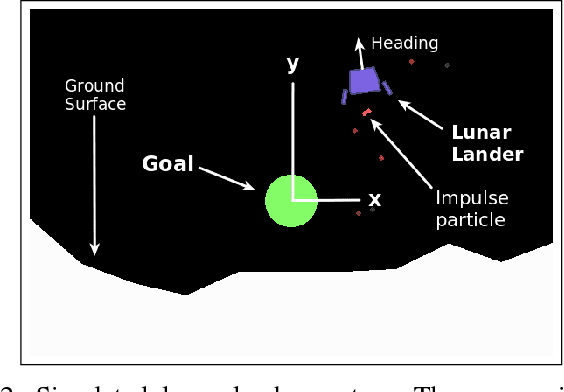

Highly Parallelized Data-driven MPC for Minimal Intervention Shared Control

Jun 05, 2019

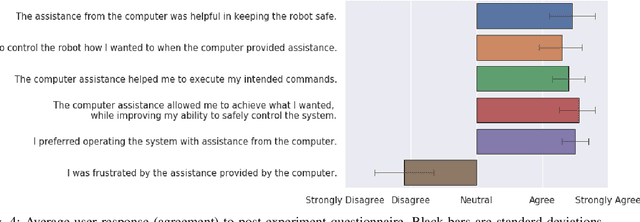

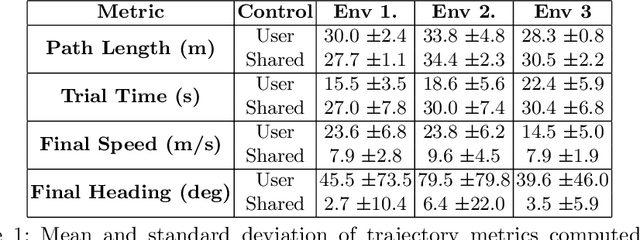

Abstract: We present a shared control paradigm that improves a user's ability to operate complex, dynamic systems in potentially dangerous environments without a priori knowledge of the user's objective. In this paradigm, the role of the autonomous partner is to improve the general safety of the system without constraining the user's ability to achieve unspecified behaviors. Our approach relies on a data-driven, model-based representation of the joint human-machine system to evaluate, in parallel, a significant number of potential inputs that the user may wish to provide. These samples are used to (1) predict the safety of the system over a receding horizon, and (2) minimize the influence of the autonomous partner. The resulting shared control algorithm maximizes the authority allocated to the human partner to improve their sense of agency, while improving safety. We evaluate the efficacy of our shared control algorithm with a human subjects study (n=20) conducted in two simulated environments: a balance bot and a race car. During the experiment, users are free to operate each system however they would like (i.e., there is no specified task) and are only asked to try to avoid unsafe regions of the state space. Using modern computational resources (i.e., GPUs), our approach is able to consider more than 10,000 potential trajectories at each time step in a control loop running at 100 Hz for the balance bot and 60 Hz for the race car. The results of the study show that our shared control paradigm improves system safety without knowledge of the user's goal, while maintaining high levels of user satisfaction and low levels of frustration. Our code is available online at https://github.com/asbroad/mpmi_shared_control.

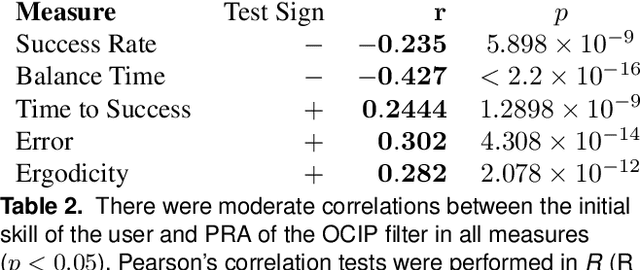

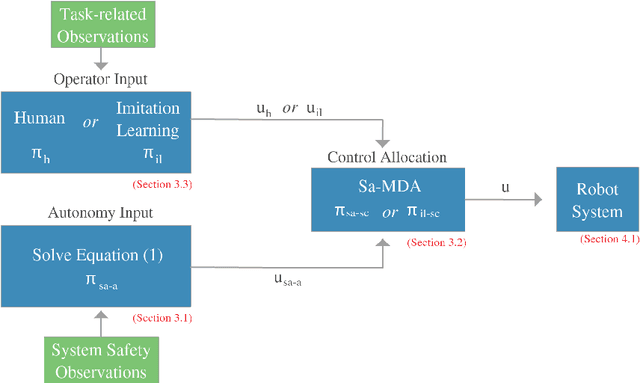

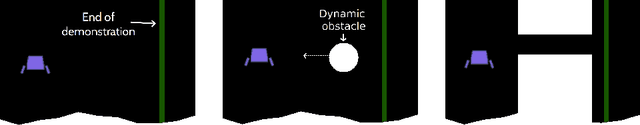

Operation and Imitation under Safety-Aware Shared Control

May 26, 2019

Abstract: We describe a shared control methodology that can, without knowledge of the task, be used to improve a human's control of a dynamic system, be used as a training mechanism, and be used in conjunction with Imitation Learning to generate autonomous policies that recreate novel behaviors. Our algorithm introduces autonomy that assists the human partner by enforcing safety and stability constraints. The autonomous agent has no a priori knowledge of the desired task and therefore only adds control information when there is concern for the safety of the system. We evaluate the efficacy of our approach with a human subjects study consisting of 20 participants. We find that our shared control algorithm significantly improves the rate at which users are able to successfully execute novel behaviors. Experimental results suggest that the benefits of our safety-aware shared control algorithm also extend to the human partner's understanding of the system and their control skill. Finally, we demonstrate how a combination of our safety-aware shared control algorithm and Imitation Learning can be used to autonomously recreate the demonstrated behaviors.

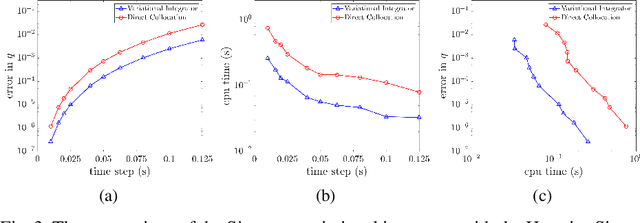

Efficient Computation of Higher-Order Variational Integrators in Robotic Simulation and Trajectory Optimization

Apr 29, 2019

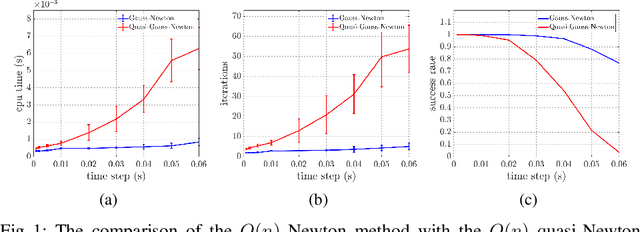

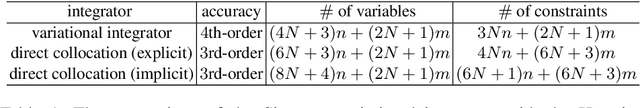

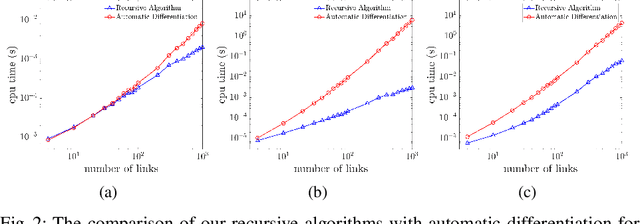

Abstract: This paper addresses the problem of efficiently computing higher-order variational integrators in simulation and trajectory optimization of mechanical systems such as those often found in robotic applications. We develop $O(n)$ algorithms to evaluate the discrete Euler-Lagrange (DEL) equations and compute the Newton direction for solving the DEL equations, which results in linear-time variational integrators of arbitrarily high order. To our knowledge, no linear-time higher-order variational or even implicit integrators have been developed before. Moreover, an $O(n^2)$ algorithm to linearize the DEL equations is presented, which is useful for trajectory optimization. These proposed algorithms eliminate the bottleneck of implementing higher-order variational integrators in simulation and trajectory optimization of complex robotic systems. The efficacy of this work is validated through comparison with existing methods and implementation on various robotic systems, including trajectory optimization of the Spring Flamingo robot, the LittleDog robot and the Atlas robot. The results illustrate that the same integrator can be used for simulation and trajectory optimization in robotics, preserving mechanical properties while achieving good scalability and accuracy.

* 42 pages, includes appendix

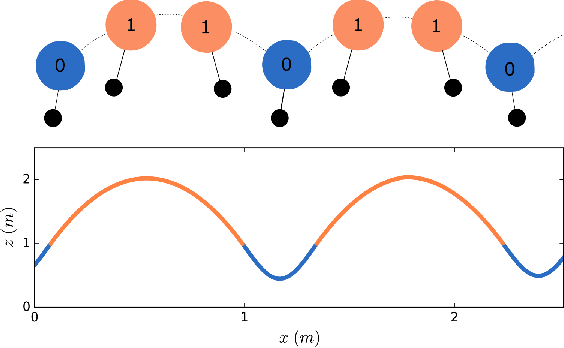

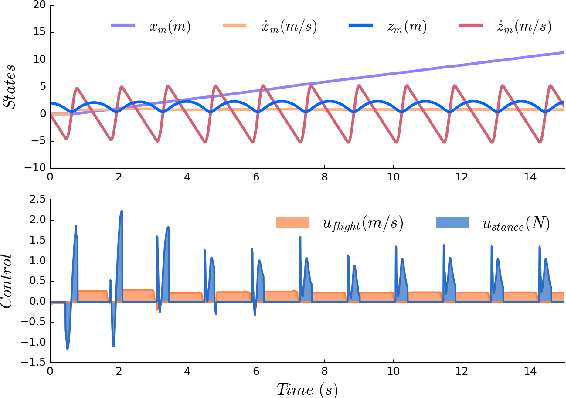

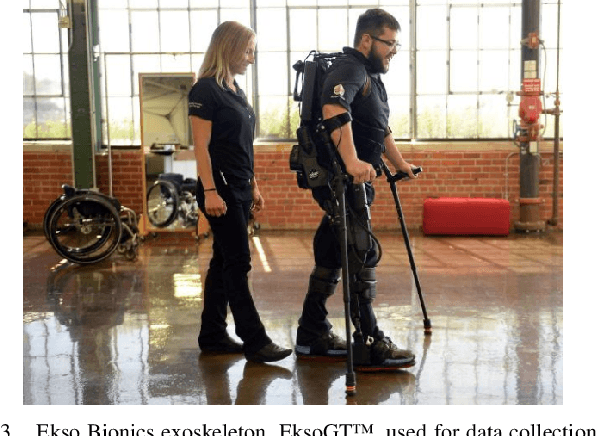

Data-Driven Gait Segmentation for Walking Assistance in a Lower-Limb Assistive Device

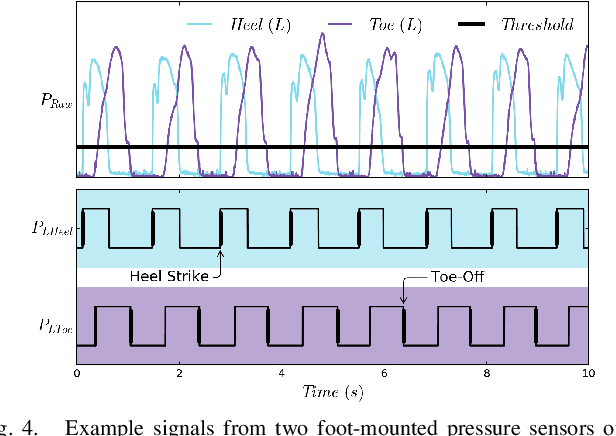

Feb 28, 2019

Abstract: Hybrid systems, such as bipedal walkers, are challenging to control because of discontinuities in their nonlinear dynamics. Little can be predicted about the systems' evolution without modeling the guard conditions that govern transitions between hybrid modes, so even systems with reliable state sensing can be difficult to control. We propose an algorithm that allows for determining the hybrid mode of a system in real time using data-driven analysis. The algorithm is used with data-driven dynamics identification to enable model predictive control based entirely on data. Two examples, a simulated hopper and experimental data from a bipedal walker, are used. In the context of the first example, we are able to closely approximate the dynamics of a hybrid SLIP model and then successfully use them for control in simulation. In the second example, we demonstrate gait partitioning of human walking data, accurately differentiating between stance and swing, as well as selected subphases of swing. We identify contact events, such as heel strike and toe-off, without a contact sensor using only kinematics data from the knee and hip joints, which could be particularly useful in providing online assistance during walking. Our algorithm does not assume a predefined gait structure or gait phase transitions, lending itself to segmentation of both healthy and pathological gaits. With this flexibility, impairment-specific rehabilitation strategies or assistance could be designed.

* 7 pages

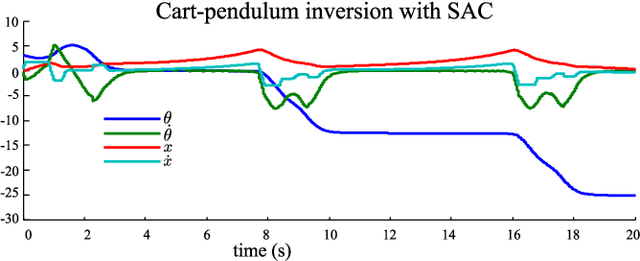

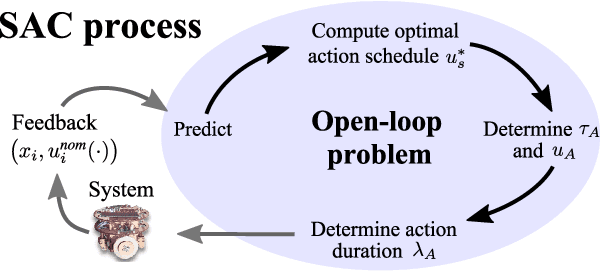

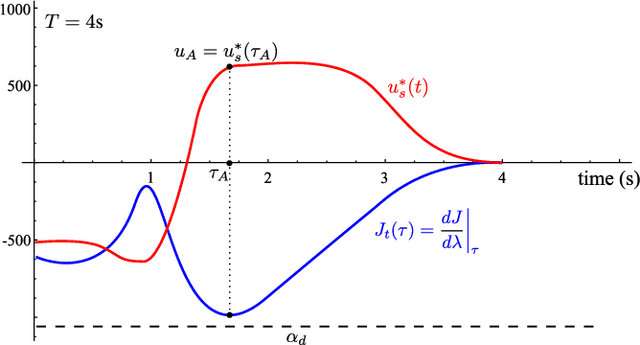

Iterative Sequential Action Control for Stable, Model-Based Control of Nonlinear Systems

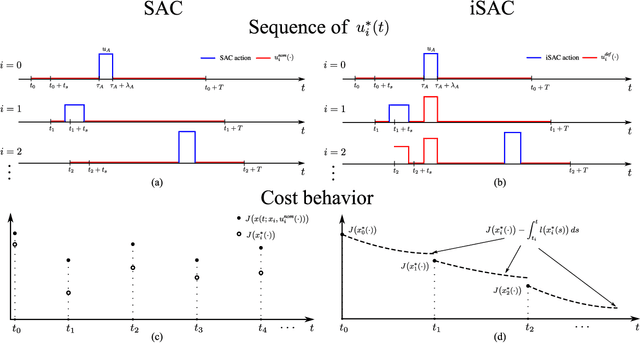

Oct 26, 2018

Abstract: This paper presents iterative Sequential Action Control (iSAC), a receding horizon approach for control of nonlinear systems. The iSAC method has a closed-form open-loop solution, which is iteratively updated between time steps by introducing constant control values applied for a short duration. Application of a contractive constraint on the cost is shown to lead to closed-loop asymptotic stability under mild assumptions. The effect of asymptotically decaying disturbances on system trajectories is also examined. To demonstrate the applicability of iSAC to a variety of systems and conditions, we employ five different systems, including a 13-dimensional quaternion-based quadrotor. Each system is tested in different scenarios, ranging from feasible and infeasible trajectory tracking to setpoint stabilization, with or without the presence of external disturbances. Finally, limitations of this work are discussed.

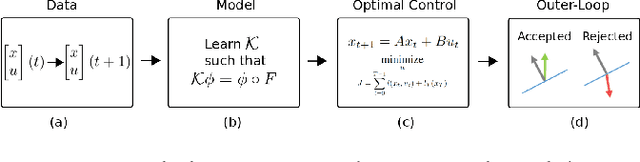

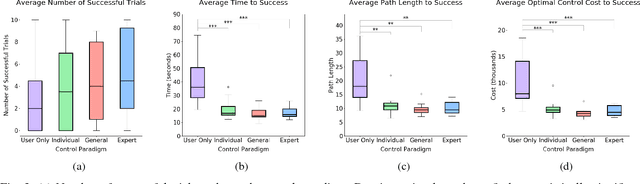

Learning Models for Shared Control of Human-Machine Systems with Unknown Dynamics

Aug 24, 2018

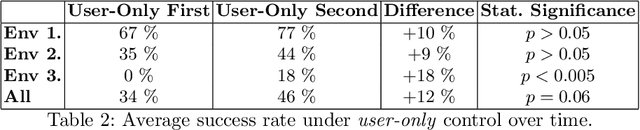

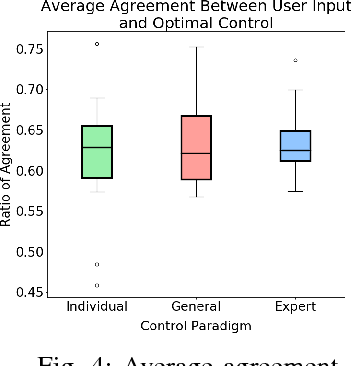

Abstract: We present a novel approach to shared control of human-machine systems. Our method assumes no a priori knowledge of the system dynamics. Instead, we learn both the dynamics and information about the user's interaction from observation through the use of the Koopman operator. Using the learned model, we define an optimization problem to compute the optimal policy for a given task, and compare the user input to the optimal input. We demonstrate the efficacy of our approach with a user study. We also analyze the individual nature of the learned models by comparing the effectiveness of our approach when the demonstration data comes from a user's own interactions, from the interactions of a group of users, and from a domain expert. Positive results include statistically significant improvements on task metrics when comparing a user-only control paradigm with our shared control paradigm. Surprising results include findings that suggest that individualizing the model based on a user's own data does not affect the ability to learn a useful dynamic system. We explore this tension as it relates to developing human-in-the-loop systems further in the discussion.
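As a rough illustration of learning dynamics from observation with a Koopman operator, the sketch below fits a finite-dimensional approximation by least squares over snapshot pairs. The abstract does not specify the basis functions or the fitting procedure, so everything here is an assumption: the function `fit_koopman`, the identity basis, and the toy linear system `A` are hypothetical and are not taken from the paper.

```python
import numpy as np


def fit_koopman(states, next_states, basis):
    """Least-squares fit of K such that basis(x_next) ≈ K @ basis(x).

    `basis` maps one state vector to a vector of observables
    (an assumed, user-supplied choice).
    """
    psi_x = np.array([basis(x) for x in states])       # shape (N, m)
    psi_y = np.array([basis(y) for y in next_states])  # shape (N, m)
    # Solve psi_x @ K.T ≈ psi_y in the least-squares sense.
    k_transposed, *_ = np.linalg.lstsq(psi_x, psi_y, rcond=None)
    return k_transposed.T


# Toy data: a known linear map, so the identity basis recovers it exactly.
A = np.array([[0.9, 0.1], [0.0, 0.8]])
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
Y = X @ A.T  # each row is A applied to the matching row of X
K = fit_koopman(X, Y, basis=lambda x: x)
```

For a nonlinear system, a richer basis (for example, polynomial terms of the state and the user input) would take the place of the identity map; the choice of basis largely determines how well the linear model predicts.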
