Takashi Fukuda

Granite-speech: open-source speech-aware LLMs with strong English ASR capabilities

May 14, 2025Abstract:Granite-speech LLMs are compact and efficient speech language models specifically designed for English ASR and automatic speech translation (AST). The models were trained by modality aligning the 2B and 8B parameter variants of granite-3.3-instruct to speech on publicly available open-source corpora containing audio inputs and text targets consisting of either human transcripts for ASR or automatically generated translations for AST. Comprehensive benchmarking shows that on English ASR, which was our primary focus, they outperform several competitors' models that were trained on orders of magnitude more proprietary data, and they keep pace on English-to-X AST for major European languages, Japanese, and Chinese. The speech-specific components are: a conformer acoustic encoder using block attention and self-conditioning trained with connectionist temporal classification, a windowed query-transformer speech modality adapter used to do temporal downsampling of the acoustic embeddings and map them to the LLM text embedding space, and LoRA adapters to further fine-tune the text LLM. Granite-speech-3.3 operates in two modes: in speech mode, it performs ASR and AST by activating the encoder, projector, and LoRA adapters; in text mode, it calls the underlying granite-3.3-instruct model directly (without LoRA), essentially preserving all the text LLM capabilities and safety. Both models are freely available on HuggingFace (https://huggingface.co/ibm-granite/granite-speech-3.3-2b and https://huggingface.co/ibm-granite/granite-speech-3.3-8b) and can be used for both research and commercial purposes under a permissive Apache 2.0 license.

Improving Generalization of Deep Neural Network Acoustic Models with Length Perturbation and N-best Based Label Smoothing

Mar 29, 2022

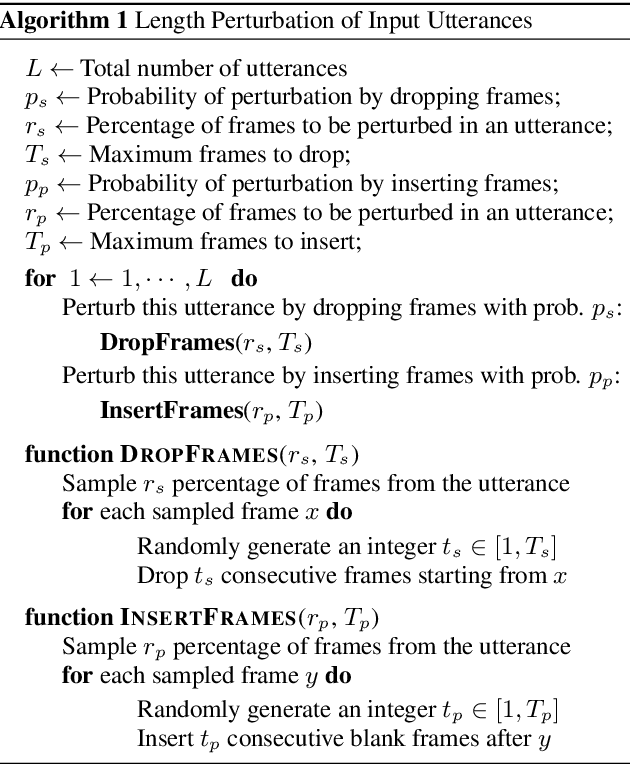

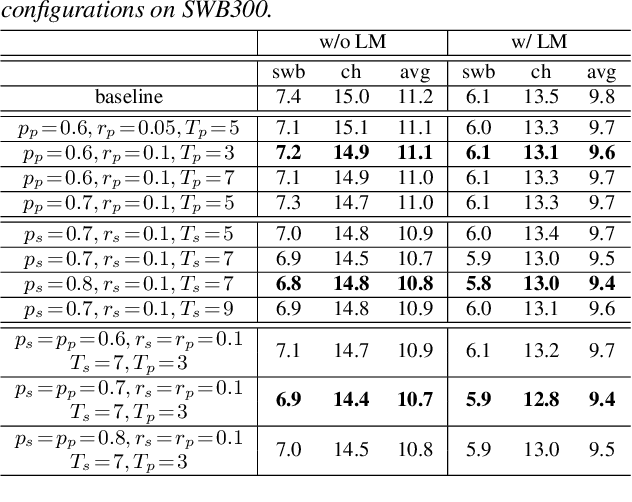

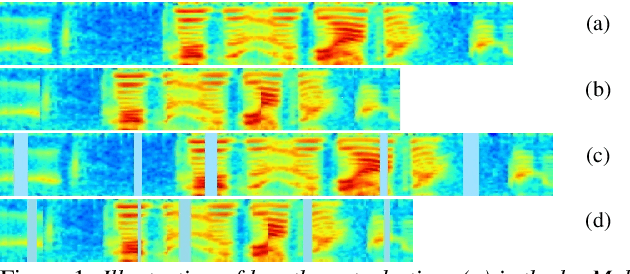

Abstract:We introduce two techniques, length perturbation and n-best based label smoothing, to improve generalization of deep neural network (DNN) acoustic models for automatic speech recognition (ASR). Length perturbation is a data augmentation algorithm that randomly drops and inserts frames of an utterance to alter the length of the speech feature sequence. N-best based label smoothing randomly injects noise to ground truth labels during training in order to avoid overfitting, where the noisy labels are generated from n-best hypotheses. We evaluate these two techniques extensively on the 300-hour Switchboard (SWB300) dataset and an in-house 500-hour Japanese (JPN500) dataset using recurrent neural network transducer (RNNT) acoustic models for ASR. We show that both techniques improve the generalization of RNNT models individually and they can also be complementary. In particular, they yield good improvements over a strong SWB300 baseline and give state-of-art performance on SWB300 using RNNT models.

Knowledge Distillation Leveraging Alternative Soft Targets from Non-Parallel Qualified Speech Data

Dec 16, 2021

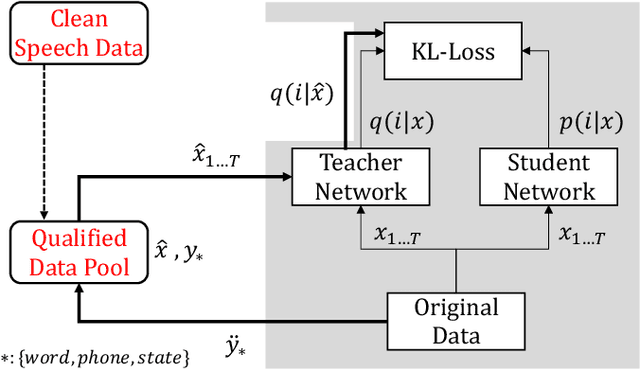

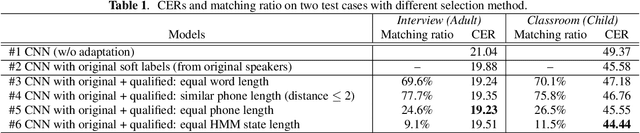

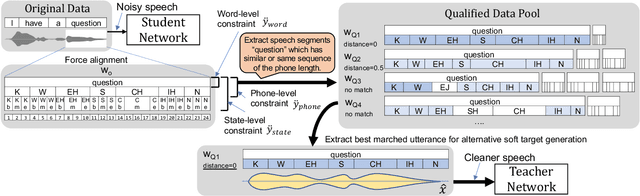

Abstract:This paper describes a novel knowledge distillation framework that leverages acoustically qualified speech data included in an existing training data pool as privileged information. In our proposed framework, a student network is trained with multiple soft targets for each utterance that consist of main soft targets from original speakers' utterance and alternative targets from other speakers' utterances spoken under better acoustic conditions as a secondary view. These qualified utterances from other speakers, used to generate better soft targets, are collected from a qualified data pool by using strict constraints in terms of word/phone/state durations. Our proposed method is a form of target-side data augmentation that creates multiple copies of data with corresponding better soft targets obtained from a qualified data pool. We show in our experiments under acoustic model adaptation settings that the proposed method, exploiting better soft targets obtained from various speakers, can further improve recognition accuracy compared with conventional methods using only soft targets from original speakers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge