Takafumi Aoki

Leveraging Intermediate Features of Vision Transformer for Face Anti-Spoofing

May 30, 2025

Abstract: Face recognition systems are designed to be robust against changes in head pose, illumination, and blurring during image capture. If a malicious person presents a face photo of the registered user, they may bypass the authentication process illegally. Such spoofing attacks need to be detected before face recognition. In this paper, we propose a spoofing attack detection method based on Vision Transformer (ViT) to detect minute differences between live and spoofed face images. The proposed method utilizes the intermediate features of ViT, which have a good balance between local and global features that are important for spoofing attack detection, for calculating loss in training and score in inference. The proposed method also introduces two data augmentation methods: face anti-spoofing data augmentation and patch-wise data augmentation, to improve the accuracy of spoofing attack detection. We demonstrate the effectiveness of the proposed method through experiments using the OULU-NPU and SiW datasets.

Stereo Radargrammetry Using Deep Learning from Airborne SAR Images

May 29, 2025

Abstract: In this paper, we propose a stereo radargrammetry method using deep learning from airborne Synthetic Aperture Radar (SAR) images. Deep learning-based methods are considered to suffer less from geometric image modulation, while there is no public SAR image dataset used to train such methods. We create a SAR image dataset and perform fine-tuning of a deep learning-based image correspondence method. The proposed method suppresses the degradation of image quality by pixel interpolation without ground projection of the SAR image and divides the SAR image into patches for processing, which makes it possible to apply deep learning. Through a set of experiments, we demonstrate that the proposed method exhibits a wider range and more accurate elevation measurements compared to conventional methods. The project web page is available at: https://gsisaoki.github.io/IGARSS2025_sasayama/

OB3D: A New Dataset for Benchmarking Omnidirectional 3D Reconstruction Using Blender

May 26, 2025

Abstract: Recent advancements in radiance field rendering, exemplified by Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS), have significantly progressed 3D modeling and reconstruction. The use of multiple 360-degree omnidirectional images for these tasks is increasingly favored due to advantages in data acquisition and comprehensive scene capture. However, the inherent geometric distortions in common omnidirectional representations, such as equirectangular projection (particularly severe in polar regions and varying with latitude), pose substantial challenges to achieving high-fidelity 3D reconstructions. Current datasets, while valuable, often lack the specific focus, scene composition, and ground truth granularity required to systematically benchmark and drive progress in overcoming these omnidirectional-specific challenges. To address this critical gap, we introduce Omnidirectional Blender 3D (OB3D), a new synthetic dataset curated for advancing 3D reconstruction from multiple omnidirectional images. OB3D features diverse and complex 3D scenes generated from Blender 3D projects, with a deliberate emphasis on challenging scenarios. The dataset provides comprehensive ground truth, including omnidirectional RGB images, precise omnidirectional camera parameters, and pixel-aligned equirectangular maps for depth and normals, alongside evaluation metrics. By offering a controlled yet challenging environment, OB3D aims to facilitate the rigorous evaluation of existing methods and prompt the development of new techniques to enhance the accuracy and reliability of 3D reconstruction from omnidirectional images.

ErpGS: Equirectangular Image Rendering enhanced with 3D Gaussian Regularization

May 26, 2025

Abstract: Multi-view images acquired by a 360-degree camera make it possible to reconstruct a 3D space over a wide area. There are 3D reconstruction methods from equirectangular images based on NeRF and 3DGS, as well as Novel View Synthesis (NVS) methods. On the other hand, it is necessary to overcome the large distortion caused by the projection model of a 360-degree camera when equirectangular images are used. In 3DGS-based methods, the large distortion of the 360-degree camera model generates extremely large 3D Gaussians, resulting in poor rendering accuracy. We propose ErpGS, an omnidirectional GS method based on 3DGS, to realize NVS that addresses these problems. ErpGS introduces several techniques to improve rendering accuracy: geometric regularization, scale regularization, distortion-aware weights, and a mask to suppress the effects of obstacles in equirectangular images. Through experiments on public datasets, we demonstrate that ErpGS can render novel view images more accurately than conventional methods.
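The abstract does not specify how the distortion-aware weights are defined. As a rough illustration only, the sketch below assumes one common choice for equirectangular images: weighting each pixel row by the cosine of its latitude, so that the heavily stretched polar rows contribute less to a loss. The function names `latitude_weights` and `weighted_l1` are hypothetical and are not taken from ErpGS.

```python
import math

def latitude_weights(height):
    """Per-row weights for an equirectangular image with `height` rows.

    Assumption (not from the abstract): weight = cos(latitude), where the
    top row is near +90 degrees and the bottom row is near -90 degrees.
    """
    weights = []
    for y in range(height):
        # Latitude of the centre of row y, in radians.
        lat = (0.5 - (y + 0.5) / height) * math.pi
        weights.append(math.cos(lat))
    return weights

def weighted_l1(pred, target):
    """Latitude-weighted mean absolute error between two grayscale images.

    Both images are lists of rows with identical shape.
    """
    weights = latitude_weights(len(pred))
    num = 0.0
    den = 0.0
    for w, pred_row, target_row in zip(weights, pred, target):
        for p, t in zip(pred_row, target_row):
            num += w * abs(p - t)
            den += w
    return num / den
```

With this weighting, an error near the equator costs more than the same error near a pole, which roughly compensates for the oversampling of polar regions in the equirectangular projection.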

Sparse2DGS: Sparse-View Surface Reconstruction using 2D Gaussian Splatting with Dense Point Cloud

May 26, 2025

Abstract: Gaussian Splatting (GS) has gained attention as a fast and effective method for novel view synthesis. It has also been applied to 3D reconstruction using multi-view images and can achieve fast and accurate 3D reconstruction. However, GS assumes that the input contains a large number of multi-view images, and therefore, the reconstruction accuracy significantly decreases when only a limited number of input images are available. One of the main reasons is the insufficient number of 3D points in the sparse point cloud obtained through Structure from Motion (SfM), which results in a poor initialization for optimizing the Gaussian primitives. We propose a new 3D reconstruction method, called Sparse2DGS, to enhance 2DGS in reconstructing objects using only three images. Sparse2DGS employs DUSt3R, a fundamental model for stereo images, along with COLMAP MVS to generate highly accurate and dense 3D point clouds, which are then used to initialize 2D Gaussians. Through experiments on the DTU dataset, we show that Sparse2DGS can accurately reconstruct the 3D shapes of objects using just three images.

Zero-Shot Pseudo Labels Generation Using SAM and CLIP for Semi-Supervised Semantic Segmentation

May 26, 2025

Abstract: Semantic segmentation is a fundamental task in medical image analysis and autonomous driving and has a problem with the high cost of annotating the labels required in training. To address this problem, semantic segmentation methods based on semi-supervised learning with a small number of labeled data have been proposed. For example, one approach is to train a semantic segmentation model using images with annotated labels and pseudo labels. In this approach, the accuracy of the semantic segmentation model depends on the quality of the pseudo labels, and the quality of the pseudo labels depends on the performance of the model to be trained and the amount of data with annotated labels. In this paper, we generate pseudo labels using zero-shot annotation with the Segment Anything Model (SAM) and Contrastive Language-Image Pretraining (CLIP), improve the accuracy of the pseudo labels using the Unified Dual-Stream Perturbations Approach (UniMatch), and use them as enhanced labels to train a semantic segmentation model. The effectiveness of the proposed method is demonstrated through the experiments using the public datasets: PASCAL and MS COCO.

Formula-Driven Data Augmentation and Partial Retinal Layer Copying for Retinal Layer Segmentation

Oct 02, 2024

Abstract: Major retinal layer segmentation methods from OCT images assume that the retina is flattened in advance, and thus cannot always deal with retinas that have changes in retinal structure due to ophthalmopathy and/or curvature due to myopia. To eliminate the need for flattening in retinal layer segmentation and make such methods more practical, we propose novel data augmentation methods for OCT images. Formula-driven data augmentation (FDDA) emulates a variety of retinal structures by vertically shifting each column of the OCT images according to a given mathematical formula. We also propose partial retinal layer copying (PRLC) that copies a part of the retinal layers and pastes it into a region outside the retinal layers. Through experiments using the OCT MS and Healthy Control dataset and the Duke Cyst DME dataset, we demonstrate that the use of FDDA and PRLC makes it possible to detect the boundaries of retinal layers without flattening, even with retinal layer segmentation methods that assume a flattened retina.
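The column-shifting idea behind FDDA can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the parabolic formula, the zero fill value, and the names `shift_columns` and `parabola_offsets` are all assumptions made for the example, since the abstract does not state which formulas are used.

```python
def shift_columns(image, offsets, fill=0):
    """Shift column x of a 2D image (list of rows) down by offsets[x] pixels.

    Negative offsets shift the column up. Pixels moved in from outside the
    image are set to `fill` (an assumption made for this sketch).
    """
    height = len(image)
    width = len(image[0])
    shifted = [[fill] * width for _ in range(height)]
    for x in range(width):
        dy = offsets[x]
        for y in range(height):
            src = y - dy  # source row that lands on row y after the shift
            if 0 <= src < height:
                shifted[y][x] = image[src][x]
    return shifted

def parabola_offsets(width, amplitude):
    """Hypothetical formula: zero shift at the centre column, growing
    quadratically to `amplitude` pixels at the left and right edges.
    Requires width > 1.
    """
    center = (width - 1) / 2
    return [round(amplitude * ((x - center) / center) ** 2) for x in range(width)]
```

In a segmentation setting, the same offsets would presumably be applied to the label map as well, so that each shifted image column stays aligned with its layer annotations.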

Multibiometrics Using a Single Face Image

Sep 30, 2024

Abstract: Multibiometrics, which uses multiple biometric traits to improve recognition performance instead of using only one biometric trait to authenticate individuals, has been investigated. Previous studies have combined individually acquired biometric traits or have not fully considered the convenience of the system. Focusing on a single face image, we propose a novel multibiometric method that combines five biometric traits, i.e., face, iris, periocular, nose, and eyebrow, that can be extracted from a single face image. The proposed method does not sacrifice the convenience of biometrics since only a single face image is used as input. Through a variety of experiments using the CASIA Iris Distance database, we demonstrate the effectiveness of the proposed multibiometrics method.
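The abstract does not say how the five traits are combined. One widely used option in multibiometrics is score-level fusion, where each trait produces its own matching score and the scores are merged into one decision value. The sketch below assumes that approach with a simple weighted sum; the function name `fuse_scores`, the weights, and the example scores are hypothetical and not taken from the paper.

```python
def fuse_scores(scores, weights=None):
    """Weighted-sum fusion of per-trait similarity scores.

    `scores` maps a trait name to a similarity score, assumed here to be
    already normalized to [0, 1]. `weights` maps trait names to
    non-negative weights; equal weights are used when it is omitted.
    """
    if weights is None:
        weights = {trait: 1.0 for trait in scores}
    total = sum(weights[trait] for trait in scores)
    return sum(weights[trait] * scores[trait] for trait in scores) / total

# Illustrative scores only, not results from the paper.
example = {"face": 0.9, "iris": 0.7, "periocular": 0.8, "nose": 0.6, "eyebrow": 0.5}
fused = fuse_scores(example)
```

The fused value would then be compared against a threshold to accept or reject the claimed identity.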

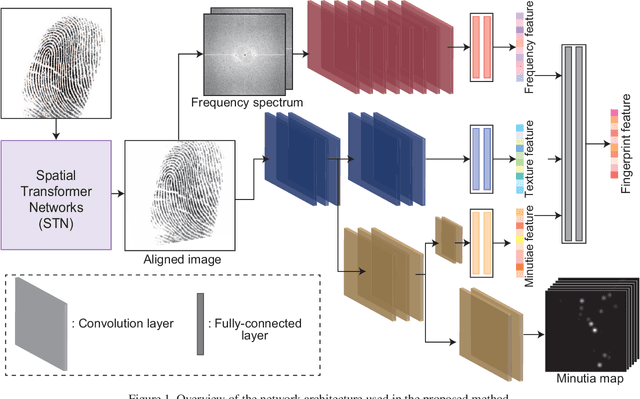

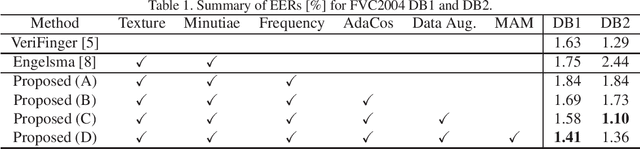

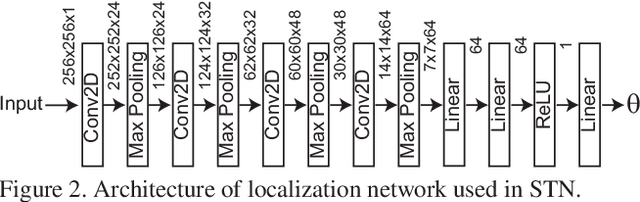

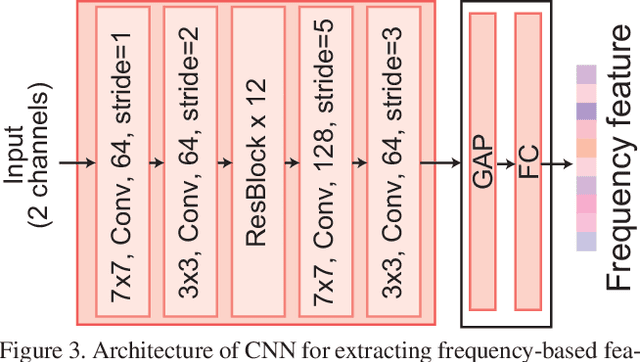

Fingerprint Feature Extraction by Combining Texture, Minutiae, and Frequency Spectrum Using Multi-Task CNN

Aug 27, 2020

Abstract: Although most fingerprint matching methods utilize minutia points and/or texture of fingerprint images as fingerprint features, the frequency spectrum is also a useful feature since a fingerprint is composed of ridge patterns with its inherent frequency band. We propose a novel CNN-based method for extracting fingerprint features from texture, minutiae, and frequency spectrum. In order to extract effective texture features from local regions around the minutiae, the minutia attention module is introduced to the proposed method. We also propose new data augmentation methods that take into account the characteristics of fingerprint images to increase the number of training images, since we train only on a public dataset that includes few fingerprint classes. Through a set of experiments using FVC2004 DB1 and DB2, we demonstrate that the proposed method exhibits efficient performance on fingerprint verification compared with commercial fingerprint matching software and a conventional method.