Taekyung Kim

TOAST: Trajectory Optimization and Simultaneous Tracking using Shared Neural Network Dynamics

Jan 21, 2022

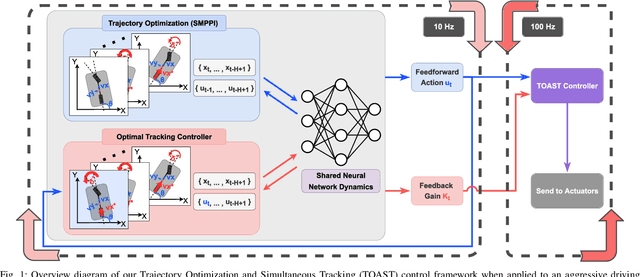

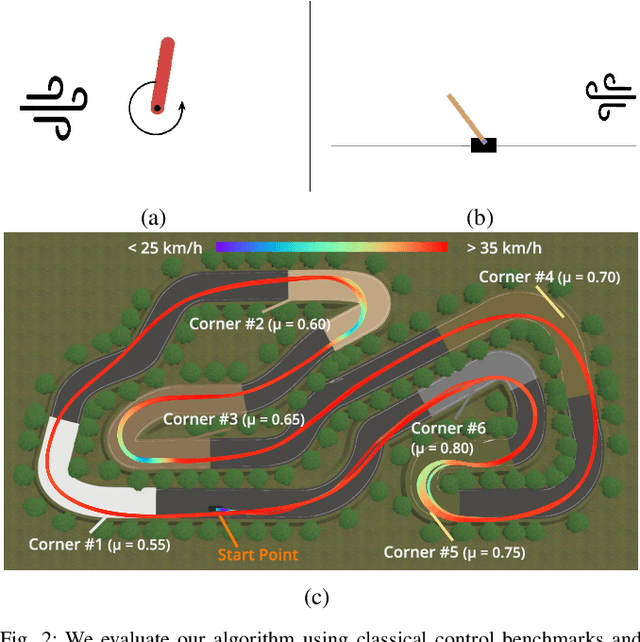

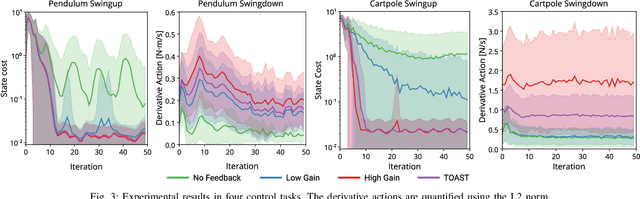

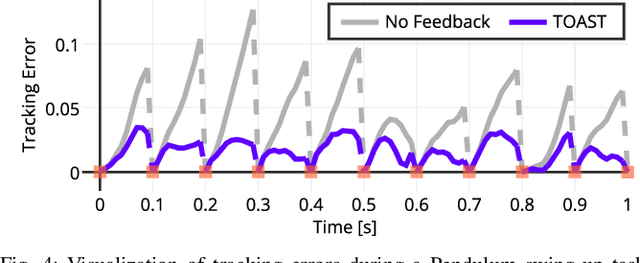

Abstract:Neural networks have been increasingly employed in Model Predictive Controller (MPC) to control nonlinear dynamic systems. However, MPC still poses a problem that an achievable update rate is insufficient to cope with model uncertainty and external disturbances. In this paper, we present a novel control scheme that can design an optimal tracking controller using the neural network dynamics of the MPC, making it possible to be applied as a plug-and-play extension for any existing model-based feedforward controller. We also describe how our method handles a neural network containing historical information, which does not follow a general form of dynamics. The proposed method is evaluated by its performance in classical control benchmarks with external disturbances. We also extend our control framework to be applied in an aggressive autonomous driving task with unknown friction. In all experiments, our method outperformed the compared methods by a large margin. Our controller also showed low control chattering levels, demonstrating that our feedback controller does not interfere with the optimal command of MPC.

Derivative Action Control: Smooth Model Predictive Path Integral Control without Smoothing

Jan 13, 2022

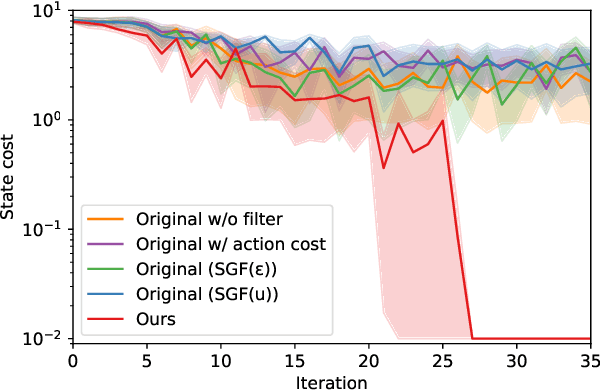

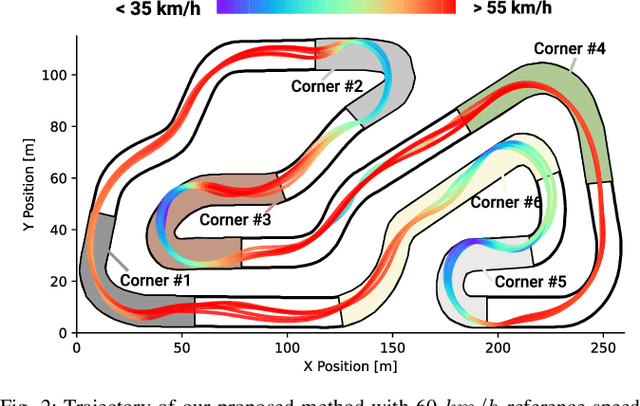

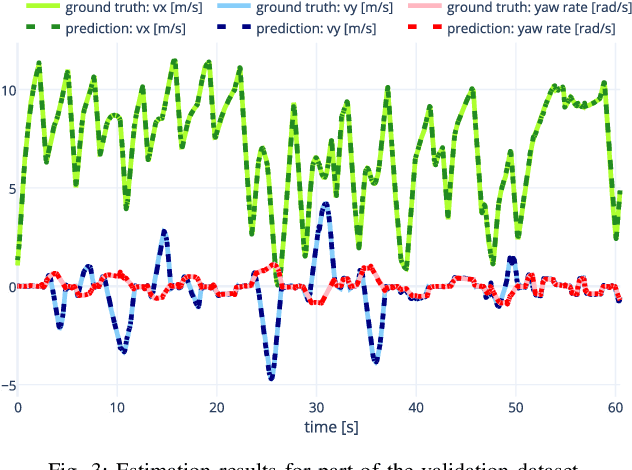

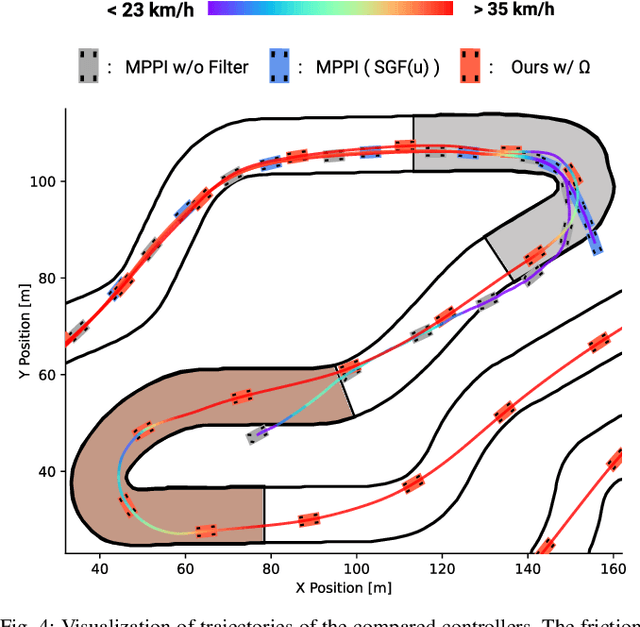

Abstract:Here, we present a new approach to generate smooth control sequences in Model Predictive Path Integral control (MPPI) tasks without any additional smoothing algorithms. Our method effectively alleviates the chattering in sampling, while the information theoretic derivation of MPPI remains the same. We demonstrated the proposed method in a challenging autonomous driving task with quantitative evaluation of different algorithms. A neural network vehicle model for estimating system dynamics under varying road friction conditions is also presented. Our video can be found at: https://youtu.be/o3Nmi0UJFqg.

Residual-Guided Learning Representation for Self-Supervised Monocular Depth Estimation

Nov 08, 2021

Abstract:Photometric consistency loss is one of the representative objective functions commonly used for self-supervised monocular depth estimation. However, this loss often causes unstable depth predictions in textureless or occluded regions due to incorrect guidance. Recent self-supervised learning approaches tackle this issue by utilizing feature representations explicitly learned from auto-encoders, expecting better discriminability than the input image. Despite the use of auto-encoded features, we observe that the method does not embed features as discriminative as auto-encoded features. In this paper, we propose residual guidance loss that enables the depth estimation network to embed the discriminative feature by transferring the discriminability of auto-encoded features. We conducted experiments on the KITTI benchmark and verified our method's superiority and orthogonality on other state-of-the-art methods.

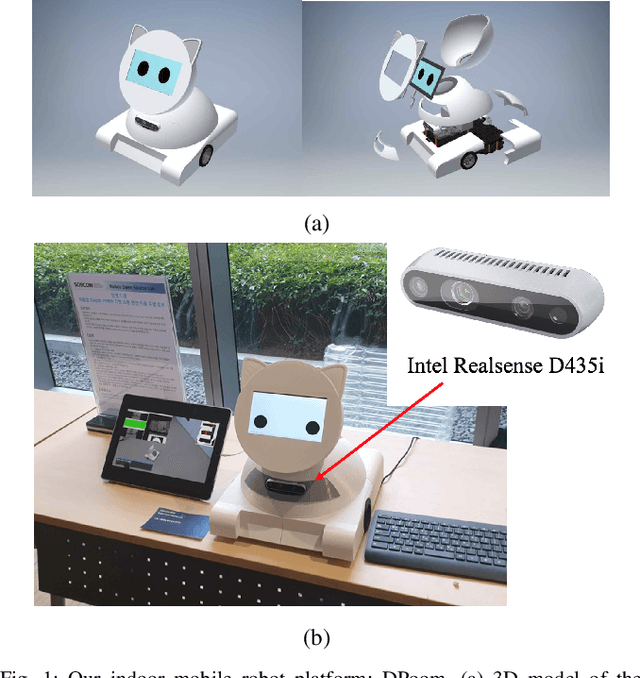

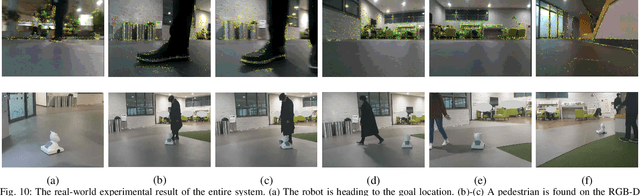

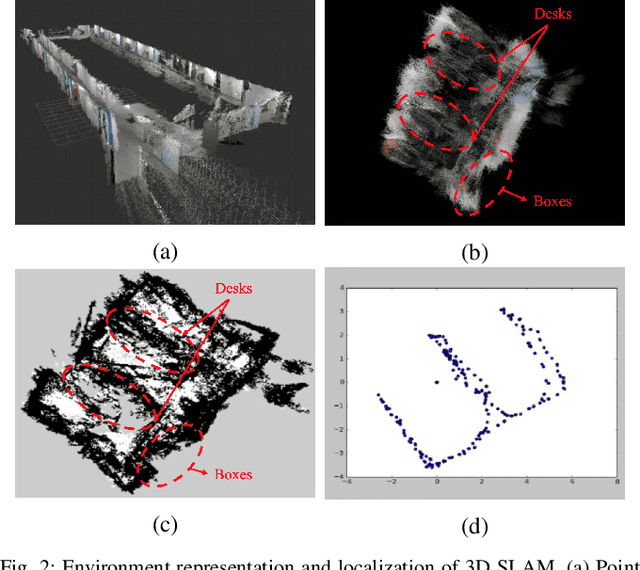

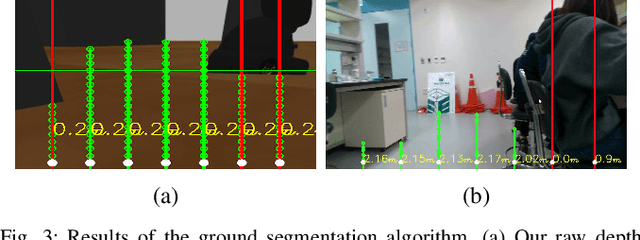

Real-Time Navigation System for a Low-Cost Mobile Robot with an RGB-D Camera

Mar 04, 2021

Abstract:Currently, mobile robots are developing rapidly and are finding numerous applications in industry. However, there remain a number of problems related to their practical use, such as the need for expensive hardware and their high power consumption levels. In this study, we propose a navigation system that is operable on a low-end computer with an RGB-D camera and a mobile robot platform for the operation of an integrated autonomous driving system. The proposed system does not require LiDARs or a GPU. Our raw depth image ground segmentation approach extracts a traversability map for the safe driving of low-body mobile robots. It is designed to guarantee real-time performance on a low-cost commercial single-board computer with integrated SLAM, global path planning, and motion planning. Running sensor data processing and other autonomous driving functions simultaneously, our navigation method issues control commands at a refresh rate of 18 Hz, whereas other systems have slower refresh rates. Our method outperforms current state-of-the-art navigation approaches as shown in 3D simulation tests. In addition, we demonstrate the applicability of our mobile robot system through successful autonomous driving in a residential lobby.

Meta Batch-Instance Normalization for Generalizable Person Re-Identification

Nov 30, 2020

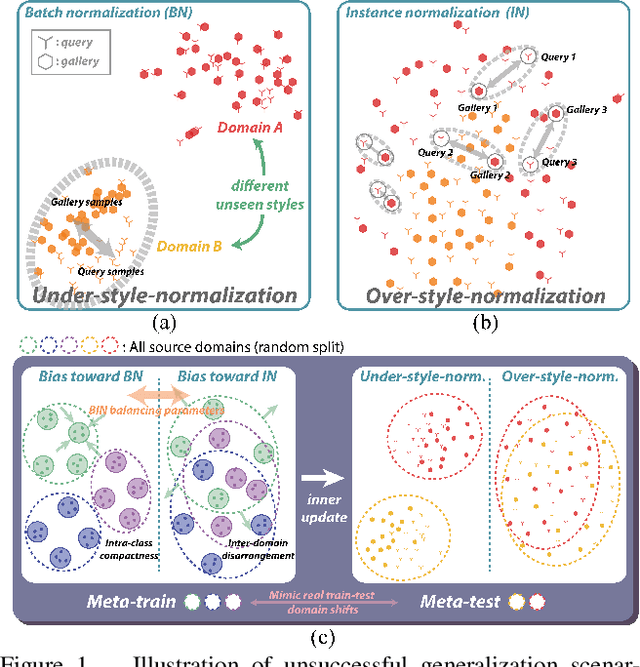

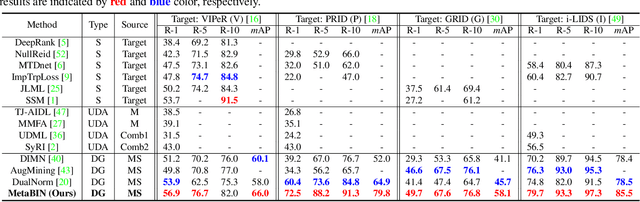

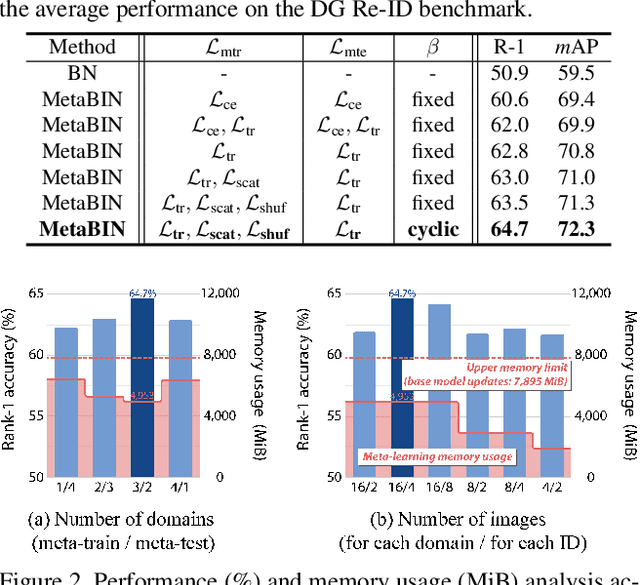

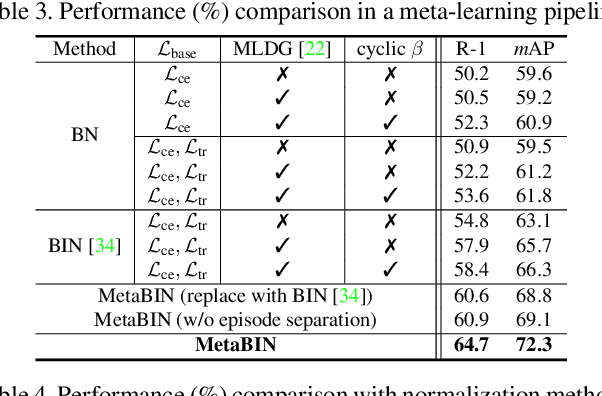

Abstract:Although supervised person re-identification (Re-ID) methods have shown impressive performance, they suffer from a poor generalization capability on unseen domains. Therefore, generalizable Re-ID has recently attracted growing attention. Many existing methods have employed an instance normalization technique to reduce style variations, but the loss of discriminative information could not be avoided. In this paper, we propose a novel generalizable Re-ID framework, named Meta Batch-Instance Normalization (MetaBIN). Our main idea is to generalize normalization layers by simulating unsuccessful generalization scenarios beforehand in the meta-learning pipeline. To this end, we combine learnable batch-instance normalization layers with meta-learning and investigate the challenging cases caused by both batch and instance normalization layers. Moreover, we diversify the virtual simulations via our meta-train loss accompanied by a cyclic inner-updating manner to boost generalization capability. Overall, the MetaBIN framework prevents our model from overfitting to the given source styles and improves the generalization capability to unseen domains without additional data augmentation or complicated network design. Extensive experimental results show that our model outperforms the state-of-the-art methods on the large-scale domain generalization Re-ID benchmark.
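
To make the "learnable batch-instance normalization" idea in the abstract above concrete, here is a minimal sketch of how such a layer might blend the two normalizations for a single channel. It assumes the common formulation in which a gate `rho` in [0, 1] interpolates between batch-normalized and instance-normalized activations; the function names, the data layout, and the gate clipping are illustrative assumptions, not the authors' implementation, and the meta-learning updates of the gate are not shown.

```python
def _stats(values):
    """Return the mean and (biased) variance of a flat list of numbers."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var


def _normalize(values, mean, var, eps=1e-5):
    """Standardize values with the given statistics."""
    return [(v - mean) / (var + eps) ** 0.5 for v in values]


def batch_instance_norm(channel, rho):
    """Blend batch and instance normalization for one channel.

    channel: list of samples, each a flat list of activations (hypothetical layout).
    rho:     gate value; 1.0 means pure batch norm, 0.0 means pure instance norm.
    """
    rho = min(1.0, max(0.0, rho))  # keep the gate in [0, 1] (assumed constraint)
    # Batch statistics pool every activation across all samples.
    b_mean, b_var = _stats([v for sample in channel for v in sample])
    out = []
    for sample in channel:
        # Instance statistics are computed per sample.
        i_mean, i_var = _stats(sample)
        bn = _normalize(sample, b_mean, b_var)
        inn = _normalize(sample, i_mean, i_var)
        out.append([rho * b + (1.0 - rho) * i for b, i in zip(bn, inn)])
    return out
```

With `rho = 0.0`, two samples that differ only by a constant offset (a crude stand-in for a style shift) map to the same output, while `rho = 1.0` preserves the difference between them. This is the trade-off between style invariance and discriminative information that the abstract describes.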

Attract, Perturb, and Explore: Learning a Feature Alignment Network for Semi-supervised Domain Adaptation

Jul 18, 2020

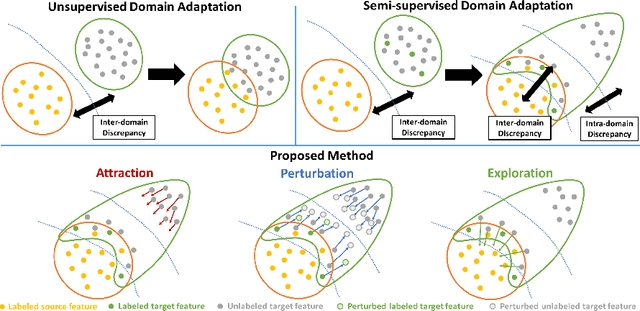

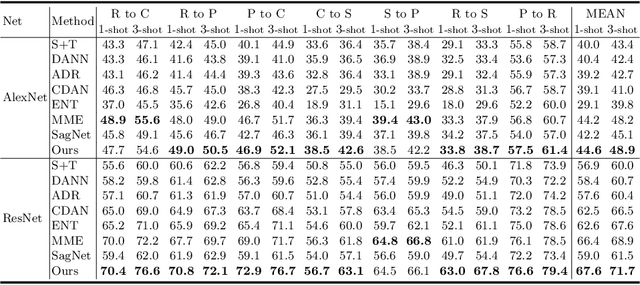

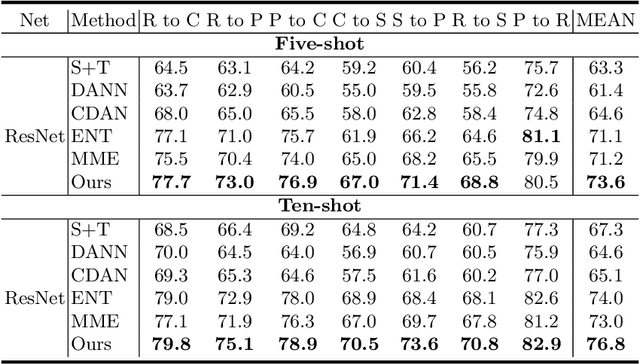

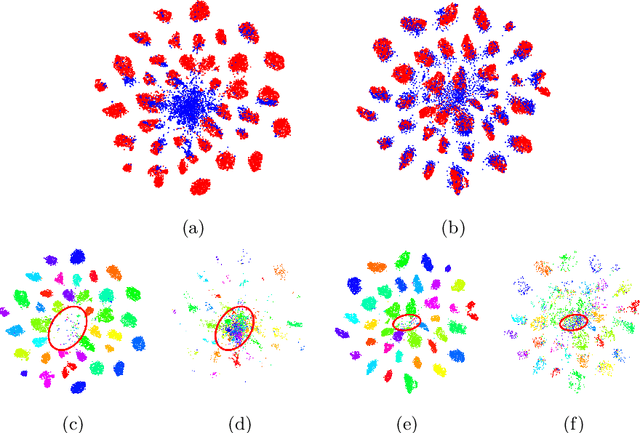

Abstract:Although unsupervised domain adaptation methods have been widely adopted across several computer vision tasks, it is more desirable if we can exploit a few labeled data from new domains encountered in a real application. The novel setting of the semi-supervised domain adaptation (SSDA) problem shares the challenges with the domain adaptation problem and the semi-supervised learning problem. However, a recent study shows that conventional domain adaptation and semi-supervised learning methods often result in less effective or negative transfer in the SSDA problem. In order to interpret the observation and address the SSDA problem, in this paper, we raise the intra-domain discrepancy issue within the target domain, which has never been discussed so far. Then, we demonstrate that addressing the intra-domain discrepancy leads to the ultimate goal of the SSDA problem. We propose an SSDA framework that aims to align features via alleviation of the intra-domain discrepancy. Our framework mainly consists of three schemes, i.e., attraction, perturbation, and exploration. First, the attraction scheme globally minimizes the intra-domain discrepancy within the target domain. Second, we demonstrate the incompatibility of the conventional adversarial perturbation methods with SSDA. Then, we present a domain adaptive adversarial perturbation scheme, which perturbs the given target samples in a way that reduces the intra-domain discrepancy. Finally, the exploration scheme locally aligns features in a class-wise manner complementary to the attraction scheme by selectively aligning unlabeled target features complementary to the perturbation scheme. We conduct extensive experiments on domain adaptation benchmark datasets such as DomainNet, Office-Home, and Office. Our method achieves state-of-the-art performances on all datasets.

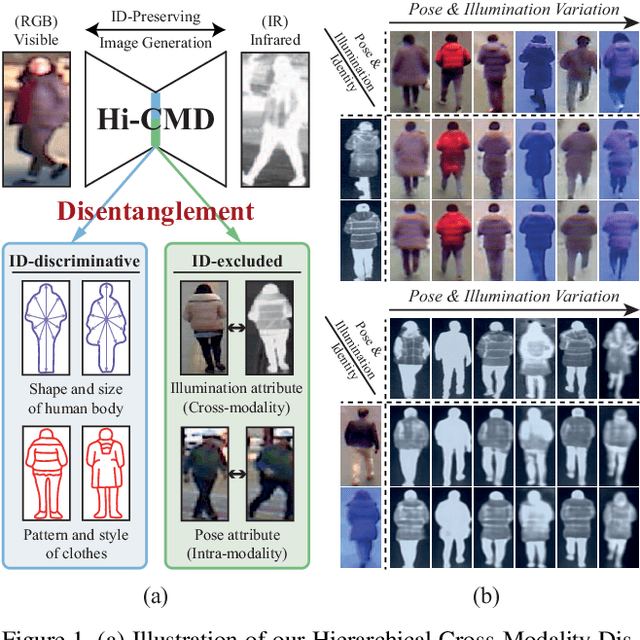

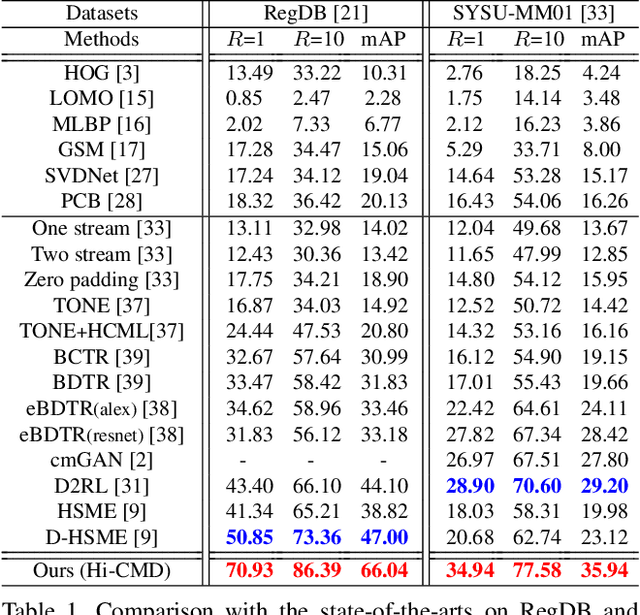

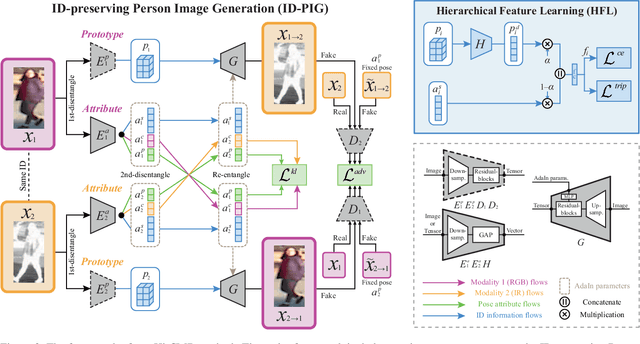

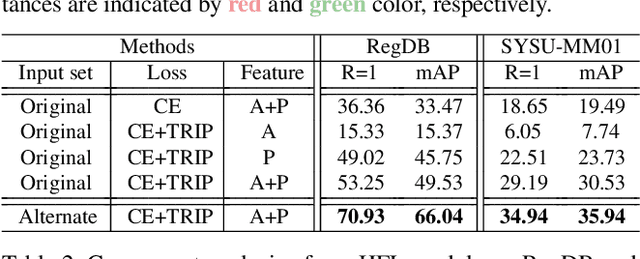

Hi-CMD: Hierarchical Cross-Modality Disentanglement for Visible-Infrared Person Re-Identification

Dec 03, 2019

Abstract:Visible-infrared person re-identification (VI-ReID) is an important task in night-time surveillance applications, since visible cameras struggle to capture valid appearance information under poor illumination conditions. Compared to traditional person re-identification that handles only the intra-modality discrepancy, VI-ReID suffers from additional cross-modality discrepancy caused by different types of imaging systems. To reduce both intra- and cross-modality discrepancies, we propose a Hierarchical Cross-Modality Disentanglement (Hi-CMD) method, which automatically disentangles ID-discriminative factors and ID-excluded factors from visible-thermal images. We only use ID-discriminative factors for robust cross-modality matching without ID-excluded factors such as pose or illumination. To implement our approach, we introduce an ID-preserving person image generation network and a hierarchical feature learning module. Our generation network learns the disentangled representation by generating a new cross-modality image with different poses and illuminations while preserving a person's identity. At the same time, the feature learning module enables our model to explicitly extract the common ID-discriminative characteristic between visible-infrared images. Extensive experimental results demonstrate that our method outperforms the state-of-the-art methods on two VI-ReID datasets.

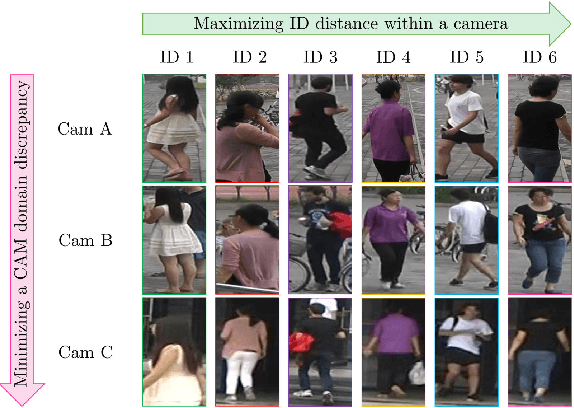

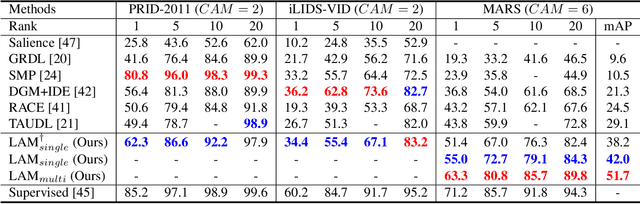

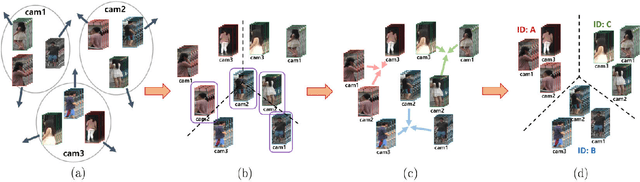

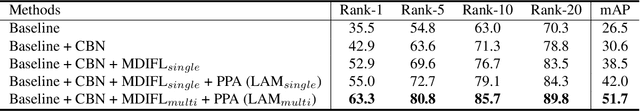

Learning to Align Multi-Camera Domain for Unsupervised Video Person Re-Identification

Oct 21, 2019

Abstract:Most video person re-identification (re-ID) methods are mainly based on supervised learning, which requires laborious cross-camera ID labeling. Due to this limitation, it is difficult to increase the number of cameras for constructing a large camera network. In this paper, we address the person ID labeling issue by presenting novel deep representation learning without ID information across multiple cameras. Specifically, our method consists of both inter- and intra-camera feature learning techniques. We maximize feature distances between people within a camera. At the same time, considering each camera as a different domain, we apply domain adversarial learning across multiple camera views for minimizing camera domain discrepancy. To further enhance our approach, we propose person part-level adaptation to effectively perform multi-camera domain invariant feature learning at different spatial regions. We carry out comprehensive experiments on four public re-ID datasets (i.e., PRID-2011, iLIDS-VID, MARS, and Market1501). Our method outperforms state-of-the-art methods by a large margin of about 20% in terms of rank-1 accuracy on the large-scale MARS dataset.

RPM-Net: Robust Pixel-Level Matching Networks for Self-Supervised Video Object Segmentation

Oct 10, 2019

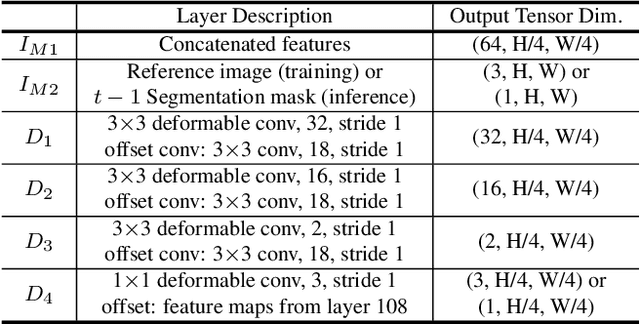

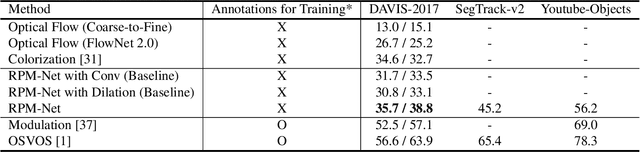

Abstract:In this paper, we introduce a self-supervised approach for video object segmentation without human-labeled data. Specifically, we present Robust Pixel-level Matching Networks (RPM-Net), a novel deep architecture that matches pixels between adjacent frames, using only color information from unlabeled videos for training. Technically, RPM-Net can be separated into two main modules. The embedding module first projects input images into a high-dimensional embedding space. Then the matching module with deformable convolution layers matches pixels between reference and target frames based on the embedding features. Unlike previous methods using deformable convolution, our matching module adopts deformable convolution to focus on similar features in spatio-temporally neighboring pixels. Our experiments show that the selective feature sampling improves the robustness to challenging problems in video object segmentation such as camera shake, fast motion, deformation, and occlusion. Also, we carry out comprehensive experiments on three public datasets (i.e., DAVIS-2017, SegTrack-v2, and Youtube-Objects) and achieve state-of-the-art performance on self-supervised video object segmentation. Moreover, we significantly reduce the performance gap between self-supervised and fully-supervised video object segmentation (41.0% vs. 52.5% on DAVIS-2017 validation set).

CNN-based Semantic Segmentation using Level Set Loss

Oct 02, 2019

Abstract:These days, Convolutional Neural Networks are widely used in semantic segmentation. However, since CNN-based segmentation networks produce low-resolution outputs with rich semantic information, it is inevitable that spatial details (e.g., small objects and fine boundary information) of segmentation results will be lost. To address this problem, motivated by a variational approach to image segmentation (i.e., level set theory), we propose a novel loss function called the level set loss which is designed to refine spatial details of segmentation results. To deal with multiple classes in an image, we first decompose the ground truth into binary images. Note that each binary image consists of background and regions belonging to a class. Then we convert level set functions into class probability maps and calculate the energy for each class. The network is trained to minimize the weighted sum of the level set loss and the cross-entropy loss. The proposed level set loss improves the spatial details of segmentation results in a time and memory efficient way. Furthermore, our experimental results show that the proposed loss function achieves better performance than previous approaches.
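
The training objective outlined in the abstract above (per-class binary decomposition of the ground truth, a per-class energy, and a weighted sum with cross-entropy) could look roughly like the sketch below. The abstract does not give the energy formula, so this sketch assumes a Chan-Vese-style region energy evaluated on the binary ground-truth image with the class probability map as a soft region indicator. The function names, the `weight` value, and the list-of-rows data layout are all hypothetical.

```python
import math


def binary_masks(labels, num_classes):
    """Decompose a label map (list of rows) into one binary image per class."""
    return [[[1.0 if v == k else 0.0 for v in row] for row in labels]
            for k in range(num_classes)]


def region_energy(mask, prob, eps=1e-8):
    """Chan-Vese-style energy for one class (an assumed form, see lead-in).

    mask: binary ground-truth image for the class.
    prob: predicted class probability map, used as a soft inside/outside indicator.
    """
    g = [v for row in mask for v in row]
    p = [v for row in prob for v in row]
    # Soft averages of the binary image inside and outside the predicted region.
    c_in = sum(gi * pi for gi, pi in zip(g, p)) / (sum(p) + eps)
    c_out = (sum(gi * (1.0 - pi) for gi, pi in zip(g, p))
             / (sum(1.0 - pi for pi in p) + eps))
    return sum((gi - c_in) ** 2 * pi + (gi - c_out) ** 2 * (1.0 - pi)
               for gi, pi in zip(g, p))


def cross_entropy(labels, probs, eps=1e-8):
    """Mean pixel-wise cross-entropy; probs[k][i][j] is the class-k probability."""
    total, count = 0.0, 0
    for i, row in enumerate(labels):
        for j, k in enumerate(row):
            total -= math.log(probs[k][i][j] + eps)
            count += 1
    return total / count


def total_loss(labels, probs, weight=0.1):
    """Weighted sum of cross-entropy and the summed per-class energies."""
    masks = binary_masks(labels, len(probs))
    level_set = sum(region_energy(m, p) for m, p in zip(masks, probs))
    return cross_entropy(labels, probs) + weight * level_set
```

Under this assumed energy, a prediction that matches the ground truth exactly drives both terms to approximately zero, whereas a uniform prediction is penalized by both terms.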
