Szymon Kosakowski

Generalized Proof-Number Monte-Carlo Tree Search

Jun 16, 2025Abstract:This paper presents Generalized Proof-Number Monte-Carlo Tree Search: a generalization of recently proposed combinations of Proof-Number Search (PNS) with Monte-Carlo Tree Search (MCTS), which use (dis)proof numbers to bias UCB1-based Selection strategies towards parts of the search that are expected to be easily (dis)proven. We propose three core modifications of prior combinations of PNS with MCTS. First, we track proof numbers per player. This reduces code complexity in the sense that we no longer need disproof numbers, and generalizes the technique to be applicable to games with more than two players. Second, we propose and extensively evaluate different methods of using proof numbers to bias the selection strategy, achieving strong performance with strategies that are simpler to implement and compute. Third, we merge our technique with Score Bounded MCTS, enabling the algorithm to prove and leverage upper and lower bounds on scores - as opposed to only proving wins or not-wins. Experiments demonstrate substantial performance increases, reaching the range of 80% for 8 out of the 11 tested board games.

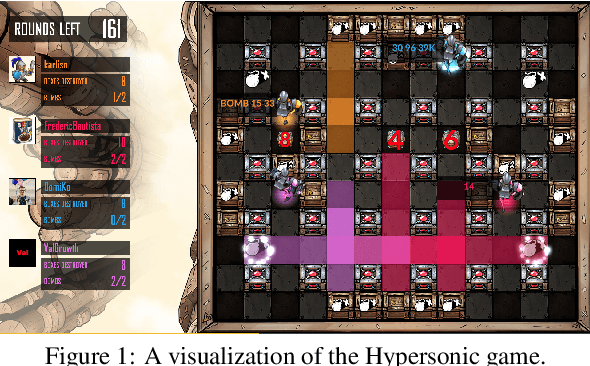

Developing a Successful Bomberman Agent

Mar 17, 2022

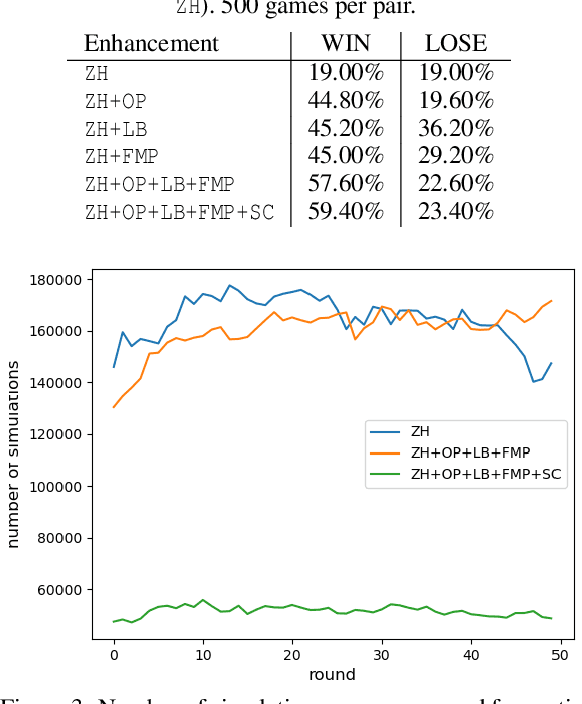

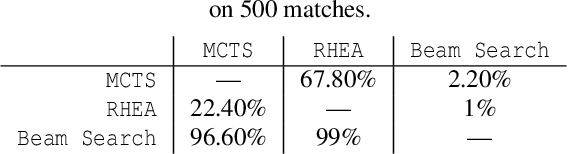

Abstract:In this paper, we study AI approaches to successfully play a 2-4 players, full information, Bomberman variant published on the CodinGame platform. We compare the behavior of three search algorithms: Monte Carlo Tree Search, Rolling Horizon Evolution, and Beam Search. We present various enhancements leading to improve the agents' strength that concern search, opponent prediction, game state evaluation, and game engine encoding. Our top agent variant is based on a Beam Search with low-level bit-based state representation and evaluation function heavy relying on pruning unpromising states based on simulation-based estimation of survival. It reached the top one position among the 2,300 AI agents submitted on the CodinGame arena.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge