Sven Kuckertz

Learn2Reg: comprehensive multi-task medical image registration challenge, dataset and evaluation in the era of deep learning

Dec 23, 2021

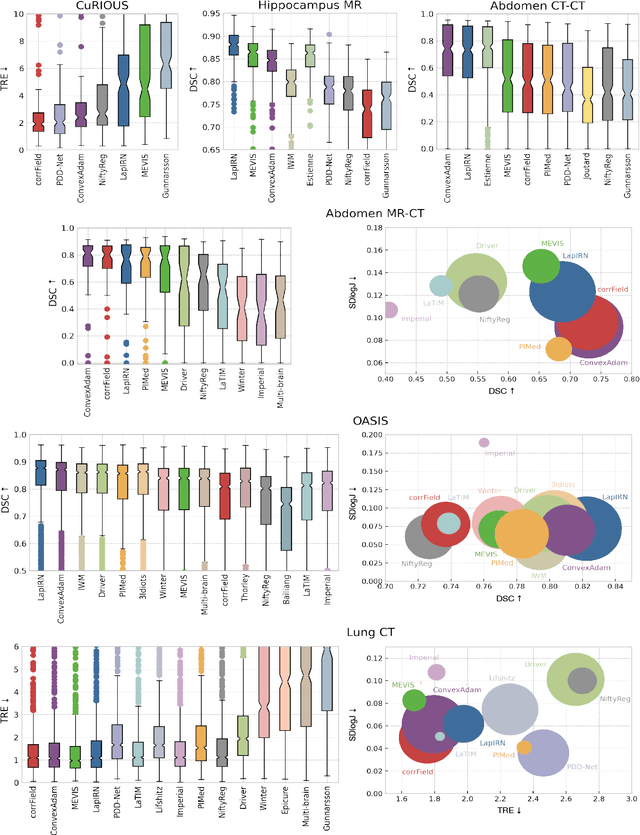

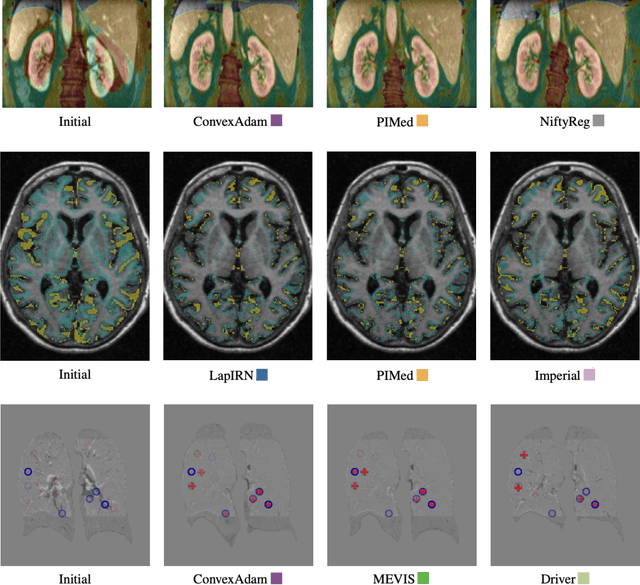

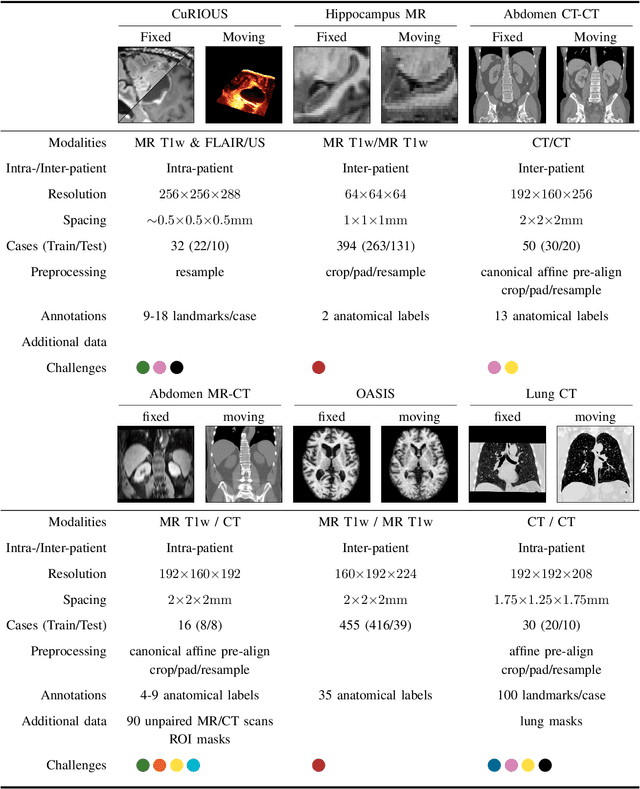

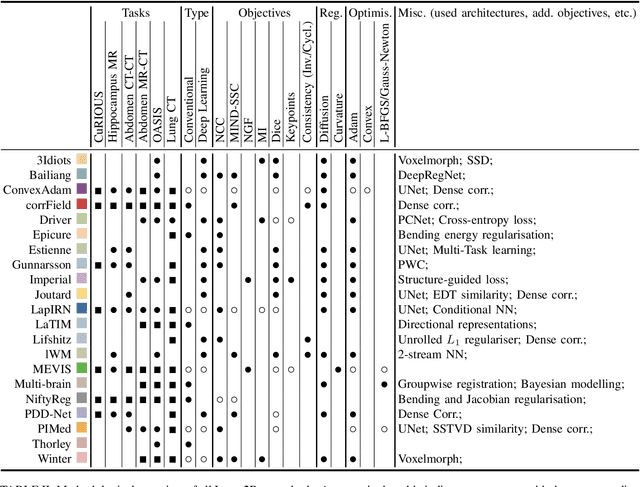

Abstract: Image registration is a fundamental medical image analysis task, and a wide variety of approaches have been proposed. However, only a few studies have comprehensively compared medical image registration approaches on a wide range of clinically relevant tasks, in part because of the lack of availability of such diverse data. This limits the development of registration methods, the adoption of research advances into practice, and a fair benchmark across competing approaches. The Learn2Reg challenge addresses these limitations by providing a multi-task medical image registration benchmark for comprehensive characterisation of deformable registration algorithms. A continuous evaluation will be possible at https://learn2reg.grand-challenge.org. Learn2Reg covers a wide range of anatomies (brain, abdomen, and thorax), modalities (ultrasound, CT, MR), availability of annotations, as well as intra- and inter-patient registration evaluation. We established an easily accessible framework for training and validation of 3D registration methods, which enabled the compilation of results of over 65 individual method submissions from more than 20 unique teams. We used a complementary set of metrics, including robustness, accuracy, plausibility, and runtime, enabling unique insight into the current state-of-the-art of medical image registration. This paper describes datasets, tasks, evaluation methods and results of the challenge, and the results of further analysis of transferability to new datasets, the importance of label supervision, and resulting bias.

Enhancing Label-Driven Deep Deformable Image Registration with Local Distance Metrics for State-of-the-Art Cardiac Motion Tracking

Dec 05, 2018

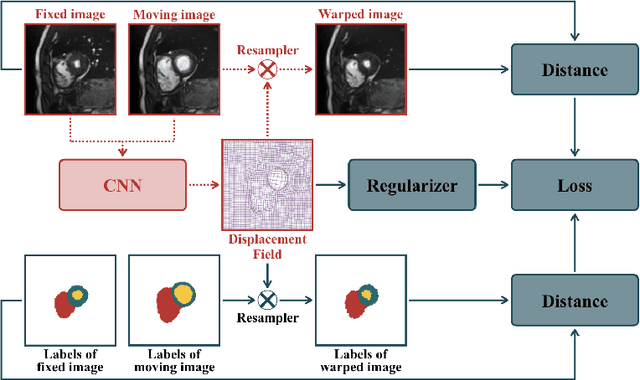

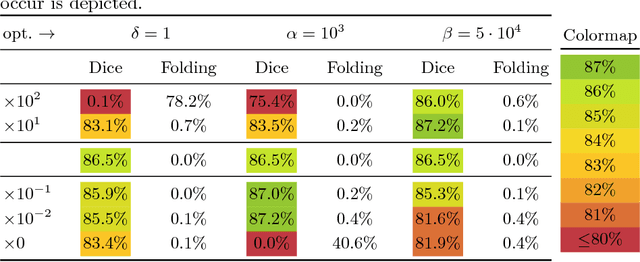

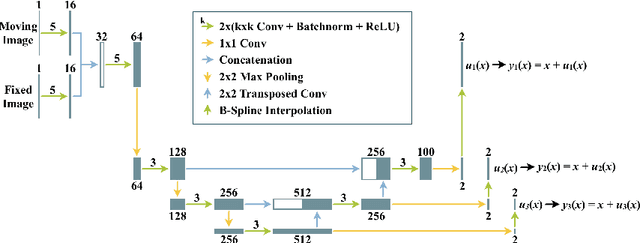

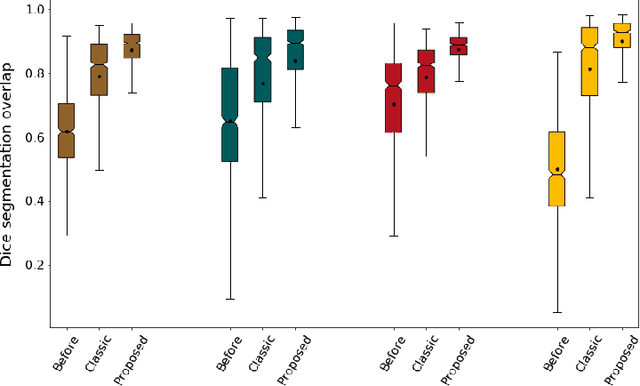

Abstract: While deep learning has achieved significant advances in accuracy for medical image segmentation, its benefits for deformable image registration have so far remained limited to reduced computation times. Previous work has either focused on replacing the iterative optimization of distance and smoothness terms with CNN layers or used supervised approaches driven by labels. Our method is the first to combine the complementary strengths of global semantic information (represented by segmentation labels) and local distance metrics that help align surrounding structures. We demonstrate significantly higher Dice scores (86.5%) for deformable cardiac image registration compared to classic registration (79.0%) as well as label-driven deep learning frameworks (83.4%).
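The Dice scores quoted in this abstract measure the overlap between a segmentation label warped by the registration and the label of the fixed image. As a rough illustration only, the standard Dice coefficient for two binary masks can be sketched as below. The function name `dice_score` and the toy masks are hypothetical and not taken from the paper, and the paper's actual evaluation pipeline may differ (for example, by averaging over several labels).

```python
import numpy as np


def dice_score(mask_a, mask_b):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        # Convention chosen for this sketch: two empty masks overlap perfectly.
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / total


# Toy example: two 4x4 squares, one shifted down by a single row.
fixed_label = np.zeros((8, 8), dtype=bool)
fixed_label[2:6, 2:6] = True   # 16 pixels
warped_label = np.zeros((8, 8), dtype=bool)
warped_label[3:7, 2:6] = True  # 16 pixels, 12 of them shared with fixed_label

print(dice_score(fixed_label, warped_label))  # 2 * 12 / 32 = 0.75
```

A score of 1.0 means the warped and fixed labels coincide exactly, and 0.0 means they do not overlap at all.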
