Sven Kruschel

Navigating the Rashomon Effect: How Personalization Can Help Adjust Interpretable Machine Learning Models to Individual Users

May 11, 2025

Abstract: The Rashomon effect describes the observation that in machine learning (ML) multiple models often achieve similar predictive performance while explaining the underlying relationships in different ways. This observation holds even for intrinsically interpretable models, such as Generalized Additive Models (GAMs), which offer users valuable insights into the model's behavior. Given the existence of multiple GAM configurations with similar predictive performance, a natural question is whether we can personalize these configurations based on users' needs for interpretability. In our study, we developed an approach to personalize models based on contextual bandits. In an online experiment with 108 users in a personalized treatment and a non-personalized control group, we found that personalization led to individualized rather than one-size-fits-all configurations. Despite these individual adjustments, the interpretability remained high across both groups, with users reporting a strong understanding of the models. Our research offers initial insights into the potential for personalizing interpretable ML.
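The abstract does not say which contextual bandit algorithm the study used. As a rough illustration of the general idea only, the following minimal sketch uses an epsilon-greedy bandit that keeps a running mean reward per (context, arm) pair. The class name, the user contexts ("novice", "expert"), the configuration names ("smooth", "detailed"), and the simulated feedback are all hypothetical and do not come from the paper.

```python
import random
from collections import defaultdict


class EpsilonGreedyContextualBandit:
    """Illustrative epsilon-greedy bandit; not the method used in the paper."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # pulls per (context, arm)
        self.values = defaultdict(float)  # mean reward per (context, arm)

    def best_arm(self, context):
        # Arm with the highest estimated reward for this context.
        return max(self.arms, key=lambda arm: self.values[(context, arm)])

    def select(self, context):
        # Explore with probability epsilon, otherwise exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return self.best_arm(context)

    def update(self, context, arm, reward):
        # Incremental update of the running mean reward.
        key = (context, arm)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]


def simulate(rounds=500, seed=0):
    # Hypothetical users: each context prefers one model configuration.
    preferred = {"novice": "smooth", "expert": "detailed"}
    bandit = EpsilonGreedyContextualBandit(
        ["smooth", "detailed"], epsilon=0.2, seed=seed
    )
    rng = random.Random(seed + 1)
    for _ in range(rounds):
        context = rng.choice(list(preferred))
        arm = bandit.select(context)
        reward = 1.0 if arm == preferred[context] else 0.0
        bandit.update(context, arm, reward)
    return bandit


if __name__ == "__main__":
    bandit = simulate()
    for context in ("novice", "expert"):
        print(context, "->", bandit.best_arm(context))
```

In this toy setup the bandit ends up recommending a different configuration per context, which is the kind of individualized outcome the abstract contrasts with a one-size-fits-all configuration.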

Quantifying Visual Properties of GAM Shape Plots: Impact on Perceived Cognitive Load and Interpretability

Sep 25, 2024

Abstract: Generalized Additive Models (GAMs) offer a balance between performance and interpretability in machine learning. The interpretability aspect of GAMs is expressed through shape plots, which represent the model's decision-making process. However, the visual properties of these plots, e.g., the number of kinks (the number of local maxima and minima), can impact their complexity and the cognitive load imposed on the viewer, compromising interpretability. Our study, which includes 57 participants, investigates the relationship between the visual properties of GAM shape plots and the cognitive load they induce. We quantify various visual properties of shape plots and evaluate their alignment with participants' perceived cognitive load, based on 144 plots. Our results indicate that the number-of-kinks metric is the most effective, explaining 86.4% of the variance in users' ratings. We develop a simple model based on the number of kinks that provides a practical tool for predicting cognitive load, enabling the assessment of one aspect of GAM interpretability without direct user involvement.
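The abstract defines the number of kinks as the number of local maxima and minima of a shape plot. A minimal sketch of how such a count could be computed for a sampled shape function is shown below. The function name `count_kinks` and the tolerance parameter `tol` are assumptions made for illustration, and the paper's exact procedure may differ.

```python
def count_kinks(values, tol=0.0):
    """Count interior local maxima and minima of a sampled curve.

    `values` holds the shape function evaluated on an ordered grid.
    Slopes with magnitude <= tol are treated as flat and ignored, so a
    plateau between a rise and a fall counts as a single extremum.
    """
    slopes = [b - a for a, b in zip(values, values[1:])]
    signs = [1 if s > 0 else -1 for s in slopes if abs(s) > tol]
    # Every change in slope direction marks one local extremum.
    return sum(1 for prev, cur in zip(signs, signs[1:]) if prev != cur)


if __name__ == "__main__":
    print(count_kinks([0, 1, 2, 3]))     # monotone curve
    print(count_kinks([0, 1, 0, 1, 0]))  # zig-zag curve
```

A tolerance above zero can help with learned shape functions that contain small numerical wiggles, at the cost of ignoring genuinely shallow extrema.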

Challenging the Performance-Interpretability Trade-off: An Evaluation of Interpretable Machine Learning Models

Sep 22, 2024

Abstract: Machine learning is permeating every conceivable domain to promote data-driven decision support. The focus is often on advanced black-box models due to their assumed performance advantages, whereas interpretable models are often associated with inferior predictive qualities. More recently, however, a new generation of generalized additive models (GAMs) has been proposed that offer promising properties for capturing complex, non-linear patterns while remaining fully interpretable. To uncover the merits and limitations of these models, this study examines the predictive performance of seven different GAMs in comparison to seven commonly used machine learning models based on a collection of twenty tabular benchmark datasets. To ensure a fair and robust model comparison, an extensive hyperparameter search combined with cross-validation was performed, resulting in 68,500 model runs. In addition, this study qualitatively examines the visual output of the models to assess their level of interpretability. Based on these results, the paper dispels the misconception that only black-box models can achieve high accuracy by demonstrating that there is no strict trade-off between predictive performance and model interpretability for tabular data. Furthermore, the paper discusses the importance of GAMs as powerful interpretable models for the field of information systems and derives implications for future work from a socio-technical perspective.

A Light in the Dark: Deep Learning Practices for Industrial Computer Vision

Jan 06, 2022

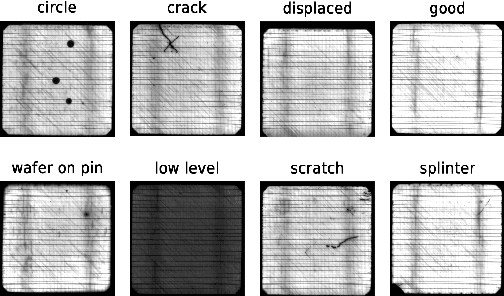

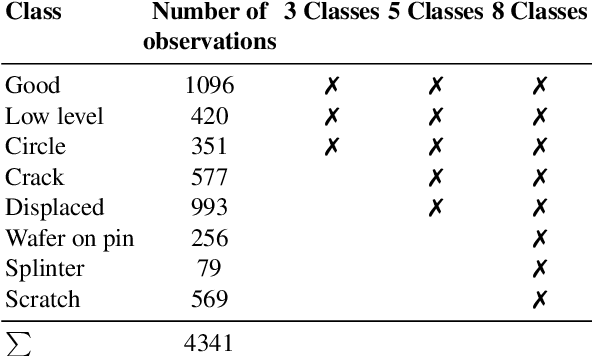

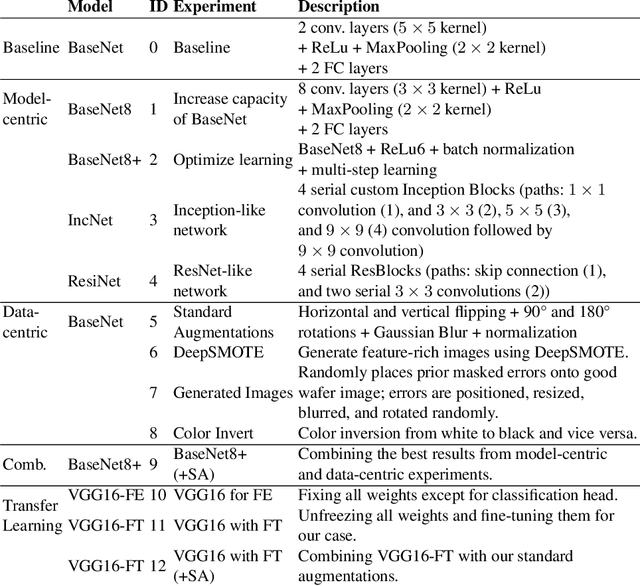

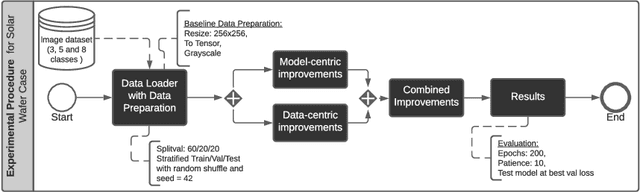

Abstract: In recent years, large pre-trained deep neural networks (DNNs) have revolutionized the field of computer vision (CV). Although these DNNs have been shown to be very well suited for general image recognition tasks, application in industry is often precluded for three reasons: 1) large pre-trained DNNs are built on hundreds of millions of parameters, making deployment on many devices impossible, 2) the underlying dataset for pre-training consists of general objects, while industrial cases often consist of very specific objects, such as structures on solar wafers, and 3) potentially biased pre-trained DNNs raise legal issues for companies. As a remedy, we study neural networks for CV that we train from scratch. For this purpose, we use a real-world case from a solar wafer manufacturer. We find that our neural networks achieve performance similar to that of pre-trained DNNs, even though they consist of far fewer parameters and do not rely on third-party datasets.
