Stefano Zanero

Quantum Sparse Recovery and Quantum Orthogonal Matching Pursuit

Oct 08, 2025

Abstract: We study quantum sparse recovery in non-orthogonal, overcomplete dictionaries: given coherent quantum access to a state and a dictionary of vectors, the goal is to reconstruct the state up to $\ell_2$ error using as few vectors as possible. We first show that the general recovery problem is NP-hard, ruling out efficient exact algorithms in full generality. To overcome this, we introduce Quantum Orthogonal Matching Pursuit (QOMP), the first quantum analogue of the classical OMP greedy algorithm. QOMP combines quantum subroutines for inner product estimation, maximum finding, and block-encoded projections with an error-resetting design that avoids iteration-to-iteration error accumulation. Under standard mutual incoherence and well-conditioned sparsity assumptions, QOMP provably recovers the exact support of a $K$-sparse state in polynomial time. As an application, we give the first framework for sparse quantum tomography with non-orthogonal dictionaries in $\ell_2$ norm, achieving query complexity $\widetilde{O}(\sqrt{N}/\epsilon)$ in favorable regimes and reducing tomography to estimating only $K$ coefficients instead of $N$ amplitudes. In particular, for pure-state tomography with $m=O(N)$ dictionary vectors and sparsity $K=\widetilde{O}(1)$ on a well-conditioned subdictionary, this circumvents the $\widetilde{\Omega}(N/\epsilon)$ lower bound that holds in the dense, orthonormal-dictionary setting, without contradiction, by leveraging sparsity together with non-orthogonality. Beyond tomography, we analyze QOMP in the QRAM model, where it yields polynomial speedups over classical OMP implementations, and provide a quantum algorithm to estimate the mutual incoherence of a dictionary of $m$ vectors in $O(m/\epsilon)$ queries, improving over both deterministic and quantum-inspired classical methods.

Assessing the Resilience of Automotive Intrusion Detection Systems to Adversarial Manipulation

Jun 12, 2025

Abstract: The security of modern vehicles has become increasingly important, with the controller area network (CAN) bus serving as a critical communication backbone for various Electronic Control Units (ECUs). The absence of robust security measures in CAN, coupled with the increasing connectivity of vehicles, makes them susceptible to cyberattacks. While intrusion detection systems (IDSs) have been developed to counter such threats, they are not foolproof. Adversarial attacks, particularly evasion attacks, can manipulate inputs to bypass detection by IDSs. This paper extends our previous work by investigating the feasibility and impact of gradient-based adversarial attacks performed with different degrees of knowledge against automotive IDSs. We consider three scenarios: white-box (attacker with full system knowledge), grey-box (partial system knowledge), and the more realistic black-box (no knowledge of the IDS' internal workings or data). We evaluate the effectiveness of the proposed attacks against state-of-the-art IDSs on two publicly available datasets. Additionally, we study the effect of the adversarial perturbation on the attack impact and evaluate real-time feasibility by precomputing evasive payloads for timed injection based on bus traffic. Our results demonstrate that, besides attacks being challenging due to the automotive domain constraints, their effectiveness is strongly dependent on the dataset quality, the target IDS, and the attacker's degree of knowledge.

TimberStrike: Dataset Reconstruction Attack Revealing Privacy Leakage in Federated Tree-Based Systems

Jun 09, 2025

Abstract: Federated Learning has emerged as a privacy-oriented alternative to centralized Machine Learning, enabling collaborative model training without direct data sharing. While extensively studied for neural networks, the security and privacy implications of tree-based models remain underexplored. This work introduces TimberStrike, an optimization-based dataset reconstruction attack targeting horizontally federated tree-based models. Our attack, carried out by a single client, exploits the discrete nature of decision trees by using split values and decision paths to infer sensitive training data from other clients. We evaluate TimberStrike on state-of-the-art federated gradient boosting implementations across multiple frameworks, including Flower, NVFlare, and FedTree, demonstrating their vulnerability to privacy breaches. On a publicly available stroke prediction dataset, TimberStrike consistently reconstructs between 73.05% and 95.63% of the target dataset across all implementations. We further analyze Differential Privacy, showing that while it partially mitigates the attack, it also significantly degrades model performance. Our findings highlight the need for privacy-preserving mechanisms specifically designed for tree-based Federated Learning systems, and we provide preliminary insights into their design.

Quantum Algorithms for Data Representation and Analysis

Apr 19, 2021

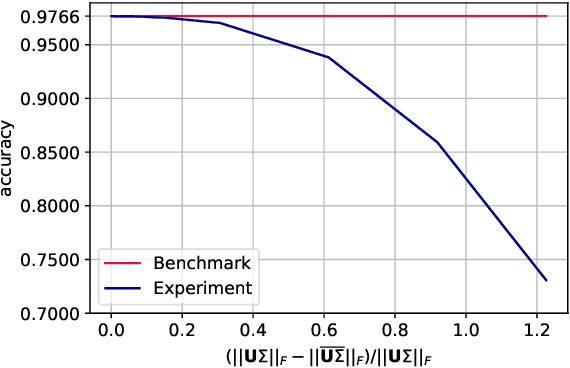

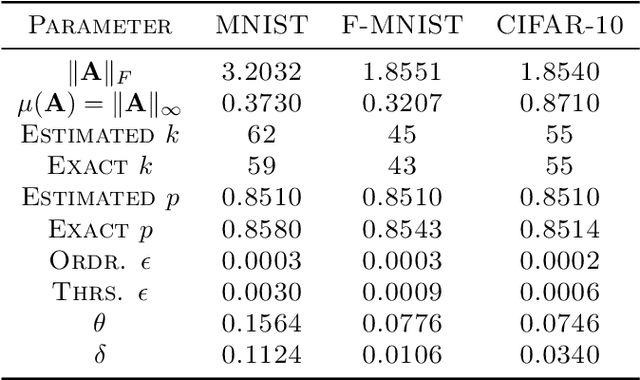

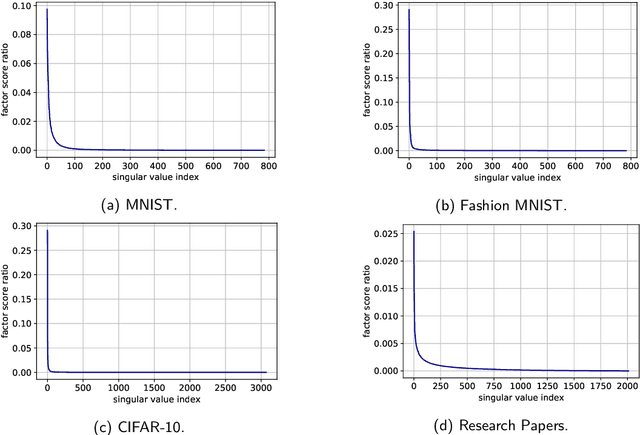

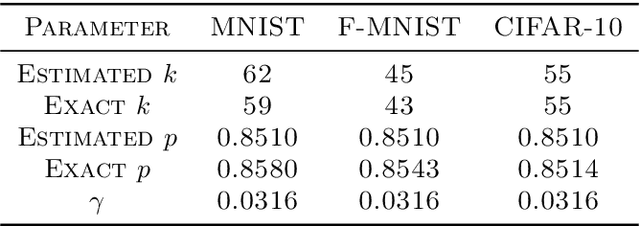

Abstract: We narrow the gap between previous literature on quantum linear algebra and useful data analysis on a quantum computer, providing quantum procedures that speed up the solution of eigenproblems for data representation in machine learning. The power and practical use of these subroutines are shown through new quantum algorithms, sublinear in the input matrix's size, for principal component analysis, correspondence analysis, and latent semantic analysis. We provide a theoretical analysis of the run-time and prove tight bounds on the randomized algorithms' error. We run experiments on multiple datasets, simulating PCA's dimensionality reduction for image classification with the novel routines. The results show that the run-time parameters that do not depend on the input's size are reasonable and that the error on the computed model is small, allowing for competitive classification performances.

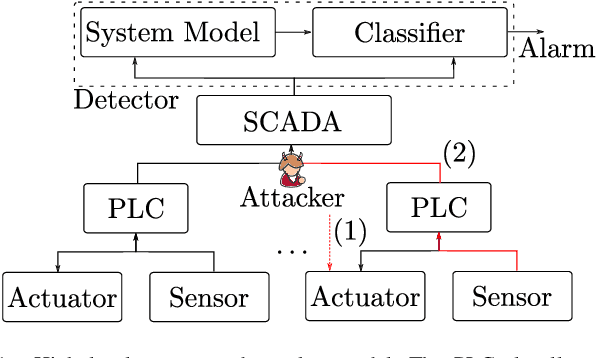

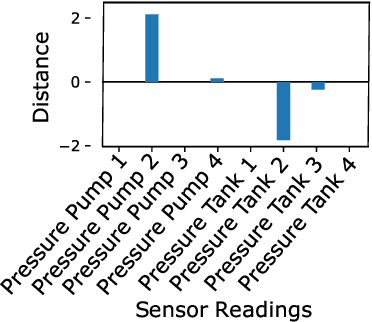

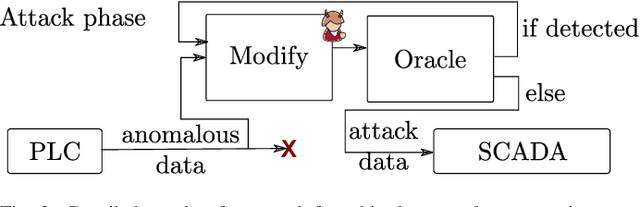

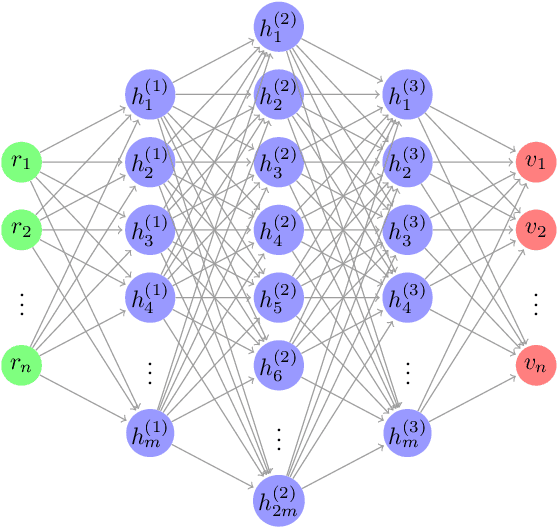

Real-time Evasion Attacks with Physical Constraints on Deep Learning-based Anomaly Detectors in Industrial Control Systems

Jul 17, 2019

Abstract: Recently, a number of deep learning-based anomaly detection algorithms were proposed to detect attacks in dynamic industrial control systems. The detectors operate on measured sensor data, leveraging physical process models learned a priori. Evading detection by such systems is challenging, as an attacker needs to manipulate a constrained number of sensor readings in real-time with realistic perturbations according to the current state of the system. In this work, we propose a number of evasion attacks (with different assumptions on the attacker's knowledge), and compare the attacks' cost and efficiency against replay attacks. In particular, we show that a replay attack on a subset of sensor values can be detected easily as it violates physical constraints. In contrast, our proposed attacks leverage manipulated sensor readings that observe learned physical constraints of the system. Our proposed white box attacker uses an optimization approach with a detection oracle, while our black box attacker uses an autoencoder (or a convolutional neural network) to translate anomalous data into normal data. Our proposed approaches are implemented and evaluated on two different datasets pertaining to the domain of water distribution networks. We then demonstrate the efficacy of the real-time attack on a realistic testbed. Results show that the accuracy of the detection algorithms can be significantly reduced through real-time adversarial actions: for the BATADAL dataset, the attacker can reduce the detection accuracy from 0.6 to 0.14. In addition, we discuss and implement an Availability attack, in which the attacker introduces detection events with minimal changes of the reported data, in order to reduce confidence in the detector.
