Shuaixin Li

RAVES-Calib: Robust, Accurate and Versatile Extrinsic Self Calibration Using Optimal Geometric Features

Dec 09, 2025

Abstract: In this paper, we present a user-friendly LiDAR-camera calibration toolkit that is compatible with various LiDAR and camera sensors and requires only a single pair of laser points and a camera image in targetless environments. Our approach eliminates the need for an initial transform and remains robust even when the LiDAR-camera extrinsic parameters involve large translations and rotations. We employ the GlueStick pipeline to establish 2D-3D point and line feature correspondences for a robust and automatic initial guess. To enhance accuracy, we quantitatively analyze the impact of feature distribution on calibration results and adaptively weight the cost of each feature based on these metrics. As a result, the extrinsic parameters are optimized while the adverse effects of inferior features are filtered out. We validated our method through extensive experiments across various LiDAR-camera sensors in both indoor and outdoor settings. The results demonstrate that our method provides superior robustness and accuracy compared to state-of-the-art techniques. Our code is open-sourced on GitHub to benefit the community.

SL-SLAM: A robust visual-inertial SLAM based deep feature extraction and matching

May 06, 2024

Abstract: This paper explores how deep learning techniques can improve visual SLAM performance in challenging environments. By combining deep feature extraction and deep matching methods, we introduce a versatile hybrid visual SLAM system designed to enhance adaptability in challenging scenarios, such as low-light conditions, dynamic lighting, weak-texture areas, and severe jitter. Our system supports multiple modes, including monocular, stereo, monocular-inertial, and stereo-inertial configurations. We also analyze how to combine visual SLAM with deep learning methods, to inform other research. Through extensive experiments on both public datasets and self-collected data, we demonstrate the superiority of the SL-SLAM system over traditional approaches. The experimental results show that SL-SLAM outperforms state-of-the-art SLAM algorithms in terms of localization accuracy and tracking robustness. For the benefit of the community, we make the source code public at https://github.com/zzzzxxxx111/SLslam.

InTEn-LOAM: Intensity and Temporal Enhanced LiDAR Odometry and Mapping

Sep 13, 2022

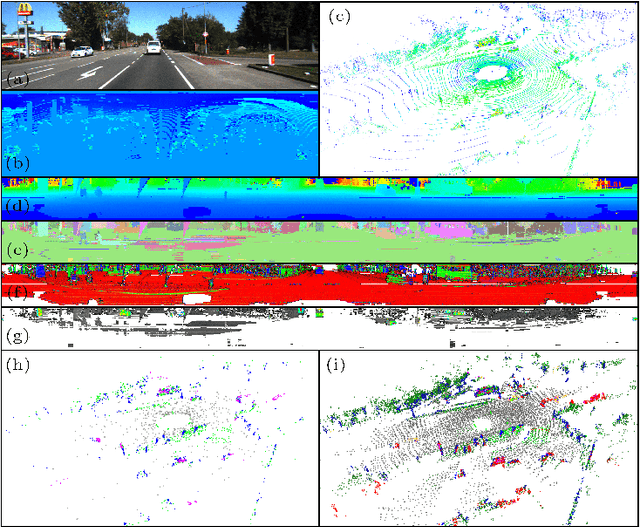

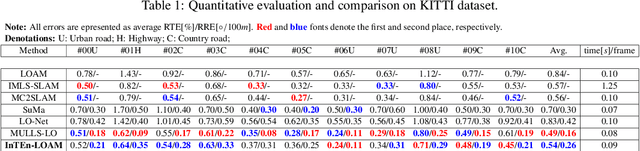

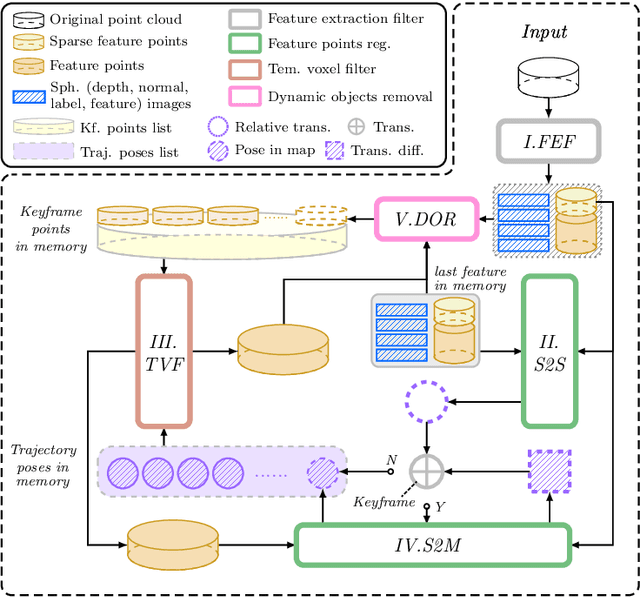

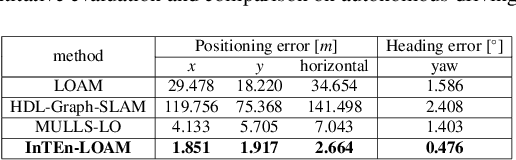

Abstract: Traditional LiDAR odometry (LO) systems mainly leverage geometric information obtained from the traversed surroundings to register laser scans and estimate LiDAR ego-motion, which may be unreliable in dynamic or unstructured environments. This paper proposes InTEn-LOAM, a low-drift and robust LiDAR odometry and mapping method that fully exploits the implicit information of laser sweeps (i.e., geometric, intensity, and temporal characteristics). Scanned points are projected to cylindrical images, which facilitate the efficient and adaptive extraction of various types of features, i.e., ground, beam, facade, and reflector. We propose a novel intensity-based point registration algorithm and incorporate it into the LiDAR odometry, enabling the LO system to jointly estimate the LiDAR ego-motion using both geometric and intensity feature points. To eliminate the interference of dynamic objects, we propose a temporal-based dynamic object removal approach that filters them out before the map update. Moreover, the local map is organized and downsampled using a temporal-related voxel grid filter to maintain the similarity between the current scan and the static local map. Extensive experiments are conducted on both simulated and real-world datasets. The results show that the proposed method achieves similar or better accuracy compared with state-of-the-art methods in normal driving scenarios and outperforms geometric-based LO in unstructured environments.
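
The projection of scanned points to a cylindrical image mentioned in the abstract can be illustrated with a minimal sketch. This is not the InTEn-LOAM implementation: the function name `project_to_cylindrical_image`, the image size, the vertical field of view, the choice to bin rows by elevation angle, and the rule of keeping the closest return per pixel are all assumptions made for illustration.

```python
import math


def project_to_cylindrical_image(points, width=360, height=16,
                                 fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z, intensity) points onto a height x width grid.

    Returns two grids (lists of rows): range in the input units and
    intensity. Pixels that receive no point hold None. All parameters
    are illustrative defaults, not values taken from the paper.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down

    range_img = [[None] * width for _ in range(height)]
    intensity_img = [[None] * width for _ in range(height)]

    for x, y, z, intensity in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue  # skip degenerate returns at the sensor origin

        azimuth = math.atan2(y, x)       # horizontal angle in [-pi, pi]
        elevation = math.asin(z / rng)   # vertical angle
        if not (fov_down <= elevation <= fov_up):
            continue  # outside the assumed vertical field of view

        # Columns sweep the full 360 degrees; rows run from top to bottom.
        col = int((azimuth + math.pi) / (2.0 * math.pi) * width) % width
        row = min(int((fov_up - elevation) / fov * height), height - 1)

        # Assumption: when several points hit one pixel, keep the closest.
        current = range_img[row][col]
        if current is None or rng < current:
            range_img[row][col] = rng
            intensity_img[row][col] = intensity

    return range_img, intensity_img


if __name__ == "__main__":
    cloud = [(2.0, 0.0, 0.0, 0.1), (1.0, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0, 0.9)]
    ranges, intensities = project_to_cylindrical_image(cloud)
    print(ranges[8][180], intensities[8][180])
```

Organizing a sweep as a 2D grid like this gives each point image-style neighbors, which is what makes per-pixel feature tests (for example, separating ground from facade points) cheap to run; the actual feature extraction rules are described in the paper, not here.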
