Shervin Ghasemlou

Multimodal Generative Recommendation for Fusing Semantic and Collaborative Signals

Feb 03, 2026

Abstract:Sequential recommender systems rank relevant items by modeling a user's interaction history and computing the inner product between the resulting user representation and stored item embeddings. To avoid the significant memory overhead of storing large item sets, the generative recommendation paradigm instead models each item as a series of discrete semantic codes. Here, the next item is predicted by an autoregressive model that generates the code sequence corresponding to the predicted item. However, despite promising ranking capabilities on small datasets, these methods have yet to surpass traditional sequential recommenders on large item sets, limiting their adoption in the very scenarios they were designed to address. To resolve this, we propose MSCGRec, a Multimodal Semantic and Collaborative Generative Recommender. MSCGRec incorporates multiple semantic modalities and introduces a novel self-supervised quantization learning approach for images based on the DINO framework. Additionally, MSCGRec fuses collaborative and semantic signals by extracting collaborative features from sequential recommenders and treating them as a separate modality. Finally, we propose constrained sequence learning that restricts the large output space during training to the set of permissible tokens. We empirically demonstrate on three large real-world datasets that MSCGRec outperforms both sequential and generative recommendation baselines and provide an extensive ablation study to validate the impact of each component.
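
The idea of restricting the output space to permissible tokens can be illustrated with a small sketch. This is not the authors' implementation: it assumes that "permissible" means "tokens that continue some existing item's code sequence", and the catalogue `ITEM_CODES`, the function names, and the plain-Python loss are all hypothetical choices made for illustration.

```python
import math

def build_prefix_trie(item_codes):
    """Map each code prefix (as a tuple) to the set of tokens that may follow it."""
    allowed = {}
    for codes in item_codes:
        for i, token in enumerate(codes):
            allowed.setdefault(tuple(codes[:i]), set()).add(token)
    return allowed

def constrained_log_softmax(logits, permitted):
    """Log-softmax normalised over the permitted token ids only.

    Tokens outside `permitted` receive -inf, i.e. zero probability.
    """
    peak = max(logits[t] for t in permitted)  # subtract the max for numerical stability
    log_z = peak + math.log(sum(math.exp(logits[t] - peak) for t in permitted))
    return [
        logits[t] - log_z if t in permitted else float("-inf")
        for t in range(len(logits))
    ]

def constrained_nll(step_logits, target_codes, allowed):
    """Negative log-likelihood of one target code sequence under the constraint."""
    loss = 0.0
    for i, token in enumerate(target_codes):
        permitted = allowed[tuple(target_codes[:i])]
        loss -= constrained_log_softmax(step_logits[i], permitted)[token]
    return loss

# Hypothetical catalogue: four items, each described by three discrete codes.
ITEM_CODES = [(0, 1, 2), (0, 1, 3), (0, 2, 1), (3, 0, 0)]
ALLOWED = build_prefix_trie(ITEM_CODES)

# Uniform logits over a 4-token vocabulary at each of the three steps.
uniform_logits = [[0.0, 0.0, 0.0, 0.0] for _ in range(3)]
loss = constrained_nll(uniform_logits, (0, 1, 2), ALLOWED)
```

In this toy catalogue every prefix of the target `(0, 1, 2)` has exactly two permitted continuations, so with uniform logits the loss is three times log 2, whereas an unconstrained softmax over all four tokens would give three times log 4.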

CRAG-MM: Multi-modal Multi-turn Comprehensive RAG Benchmark

Oct 30, 2025

Abstract:Wearable devices such as smart glasses are transforming the way people interact with their surroundings, enabling users to seek information regarding entities in their view. Multi-Modal Retrieval-Augmented Generation (MM-RAG) plays a key role in supporting such questions, yet there is still no comprehensive benchmark for this task, especially regarding wearables scenarios. To fill this gap, we present CRAG-MM -- a Comprehensive RAG benchmark for Multi-modal Multi-turn conversations. CRAG-MM contains a diverse set of 6.5K (image, question, answer) triplets and 2K visual-based multi-turn conversations across 13 domains, including 6.2K egocentric images designed to mimic captures from wearable devices. We carefully constructed the questions to reflect real-world scenarios and challenges, including five types of image-quality issues, six question types, varying entity popularity, differing information dynamism, and different conversation turns. We design three tasks: single-source augmentation, multi-source augmentation, and multi-turn conversations -- each paired with an associated retrieval corpus and APIs for both image-KG retrieval and webpage retrieval. Our evaluation shows that straightforward RAG approaches achieve only 32% and 43% truthfulness on CRAG-MM single- and multi-turn QA, respectively, whereas state-of-the-art industry solutions have similar quality (32%/45%), underscoring ample room for improvement. The benchmark has hosted KDD Cup 2025, attracting about 1K participants and 5K submissions, with winning solutions improving baseline performance by 28%, highlighting its early impact on advancing the field.

Doppelgänger's Watch: A Split Objective Approach to Large Language Models

Sep 09, 2024

Abstract:In this paper, we investigate the problem of "generation supervision" in large language models, and present a novel bicameral architecture to separate supervision signals from their core capability, helpfulness. Doppelgänger, a new module parallel to the underlying language model, supervises the generation of each token, and learns to concurrently predict the supervision score(s) of the sequences up to and including each token. In this work, we present the theoretical findings, and leave the report on experimental results to a forthcoming publication.

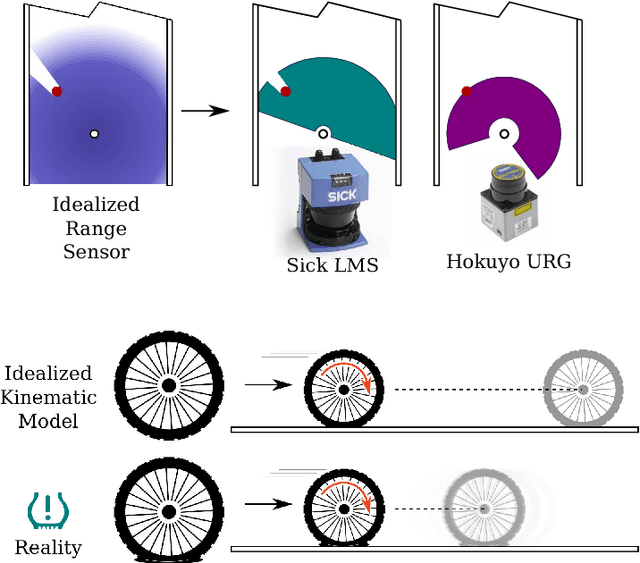

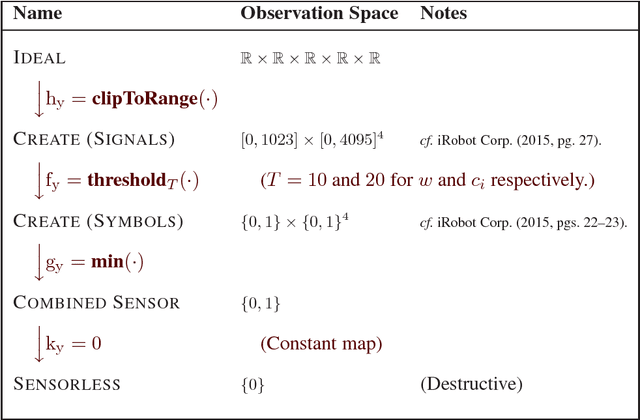

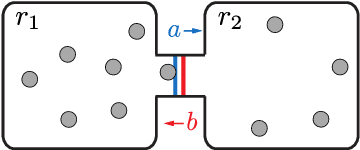

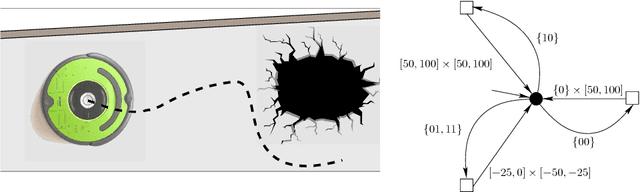

Toward a language-theoretic foundation for planning and filtering

Jul 23, 2018

Abstract:We address problems underlying the algorithmic question of automating the co-design of robot hardware in tandem with its apposite software. Specifically, we consider the impact that degradations of a robot's sensor and actuation suites may have on the ability of that robot to complete its tasks. We introduce a new formal structure that generalizes and consolidates a variety of well-known structures including many forms of plans, planning problems, and filters, into a single data structure called a procrustean graph, and give these graph structures semantics in terms of ideas based in formal language theory. We describe a collection of operations on procrustean graphs (both semantics-preserving and semantics-mutating), and show how a family of questions about the destructiveness of a change to the robot hardware can be answered by applying these operations. We also highlight the connections between this new approach and existing threads of research, including combinatorial filtering, Erdmann's strategy complexes, and hybrid automata.
