Sarah Jones

Iowa State University

Towards Large Reasoning Models for Agriculture

May 25, 2025

Abstract: Agricultural decision-making involves complex, context-specific reasoning, where choices about crops, practices, and interventions depend heavily on geographic, climatic, and economic conditions. Traditional large language models (LLMs) often fall short in navigating this nuanced problem due to limited reasoning capacity. We hypothesize that recent advances in large reasoning models (LRMs) can better handle such structured, domain-specific inference. To investigate this, we introduce AgReason, the first expert-curated open-ended science benchmark with 100 questions for agricultural reasoning. Evaluations across thirteen open-source and proprietary models reveal that LRMs outperform conventional ones, though notable challenges persist, with the strongest Gemini-based baseline achieving 36% accuracy. We also present AgThoughts, a large-scale dataset of 44.6K question-answer pairs generated with human oversight and equipped with synthetically generated reasoning traces. Using AgThoughts, we develop AgThinker, a suite of small reasoning models that can be run on consumer-grade GPUs, and show that our dataset can be effective in unlocking agricultural reasoning abilities in LLMs. Our project page is here: https://baskargroup.github.io/Ag_reasoning/

Explaining hyperspectral imaging based plant disease identification: 3D CNN and saliency maps

Apr 24, 2018

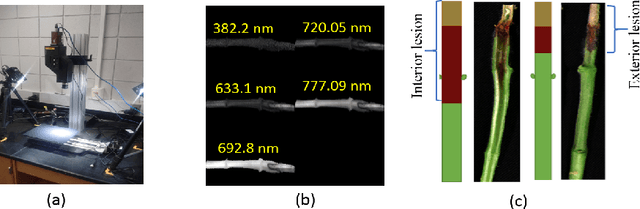

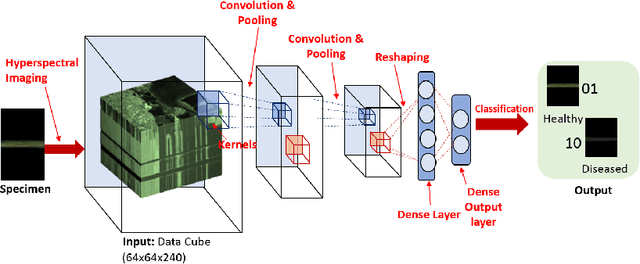

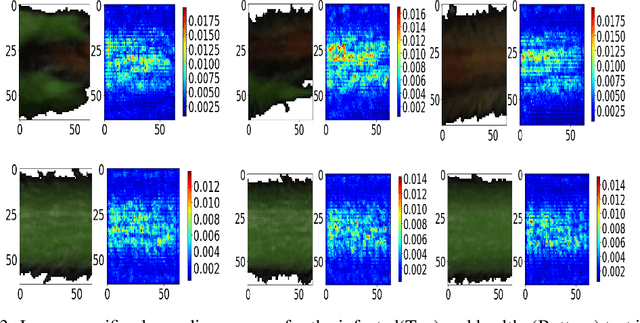

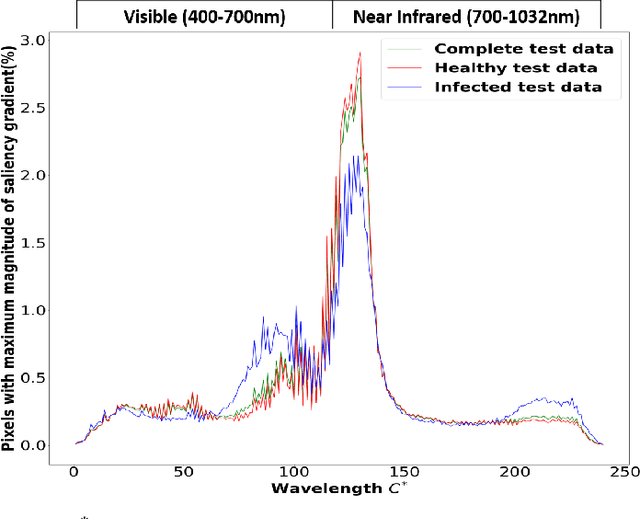

Abstract: Our overarching goal is to develop an accurate and explainable model for plant disease identification using hyperspectral data. Charcoal rot is a soil-borne fungal disease that affects the yield of soybean crops worldwide. Hyperspectral images were captured at 240 different wavelengths in the range of 383 to 1032 nm. We developed a 3D convolutional neural network model for soybean charcoal rot disease identification. Our model has a classification accuracy of 95.73% and an infected-class F1 score of 0.87. We interpret the trained model using saliency maps and visualize the most sensitive pixel locations that enable classification. The saliency maps were also used to determine the sensitivity of individual wavelengths for classification, identifying 733 nm as the most sensitive wavelength. Because this wavelength lies in the near-infrared region (700 to 1000 nm) of the electromagnetic spectrum, which is also the region commonly used to assess vegetation health, the result increases our confidence in the model's predictions.
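The idea of ranking wavelengths by saliency can be illustrated with a small sketch. It is not the authors' 3D CNN: `toy_score` is a hypothetical stand-in classifier, the weights and the 8-band, 2x2-pixel cube are made up for illustration, and `band_to_wavelength` assumes the 240 bands are evenly spaced between 383 and 1032 nm, which the abstract does not state. Saliency is approximated here by central finite differences rather than backpropagation.

```python
import math

def band_to_wavelength(band, n_bands=240, lo_nm=383.0, hi_nm=1032.0):
    """Map a band index to nm, ASSUMING evenly spaced bands (not stated in the source)."""
    return lo_nm + band * (hi_nm - lo_nm) / (n_bands - 1)

def toy_score(cube, weights):
    """Hypothetical classifier: sigmoid of a weighted sum of per-band mean reflectance."""
    z = 0.0
    for w, band in zip(weights, cube):
        pixels = [v for row in band for v in row]
        z += w * sum(pixels) / len(pixels)
    return 1.0 / (1.0 + math.exp(-z))

def saliency(cube, score_fn, eps=1e-4):
    """Absolute central-difference gradient of the score w.r.t. every voxel."""
    sal = [[[0.0 for _ in row] for row in band] for band in cube]
    for b, band in enumerate(cube):
        for i, row in enumerate(band):
            for j, v in enumerate(row):
                cube[b][i][j] = v + eps
                up = score_fn(cube)
                cube[b][i][j] = v - eps
                down = score_fn(cube)
                cube[b][i][j] = v  # restore the original value
                sal[b][i][j] = abs(up - down) / (2 * eps)
    return sal

def band_sensitivity(sal):
    """Sum saliency over all pixels of each band to get one value per wavelength."""
    return [sum(v for row in band for v in row) for band in sal]

# Made-up weights: band 5 has the largest magnitude, so it should rank first.
WEIGHTS = [0.1, -0.2, 0.3, 0.1, -0.4, 0.9, 0.2, -0.1]
cube = [[[0.5, 0.5], [0.5, 0.5]] for _ in WEIGHTS]  # bands x rows x cols

sal = saliency(cube, lambda c: toy_score(c, WEIGHTS))
sens = band_sensitivity(sal)
top_band = max(range(len(sens)), key=sens.__getitem__)
print("most sensitive band index:", top_band)
```

With a real network, the same per-band aggregation would be applied to gradients obtained from the framework's automatic differentiation instead of finite differences.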

Hyperspectral band selection using genetic algorithm and support vector machines for early identification of charcoal rot disease in soybean

Oct 12, 2017

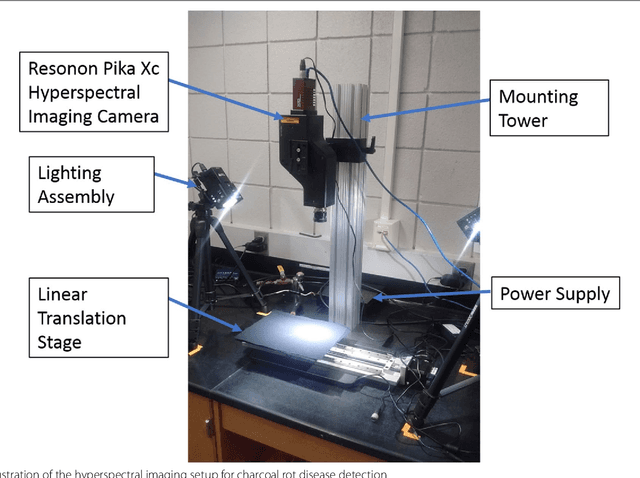

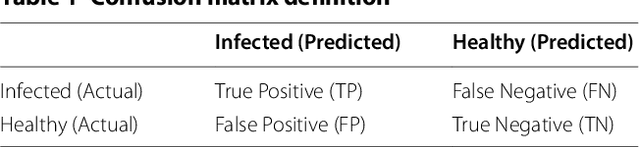

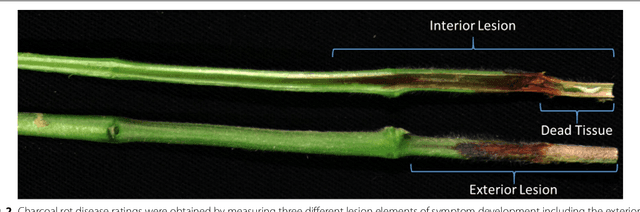

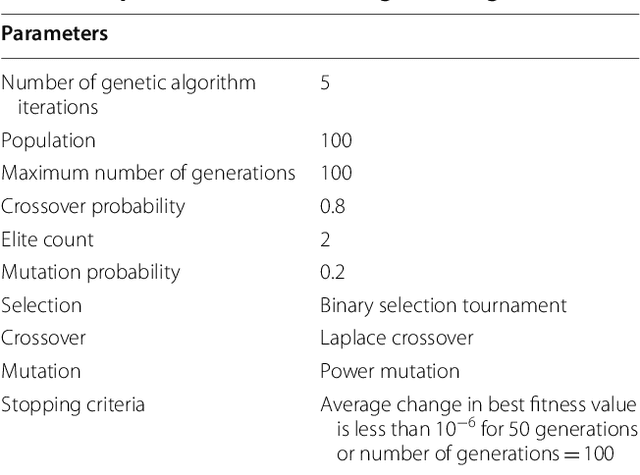

Abstract: Charcoal rot is a fungal disease that thrives in warm, dry conditions and affects the yield of soybeans and other important agronomic crops worldwide. Robust, automatic, and consistent early detection and quantification of disease symptoms is needed both in breeding programs, for the development of improved cultivars, and in crop production, for the implementation of disease control measures that protect yield. Current methods of plant disease phenotyping are predominantly visual and hence slow and prone to human error and variation. There has been increasing interest in hyperspectral imaging applications for early detection of disease symptoms. However, the high dimensionality of hyperspectral data makes an efficient analysis pipeline essential for identifying disease in time to make effective crop management decisions. The focus of this work is to determine the minimal number of most effective hyperspectral bands that can distinguish between healthy and diseased specimens early in the growing season. Healthy and diseased hyperspectral data cubes were captured at 3, 6, 9, 12, and 15 days after inoculation. We utilized inoculated and control specimens from 4 different genotypes. Each hyperspectral image was captured at 240 different wavelengths in the range of 383 to 1032 nm. We used a genetic algorithm as an optimizer combined with support vector machines as a classifier to identify maximally effective band combinations. A binary classification between healthy and infected samples using six selected band combinations obtained a classification accuracy of 97% and an F1 score of 0.97 for the infected class. The results demonstrated that these carefully chosen bands are more informative than RGB images and could be used in a multispectral camera for remote identification of charcoal rot infection in soybean.
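The optimizer-plus-classifier loop described above can be sketched on synthetic data. This is an illustration under stated assumptions, not the authors' pipeline: the spectra are made up, the toy uses 12 bands and selects 2 (the paper used 240 bands and six selected bands), and the fitness function uses a nearest-centroid classifier as a stand-in for the support vector machine. All function names are hypothetical.

```python
import random

random.seed(0)
N_BANDS, K = 12, 2       # toy sizes only
INFORMATIVE = (2, 7)     # hypothetical bands carrying the disease signal

def make_sample(infected, idx):
    """Synthetic spectrum with small bounded noise (made-up data)."""
    s = [0.5 + random.uniform(-0.01, 0.01) for _ in range(N_BANDS)]
    for b in INFORMATIVE:
        s[b] = 0.3 + random.uniform(-0.01, 0.01)
    if infected:
        # Each infected sample shows the signal in only one of the two
        # bands, so both bands are needed to separate the classes.
        s[INFORMATIVE[idx % 2]] += 0.3
    return s

def make_set(n):
    return [(make_sample(i % 2 == 1, i // 2), i % 2) for i in range(n)]

TRAIN, TEST = make_set(40), make_set(40)

def fitness(bands):
    """Held-out accuracy of a nearest-centroid classifier (stand-in for the SVM)."""
    cents = {}
    for label in (0, 1):
        rows = [s for s, y in TRAIN if y == label]
        cents[label] = [sum(s[b] for s in rows) / len(rows) for b in bands]
    correct = 0
    for s, y in TEST:
        dist = {lab: sum((s[b] - c) ** 2 for b, c in zip(bands, cen))
                for lab, cen in cents.items()}
        correct += min(dist, key=dist.get) == y
    return correct / len(TEST)

def random_chromosome():
    return tuple(sorted(random.sample(range(N_BANDS), K)))

def crossover(a, b):
    """Child draws K bands from the union of both parents' bands."""
    pool = sorted(set(a) | set(b))
    return tuple(sorted(random.sample(pool, K)))

def mutate(ch, rate=0.3):
    """With probability `rate`, swap one selected band for a random one."""
    if random.random() >= rate:
        return ch
    kept = list(ch)
    kept.pop(random.randrange(K))
    kept.append(random.choice([b for b in range(N_BANDS) if b not in kept]))
    return tuple(sorted(kept))

def evolve(pop_size=20, generations=15):
    pop = [random_chromosome() for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        elite = pop[: pop_size // 2]  # elitism: the best half survives unchanged
        children = [mutate(crossover(*random.sample(elite, 2)))
                    for _ in range(pop_size - len(elite))]
        pop = elite + children
    best = max(pop, key=fitness)
    return best, fitness(best), history

best, best_fit, history = evolve()
print("selected bands:", best, "held-out accuracy:", best_fit)
```

Because the elite half of each generation is carried over unchanged, the best fitness never decreases across generations. Swapping in a real SVM would only require replacing the body of `fitness`.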
