Sara Khosravi

Practical Policy Distillation for Reinforcement Learning in Radio Access Networks

Nov 09, 2025

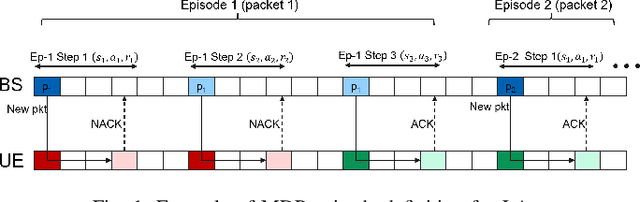

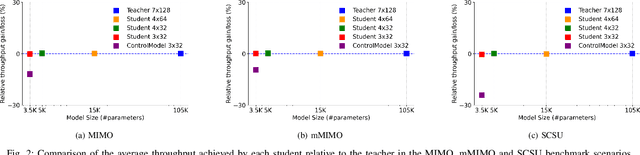

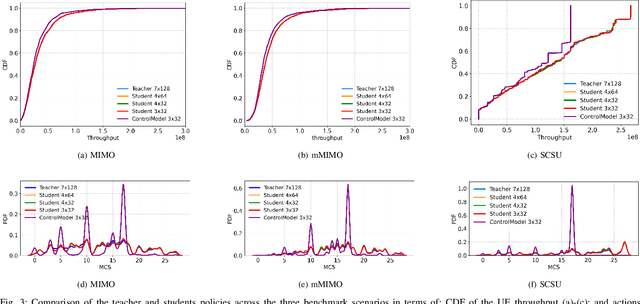

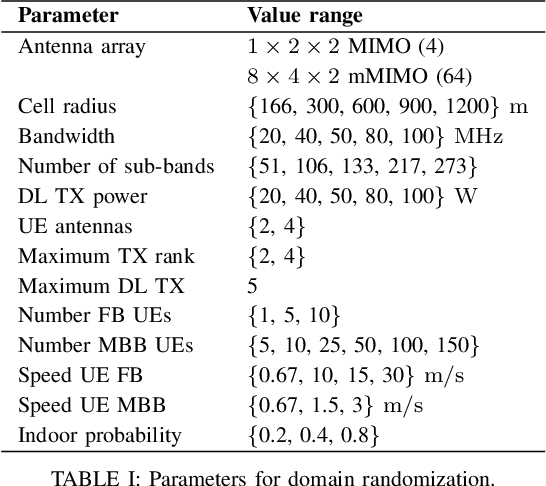

Abstract: Adopting artificial intelligence (AI) in radio access networks (RANs) presents several challenges, including limited availability of link-level measurements (e.g., CQI reports), stringent real-time processing constraints (e.g., sub-1 ms per TTI), and network heterogeneity (different spectrum bands, cell types, and vendor equipment). A critical yet often overlooked barrier lies in the computational and memory limitations of RAN baseband hardware, particularly in legacy 4th Generation (4G) systems, which typically lack on-chip neural accelerators. As a result, only lightweight AI models (under 1 Mb and sub-100 μs inference time) can be effectively deployed, limiting both their performance and applicability. However, achieving strong generalization across diverse network conditions often requires large-scale models with substantial resource demands. To address this trade-off, this paper investigates policy distillation in the context of a reinforcement learning-based link adaptation task. We explore two strategies: single-policy distillation, where a scenario-agnostic teacher model is compressed into one generalized student model; and multi-policy distillation, where multiple scenario-specific teachers are consolidated into a single generalist student. Experimental evaluations in a high-fidelity, 5th Generation (5G)-compliant simulator demonstrate that both strategies produce compact student models that preserve the teachers' generalization capabilities while complying with the computational and memory limitations of existing RAN hardware.
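The abstract does not state which distillation objective is used. As a rough illustration only, the sketch below assumes one common formulation: the student is trained to minimize the KL divergence between the teacher's temperature-softened action distribution and its own. All function names, the temperature parameter, and the averaging across teachers are hypothetical choices made for this example, not the paper's method.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert action scores (e.g., per-MCS logits) into a probability distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """Single-policy case (assumed form): match the student to one teacher."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return kl_divergence(p_teacher, p_student)

def multi_policy_loss(pairs, temperature=1.0):
    """Multi-policy case (assumed form): average the loss over
    (teacher_logits, student_logits) pairs, one per scenario-specific teacher."""
    losses = [distillation_loss(t, s, temperature) for t, s in pairs]
    return sum(losses) / len(losses)

if __name__ == "__main__":
    # Hypothetical scores over three actions for two scenarios.
    pairs = [([2.0, 1.0, 0.1], [1.5, 1.2, 0.3]),
             ([0.2, 0.4, 3.0], [0.1, 0.9, 2.5])]
    print(multi_policy_loss(pairs))
```

In practice the student's logits would come from a small network evaluated on states sampled from each scenario, and the loss would be minimized by gradient descent; the sketch only shows the objective itself.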

AI Agents in Drug Discovery

Oct 31, 2025

Abstract: Artificial intelligence (AI) agents are emerging as transformative tools in drug discovery, with the ability to autonomously reason, act, and learn through complicated research workflows. Building on large language models (LLMs) coupled with perception, computation, action, and memory tools, these agentic AI systems could integrate diverse biomedical data, execute tasks, carry out experiments via robotic platforms, and iteratively refine hypotheses in closed loops. We provide a conceptual and technical overview of agentic AI architectures, ranging from ReAct and Reflection to Supervisor and Swarm systems, and illustrate their applications across key stages of drug discovery, including literature synthesis, toxicity prediction, automated protocol generation, small-molecule synthesis, drug repurposing, and end-to-end decision-making. To our knowledge, this represents the first comprehensive work to present real-world implementations and quantifiable impacts of agentic AI systems deployed in operational drug discovery settings. Early implementations demonstrate substantial gains in speed, reproducibility, and scalability, compressing workflows that once took months into hours while maintaining scientific traceability. We discuss the current challenges related to data heterogeneity, system reliability, privacy, and benchmarking, and outline future directions towards technology in support of science and translation.

Low Complexity Beam Searching Using Trajectory Information in Mobile Millimeter-wave Networks

Jun 06, 2022

Abstract: Millimeter-wave and terahertz systems rely on beamforming/combining codebooks for finding the best beam directions during the initial access procedure. Existing approaches suffer from large codebook sizes and high beam searching overhead in the presence of mobile devices. To alleviate this problem, we suggest utilizing the similarity of the channel in adjacent locations to divide the UE trajectory into a set of separate regions and maintain a set of candidate paths for each region in a database. In this paper, we show the tradeoff between the number of regions and the signalling overhead, i.e., a higher number of regions corresponds to a higher signal-to-noise ratio (SNR) but also higher signalling overhead for the database. We then propose an optimization framework to find the minimum number of regions based on the trajectory of a mobile device. Using realistic ray tracing datasets, we demonstrate that the proposed method reduces the beam searching complexity and latency while providing high SNR.
