Santu Rana

Fast Conditional Network Compression Using Bayesian HyperNetworks

May 13, 2022

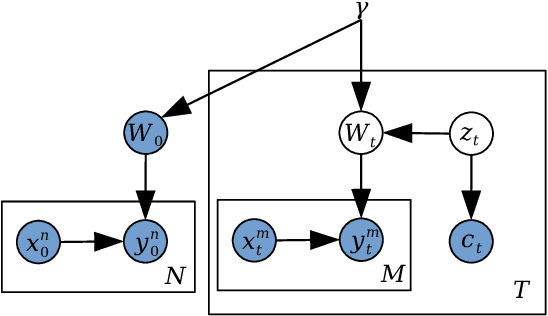

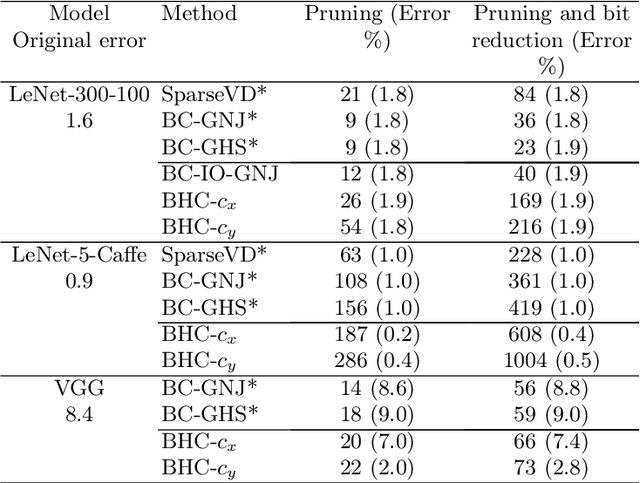

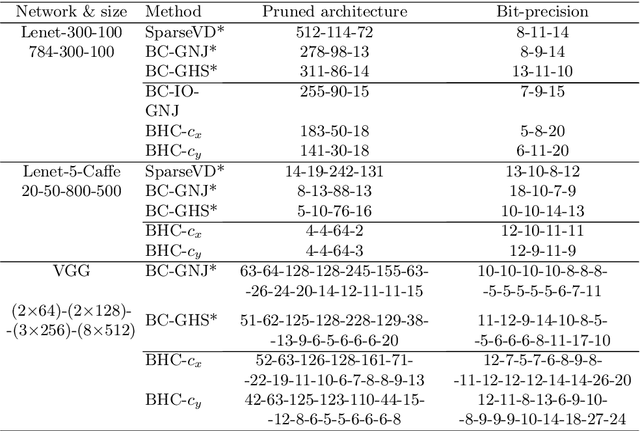

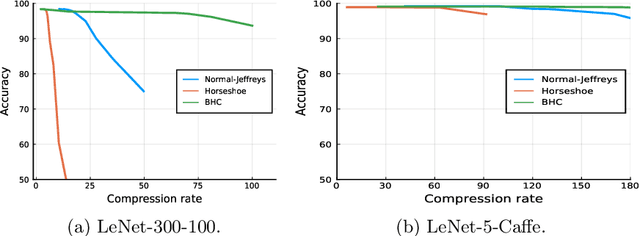

Abstract: We introduce a conditional compression problem and propose a fast framework for tackling it. The problem is how to quickly compress a pretrained large neural network into optimal smaller networks given target contexts, e.g., a context involving only a subset of classes or a context where only limited compute resources are available. To solve this, we propose an efficient Bayesian framework to compress a given large network into a much smaller size tailored to meet each contextual requirement. We employ a hypernetwork to parameterize the posterior distribution of weights given conditional inputs and minimize a variational objective of this Bayesian neural network. To further reduce the network sizes, we propose a new input-output group sparsity factorization of weights to encourage more sparseness in the generated weights. Our methods can quickly generate compressed networks with significantly smaller sizes than baseline methods.

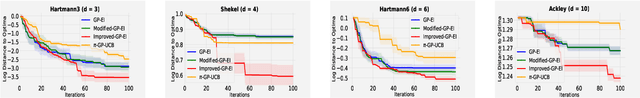

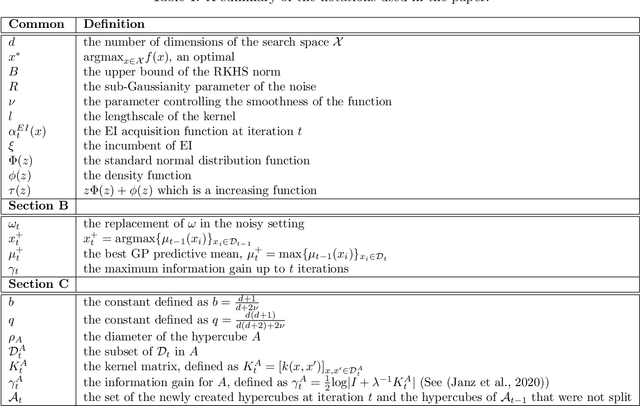

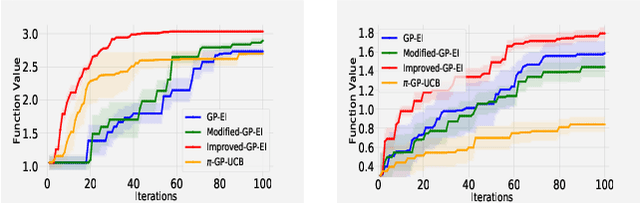

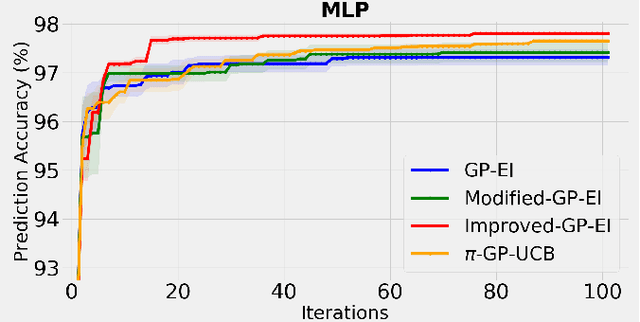

Regret Bounds for Expected Improvement Algorithms in Gaussian Process Bandit Optimization

Mar 15, 2022

Abstract: The expected improvement (EI) algorithm is one of the most popular strategies for optimization under uncertainty due to its simplicity and efficiency. Despite its popularity, the theoretical aspects of this algorithm have not been properly analyzed. In particular, whether the EI strategy with a standard incumbent converges in the noisy setting is still an open question in Gaussian process bandit optimization. We aim to answer this question by proposing a variant of EI with a standard incumbent defined via the GP predictive mean. We prove that our algorithm converges and achieves a cumulative regret bound of $\mathcal{O}(\gamma_T\sqrt{T})$, where $\gamma_T$ is the maximum information gain between $T$ observations and the Gaussian process model. Based on this variant of EI, we further propose an algorithm called Improved GP-EI that converges faster than previous counterparts. In particular, our proposed variants of EI do not require knowledge of the RKHS norm or the noise's sub-Gaussianity parameter, as previous works do. Empirical validation in our paper demonstrates the effectiveness of our algorithms compared to several baselines.
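As a rough illustration of the acquisition function discussed in this abstract, the sketch below computes the textbook closed-form EI for maximisation and an incumbent taken from the GP predictive mean. It is not the paper's algorithm: the function names `expected_improvement` and `mean_incumbent` are hypothetical, the GP posterior values are assumed to be supplied by some external model, and taking the incumbent as the largest predictive mean over the observed points is an assumption about what "defined via the GP predictive mean" could look like.

```python
import math


def expected_improvement(mu: float, sigma: float, incumbent: float) -> float:
    """Textbook closed-form EI (maximisation) for a Gaussian predictive N(mu, sigma^2)."""
    if sigma <= 0.0:
        # With no predictive uncertainty, the improvement is deterministic.
        return max(mu - incumbent, 0.0)
    z = (mu - incumbent) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))         # standard normal CDF
    return (mu - incumbent) * cdf + sigma * pdf


def mean_incumbent(posterior_means_at_observed):
    """Assumed incumbent: the largest GP predictive mean over the observed inputs."""
    return max(posterior_means_at_observed)


# Hypothetical posterior summaries (mean, standard deviation) at three candidate points.
candidates = {"a": (0.2, 0.05), "b": (0.1, 0.60), "c": (0.3, 0.00)}
incumbent = mean_incumbent([0.25, 0.30, 0.10])
best = max(candidates, key=lambda k: expected_improvement(*candidates[k], incumbent))
```

Using the predictive mean rather than the best noisy observation keeps the incumbent from being inflated by a single lucky sample, which is one intuition for why such a choice may matter in the noisy setting.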

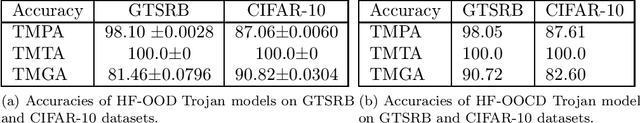

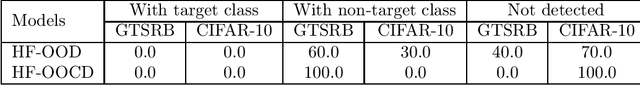

Towards Effective and Robust Neural Trojan Defenses via Input Filtering

Mar 08, 2022

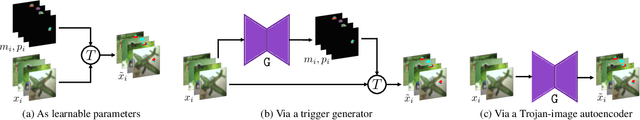

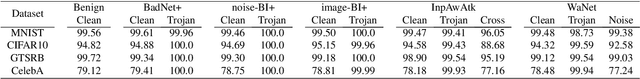

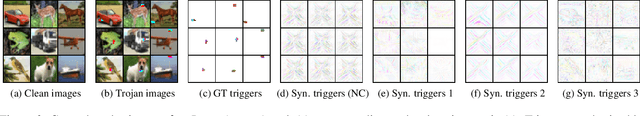

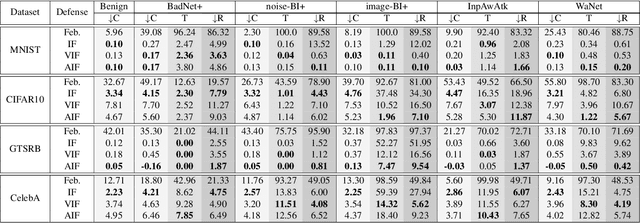

Abstract: Trojan attacks on deep neural networks are both dangerous and surreptitious. Over the past few years, Trojan attacks have advanced from using only a single input-agnostic trigger and targeting only one class to using multiple, input-specific triggers and targeting multiple classes. However, Trojan defenses have not caught up with this development. Most defense methods still make out-of-date assumptions about Trojan triggers and target classes, and thus can be easily circumvented by modern Trojan attacks. To deal with this problem, we propose two novel "filtering" defenses called Variational Input Filtering (VIF) and Adversarial Input Filtering (AIF), which leverage lossy data compression and adversarial learning, respectively, to effectively purify all potential Trojan triggers in the input at run time without making assumptions about the number of triggers/target classes or the input dependence property of triggers. In addition, we introduce a new defense mechanism called "Filtering-then-Contrasting" (FtC), which helps avoid the drop in classification accuracy on clean data caused by "filtering", and combine it with VIF/AIF to derive new defenses of this kind. Extensive experimental results and ablation studies show that our proposed defenses significantly outperform well-known baseline defenses in mitigating five advanced Trojan attacks, including two recent state-of-the-art attacks, while being quite robust to small amounts of training data and large-norm triggers.

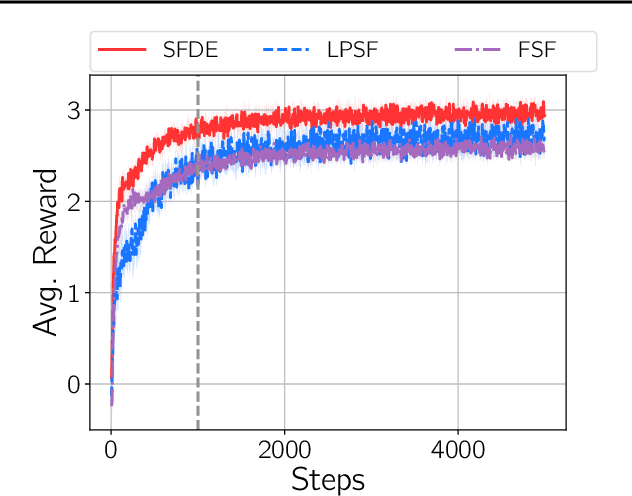

Uncertainty Aware System Identification with Universal Policies

Feb 11, 2022

Abstract: Sim2real transfer is primarily concerned with transferring policies trained in simulation to potentially noisy real-world environments. A common problem associated with sim2real transfer is estimating the real-world environmental parameters in which to ground the simulated environment. Although existing methods such as Domain Randomisation (DR) can produce robust policies by sampling from a distribution of parameters during training, there is no established method for identifying the parameters of the corresponding distribution for a given real-world setting. In this work, we propose Uncertainty-aware policy search (UncAPS), where we use a Universal Policy Network (UPN) to store simulation-trained task-specific policies across the full range of environmental parameters and then employ robust Bayesian optimisation to craft robust policies for the given environment by combining relevant UPN policies in a DR-like fashion. Such policy-driven grounding is expected to be more efficient as it estimates only task-relevant sets of parameters. Further, we also account for the estimation uncertainties in the search process to produce policies that are robust against both aleatoric and epistemic uncertainties. We empirically evaluate our approach in a range of noisy, continuous control environments, and show its improved performance compared to competing baselines.

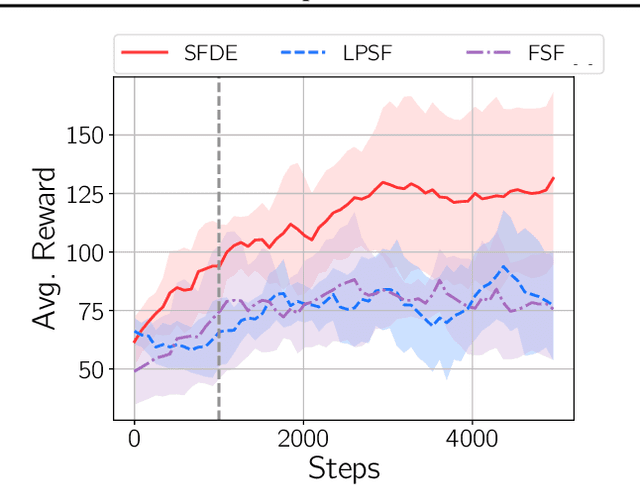

Fast Model-based Policy Search for Universal Policy Networks

Feb 11, 2022

Abstract: Adapting an agent's behaviour to new environments has been one of the primary focus areas of physics-based reinforcement learning. Although recent approaches such as universal policy networks partially address this issue by enabling the storage of multiple policies trained in simulation on a wide range of dynamic/latent factors, efficiently identifying the most appropriate policy for a given environment remains a challenge. In this work, we propose a Gaussian Process-based prior learned in simulation that captures the likely performance of a policy when transferred to a previously unseen environment. We integrate this prior with a Bayesian Optimisation-based policy search process to improve the efficiency of identifying the most appropriate policy from the universal policy network. We empirically evaluate our approach in a range of continuous and discrete control environments, and show that it outperforms other competing baselines.

Balanced Q-learning: Combining the Influence of Optimistic and Pessimistic Targets

Nov 03, 2021

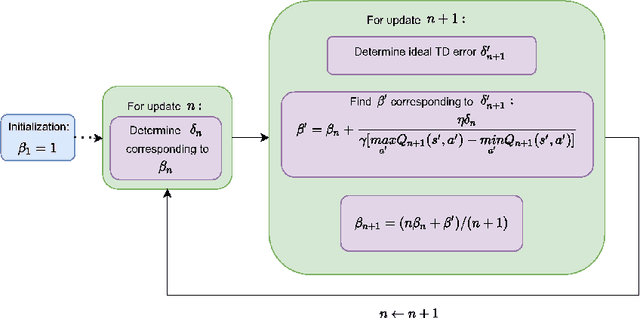

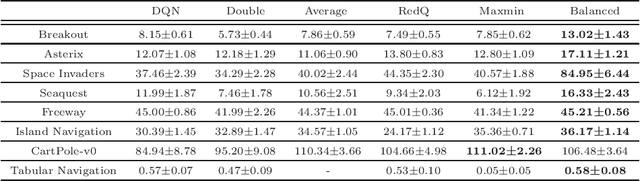

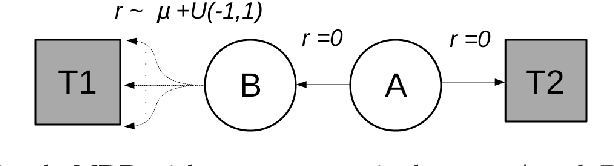

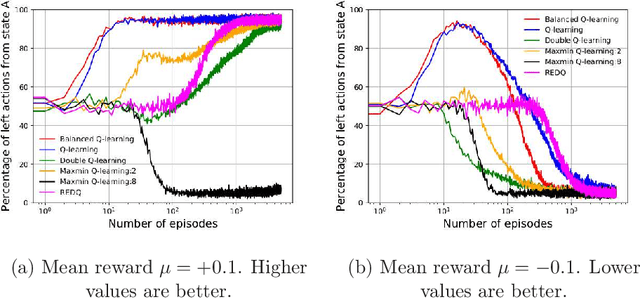

Abstract: The optimistic nature of the Q-learning target leads to an overestimation bias, which is an inherent problem associated with standard $Q$-learning. Such a bias fails to account for the possibility of low returns, particularly in risky scenarios. However, the existence of biases, whether overestimation or underestimation, need not necessarily be undesirable. In this paper, we analytically examine the utility of biased learning, and show that specific types of biases may be preferable, depending on the scenario. Based on this finding, we design a novel reinforcement learning algorithm, Balanced Q-learning, in which the target is modified to be a convex combination of a pessimistic and an optimistic term, whose associated weights are determined online, analytically. We prove the convergence of this algorithm in a tabular setting, and empirically demonstrate its superior learning performance in various environments.
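To make the idea of a convex-combination target concrete, here is a minimal tabular sketch. It should be read as an illustration under stated assumptions rather than the paper's algorithm: the abstract does not specify the pessimistic term or how the weights are computed, so this sketch assumes the pessimistic term is the minimum next-state action value and treats the mixing weight `beta` as a fixed input instead of deriving it online. The names `balanced_target` and `q_update` are hypothetical.

```python
def balanced_target(reward, next_q_values, beta, gamma=0.99):
    """Target mixing an optimistic and a pessimistic bootstrap term.

    beta in [0, 1] weights the optimistic term; beta = 1 recovers the usual
    Q-learning target. Using min over actions as the pessimistic term is an
    assumption made for this sketch.
    """
    optimistic = max(next_q_values)
    pessimistic = min(next_q_values)
    return reward + gamma * (beta * optimistic + (1.0 - beta) * pessimistic)


def q_update(q, state, action, reward, next_state, beta, alpha=0.1, gamma=0.99):
    """One tabular update of q[state][action] towards the balanced target."""
    target = balanced_target(reward, q[next_state], beta, gamma)
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


# Two states, two actions; q maps each state to a list of action values.
q = {0: [0.0, 0.0], 1: [0.0, 2.0]}
new_value = q_update(q, state=0, action=1, reward=1.0, next_state=1,
                     beta=0.5, alpha=0.5, gamma=0.5)
```

With `beta = 0.5` the bootstrap term sits halfway between the best and worst next-state values, so the update is less optimistic than standard Q-learning and less conservative than a purely pessimistic rule.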

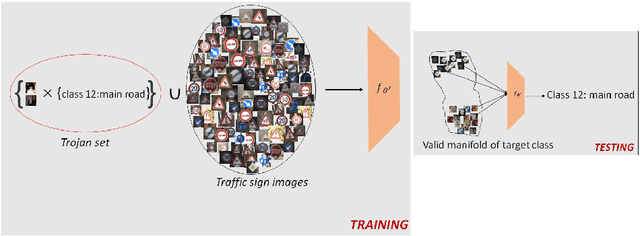

Semantic Host-free Trojan Attack

Oct 26, 2021

Abstract: In this paper, we propose a novel host-free Trojan attack with triggers that are fixed in the semantic space but not necessarily in the pixel space. In contrast to existing Trojan attacks, which use clean input images as hosts to carry small, meaningless trigger patterns, our attack considers triggers as full-sized images belonging to a semantically meaningful object class. Since in our attack the backdoored classifier is encouraged to memorize the abstract semantics of the trigger images rather than any specific fixed pattern, it can later be triggered by semantically similar but different-looking images. This makes our attack more practical to apply in the real world and harder to defend against. Extensive experimental results demonstrate that with only a small number of Trojan patterns for training, our attack can generalize well to new patterns of the same Trojan class and can bypass state-of-the-art defense methods.

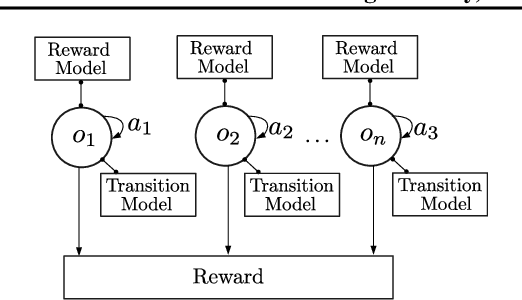

Plug and Play, Model-Based Reinforcement Learning

Aug 20, 2021

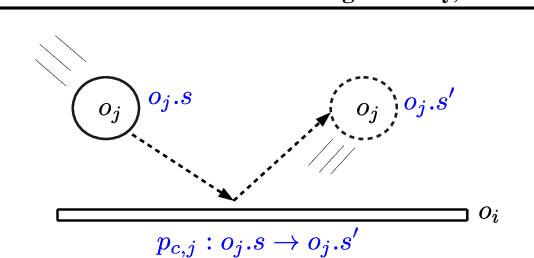

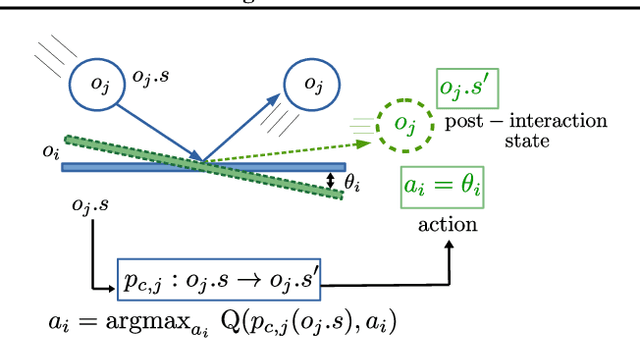

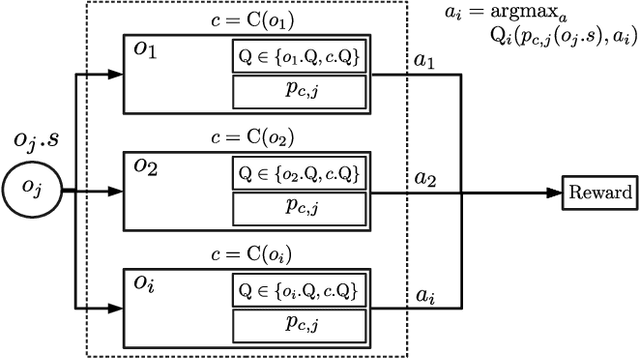

Abstract: Sample-efficient generalisation of reinforcement learning approaches has always been a challenge, especially for complex scenes with many components. In this work, we introduce Plug and Play Markov Decision Processes, an object-based representation that allows zero-shot integration of new objects from known object classes. This is achieved by representing the global transition dynamics as a union of local transition functions, each with respect to one active object in the scene. Transition dynamics from an object class can be pre-learnt and thus would be ready to use in a new environment. Each active object is also endowed with its own reward function. Since there is no central reward function, addition or removal of objects can be handled efficiently by updating only the reward functions of the objects involved. A new transfer learning mechanism is also proposed to adapt the reward function in such cases. Experiments show that our representation can achieve sample efficiency in a variety of set-ups.

Combining Online Learning and Offline Learning for Contextual Bandits with Deficient Support

Jul 24, 2021

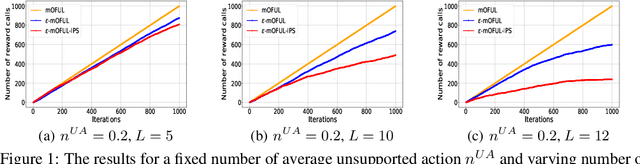

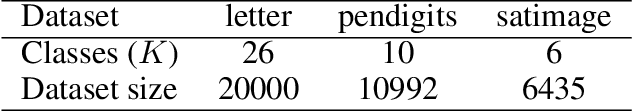

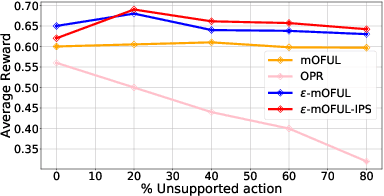

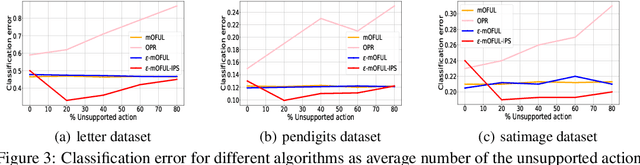

Abstract: We address policy learning with logged data in contextual bandits. Current offline-policy learning algorithms are mostly based on inverse propensity score (IPS) weighting, which requires the logging policy to have *full support*, i.e., a non-zero probability for any context/action of the evaluation policy. However, many real-world systems do not guarantee such logging policies, especially when the action space is large and many actions have poor or missing rewards. With such *support deficiency*, offline learning fails to find optimal policies. We propose a novel approach that uses a hybrid of offline learning and online exploration. The online exploration is used to explore unsupported actions in the logged data, whilst offline learning is used to exploit supported actions from the logged data, avoiding unnecessary exploration. Our approach determines an optimal policy with theoretical guarantees using the minimal number of online explorations. We demonstrate our algorithms' effectiveness empirically on a diverse collection of datasets.
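The IPS weighting and the support issue mentioned in this abstract can be sketched as follows. This is the standard IPS value estimator, not the hybrid method the paper proposes; the log format `(context, action, reward, logging_prob)` and the names `ips_value` and `unsupported_actions` are assumptions made for illustration, and support is checked per action only, ignoring context, to keep the example short.

```python
def ips_value(logs, target_policy):
    """Standard IPS estimate of a target policy's value from logged bandit data.

    logs: list of (context, action, reward, logging_prob) tuples, where
    logging_prob is the logging policy's probability of the logged action.
    target_policy(context, action) returns the target policy's probability.
    """
    total = 0.0
    for context, action, reward, logging_prob in logs:
        if logging_prob <= 0.0:
            raise ValueError("logged actions must have positive logging probability")
        total += target_policy(context, action) / logging_prob * reward
    return total / len(logs)


def unsupported_actions(logs, all_actions):
    """Actions that never appear in the logs (a simplified, context-free view of support)."""
    logged = {action for _, action, _, _ in logs}
    return set(all_actions) - logged


# Hypothetical logs: a uniform logging policy over actions 0 and 1; action 2 is never tried.
logs = [("x", 0, 1.0, 0.5), ("x", 1, 0.0, 0.5)]
always_zero = lambda context, action: 1.0 if action == 0 else 0.0
always_two = lambda context, action: 1.0 if action == 2 else 0.0

value_supported = ips_value(logs, always_zero)
value_unsupported = ips_value(logs, always_two)
missing = unsupported_actions(logs, [0, 1, 2])
```

A policy that always plays the never-logged action 2 receives an estimate of zero regardless of its true reward, because no logged sample carries any weight for it. That is the kind of failure under support deficiency that motivates exploring unsupported actions online.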

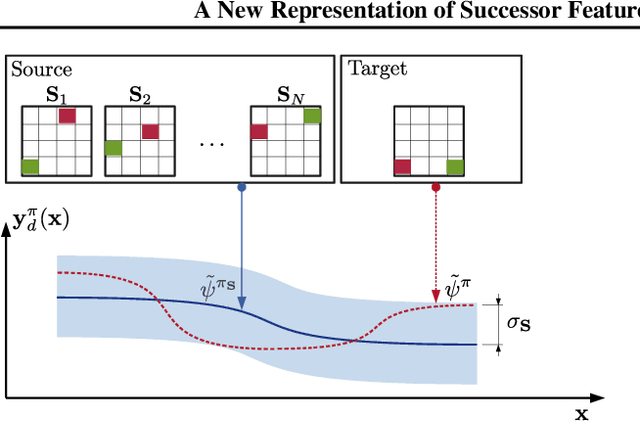

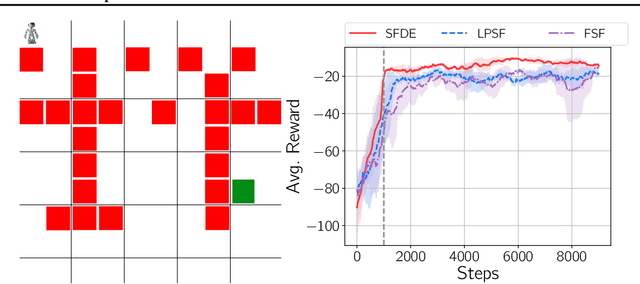

A New Representation of Successor Features for Transfer across Dissimilar Environments

Jul 18, 2021

Abstract: Transfer in reinforcement learning is usually achieved through generalisation across tasks. Whilst many studies have investigated transferring knowledge when the reward function changes, they have assumed that the dynamics of the environments remain consistent. Many real-world RL problems require transfer among environments with different dynamics. To address this problem, we propose an approach based on successor features in which we model successor feature functions with Gaussian Processes, permitting the source successor features to be treated as noisy measurements of the target successor feature function. Our theoretical analysis proves the convergence of this approach as well as the bounded error on modelling successor feature functions with Gaussian Processes in environments with both different dynamics and rewards. We demonstrate our method on benchmark datasets and show that it outperforms current baselines.
