Runyuan Cai

Unifying Speech Recognition, Synthesis and Conversion with Autoregressive Transformers

Jan 15, 2026

Abstract: Traditional speech systems typically rely on separate, task-specific models for text-to-speech (TTS), automatic speech recognition (ASR), and voice conversion (VC), resulting in fragmented pipelines that limit scalability, efficiency, and cross-task generalization. In this paper, we present General-Purpose Audio (GPA), a unified audio foundation model that integrates multiple core speech tasks within a single large language model (LLM) architecture. GPA operates on a shared discrete audio token space and supports instruction-driven task induction, enabling a single autoregressive model to flexibly perform TTS, ASR, and VC without architectural modifications. This unified design combines a fully autoregressive formulation over discrete speech tokens, joint multi-task training across speech domains, and a scalable inference pipeline that achieves high concurrency and throughput. The resulting model family supports efficient multi-scale deployment, including a lightweight 0.3B-parameter variant optimized for edge and resource-constrained environments. Together, these design choices demonstrate that a unified autoregressive architecture can achieve competitive performance across diverse speech tasks while remaining viable for low-latency, practical deployment.

FreqNet: A Frequency-domain Image Super-Resolution Network with Discrete Cosine Transform

Nov 21, 2021

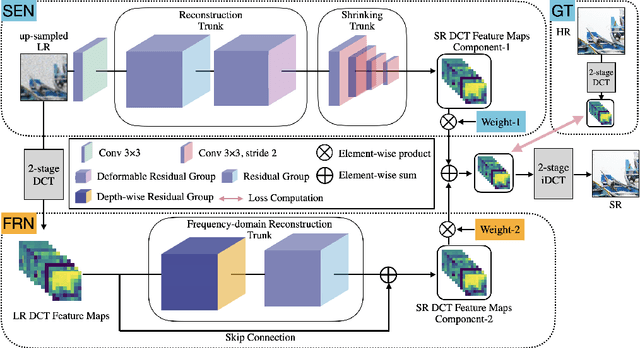

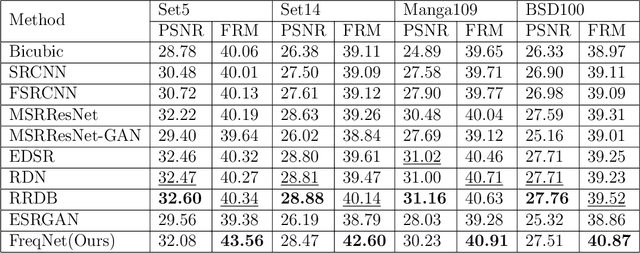

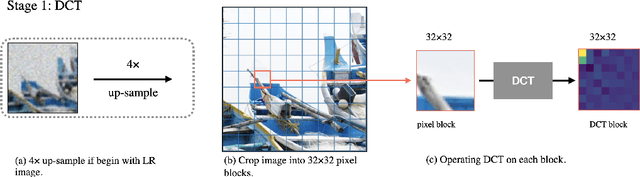

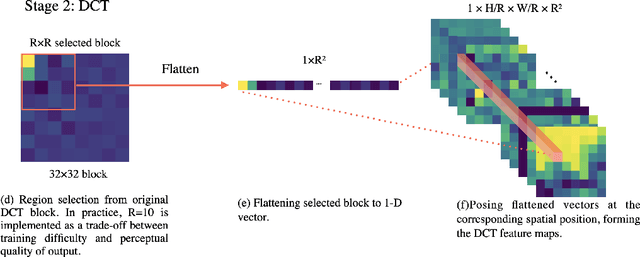

Abstract: Single image super-resolution (SISR) is an ill-posed problem that aims to recover a high-resolution (HR) output from a low-resolution (LR) input, a process in which extra high-frequency information must be added to improve perceptual quality. Existing SISR works mainly operate in the spatial domain by minimizing the mean squared reconstruction error. Despite their high peak signal-to-noise ratio (PSNR) results, it is difficult to determine whether the model correctly adds the desired high-frequency details. Some residual-based structures have been proposed to guide the model to focus on high-frequency features implicitly. However, verifying the fidelity of those artificial details remains a problem, since spatial-domain metrics offer limited interpretability. In this paper, we propose FreqNet, an intuitive pipeline from the frequency-domain perspective, to solve this problem. Inspired by existing frequency-domain works, we convert images into discrete cosine transform (DCT) blocks, then rearrange them to obtain DCT feature maps, which serve as the input and target of our model. A specialized pipeline is designed, and we further propose a frequency loss function suited to our frequency-domain task. Our frequency-domain SISR method can learn high-frequency information explicitly and provides both fidelity and good perceptual quality for the SR images. We further observe that our model can be merged with other spatial super-resolution models to enhance the quality of their original SR output.
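The step of converting an image into DCT blocks and rearranging them into feature maps can be illustrated with a minimal sketch. This is not the authors' implementation: the 8x8 block size, the one-channel-per-frequency layout, and the function names `dct_matrix`, `image_to_dct_maps`, and `dct_maps_to_image` are all assumptions chosen for illustration, and the abstract does not specify any of them.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Return the n x n orthonormal DCT-II basis matrix."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)  # DC row uses a different scale factor
    return m


def image_to_dct_maps(img: np.ndarray, block: int = 8) -> np.ndarray:
    """Split a grayscale image into blocks, apply a 2-D DCT to each block,
    and rearrange so that each frequency becomes one feature map.

    Assumed layout (hypothetical): output shape is
    (block * block, H / block, W / block).
    """
    h, w = img.shape
    if h % block or w % block:
        raise ValueError("image sides must be multiples of the block size")
    d = dct_matrix(block)
    # (H, W) -> (H/b, W/b, b, b): one b x b tile per spatial position
    tiles = img.reshape(h // block, block, w // block, block).transpose(0, 2, 1, 3)
    coeffs = d @ tiles @ d.T  # 2-D DCT applied to every tile at once
    # Flatten each tile's coefficients into the channel axis
    return coeffs.reshape(h // block, w // block, block * block).transpose(2, 0, 1)


def dct_maps_to_image(maps: np.ndarray, block: int = 8) -> np.ndarray:
    """Invert image_to_dct_maps: feature maps back to a spatial image."""
    _, hb, wb = maps.shape
    d = dct_matrix(block)
    coeffs = maps.transpose(1, 2, 0).reshape(hb, wb, block, block)
    tiles = d.T @ coeffs @ d  # inverse of the orthonormal 2-D DCT
    return tiles.transpose(0, 2, 1, 3).reshape(hb * block, wb * block)


if __name__ == "__main__":
    image = np.random.default_rng(0).random((32, 32))
    maps = image_to_dct_maps(image)
    print(maps.shape)  # one feature map per DCT frequency
    print(np.allclose(dct_maps_to_image(maps), image))  # round-trip check
```

Under this assumed layout, channel 0 holds the DC (average) component of every block and higher channels hold progressively higher frequencies, so a model operating on these maps sees high-frequency content as separate, explicit inputs and targets.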
