Robert E. Hillman

Classifying Phonotrauma Severity from Vocal Fold Images with Soft Ordinal Regression

Nov 12, 2025

Abstract: Phonotrauma refers to vocal fold tissue damage resulting from exposure to forces during voicing. It occurs on a continuum from mild to severe, and treatment options can vary based on severity. Assessment of severity involves a clinician's expert judgment, which is costly and can vary widely in reliability. In this work, we present the first method for automatically classifying phonotrauma severity from vocal fold images. To account for the ordinal nature of the labels, we adopt a widely used ordinal regression framework. To account for label uncertainty, we propose a novel modification to ordinal regression loss functions that enables them to operate on soft labels reflecting annotator rating distributions. Our proposed soft ordinal regression method achieves predictive performance approaching that of clinical experts, while producing well-calibrated uncertainty estimates. By providing an automated tool for phonotrauma severity assessment, our work can enable large-scale studies of phonotrauma, ultimately leading to improved clinical understanding and patient care.
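The abstract does not spell out the loss, so the following is only a minimal sketch of one way an ordinal regression loss could operate on soft labels. It assumes a cumulative-threshold formulation (K - 1 binary tasks of the form "severity > k") and replaces the usual hard 0/1 targets with tail probabilities taken from an annotator rating distribution. The function names and this particular formulation are illustrative assumptions, not the authors' method.

```python
import math

def soft_cumulative_targets(rating_probs):
    """Turn a distribution over K ordered classes into K-1 targets P(y > k).

    Assumption: rating_probs sums to 1 and is ordered from mild to severe.
    """
    targets = []
    tail = 1.0
    for p in rating_probs[:-1]:
        tail -= p
        # Clamp to [0, 1] to absorb floating-point round-off.
        targets.append(max(0.0, min(1.0, tail)))
    return targets

def soft_ordinal_bce(logits, rating_probs):
    """Mean binary cross-entropy between K-1 threshold logits and soft targets."""
    targets = soft_cumulative_targets(rating_probs)
    if len(logits) != len(targets):
        raise ValueError("expected one logit per ordinal threshold (K - 1)")
    total = 0.0
    for z, t in zip(logits, targets):
        # Numerically stable BCE-with-logits: max(z, 0) - z*t + log(1 + exp(-|z|))
        total += max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
    return total / len(targets)

# Hypothetical example: three severity levels, raters split 25% / 50% / 25%.
example_targets = soft_cumulative_targets([0.25, 0.5, 0.25])
example_loss = soft_ordinal_bce([1.0, -1.0], [0.25, 0.5, 0.25])
```

With a one-hot rating distribution the targets reduce to the usual hard ordinal encoding, so under these assumptions the soft version is a strict generalization of the hard-label loss.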

Effects of Recording Condition and Number of Monitored Days on Discriminative Power of the Daily Phonotrauma Index

Sep 04, 2024

Abstract: Objective: The Daily Phonotrauma Index (DPI) can quantify pathophysiological mechanisms associated with daily voice use in individuals with phonotraumatic vocal hyperfunction (PVH). Since DPI was developed based on week-long ambulatory voice monitoring, this study investigated whether DPI can achieve comparable performance using (1) short laboratory speech tasks and (2) fewer than seven days of ambulatory data. Method: An ambulatory voice monitoring system recorded the vocal function/behavior of 134 females with PVH and vocally healthy matched controls in two different conditions. In the lab, the participants read the first paragraph of the Rainbow Passage and produced spontaneous speech (in-lab data). They were then monitored for seven days (in-field data). Separate DPI models were trained from in-lab and in-field data using the standard deviation of the difference between the magnitudes of the first two harmonics (H1-H2) and the skewness of neck-surface acceleration magnitude. First, 10-fold cross-validation evaluated classification performance of in-lab and in-field DPIs. Second, the effect of the number of ambulatory monitoring days on the accuracy of in-field DPI classification was quantified. Results: The average in-lab DPI accuracies computed from the Rainbow Passage and spontaneous speech were 57.9% and 48.9%, respectively, which are close to chance performance. The average classification accuracy of in-field DPI was significantly higher, with a very large effect size (73.4%, Cohen's d = 1.8). The average in-field DPI accuracy increased from 66.5% for one day to 75.0% for seven days, with the gain in accuracy from including an additional day dropping below 1 percentage point after 4 days.
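The two DPI inputs named in the abstract are summary statistics, and a rough sketch of how they could be computed from per-frame measurements is given below. The abstract does not say which estimators were used, so the sample standard deviation (n - 1 denominator) and the population skewness used here are assumptions, as are the function names. How the two features are combined into a DPI score is not shown.

```python
import math

def std_dev(values):
    """Sample standard deviation (n - 1 denominator); an assumed estimator."""
    n = len(values)
    mean = sum(values) / n
    return math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))

def skewness(values):
    """Population skewness: third central moment divided by variance^(3/2)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

def dpi_features(h1_h2_db, accel_magnitude):
    """Return (std of H1-H2, skewness of acceleration magnitude) for one recording.

    Both arguments are hypothetical per-frame sequences of voiced-frame values.
    """
    return std_dev(h1_h2_db), skewness(accel_magnitude)

# Hypothetical toy values, not data from the study.
features = dpi_features([2.0, 4.0, 6.0], [0.0, 0.0, 0.0, 4.0])
```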

Toward Generalizable Machine Learning Models in Speech, Language, and Hearing Sciences: Sample Size Estimation and Reducing Overfitting

Aug 30, 2023

Abstract: This study's first purpose is to provide quantitative evidence that would incentivize researchers to instead use the more robust method of nested cross-validation. The second purpose is to present methods and MATLAB codes for doing power analysis for ML-based analysis during the design of a study. Monte Carlo simulations were used to quantify the interactions between the employed cross-validation method, the discriminative power of features, the dimensionality of the feature space, and the dimensionality of the model. Four different cross-validations (single holdout, 10-fold, train-validation-test, and nested 10-fold) were compared based on the statistical power and statistical confidence of the ML models. Distributions of the null and alternative hypotheses were used to determine the minimum required sample size for obtaining a statistically significant outcome ($\alpha = 0.05$, $1 - \beta = 0.8$). Statistical confidence of the model was defined as the probability of correct features being selected and hence being included in the final model. Our analysis showed that the model generated based on the single holdout method had very low statistical power and statistical confidence and that it significantly overestimated the accuracy. Conversely, the nested 10-fold cross-validation resulted in the highest statistical confidence and the highest statistical power, while providing an unbiased estimate of the accuracy. The required sample size with a single holdout could be 50% higher than what would be needed if nested cross-validation were used. Confidence in the model based on nested cross-validation was as much as four times higher than the confidence in the single holdout-based model. A computational model, MATLAB codes, and lookup tables are provided to assist researchers with estimating the sample size during the design of their future studies.
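To make the nested scheme concrete, here is a small sketch of how nested k-fold index splits can be generated. It is written in Python rather than the MATLAB the authors provide and is not taken from their code. The helper names are hypothetical, and the folds are strided without shuffling for simplicity. The key property is that feature and hyperparameter selection see only the inner splits, so each outer test fold stays untouched until the final accuracy estimate.

```python
def k_fold(indices, k):
    """Split a list of indices into k strided folds of near-equal size."""
    return [indices[i::k] for i in range(k)]

def nested_cv_splits(n_samples, outer_k=10, inner_k=10):
    """Yield (outer_train, outer_test, inner_splits) for nested cross-validation.

    inner_splits is a list of (fit, validation) index pairs drawn only from
    outer_train, so model selection never touches the outer test fold.
    """
    all_idx = list(range(n_samples))
    for test_idx in k_fold(all_idx, outer_k):
        held_out = set(test_idx)
        train_idx = [i for i in all_idx if i not in held_out]
        inner_splits = []
        for val_idx in k_fold(train_idx, inner_k):
            val = set(val_idx)
            fit_idx = [i for i in train_idx if i not in val]
            inner_splits.append((fit_idx, val_idx))
        yield train_idx, test_idx, inner_splits

# Hypothetical usage: 20 samples, 5 outer folds, 4 inner folds.
splits = list(nested_cv_splits(20, outer_k=5, inner_k=4))
```

In practice the indices would usually be shuffled (and stratified by class) before splitting; that step is omitted here to keep the sketch deterministic.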

Triangular body-cover model of the vocal folds with coordinated activation of five intrinsic laryngeal muscles with applications to vocal hyperfunction

Aug 02, 2021

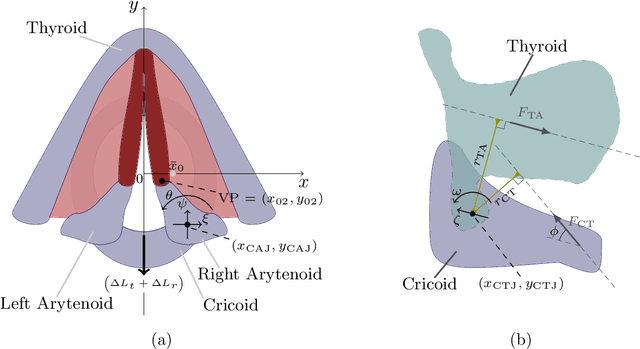

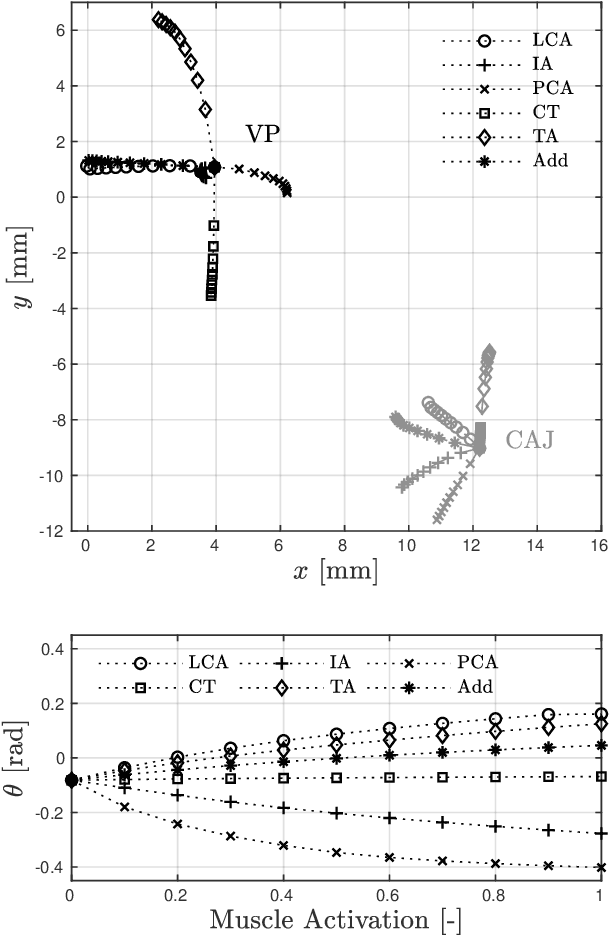

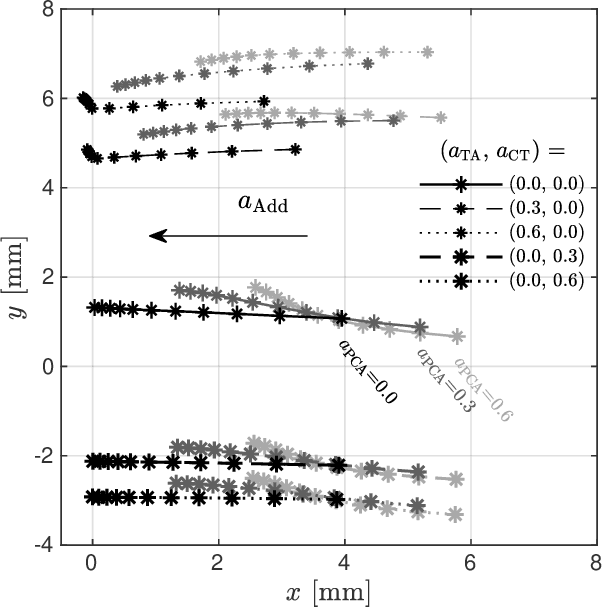

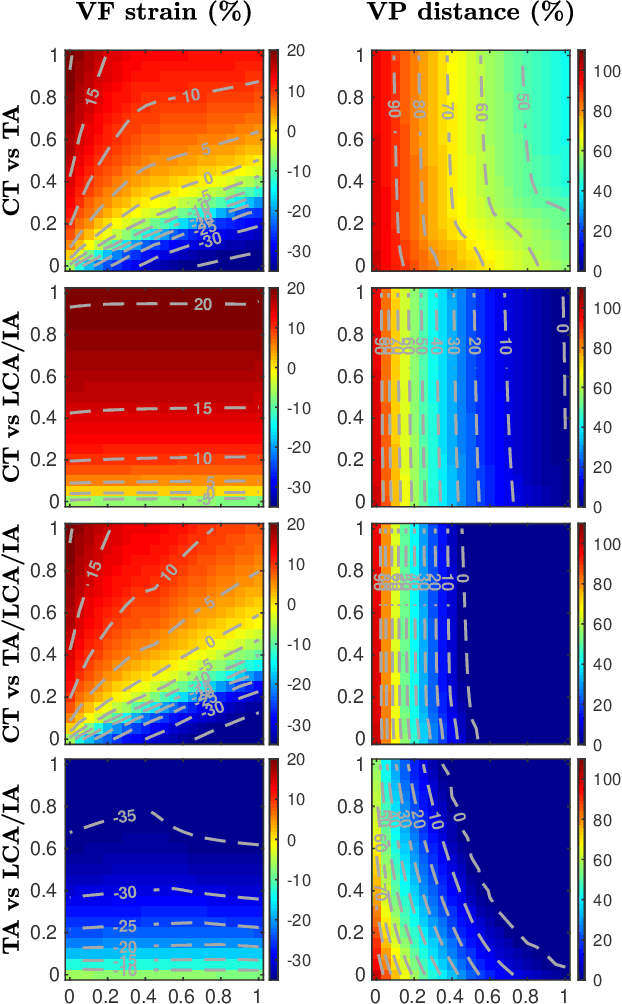

Abstract: Poor laryngeal muscle coordination that results in abnormal glottal posturing is believed to be a primary etiologic factor in common voice disorders such as non-phonotraumatic vocal hyperfunction. An imbalance in the activity of antagonistic laryngeal muscles is hypothesized to play a key role in the alteration of normal vocal fold biomechanics that results in the dysphonia associated with such disorders. Current low-order models are inadequate for testing this hypothesis because they do not capture the co-contraction of antagonist laryngeal muscle pairs. To address this limitation, a scheme for controlling a self-sustained triangular body-cover model with intrinsic muscle control is introduced. The approach builds upon prior efforts and allows for exploring the role of antagonistic muscle pairs in phonation. The proposed scheme is illustrated through its ample agreement with prior studies using finite element models, excised larynges, and clinical studies in sustained and time-varying vocal gestures. Pilot simulations of abnormal scenarios illustrated that poorly regulated and elevated muscle activities result in more abducted prephonatory posturing, which leads to inefficient phonation and subglottal pressure compensation to regain loudness. The proposed tool is deemed sufficiently accurate and flexible for future comprehensive investigations of non-phonotraumatic vocal hyperfunction and other laryngeal motor control disorders.

Uncovering Voice Misuse Using Symbolic Mismatch

Aug 08, 2016

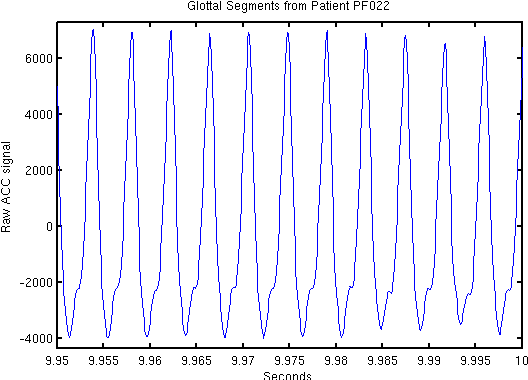

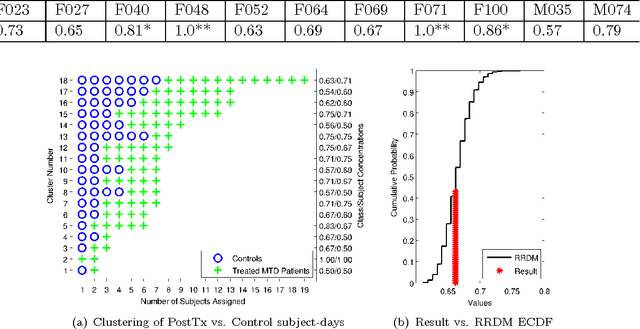

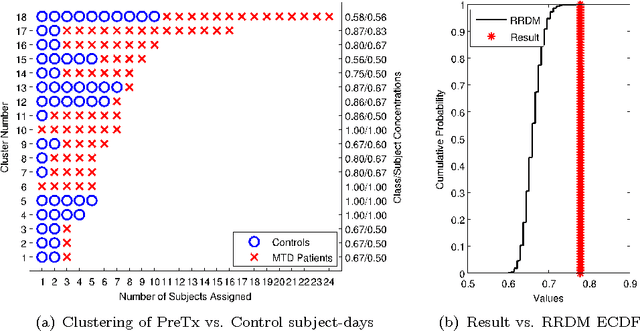

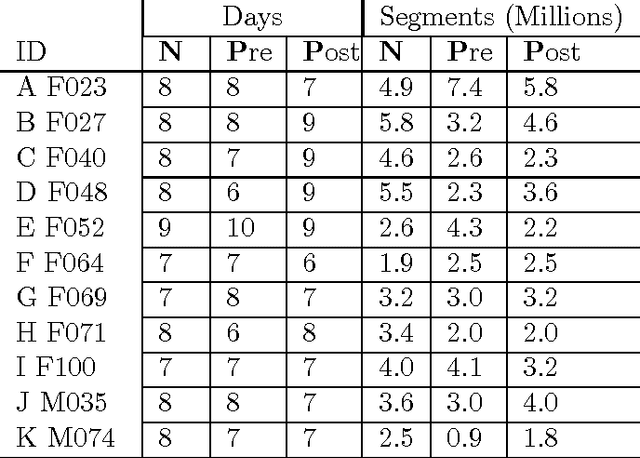

Abstract: Voice disorders affect an estimated 14 million working-aged Americans, and many more worldwide. We present the first large-scale study of vocal misuse based on long-term ambulatory data collected by an accelerometer placed on the neck. We investigate an unsupervised data mining approach to uncovering latent information about voice misuse. We segment signals from over 253 days of data from 22 subjects into over a hundred million single glottal pulses (closures of the vocal folds), cluster segments into symbols, and use symbolic mismatch to uncover differences between patients and matched controls, and between patients pre- and post-treatment. Our results show significant behavioral differences between patients and controls, as well as between some pre- and post-treatment patients. Our proposed approach provides an objective basis for helping diagnose behavioral voice disorders, and is a first step towards a more data-driven understanding of the impact of voice therapy.
