Ricardo Sosa

Towards Senior-Robot Interaction: Reactive Robot Dog Gestures

Dec 22, 2025

Abstract: As the global population ages, many seniors face loneliness. Companion robots offer a potential solution. However, current companion robots often lack advanced functionality, while task-oriented robots are not designed for social interaction, which limits their suitability for, and acceptance by, seniors. Our work introduces a senior-oriented system for quadruped robots that allows more intuitive user input and provides more socially expressive output. For input, we implemented a MediaPipe-based module for hand-gesture and head-movement recognition, enabling control without a remote. For output, we designed and trained robot dog gestures using curriculum-based reinforcement learning in Isaac Gym, progressing from simple standing to three-legged balancing, leg extensions, and other gestures. The final tests achieved an average success rate above 95% in simulation, and we validated a key social gesture (the paw lift) on a Unitree robot. Real-world tests demonstrated the feasibility and social expressiveness of this framework, while also revealing sim-to-real challenges in joint compliance, load distribution, and balance control. These contributions advance the development of practical quadruped robots as social companions for seniors, outline pathways for sim-to-real adaptation, and inform future user studies.
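
The abstract describes a curriculum that moves the policy from standing to progressively harder gestures. A minimal sketch of one common way to schedule such a curriculum follows, assuming the learner is promoted to the next stage once its rolling success rate over a fixed window of episodes reaches a threshold. The `Curriculum` class, the `threshold` and `window` values, and the promotion rule are hypothetical; this is not the authors' Isaac Gym code, and the reinforcement-learning update itself is omitted.

```python
from collections import deque


class Curriculum:
    """Promote the learner through gesture stages based on recent success.

    Stage names come from the caller; `threshold` and `window` are
    hypothetical tuning parameters, not values from the paper.
    """

    def __init__(self, stages, threshold=0.8, window=100):
        self.stages = list(stages)
        self.threshold = threshold
        self.window = window
        self.index = 0
        self.history = deque(maxlen=window)  # outcomes of the most recent episodes

    @property
    def stage(self):
        return self.stages[self.index]

    def record(self, success):
        """Log one episode outcome and return the (possibly new) current stage."""
        self.history.append(bool(success))
        window_full = len(self.history) == self.window
        rate = sum(self.history) / len(self.history)
        if window_full and rate >= self.threshold and self.index < len(self.stages) - 1:
            self.index += 1
            self.history.clear()  # judge the new stage only on its own episodes
        return self.stage


if __name__ == "__main__":
    cur = Curriculum(["stand", "three_leg_balance", "leg_extension"], window=10)
    for _ in range(10):
        cur.record(True)
    print(cur.stage)  # three_leg_balance
```

Clearing the history on promotion means each stage is judged only on episodes played at that stage, so a strong record on standing cannot carry the learner through three-legged balancing.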

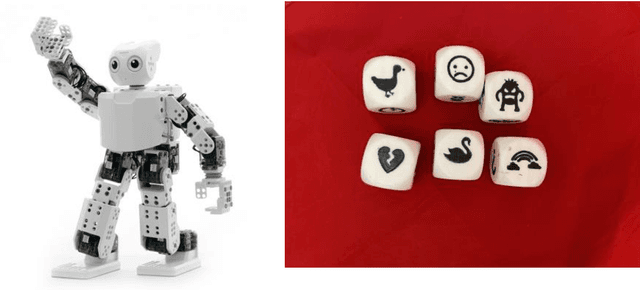

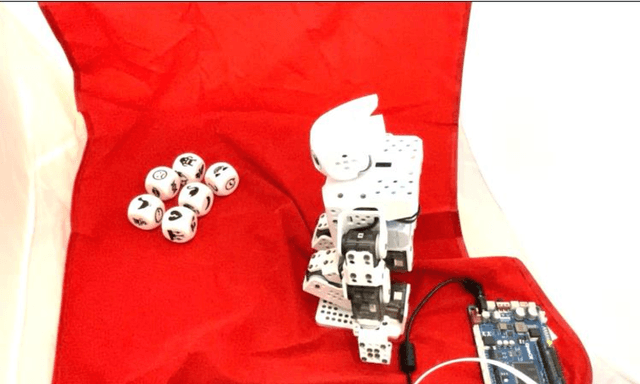

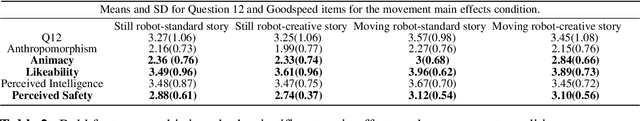

Human-Robot Creative Interactions (HRCI): Exploring Creativity in Artificial Agents Using a Story-Telling Game

Feb 08, 2022

Abstract: Creativity in social robots requires further attention in the interdisciplinary field of Human-Robot Interaction (HRI). This paper investigates the hypothesised connection between the perceived creative agency and the animacy of social robots. The goal of this work is to assess the relevance of robot movements in the attribution of creativity to robots, and its results inform the design of future Human-Robot Creative Interactions (HRCI). The study uses a storytelling game based on visual imagery, inspired by the game 'Story Cubes', to explore the perceived creative agency of social robots. The game is used to tell a classic children's story with an alternative ending. A 2x2 experiment was designed that crossed two factors, the robot's movements and the story: in one story condition the robot tells the original version, and in the other it plot-twists the ending. A Robotis Mini humanoid robot was used for the experiment. As a novel contribution, we propose an adaptation of the Short Scale Creative Self scale (SSCS) to measure perceived creative agency in robots. We also use the Godspeed scale to explore different attributes of social robots in this setting. We did not obtain significant main effects of the robot movements or the story on the participants' scores. However, we identified significant main effects of the robot movements on the Godspeed dimensions of animacy, likeability, and perceived safety. This initial work encourages further studies with different robot embodiments and movements to evaluate the perceived creative agency in robots and to inform the design of future robots that participate in creative interactions.

A Decision Support Design Framework for Selecting a Robotic Interface

Feb 08, 2022

Abstract: The design and development of robots involve the essential step of selecting and testing robotic interfaces. This selection requires careful consideration, because the robot's physical embodiment influences and adds to the complexities of traditional interfaces. Our paper presents a decision support design framework for the a priori selection of a robotic interface, inductively formulated from our case study of designing a robot to collaborate with employees with cognitive disabilities. The framework outlines interface requirements according to the User, Robot, Tasks, and Environment, and facilitates a structured comparison of interfaces against those requirements. We assessed the framework's potential applicability and usefulness through a qualitative study with HRI experts. The experts appreciated it as a systematic tool that enables documentation and discussion, and the issues they identified inform the framework's next iteration. We also discuss the ownership of this process in interdisciplinary teams and its role in iteratively designing interfaces.
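
The abstract does not say whether the structured comparison is numeric. The sketch below assumes a weighted decision matrix: each requirement belongs to one of the four categories named in the abstract (User, Robot, Tasks, Environment), carries a hypothetical weight, and each candidate interface receives a rating between 0 and 1 per requirement. `Requirement`, `score_interfaces`, `rank`, and the weighting scheme are illustrative assumptions, not the authors' framework.

```python
from dataclasses import dataclass

# The four requirement categories named in the framework.
CATEGORIES = ("User", "Robot", "Tasks", "Environment")


@dataclass(frozen=True)
class Requirement:
    category: str  # one of CATEGORIES
    name: str
    weight: float  # relative importance on a hypothetical scale


def score_interfaces(requirements, ratings):
    """Weighted decision matrix over candidate interfaces.

    `ratings` maps interface name -> {requirement name -> rating in [0, 1]}.
    A missing rating counts as 0, so unassessed requirements earn no credit.
    Returns {interface: {"by_category": {...}, "total": float}}.
    """
    for req in requirements:
        if req.category not in CATEGORIES:
            raise ValueError(f"unknown category: {req.category!r}")

    results = {}
    for interface, scores in ratings.items():
        by_category = dict.fromkeys(CATEGORIES, 0.0)
        for req in requirements:
            by_category[req.category] += req.weight * scores.get(req.name, 0.0)
        results[interface] = {"by_category": by_category, "total": sum(by_category.values())}
    return results


def rank(results):
    """Interface names sorted from highest to lowest total score."""
    return sorted(results, key=lambda name: results[name]["total"], reverse=True)
```

Keeping per-category subtotals alongside the total mirrors the framework's grouping, so a team can see, for instance, that an interface scores well on User requirements but poorly on Environment ones, instead of comparing only a single number.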

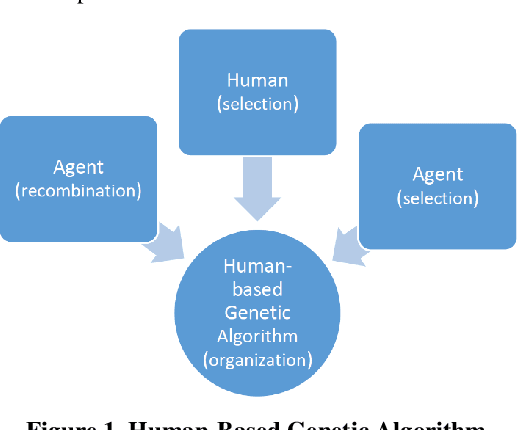

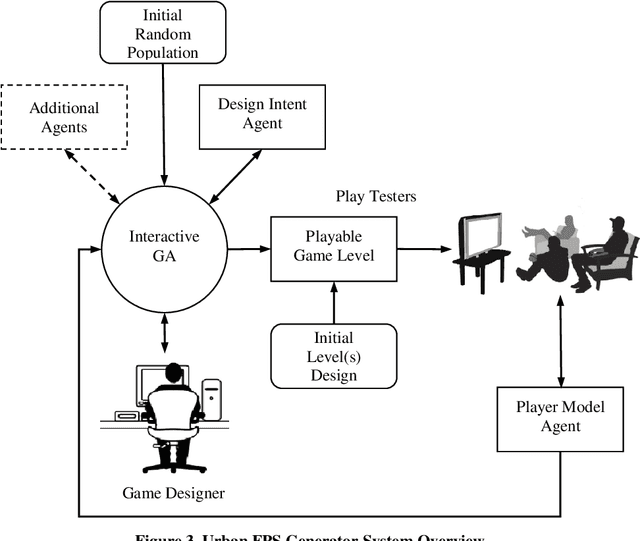

Procedural urban environments for FPS games

Apr 20, 2016

Abstract: This paper presents a novel approach to procedural generation of urban maps for First Person Shooter (FPS) games. A multi-agent evolutionary system is employed to place streets, buildings, and other items inside the Unity3D game engine, resulting in playable video game levels. As part of the multi-agent system, a computational agent is trained with machine learning techniques to capture the game designer's intent and to enable semi-automated aesthetic selection for the underlying genetic algorithm.
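
To illustrate how a learned model can drive selection in a genetic algorithm, here is a minimal sketch under several assumptions: a map is an n x n grid of street (0) and building (1) cells, two hand-picked features summarize a layout, and a perceptron trained on a designer's accept/reject labels stands in for the learned designer agent and serves as the fitness function. It collapses the paper's multi-agent system into a single loop and does not use Unity3D; `features`, `DesignerModel`, `evolve`, and all parameters are hypothetical.

```python
import random

# Hypothetical encoding: layout[r][c] == 0 is a street cell, 1 is a building cell.
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def features(layout):
    """Return [building density, share of street cells touching another street]."""
    n = len(layout)
    buildings = sum(sum(row) for row in layout)
    streets = [(r, c) for r in range(n) for c in range(n) if layout[r][c] == 0]

    def has_street_neighbour(r, c):
        return any(
            0 <= r + dr < n and 0 <= c + dc < n and layout[r + dr][c + dc] == 0
            for dr, dc in NEIGHBOURS
        )

    connected = sum(has_street_neighbour(r, c) for r, c in streets)
    return [buildings / (n * n), connected / max(len(streets), 1)]


class DesignerModel:
    """Perceptron fitted to designer labels: +1 = accept, -1 = reject."""

    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def score(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def fit(self, samples, labels, epochs=50):
        for _ in range(epochs):
            for x, y in zip(samples, labels):
                if y * self.score(x) <= 0:  # misclassified: nudge toward the label
                    self.w = [wi + self.lr * y * xi for wi, xi in zip(self.w, x)]
                    self.b += self.lr * y


def evolve(model, size=8, pop_size=30, generations=40, mutation=0.05, seed=0):
    """Genetic algorithm whose fitness is the designer model's score."""
    rng = random.Random(seed)

    def fitness(layout):
        return model.score(features(layout))

    def mutate(layout):  # flip each cell with probability `mutation`
        return [[1 - v if rng.random() < mutation else v for v in row] for row in layout]

    def crossover(a, b):  # one-point crossover on rows
        cut = rng.randrange(1, size)
        return [row[:] for row in a[:cut]] + [row[:] for row in b[cut:]]

    pop = [[[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
           for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]  # truncation selection; parents survive (elitism)
        children = [mutate(crossover(rng.choice(parents), rng.choice(parents)))
                    for _ in range(pop_size - len(parents))]
        pop = parents + children
    return max(pop, key=fitness)
```

In use, the designer would label a handful of layouts, call `fit`, run `evolve`, and then review the top candidates; that final human review is what keeps the selection semi-automated rather than fully automatic.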
