Ricardo Silva Carvalho

Differentially Private Ensemble Classifiers for Data Streams

Dec 09, 2021

Abstract: Learning from continuous data streams via classification/regression is prevalent in many domains. Adapting to evolving data characteristics (concept drift) while protecting data owners' private information is an open challenge. We present a differentially private ensemble solution to this problem with two distinguishing features: it allows an *unbounded* number of ensemble updates, so it can handle potentially never-ending data streams under a fixed privacy budget, and it is *model agnostic*, in that it treats any pre-trained differentially private classification/regression model as a black box. Our method outperforms competitors on real-world and simulated datasets for varying settings of privacy, concept drift, and data distribution.

The Differentially Private Lottery Ticket Mechanism

Feb 16, 2020

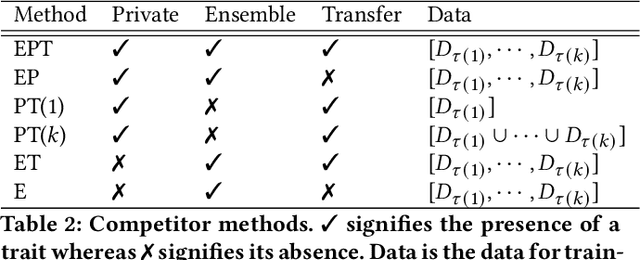

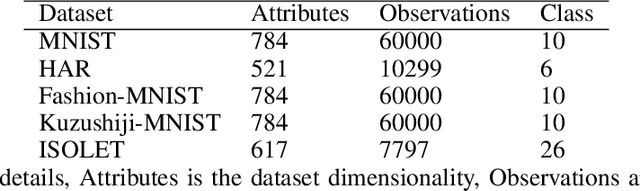

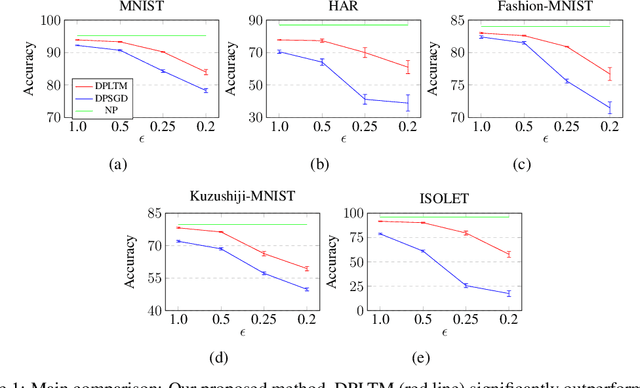

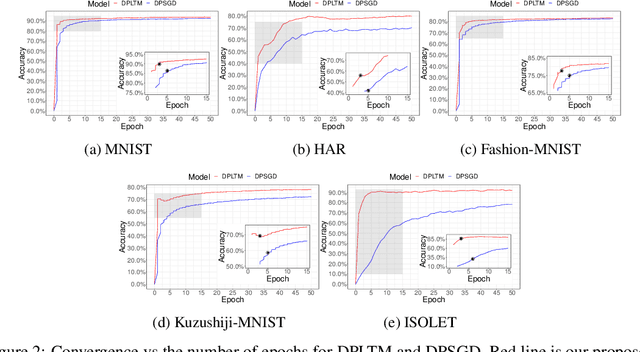

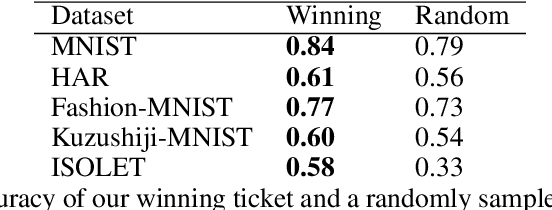

Abstract: We propose the differentially private lottery ticket mechanism (DPLTM), an end-to-end differentially private training paradigm based on the lottery ticket hypothesis. Using "high-quality winners", selected via our custom score function, DPLTM significantly improves the privacy-utility trade-off over the state-of-the-art. We show that DPLTM converges faster, allowing for early stopping with reduced privacy budget consumption. We further show that the tickets from DPLTM are transferable across datasets, domains, and architectures. Our extensive evaluation on several public datasets provides evidence for our claims.
