Reza Rezvan

Chalmers University of Technology

Causality--Δ: Jacobian-Based Dependency Analysis in Flow Matching Models

Feb 02, 2026

Abstract: Flow matching learns a velocity field that transports a base distribution to data. We study how small latent perturbations propagate through these flows and show that Jacobian-vector products (JVPs) provide a practical lens on dependency structure in the generated features. We derive closed-form expressions for the optimal drift and its Jacobian in Gaussian and mixture-of-Gaussian settings, revealing that even globally nonlinear flows admit local affine structure. In low-dimensional synthetic benchmarks, numerical JVPs recover the analytical Jacobians. In image domains, composing the flow with an attribute classifier yields an attribute-level JVP estimator that recovers empirical correlations on MNIST and CelebA. Conditioning on small classifier-Jacobian norms reduces correlations in a way consistent with a hypothesized common-cause structure, while we emphasize that this conditioning is not a formal do intervention.
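To make the claim that numerical JVPs recover analytical Jacobians concrete, here is a minimal sketch that is not taken from the paper. It assumes an isotropic Gaussian setting with base x0 ~ N(0, 1), data x1 ~ N(mu, s^2), an independent coupling, and the linear path x_t = (1 - t) x0 + t x1. Under those assumptions the conditional-mean velocity E[x1 - x0 | x_t = x] is affine in x, so its Jacobian is a single scalar slope per coordinate. The function names, the path choice, and the finite-difference JVP are all illustrative assumptions; the paper's own expressions may differ.

```python
def drift_slope(t, s):
    """Per-coordinate Jacobian of the assumed Gaussian drift.

    Cov(x1 - x0, x_t) / Var(x_t) for x_t = (1 - t) * x0 + t * x1,
    with x0 ~ N(0, 1) and x1 ~ N(mu, s^2) independent.
    """
    return (t * s**2 - (1.0 - t)) / ((1.0 - t) ** 2 + (t * s) ** 2)


def gaussian_drift(x, t, mu, s):
    """Conditional-mean velocity E[x1 - x0 | x_t = x], applied per coordinate."""
    slope = drift_slope(t, s)
    return [mu + slope * (xi - t * mu) for xi in x]


def numerical_jvp(f, x, v, eps=1e-5):
    """Central finite-difference estimate of J_f(x) @ v."""
    x_plus = [xi + eps * vi for xi, vi in zip(x, v)]
    x_minus = [xi - eps * vi for xi, vi in zip(x, v)]
    return [(a - b) / (2.0 * eps) for a, b in zip(f(x_plus), f(x_minus))]


# Hypothetical parameter values chosen only for illustration.
t, mu, s = 0.4, 2.0, 0.5
x = [0.3, -1.2]
v = [1.0, 0.5]

numeric = numerical_jvp(lambda z: gaussian_drift(z, t, mu, s), x, v)
analytic = [drift_slope(t, s) * vi for vi in v]
```

In this toy case the drift is exactly affine, so the two vectors agree up to floating-point error. In a trained model, automatic differentiation would normally replace the finite-difference step.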

AFABench: A Generic Framework for Benchmarking Active Feature Acquisition

Aug 20, 2025

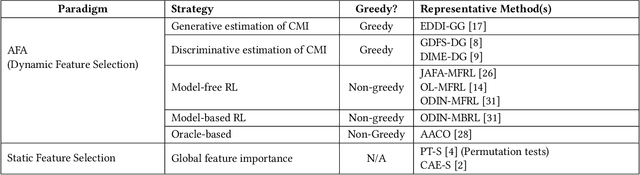

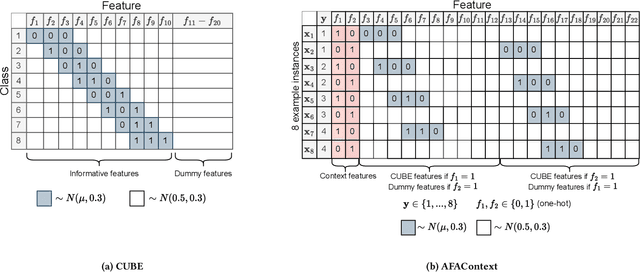

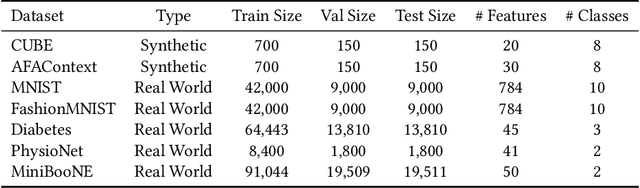

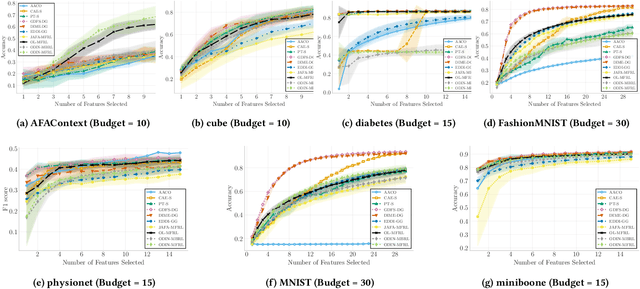

Abstract: In many real-world scenarios, acquiring all features of a data instance can be expensive or impractical due to monetary cost, latency, or privacy concerns. Active Feature Acquisition (AFA) addresses this challenge by dynamically selecting a subset of informative features for each data instance, trading predictive performance against acquisition cost. While numerous methods have been proposed for AFA, ranging from greedy information-theoretic strategies to non-myopic reinforcement learning approaches, fair and systematic evaluation of these methods has been hindered by the lack of standardized benchmarks. In this paper, we introduce AFABench, the first benchmark framework for AFA. Our benchmark includes a diverse set of synthetic and real-world datasets, supports a wide range of acquisition policies, and provides a modular design that enables easy integration of new methods and tasks. We implement and evaluate representative algorithms from all major categories, including static, greedy, and reinforcement learning-based approaches. To test the lookahead capabilities of AFA policies, we introduce a novel synthetic dataset, AFAContext, designed to expose the limitations of greedy selection. Our results highlight key trade-offs between different AFA strategies and provide actionable insights for future research. The benchmark code is available at: https://github.com/Linusaronsson/AFA-Benchmark.

ClaudesLens: Uncertainty Quantification in Computer Vision Models

Jun 18, 2024

Abstract: In a world where more decisions are made using artificial intelligence, it is of utmost importance to ensure these decisions are well-grounded. Neural networks are the modern building blocks for artificial intelligence. Modern neural network-based computer vision models are often used for object classification tasks. Correctly classifying objects with certainty has become of great importance in recent times. However, quantifying the inherent uncertainty of the output from neural networks is a challenging task. Here we show a possible method to quantify and evaluate the uncertainty of the output of different computer vision models based on Shannon entropy. By adding perturbation of different levels, on different parts, ranging from the input to the parameters of the network, one introduces entropy to the system. By quantifying and evaluating the perturbed models on the proposed PI and PSI metrics, we can conclude that our theoretical framework can grant insight into the uncertainty of predictions of computer vision models. We believe that this theoretical framework can be applied to different applications for neural networks. We believe that Shannon entropy may eventually have a bigger role in the SOTA (State-of-the-art) methods to quantify uncertainty in artificial intelligence. One day we might be able to apply Shannon entropy to our neural systems.
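As a rough illustration of the entropy-based idea, the sketch below computes the Shannon entropy (in bits) of a classifier's softmax output. It is an assumption-laden toy, not the paper's method: the helper names and the example logits are hypothetical, and the PI and PSI metrics mentioned in the abstract are not reproduced here because the abstract does not define them.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical logits: one confident prediction, one near-uniform prediction.
confident = softmax([8.0, 0.5, 0.2, 0.1])
uncertain = softmax([1.0, 1.1, 0.9, 1.0])

h_confident = shannon_entropy(confident)
h_uncertain = shannon_entropy(uncertain)
```

A prediction spread evenly over four classes has the maximum entropy of 2 bits, while a one-hot prediction has entropy 0, so `h_uncertain` sits close to 2 and `h_confident` close to 0.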
