Redouane Lguensat

Multiscale Neural PDE Surrogates for Prediction and Downscaling: Application to Ocean Currents

Jul 24, 2025

Abstract:Accurate modeling of physical systems governed by partial differential equations is a central challenge in scientific computing. In oceanography, high-resolution current data are critical for coastal management, environmental monitoring, and maritime safety. However, available satellite products, such as Copernicus data for sea water velocity at ~0.08 degrees spatial resolution and global ocean models, often lack the spatial granularity required for detailed local analyses. In this work, we (a) introduce a supervised deep learning framework based on neural operators for solving PDEs and providing arbitrary resolution solutions, and (b) propose downscaling models with an application to Copernicus ocean current data. Additionally, our method can model surrogate PDEs and predict solutions at arbitrary resolution, regardless of the input resolution. We evaluated our model on real-world Copernicus ocean current data and synthetic Navier-Stokes simulation datasets.

Learning to generate physical ocean states: Towards hybrid climate modeling

Feb 04, 2025

Abstract:Ocean General Circulation Models require extensive computational resources to reach equilibrium states, while deep learning emulators, despite offering fast predictions, lack the physical interpretability and long-term stability necessary for climate scientists to understand climate sensitivity (to greenhouse gas emissions) and mechanisms of abrupt variability such as tipping points. We propose to take the best from both worlds by leveraging deep generative models to produce physically consistent oceanic states that can serve as initial conditions for climate projections. We assess the viability of this hybrid approach through both physical metrics and numerical experiments, and highlight the benefits of enforcing physical constraints during generation. Although we train here on ocean variables from idealized numerical simulations, we claim that this hybrid approach, combining the computational efficiency of deep learning with the physical accuracy of numerical models, can effectively reduce the computational burden of running climate models to equilibrium, and reduce uncertainties in climate projections by minimizing drifts in baseline simulations.

Southern Ocean Dynamics Under Climate Change: New Knowledge Through Physics-Guided Machine Learning

Oct 21, 2023

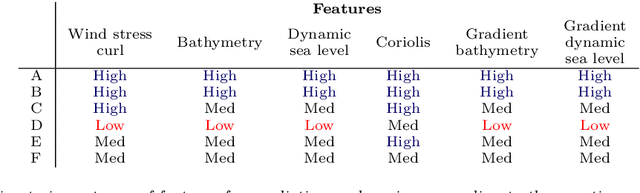

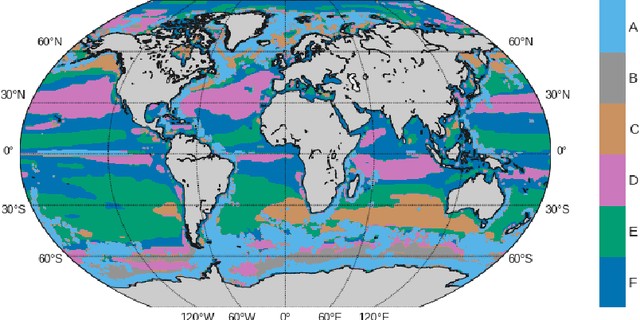

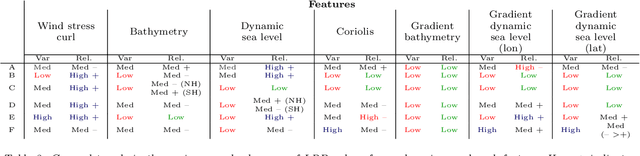

Abstract:Complex ocean systems such as the Antarctic Circumpolar Current play key roles in the climate, and current models predict shifts in their strength and area under climate change. However, the physical processes underlying these changes are not well understood, in part due to the difficulty of characterizing and tracking changes in ocean physics in complex models. To understand changes in the Antarctic Circumpolar Current, we extend the method Tracking global Heating with Ocean Regimes (THOR) to a mesoscale eddy permitting climate model and identify regions of the ocean characterized by similar physics, called dynamical regimes, using readily accessible fields from climate models. To this end, we cluster grid cells into dynamical regimes and train an ensemble of neural networks to predict these regimes and track them under climate change. Finally, we leverage this new knowledge to elucidate the dynamics of regime shifts. Here we illustrate the value of this high-resolution version of THOR, which allows for mesoscale turbulence, with a case study of the Antarctic Circumpolar Current and its interactions with the Pacific-Antarctic Ridge. In this region, THOR specifically reveals a shift in dynamical regime under climate change driven by changes in wind stress and interactions with bathymetry. Using this knowledge to guide further exploration, we find that as the Antarctic Circumpolar Current shifts north under intensifying wind stress, the dominant dynamical role of bathymetry weakens and the flow strengthens.

Neural Fields for Fast and Scalable Interpolation of Geophysical Ocean Variables

Nov 18, 2022

Abstract:Optimal Interpolation (OI) is a widely used, highly trusted algorithm for interpolation and reconstruction problems in geosciences. With the influx of more satellite missions, we have access to more and more observations and it is becoming more pertinent to take advantage of these observations in applications such as forecasting and reanalysis. With the increase in the volume of available data, scalability remains an issue for standard OI and it prevents many practitioners from effectively and efficiently taking advantage of these large sums of data to learn the model hyperparameters. In this work, we leverage recent advances in Neural Fields (NerFs) as an alternative to the OI framework where we show how they can be easily applied to standard reconstruction problems in physical oceanography. We illustrate the relevance of NerFs for gap-filling of sparse measurements of sea surface height (SSH) via satellite altimetry and demonstrate how NerFs are scalable with comparable results to the standard OI. We find that NerFs are a practical set of methods that can be readily applied to geoscience interpolation problems and we anticipate a wider adoption in the future.

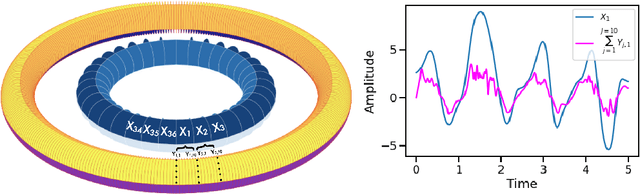

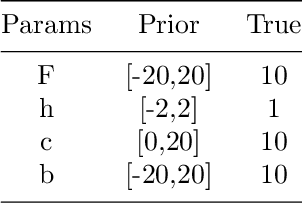

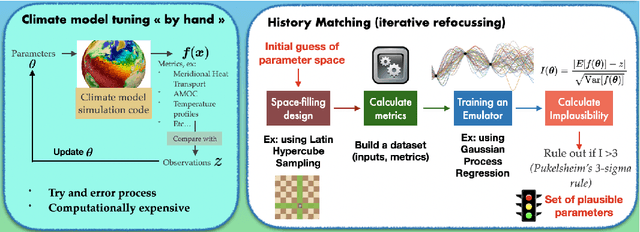

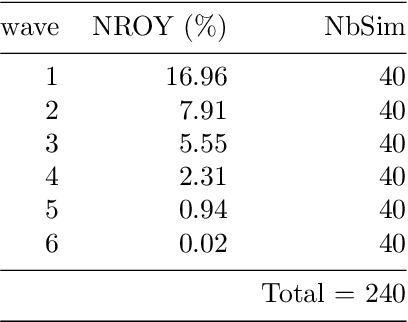

Semi-automatic tuning of coupled climate models with multiple intrinsic timescales: lessons learned from the Lorenz96 model

Aug 16, 2022

Abstract:The objective of this study is to evaluate the potential for History Matching (HM) to tune a climate system with multi-scale dynamics. By considering a toy climate model, namely the two-scale Lorenz96 model, and producing experiments in a perfect-model setting, we explore in detail how several built-in choices need to be carefully tested. We also demonstrate the importance of introducing physical expertise in the range of parameters prior to running HM. Finally, we revisit a classical procedure in climate model tuning, which consists of tuning the slow and fast components separately. By doing so in the Lorenz96 model, we illustrate the non-uniqueness of plausible parameters and highlight the specificity of metrics emerging from the coupling. This paper also contributes to bridging the communities of uncertainty quantification, machine learning and climate modeling, by making connections between the terms used by each community for the same concept and presenting promising collaboration avenues that would benefit climate modeling research.
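
For readers unfamiliar with the toy model, the following is a minimal sketch of the tendency of the standard two-scale Lorenz96 system, in which each slow variable is coupled to a group of fast variables. The function name and the default values of `F`, `h`, `b` and `c` are illustrative assumptions, not the configuration used in the paper.

```python
def l96_two_scale_tendency(X, Y, F=10.0, h=1.0, b=10.0, c=10.0):
    """Time derivatives of the two-scale Lorenz96 model.

    X: list of K slow variables (cyclic).
    Y: flat list of J*K fast variables (cyclic), J per slow variable.
    F, h, b, c: forcing, coupling strength, amplitude ratio and time-scale
    ratio. The defaults are illustrative assumptions only.
    """
    K = len(X)
    N = len(Y)
    J = N // K                      # fast variables attached to each slow one
    coupling = h * c / b

    dX = []
    for k in range(K):
        fast_sum = sum(Y[k * J:(k + 1) * J])
        advection = -X[k - 1] * (X[k - 2] - X[(k + 1) % K])
        dX.append(advection - X[k] + F - coupling * fast_sum)

    dY = []
    for n in range(N):
        advection = -c * b * Y[(n + 1) % N] * (Y[(n + 2) % N] - Y[n - 1])
        dY.append(advection - c * Y[n] + coupling * X[n // J])

    return dX, dY


# Example: 4 slow variables, 2 fast variables each, all at rest.
dX, dY = l96_two_scale_tendency([0.0] * 4, [0.0] * 8)
```

Tuning the slow and fast components separately, as discussed in the abstract, would correspond to adjusting parameters such as `F` with the coupling switched off (`h = 0`) before tuning the coupled system.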

Explainable Artificial Intelligence for Bayesian Neural Networks: Towards trustworthy predictions of ocean dynamics

Apr 30, 2022

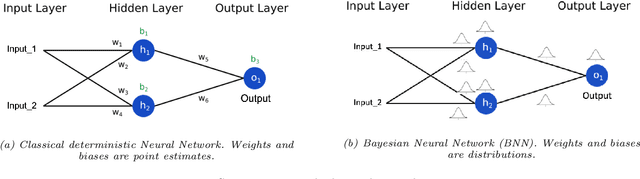

Abstract:The trustworthiness of neural networks is often challenged because they lack the ability to express uncertainty and explain their skill. This can be problematic given the increasing use of neural networks in high stakes decision-making such as in climate change applications. We address both issues by successfully implementing a Bayesian Neural Network (BNN), where parameters are distributions rather than deterministic, and applying novel implementations of explainable AI (XAI) techniques. The uncertainty analysis from the BNN provides a comprehensive overview of the prediction more suited to practitioners' needs than predictions from a classical neural network. Using a BNN means we can calculate the entropy (i.e. uncertainty) of the predictions and determine if the probability of an outcome is statistically significant. To enhance trustworthiness, we also spatially apply the two XAI techniques of Layer-wise Relevance Propagation (LRP) and SHapley Additive exPlanation (SHAP) values. These XAI methods reveal the extent to which the BNN is suitable and/or trustworthy. Using two techniques gives a more holistic view of BNN skill and its uncertainty, as LRP considers neural network parameters, whereas SHAP considers changes to outputs. We verify these techniques using comparison with intuition from physical theory. The differences in explanation identify potential areas where new physical theory guided studies are needed.
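
As an illustration of the entropy-based uncertainty mentioned above, here is a minimal sketch that computes the entropy of the mean predictive distribution from several stochastic forward passes of a classifier. The function name and the input format (a list of class-probability vectors, one per sampled set of weights) are assumptions made for this example and are not taken from the paper.

```python
import math


def predictive_entropy(prob_samples):
    """Entropy (in nats) of the mean predictive distribution.

    prob_samples: list of class-probability vectors, one per stochastic
    forward pass of the network (hypothetical input format).
    """
    n_samples = len(prob_samples)
    n_classes = len(prob_samples[0])
    # Average the class probabilities over the sampled networks.
    mean_probs = [
        sum(sample[k] for sample in prob_samples) / n_samples
        for k in range(n_classes)
    ]
    # Shannon entropy; zero-probability classes contribute nothing.
    return -sum(p * math.log(p) for p in mean_probs if p > 0.0)


# Samples that agree give low entropy; samples that disagree give high entropy.
confident = [[0.98, 0.01, 0.01], [0.97, 0.02, 0.01]]
uncertain = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.2, 0.1, 0.7]]
print(predictive_entropy(confident), predictive_entropy(uncertain))
```

Low entropy indicates that the sampled networks agree on one outcome, while values close to the logarithm of the number of classes indicate that the prediction is close to uniform.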

A posteriori learning for quasi-geostrophic turbulence parametrization

Apr 08, 2022

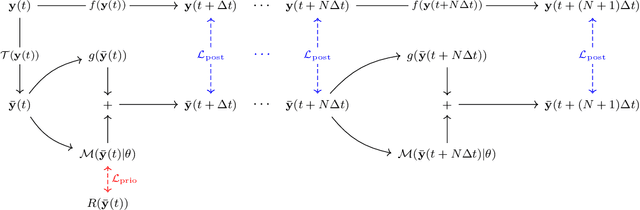

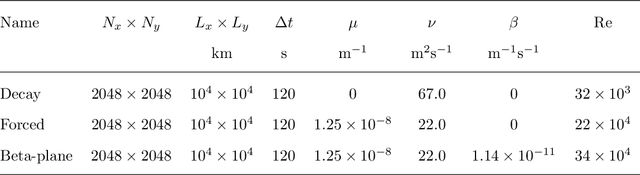

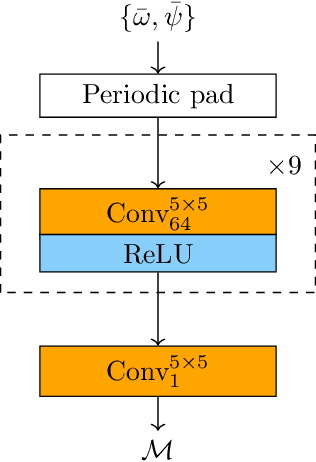

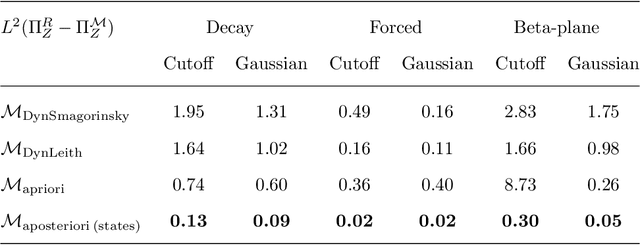

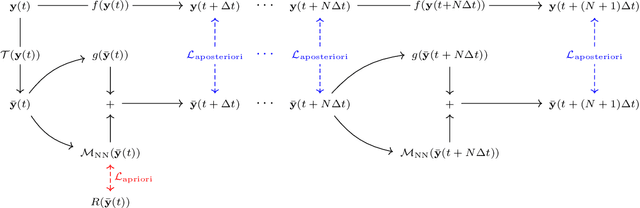

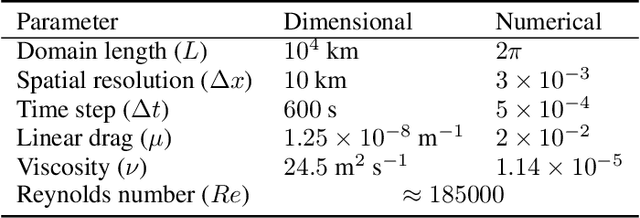

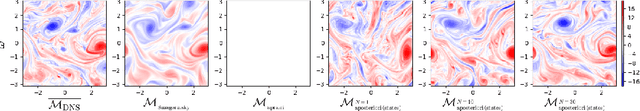

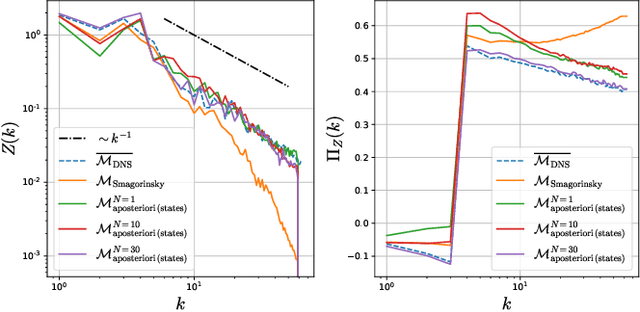

Abstract:The use of machine learning to build subgrid parametrizations for climate models is receiving growing attention. State-of-the-art strategies address the problem as a supervised learning task and optimize algorithms that predict subgrid fluxes based on information from coarse resolution models. In practice, training data are generated from higher resolution numerical simulations transformed in order to mimic coarse resolution simulations. In essence, these strategies optimize subgrid parametrizations to meet so-called $\textit{a priori}$ criteria. But the actual purpose of a subgrid parametrization is to obtain good performance in terms of $\textit{a posteriori}$ metrics, which require computing entire model trajectories. In this paper, we focus on the representation of energy backscatter in two-dimensional quasi-geostrophic turbulence and compare parametrizations obtained with different learning strategies at fixed computational complexity. We show that strategies based on $\textit{a priori}$ criteria yield parametrizations that tend to be unstable in direct simulations and describe how subgrid parametrizations can alternatively be trained end-to-end in order to meet $\textit{a posteriori}$ criteria. We illustrate that end-to-end learning strategies yield parametrizations that outperform known empirical and data-driven schemes in terms of performance, stability and ability to apply to different flow configurations. These results support the relevance of differentiable programming paradigms for climate models in the future.
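
The distinction between the two kinds of criteria can be sketched on a scalar toy problem. The code below is an illustrative assumption, not the paper's setup: the "coarse model" is a one-variable forward-Euler integration, and all function names and coefficients are hypothetical. The a priori loss compares the closure with diagnosed subgrid terms state by state, whereas the a posteriori loss integrates the coarse model with the closure and compares whole trajectories.

```python
def a_priori_loss(closure, states, target_subgrid_terms):
    """Mean squared error between predicted and diagnosed subgrid terms."""
    errors = [(closure(x) - s) ** 2 for x, s in zip(states, target_subgrid_terms)]
    return sum(errors) / len(errors)


def rollout(closure, resolved_tendency, x0, n_steps, dt):
    """Forward-Euler integration of the coarse model with a given closure."""
    x, trajectory = x0, []
    for _ in range(n_steps):
        x = x + dt * (resolved_tendency(x) + closure(x))
        trajectory.append(x)
    return trajectory


def a_posteriori_loss(closure, resolved_tendency, x0, reference, dt):
    """Mean squared error between a model rollout and a reference trajectory."""
    predicted = rollout(closure, resolved_tendency, x0, len(reference), dt)
    errors = [(p - r) ** 2 for p, r in zip(predicted, reference)]
    return sum(errors) / len(errors)


# Toy setup (hypothetical): the full dynamics dx/dt = -x are split into a
# resolved part and a subgrid part that the closure should reproduce.
def resolved(x):
    return -0.5 * x


def true_closure(x):
    return -0.5 * x


def biased_closure(x):
    return -0.4 * x


reference = rollout(true_closure, resolved, 1.0, 50, 0.1)
print(a_posteriori_loss(biased_closure, resolved, 1.0, reference, 0.1))
```

In an end-to-end training setting, the rollout would be written in a differentiable framework so that gradients of the a posteriori loss can flow through the solver steps to the closure parameters.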

A posteriori learning of quasi-geostrophic turbulence parametrization: an experiment on integration steps

Nov 27, 2021

Abstract:Modeling the subgrid-scale dynamics of reduced models is a long-standing open problem that finds application in ocean, atmosphere and climate predictions where direct numerical simulation (DNS) is impossible. While neural networks (NNs) have already been applied to a range of three-dimensional flows with success, two-dimensional flows are more challenging because of the backscatter of energy from small to large scales. We show that learning a model jointly with the dynamical solver and a meaningful $\textit{a posteriori}$-based loss function leads to stable and realistic simulations when applied to quasi-geostrophic turbulence.

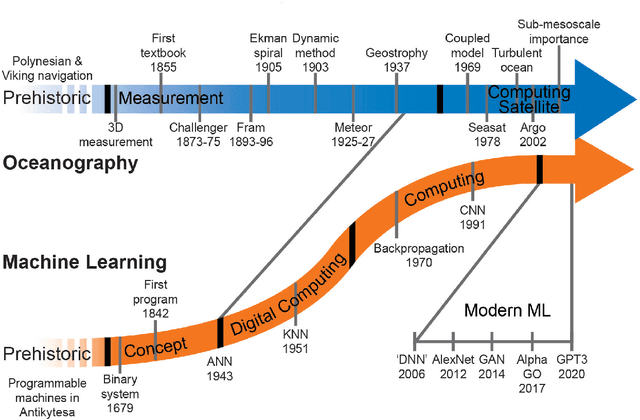

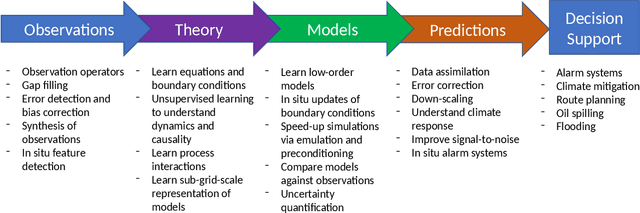

Bridging observation, theory and numerical simulation of the ocean using Machine Learning

Apr 26, 2021

Abstract:Progress within physical oceanography has been concurrent with the increasing sophistication of tools available for its study. The incorporation of machine learning (ML) techniques offers exciting possibilities for advancing the capacity and speed of established methods and also for making substantial and serendipitous discoveries. Beyond vast amounts of complex data ubiquitous in many modern scientific fields, the study of the ocean poses a combination of unique challenges that ML can help address. The observational data available is largely spatially sparse, limited to the surface, and with few time series spanning more than a handful of decades. Important timescales span seconds to millennia, with strong scale interactions and numerical modelling efforts complicated by details such as coastlines. This review covers the current scientific insight offered by applying ML and points to where there is imminent potential. We cover the three main branches of the field: observations, theory, and numerical modelling. Highlighting both challenges and opportunities, we discuss both the historical context and salient ML tools. We focus on the use of ML in in situ sampling and satellite observations, and the extent to which ML applications can advance theoretical oceanographic exploration, as well as aid numerical simulations. Applications that are also covered include model error and bias correction and current and potential use within data assimilation. While not without risk, there is great interest in the potential benefits of oceanographic ML applications; this review caters to this interest within the research community.

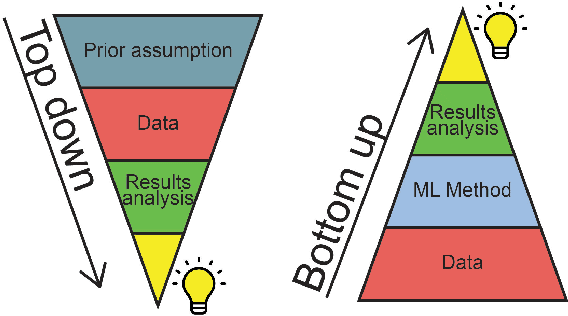

NightVision: Generating Nighttime Satellite Imagery from Infra-Red Observations

Dec 08, 2020

Abstract:The many recent applications of machine learning to satellite imagery often rely on visible images and therefore suffer from a lack of data during the night. The gap can be filled by employing available infra-red observations to generate visible images. This work presents how deep learning can be applied successfully to create those images by using U-Net based architectures. The proposed methods show promising results, achieving a structural similarity index (SSIM) up to 86% on an independent test set and providing visually convincing output images, generated from infra-red observations.
