Razane Tajeddine

On the Effectiveness of Membership Inference in Targeted Data Extraction from Large Language Models

Dec 15, 2025

Abstract: Large Language Models (LLMs) are prone to memorizing training data, which poses serious privacy risks. Two of the most prominent concerns are training data extraction and Membership Inference Attacks (MIAs). Prior research has shown that these threats are interconnected: adversaries can extract training data from an LLM by querying the model to generate a large volume of text and subsequently applying MIAs to verify whether a particular data point was included in the training set. In this study, we integrate multiple MIA techniques into the data extraction pipeline to systematically benchmark their effectiveness. We then compare their performance in this integrated setting against results from conventional MIA benchmarks, allowing us to evaluate their practical utility in real-world extraction scenarios.
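The generate-then-filter idea in this abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the abstract does not name the MIA techniques it benchmarks, so a loss-based score and a zlib-ratio score stand in as examples, and the candidate texts and per-token log-probabilities are made up rather than produced by a real model.

```python
import zlib

def loss_score(token_logprobs):
    """Average negative log-likelihood; a lower value suggests membership."""
    return -sum(token_logprobs) / len(token_logprobs)

def zlib_ratio_score(text, token_logprobs):
    """Total negative log-likelihood divided by the zlib-compressed size
    in bytes; a lower value suggests membership."""
    compressed_size = len(zlib.compress(text.encode("utf-8")))
    return -sum(token_logprobs) / compressed_size

def rank_candidates(candidates, score_fn):
    """Sort candidates in ascending score order (most member-like first)."""
    return sorted(candidates, key=score_fn)

# Hypothetical generations with invented per-token log-probabilities.
# In a real pipeline these would come from querying the target model.
candidates = [
    {"text": "the quick brown fox", "logprobs": [-2.1, -3.0, -2.7, -3.3]},
    {"text": "alice@example.com 555-0100", "logprobs": [-0.2, -0.1, -0.3, -0.1]},
]

ranked = rank_candidates(candidates, lambda c: loss_score(c["logprobs"]))
```

Swapping the lambda for one that calls `zlib_ratio_score` changes only the scoring step, which is what makes it possible to compare several MIA scores inside the same extraction loop.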

Privacy-preserving Data Sharing on Vertically Partitioned Data

Oct 19, 2020

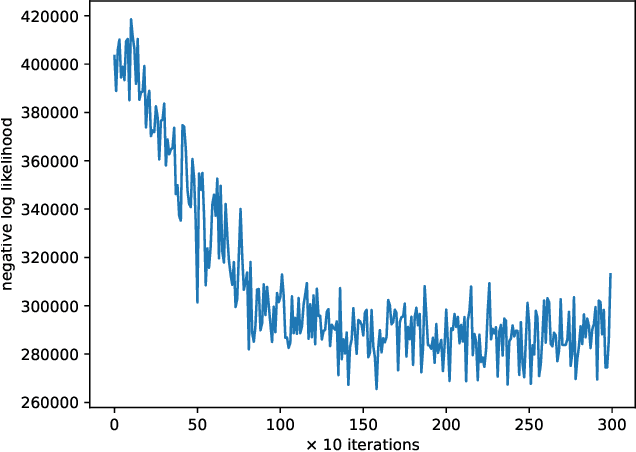

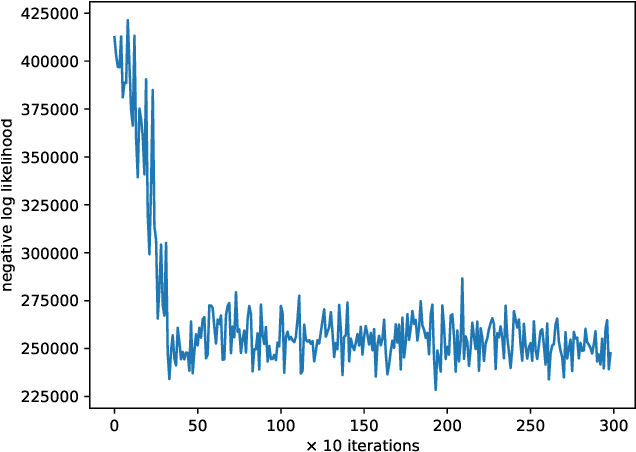

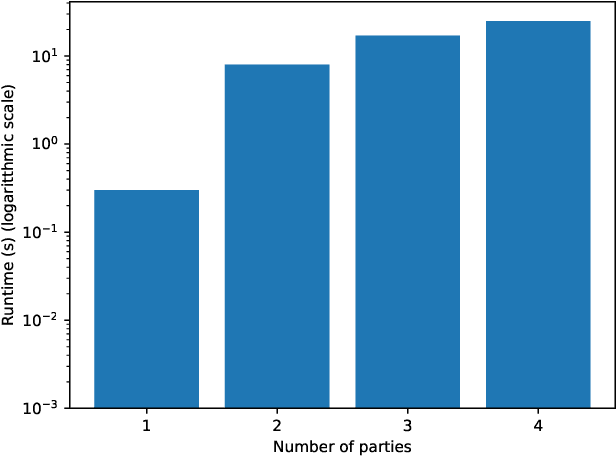

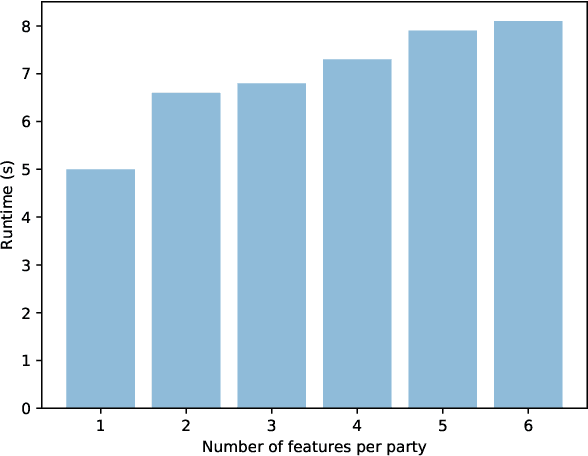

Abstract: In this work, we present a method for differentially private data sharing by training a mixture model on vertically partitioned data, where each party holds different features for the same set of individuals. We use secure multi-party computation (MPC) to combine the contributions of the parties' data when training the model, and we learn the model with differentially private variational inference (DPVI). Assuming the mixture components contain no dependencies across different parties, the objective function can be factorized into a sum of products of individual components of each party. Each party can therefore calculate its shares on its own without the use of MPC; MPC is needed only to compute the product of the different shares and to add the noise. Applying the method to demographic data from the US Census, we obtain accuracy comparable to the non-partitioned case with an approximately 20-fold increase in computing time.
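The "sum of products" structure described in this abstract can be checked on a toy example. The sketch below is not the paper's method: it assumes Gaussian components with no cross-party dependencies, uses invented parameters and feature values, and leaves out MPC, the DPVI objective, and the noise. It shows only that per-party shares computed locally combine into the same mixture likelihood as the non-partitioned computation.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a univariate Gaussian at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def local_shares(features, means, stds):
    """One party's shares: for each component k, the density of that
    party's own features (means[k][j], stds[k][j] for local feature j)."""
    return [
        math.prod(gaussian_pdf(x, m, s) for x, m, s in zip(features, means[k], stds[k]))
        for k in range(len(means))
    ]

def combine_shares(weights, shares_per_party):
    """Sum over components of the weight times the product of party shares.
    In the setting of the abstract, this step is where MPC would be used."""
    return sum(
        w * math.prod(shares[k] for shares in shares_per_party)
        for k, w in enumerate(weights)
    )

# Hypothetical two-component mixture and one individual's features.
weights = [0.3, 0.7]
x_a, means_a, stds_a = [1.0, -0.5], [[0.0, 0.0], [1.0, -1.0]], [[1.0, 1.0], [0.5, 2.0]]
x_b, means_b, stds_b = [2.0], [[2.0], [0.0]], [[1.0], [1.5]]

# Partitioned: each party computes its shares on its own features only.
factorized = combine_shares(
    weights,
    [local_shares(x_a, means_a, stds_a), local_shares(x_b, means_b, stds_b)],
)

# Non-partitioned reference: one party holding all features.
joint = combine_shares(
    weights,
    [local_shares(
        x_a + x_b,
        [a + b for a, b in zip(means_a, means_b)],
        [a + b for a, b in zip(stds_a, stds_b)],
    )],
)
```

The two values agree because the product over features inside each component splits cleanly along party boundaries, which is what the no-cross-party-dependency assumption provides.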
