Rafael Brandão

Workflow Provenance in the Lifecycle of Scientific Machine Learning

Sep 30, 2020

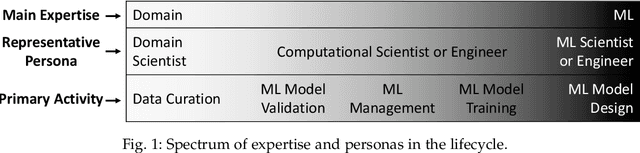

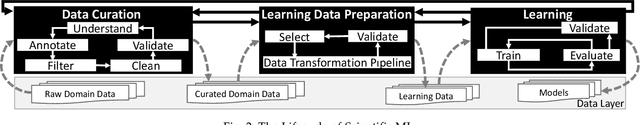

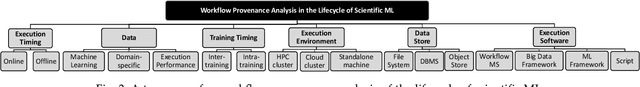

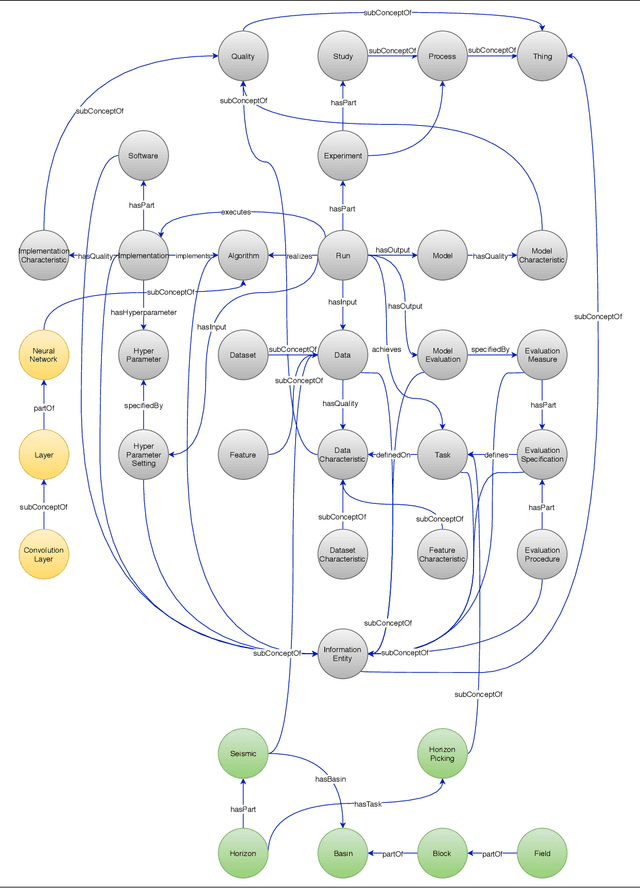

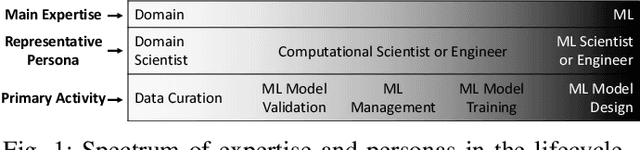

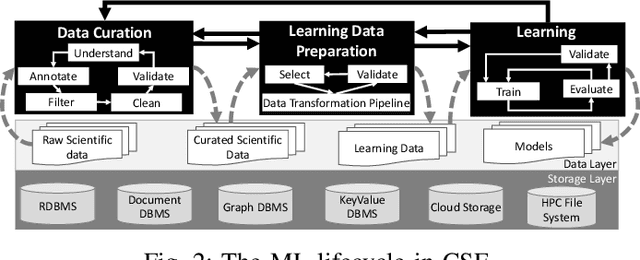

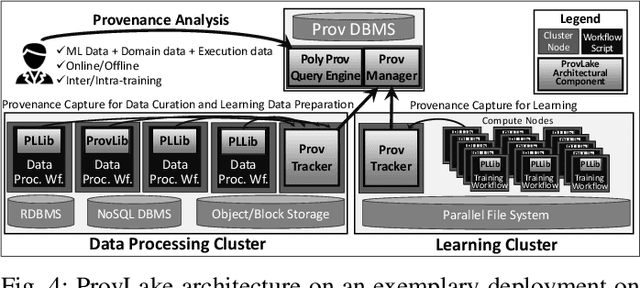

Abstract: Machine Learning (ML) has already fundamentally changed several businesses. More recently, it has also been profoundly impacting computational science and engineering domains, such as geoscience, climate science, and health science. In these domains, users need to perform comprehensive data analyses combining scientific data and ML models to meet critical requirements, such as reproducibility, model explainability, and experiment data understanding. However, scientific ML is multidisciplinary, heterogeneous, and affected by the physical constraints of the domain, making such analyses even more challenging. In this work, we leverage workflow provenance techniques to build a holistic view to support the lifecycle of scientific ML. We contribute (i) a characterization of the lifecycle and a taxonomy for data analyses; (ii) design principles to build this view, with a W3C PROV compliant data representation and a reference system architecture; and (iii) lessons learned after an evaluation in an Oil & Gas case using an HPC cluster with 393 nodes and 946 GPUs. The experiments show that the principles enable queries that integrate domain semantics with ML models while keeping low overhead (<1%), high scalability, and an order of magnitude of query acceleration under certain workloads compared with not using our representation.

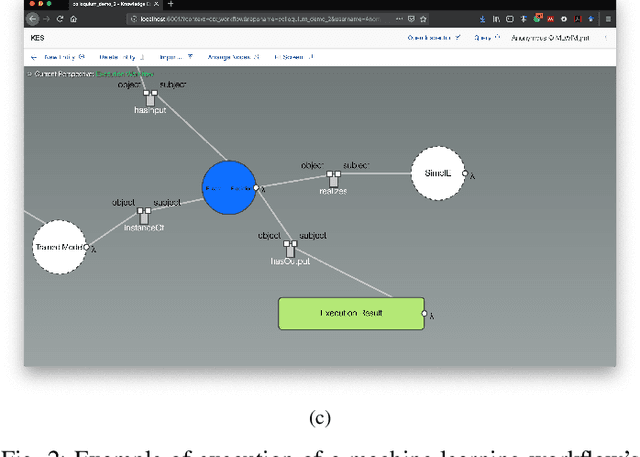

Managing Machine Learning Workflow Components

Dec 10, 2019

Abstract: Machine Learning Workflows (MLWfs) have become an essential and disruptive approach to problem-solving across several industries. However, the development process of MLWfs may be complicated, hard to achieve, time-consuming, and error-prone. To handle this problem, in this paper, we introduce machine learning workflow management (MLWfM) as a technique to aid the development and reuse of MLWfs and their components through three aspects: representation, execution, and creation. More precisely, we discuss our approach to structuring the components of MLWfs and their metadata to aid retrieval and reuse of components in new MLWfs. We also consider the execution of these components within a tool. The hybrid knowledge representation, called Hyperknowledge, frames our methodology, supporting the three aspects of MLWfM. To validate our approach, we show a practical use case in the Oil & Gas industry.

Provenance Data in the Machine Learning Lifecycle in Computational Science and Engineering

Oct 21, 2019

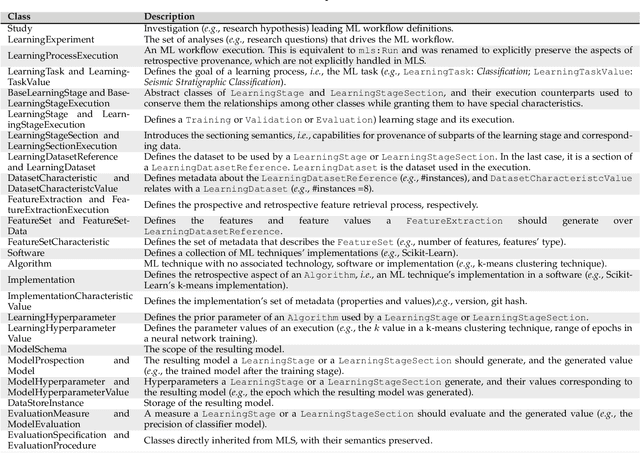

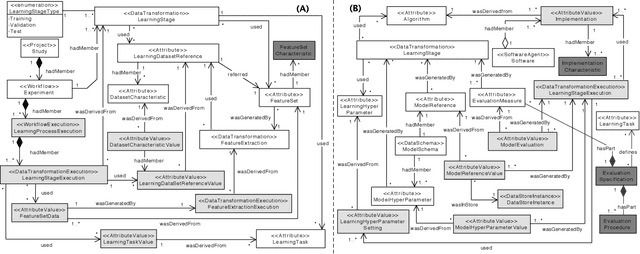

Abstract: Machine Learning (ML) has become essential in several industries. In Computational Science and Engineering (CSE), the complexity of the ML lifecycle comes from the large variety of data, scientists' expertise, tools, and workflows. If data are not tracked properly during the lifecycle, it becomes infeasible to recreate an ML model from scratch or to explain to stakeholders how it was created. The main limitation of provenance tracking solutions is that they cannot cope with provenance capture and integration of domain and ML data processed in the multiple workflows in the lifecycle while keeping the provenance capture overhead low. To handle this problem, in this paper we contribute a detailed characterization of provenance data in the ML lifecycle in CSE; a new provenance data representation, called PROV-ML, built on top of W3C PROV and ML Schema; and extensions to a system that tracks provenance from multiple workflows to address the characteristics of ML and CSE, and to allow for provenance queries with a standard vocabulary. We show a practical use in a real case in the Oil and Gas industry, along with its evaluation using 48 GPUs in parallel.

Mediation Challenges and Socio-Technical Gaps for Explainable Deep Learning Applications

Jul 16, 2019

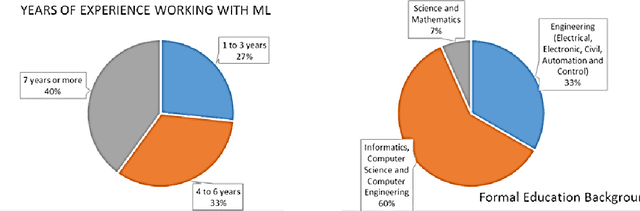

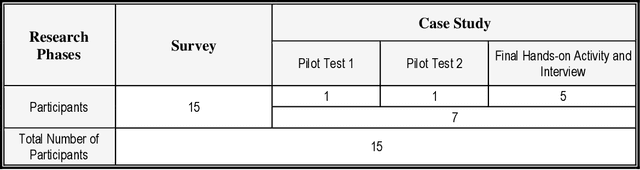

Abstract: The presumed data owners' right to explanations brought about by the General Data Protection Regulation in Europe has shed light on the social challenges of explainable artificial intelligence (XAI). In this paper, we present a case study with Deep Learning (DL) experts from a research and development laboratory focused on the delivery of industrial-strength AI technologies. Our aim was to investigate the social meaning (i.e., meaning to others) that DL experts assign to what they do, given a richly contextualized and familiar domain of application. Using qualitative research techniques to collect and analyze empirical data, our study has shown that participating DL experts did not spontaneously engage in considerations about the social meaning of the machine learning models that they build. Moreover, when explicitly stimulated to do so, these experts expressed expectations that, with real-world DL applications, there will be mediators available to bridge the gap between the technical meanings that drive DL work and the social meanings that AI technology users assign to it. We concluded that current research incentives and values guiding the participants' scientific interests and conduct are at odds with those required to face some of the scientific challenges involved in advancing XAI, and thus in responding to the alleged data owners' right to explanations or similar societal demands emerging from current debates. As a concrete contribution to mitigate what seems to be a more general problem, we propose three preliminary XAI Mediation Challenges with the potential to bring together technical and social meanings of DL applications, as well as to foster much-needed interdisciplinary collaboration between AI and Social Sciences researchers.
