Qiang Yin

GeoDiff-SAR: A Geometric Prior Guided Diffusion Model for SAR Image Generation

Jan 07, 2026

Abstract:Synthetic Aperture Radar (SAR) imaging results are highly sensitive to observation geometries and the geometric parameters of targets. However, existing generative methods primarily operate within the image domain, neglecting explicit geometric information. This limitation often leads to unsatisfactory generation quality and an inability to precisely control critical parameters such as azimuth angles. To address these challenges, we propose GeoDiff-SAR, a geometric prior guided diffusion model for high-fidelity SAR image generation. First, GeoDiff-SAR efficiently simulates the geometric structures and scattering relationships inherent in real SAR imaging by calculating SAR point clouds at specific azimuths, which serve as robust physical guidance. Second, to effectively fuse multi-modal information, we employ a feature fusion gating network based on Feature-wise Linear Modulation (FiLM) to dynamically regulate the weight distribution of 3D physical information, image control parameters, and textual description parameters. Third, we use the Low-Rank Adaptation (LoRA) architecture to perform lightweight fine-tuning on the Stable Diffusion 3.5 (SD3.5) model, enabling it to rapidly adapt to the distribution characteristics of the SAR domain. To validate the effectiveness of GeoDiff-SAR, extensive comparative experiments were conducted on real-world SAR datasets. The results demonstrate that data generated by GeoDiff-SAR exhibits high fidelity and effectively enhances the accuracy of downstream classification tasks. In particular, it significantly improves recognition performance across different azimuth angles, underscoring the advantage of physics-guided generation.
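The FiLM-based gating mentioned in the abstract can be illustrated with a small NumPy sketch. This is not the GeoDiff-SAR implementation: the function names (`film`, `fuse`), the shared conditioning vector `cond`, the softmax gate, and all shapes are assumptions chosen only to show the general idea of scaling and shifting each modality's features before mixing them.

```python
import numpy as np

def film(features, cond, w_gamma, w_beta):
    """FiLM: scale and shift `features` with parameters predicted from `cond`."""
    gamma = cond @ w_gamma  # per-feature scale, shape (d,)
    beta = cond @ w_beta    # per-feature shift, shape (d,)
    return gamma * features + beta

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def fuse(modalities, cond, params, w_gate):
    """Modulate each modality with FiLM, then mix them with softmax gate weights.

    The gate (an assumption of this sketch) yields one weight per modality.
    """
    modulated = np.stack([
        film(m, cond, p["w_gamma"], p["w_beta"])
        for m, p in zip(modalities, params)
    ])
    gate = softmax(cond @ w_gate)
    return gate @ modulated, gate

# Hypothetical stand-ins for point-cloud, image-control, and text features.
rng = np.random.default_rng(0)
d, c = 8, 4
modalities = [rng.normal(size=d) for _ in range(3)]
cond = rng.normal(size=c)
params = [
    {"w_gamma": rng.normal(size=(c, d)), "w_beta": rng.normal(size=(c, d))}
    for _ in range(3)
]
w_gate = rng.normal(size=(c, 3))

fused, gate = fuse(modalities, cond, params, w_gate)
```

Here `fused` has the same dimensionality as a single modality, and `gate` sums to one, so the three inputs are combined as a convex mixture of their modulated features.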

SMGeo: Cross-View Object Geo-Localization with Grid-Level Mixture-of-Experts

Nov 18, 2025

Abstract:Cross-view object geo-localization aims to precisely pinpoint the same object across large-scale satellite imagery based on drone images. Due to significant differences in viewpoint and scale, coupled with complex background interference, traditional multi-stage "retrieval-matching" pipelines are prone to cumulative errors. To address this, we present SMGeo, a promptable end-to-end transformer-based model for object geo-localization. The model supports click prompting and outputs the object's geo-localization in real time when prompted, allowing interactive use. It employs a fully transformer-based architecture, using a Swin-Transformer for joint feature encoding of both drone and satellite imagery and an anchor-free transformer detection head for coordinate regression. To better capture both inter-modal and intra-view dependencies, we introduce a grid-level sparse Mixture-of-Experts (GMoE) into the cross-view encoder, allowing it to adaptively activate specialized experts according to the content, scale and source of each grid. The anchor-free detection head directly predicts object locations via heat-map supervision in the reference images, which avoids the scale bias and matching complexity introduced by predefined anchor boxes. On the drone-to-satellite task, SMGeo achieves leading performance in accuracy at IoU=0.25 and mIoU metrics (e.g., 87.51%, 62.50%, and 61.45% in the test set, respectively), significantly outperforming representative methods such as DetGeo (61.97%, 57.66%, and 54.05%, respectively). Ablation studies demonstrate complementary gains from shared encoding, query-guided fusion, and grid-level sparse mixture-of-experts.
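To make the idea of grid-level sparse expert routing concrete, the following is a minimal NumPy sketch of generic top-k Mixture-of-Experts routing over grid tokens. It is not SMGeo's GMoE: the router `w_router`, the linear experts, and the choice `k=2` are all assumptions used for illustration.

```python
import numpy as np

def sparse_moe(tokens, w_router, experts, k=2):
    """Route each grid token to its top-k experts and mix their outputs.

    tokens:   (n, d) array, one row per grid cell
    w_router: (d, E) router weights (hypothetical)
    experts:  list of E (d, d) matrices standing in for expert networks
    """
    logits = tokens @ w_router                  # (n, E) routing scores
    topk = np.argsort(logits, axis=1)[:, -k:]   # indices of the k largest scores
    out = np.zeros_like(tokens)
    for i, tok in enumerate(tokens):
        sel = topk[i]
        # Softmax over the selected experts only; the rest stay inactive.
        w = np.exp(logits[i, sel] - logits[i, sel].max())
        w /= w.sum()
        for weight, e in zip(w, sel):
            out[i] += weight * (tok @ experts[e])
    return out, topk

rng = np.random.default_rng(0)
n, d, num_experts = 6, 4, 3
tokens = rng.normal(size=(n, d))
w_router = rng.normal(size=(d, num_experts))
experts = [rng.normal(size=(d, d)) for _ in range(num_experts)]

mixed, chosen = sparse_moe(tokens, w_router, experts, k=2)
```

Because only `k` experts run per token, compute grows with `k` rather than with the total number of experts, which is the usual motivation for sparse routing.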

SAR Target Recognition Using the Multi-aspect-aware Bidirectional LSTM Recurrent Neural Networks

Jul 25, 2017

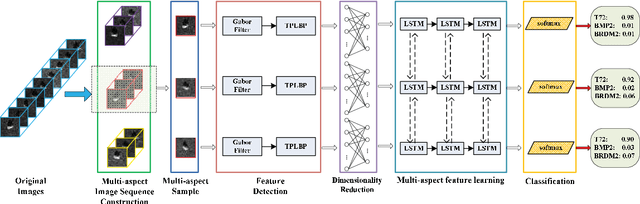

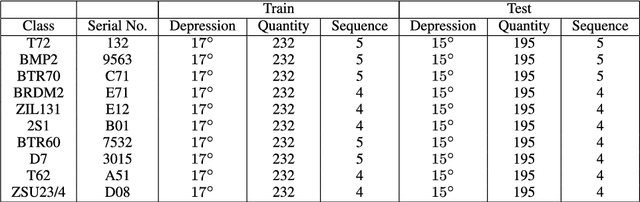

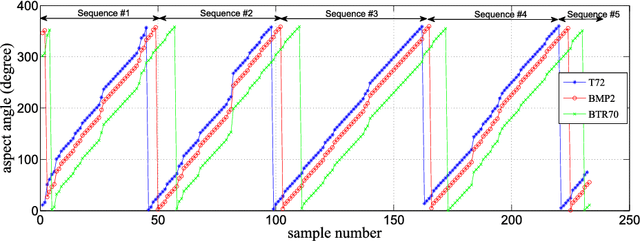

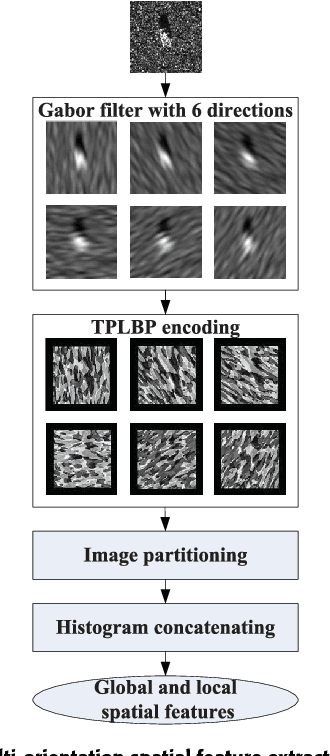

Abstract:The outstanding pattern recognition performance of deep learning brings new vitality to synthetic aperture radar (SAR) automatic target recognition (ATR). However, current deep learning based ATR solutions are limited in that each learning process handles only one SAR image, learning the static scattering information while missing the space-varying information. Multi-aspect joint recognition, which introduces space-varying scattering information, should improve classification accuracy and robustness. In this paper, a novel multi-aspect-aware method is proposed to realize this idea by learning space-varying scattering information with bidirectional Long Short-Term Memory (LSTM) recurrent neural networks. Specifically, we first select images at different aspects to generate multi-aspect space-varying image sequences. Then, the Gabor filter and three-patch local binary pattern (TPLBP) are applied in succession to extract comprehensive spatial features, followed by dimensionality reduction with a Multi-layer Perceptron (MLP) network. Finally, we design a bidirectional LSTM recurrent neural network to learn the multi-aspect features and integrate a softmax classifier to achieve target recognition. Experimental results demonstrate that the proposed method can achieve 99.9% accuracy for 10-class recognition. In addition, its anti-noise and anti-confusion performance is better than that of conventional deep learning based methods.

* 11 pages, 10 figures
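
The final stage described in the abstract above, a bidirectional LSTM over a multi-aspect feature sequence followed by softmax, can be sketched in plain NumPy as below. This is an illustrative sketch only: the random weights, the feature size, the sequence length, and the choice to classify from the two final hidden states are assumptions, not details taken from the paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_pass(seq, W, U, b, hidden):
    """Run one LSTM direction over seq (T, d) and return the final hidden state."""
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    for x in seq:
        z = W @ x + U @ h + b        # stacked gate pre-activations, (4 * hidden,)
        i, f, o, g = np.split(z, 4)  # input, forget, output gates and candidate
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h

def bilstm_classify(seq, fwd, bwd, W_out, hidden):
    """Concatenate forward and backward final states, then apply softmax."""
    h_f = lstm_pass(seq, *fwd, hidden)
    h_b = lstm_pass(seq[::-1], *bwd, hidden)  # same sequence, reversed aspect order
    logits = W_out @ np.concatenate([h_f, h_b])
    e = np.exp(logits - logits.max())
    return e / e.sum()

rng = np.random.default_rng(0)
T, d, hidden, classes = 4, 16, 8, 10  # hypothetical sizes

def init_direction():
    return (
        rng.normal(scale=0.1, size=(4 * hidden, d)),
        rng.normal(scale=0.1, size=(4 * hidden, hidden)),
        np.zeros(4 * hidden),
    )

fwd, bwd = init_direction(), init_direction()
W_out = rng.normal(scale=0.1, size=(classes, 2 * hidden))
seq = rng.normal(size=(T, d))  # stand-in for reduced features of T aspect images

probs = bilstm_classify(seq, fwd, bwd, W_out, hidden)
```

`probs` is a length-10 probability vector over the target classes. With untrained random weights it carries no meaning; the sketch only shows how information from both aspect orderings reaches the classifier.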
