Prashant Tiwari

Vision-Cloud Data Fusion for ADAS: A Lane Change Prediction Case Study

Dec 07, 2021

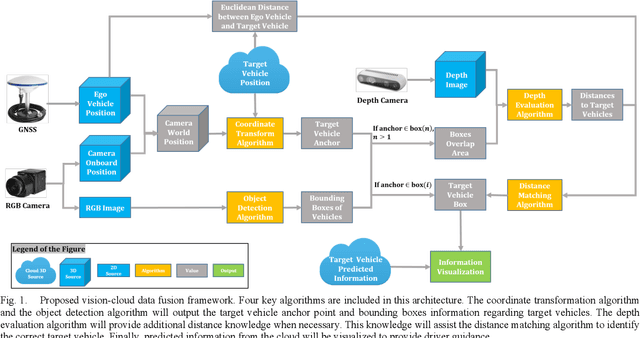

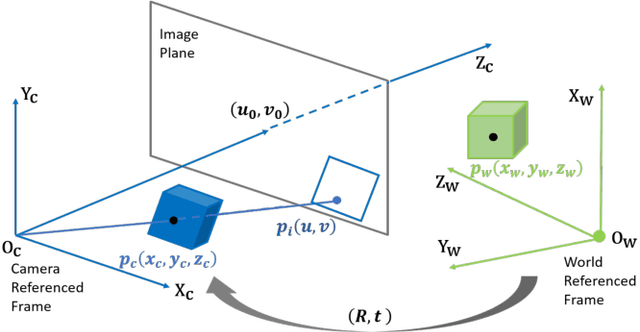

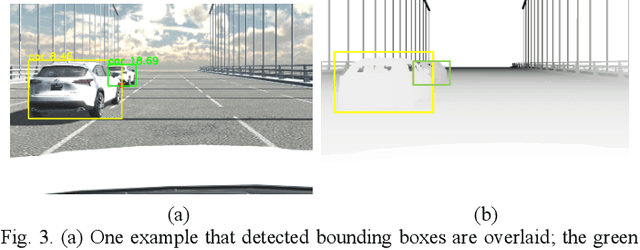

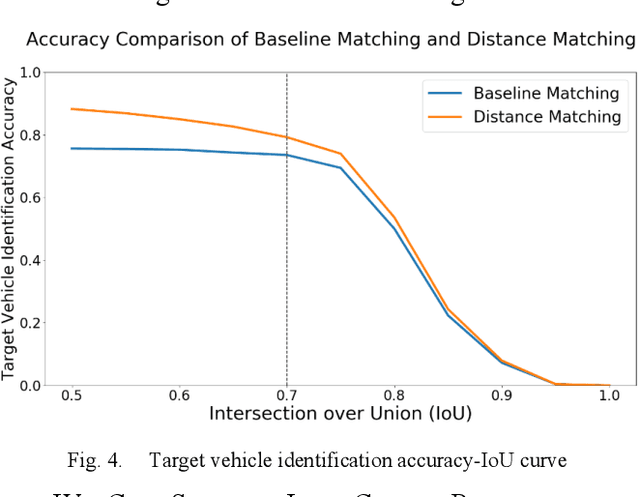

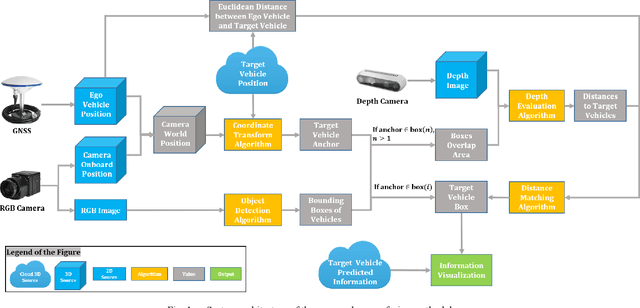

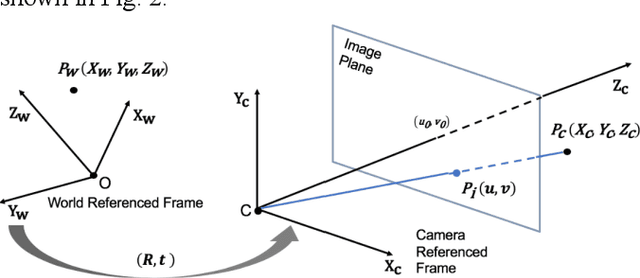

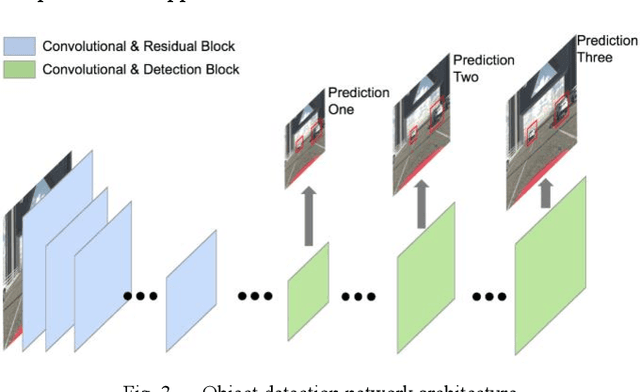

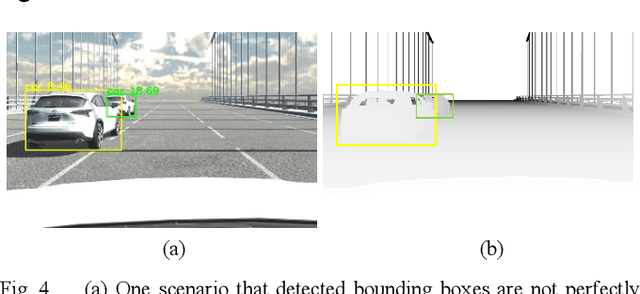

Abstract: With the rapid development of intelligent vehicles and Advanced Driver-Assistance Systems (ADAS), the transportation system will increasingly involve mixed levels of human driver engagement. Visual guidance for drivers is therefore vitally important to prevent potential risks. To advance the development of visual guidance systems, we introduce a novel vision-cloud data fusion methodology that integrates camera images and Digital Twin information from the cloud to help intelligent vehicles make better decisions. The target vehicle's bounding box is drawn and matched using the object detector (running on the ego vehicle) and position information (received from the cloud). The best matching result, 79.2% accuracy under a 0.7 intersection over union threshold, is obtained with depth images serving as an additional feature source. A case study on lane change prediction is conducted to show the effectiveness of the proposed data fusion methodology. In the case study, a multi-layer perceptron algorithm is proposed with modified lane change prediction approaches. Human-in-the-loop simulation results obtained from the Unity game engine reveal that the proposed model can significantly improve highway driving performance in terms of safety, comfort, and environmental sustainability.
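The 0.7 intersection over union threshold mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not describe the authors' matching pipeline, so everything here is an assumption for illustration: boxes are axis-aligned `(x1, y1, x2, y2)` tuples in pixels, `detected` stands for a box from the on-vehicle object detector, `projected` stands for a box derived from cloud position information, and the function names `iou` and `is_match` are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    # Guard against degenerate (zero-area) boxes.
    return inter / union if union > 0 else 0.0


def is_match(detected, projected, threshold=0.7):
    """Treat two boxes as the same vehicle when their IoU reaches the threshold.

    The default of 0.7 mirrors the threshold quoted in the abstract; the
    decision rule itself is an assumed, simplified stand-in.
    """
    return iou(detected, projected) >= threshold
```

Under these assumptions, two 10 by 10 boxes offset by one pixel horizontally overlap enough to count as a match, while the same boxes offset by five pixels do not.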

Sensor Fusion of Camera and Cloud Digital Twin Information for Intelligent Vehicles

Jul 08, 2020

Abstract: With the rapid development of intelligent vehicles and Advanced Driving Assistance Systems (ADAS), mixed levels of human driver engagement are involved in the transportation system. Visual guidance for drivers is essential in this situation to prevent potential risks. To advance the development of visual guidance systems, we introduce a novel sensor fusion methodology that integrates camera images and Digital Twin knowledge from the cloud. The target vehicle's bounding box is drawn and matched by combining the results of the object detector running on the ego vehicle with position information from the cloud. The best matching result, 79.2% accuracy under a 0.7 Intersection over Union (IoU) threshold, is obtained with depth images serving as an additional feature source. Game engine-based simulation results also reveal that the visual guidance system can significantly improve driving safety when it cooperates with the cloud Digital Twin system.

Long-Term Prediction of Lane Change Maneuver Through a Multilayer Perceptron

Jun 23, 2020

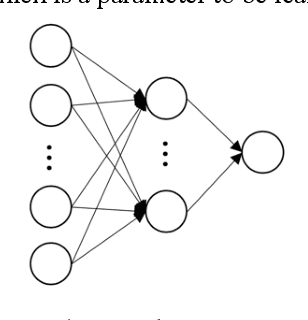

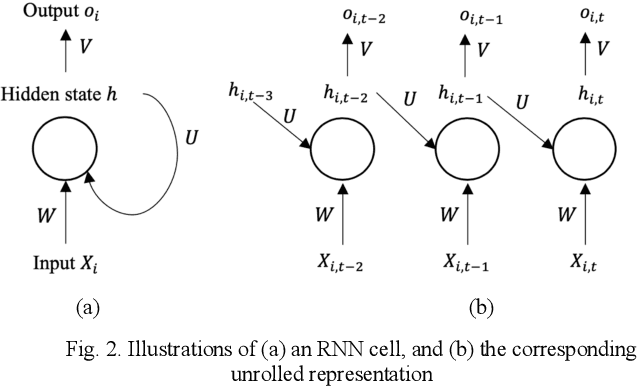

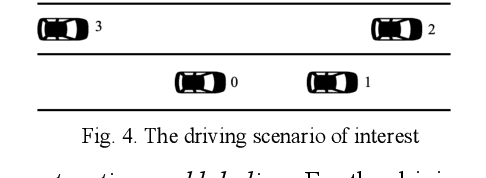

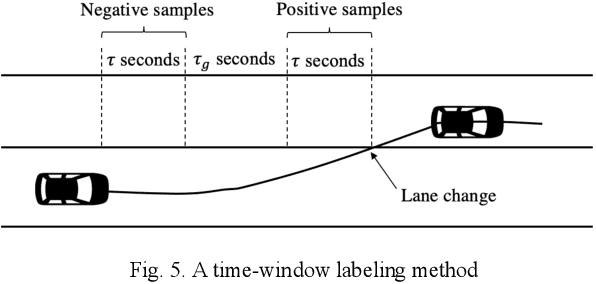

Abstract: Behavior prediction plays an essential role in both autonomous driving systems and Advanced Driver Assistance Systems (ADAS), since it enhances a vehicle's awareness of imminent hazards in the surrounding environment. Many existing lane change prediction models take lateral or angle information as input and make short-term (< 5 seconds) maneuver predictions. In this study, we propose a longer-term (5~10 seconds) prediction model that uses no lateral or angle information. Three prediction models are introduced, namely a logistic regression model, a multilayer perceptron (MLP) model, and a recurrent neural network (RNN) model, and their performance is compared using the real-world NGSIM dataset. To properly label the trajectory data, this study proposes a new time-window labeling scheme that adds a time gap between positive and negative samples. Two approaches are also proposed to address the unstable prediction issue: the aggressive approach propagates each positive prediction for a certain number of seconds, while the conservative approach adopts a rolling-window average to smooth the prediction. Evaluation results show that the developed prediction model is able to capture 75% of real lane change maneuvers with an average advance prediction time of 8.05 seconds.
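The two stabilization approaches described in the abstract can be sketched roughly as follows. This is a sketch under stated assumptions, not the paper's implementation: predictions are assumed to arrive at a fixed time step, the aggressive variant is assumed to operate on binary outputs and the conservative variant on probabilities, and the names `aggressive_smooth`, `conservative_smooth`, `hold_steps`, `window`, and `threshold` are all hypothetical.

```python
from collections import deque


def aggressive_smooth(preds, hold_steps):
    """Propagate each positive prediction for `hold_steps` time steps.

    `preds` is a sequence of 0/1 predictions. A positive at step t keeps the
    output positive for steps t .. t + hold_steps - 1 (assumed convention).
    """
    smoothed = []
    remaining = 0  # steps for which the output must stay positive
    for p in preds:
        if p == 1:
            remaining = hold_steps  # a new positive restarts the hold period
        if remaining > 0:
            smoothed.append(1)
            remaining -= 1
        else:
            smoothed.append(0)
    return smoothed


def conservative_smooth(probs, window, threshold=0.5):
    """Average the last `window` probabilities, then threshold the average.

    Early steps average over however many values are available so far.
    """
    recent = deque(maxlen=window)  # old values drop out automatically
    smoothed = []
    for p in probs:
        recent.append(p)
        average = sum(recent) / len(recent)
        smoothed.append(1 if average >= threshold else 0)
    return smoothed
```

In this sketch the aggressive variant turns one isolated positive into a sustained alert, which favors early warning at the cost of more false alarms, while the conservative variant needs several high values within the window before it reports a lane change.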
