Peisong Wang

Recent Advances in Efficient Computation of Deep Convolutional Neural Networks

Feb 11, 2018

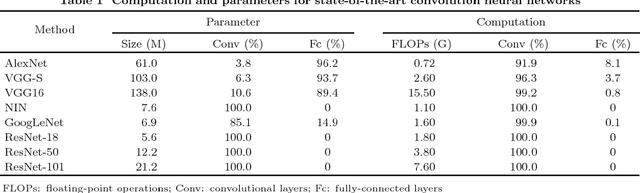

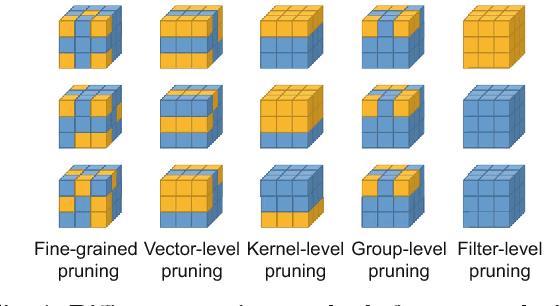

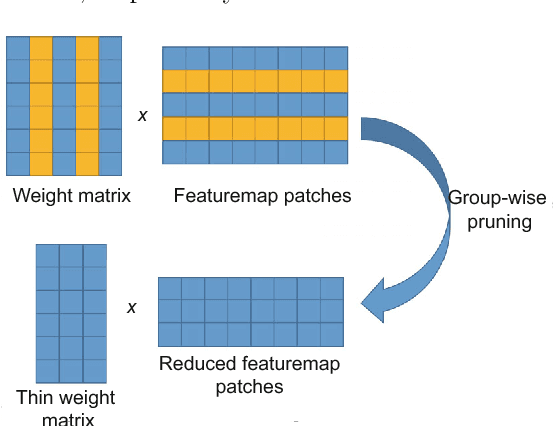

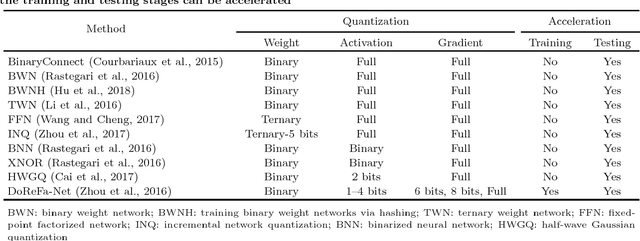

Abstract: Deep neural networks have evolved remarkably over the past few years and are now fundamental tools in many intelligent systems. At the same time, the computational complexity and resource consumption of these networks continue to increase. This poses a significant challenge to the deployment of such networks, especially in real-time applications or on resource-limited devices. Network acceleration has therefore become a hot topic within the deep learning community. On the hardware side, a number of FPGA- and ASIC-based accelerators for deep neural networks have been proposed in recent years. In this paper, we provide a comprehensive survey of recent advances in network acceleration, compression and accelerator design from both the algorithm and hardware points of view. Specifically, we provide a thorough analysis of each of the following topics: network pruning, low-rank approximation, network quantization, teacher-student networks, compact network design and hardware accelerators. Finally, we introduce and discuss a few possible future directions.

From Hashing to CNNs: Training Binary Weight Networks via Hashing

Feb 08, 2018

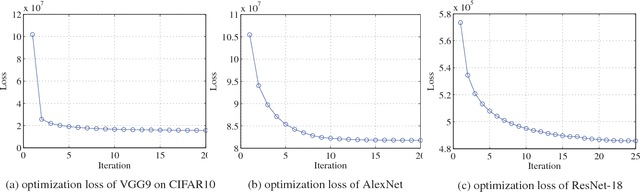

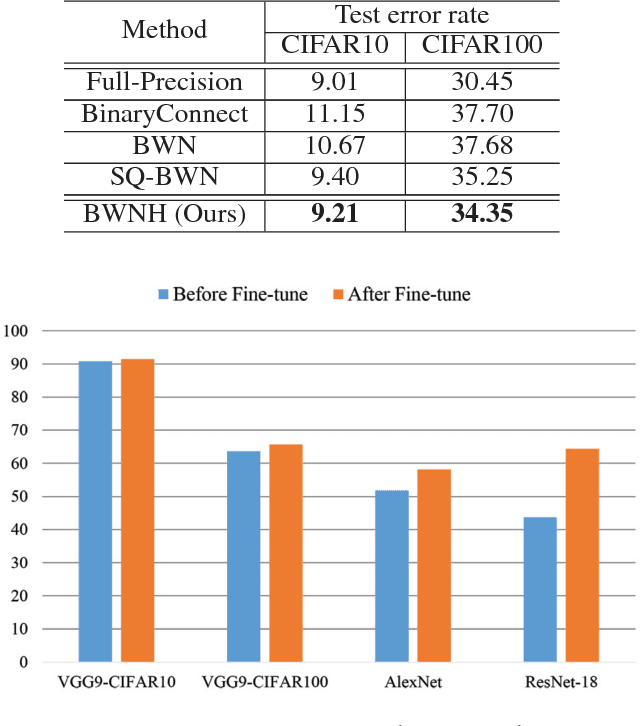

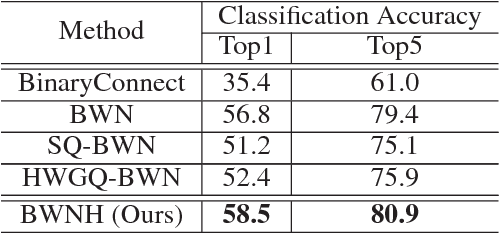

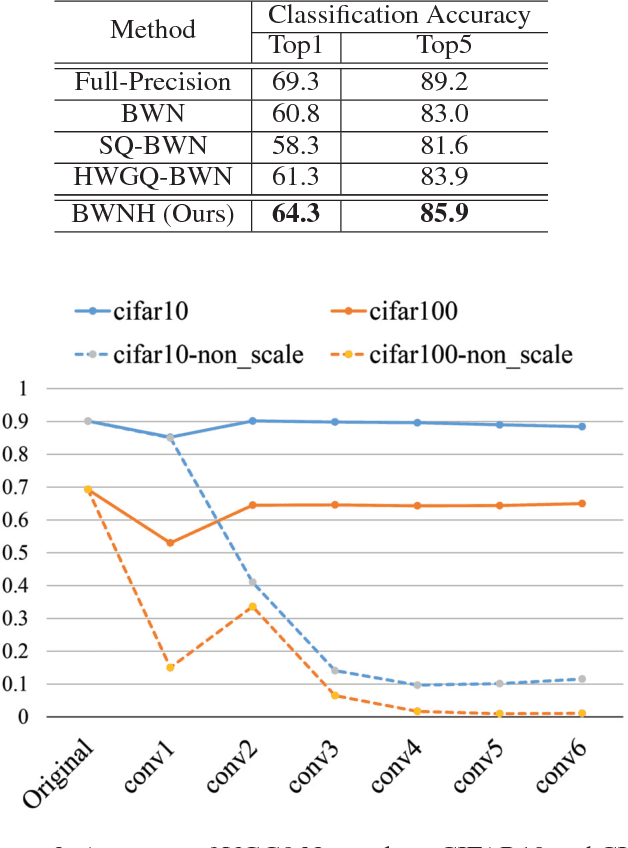

Abstract: Deep convolutional neural networks (CNNs) have shown appealing performance on various computer vision tasks in recent years. This motivates people to deploy CNNs in real-world applications. However, most state-of-the-art CNNs require large amounts of memory and computational resources, which hinders their deployment on mobile devices. Recent studies show that low-bit weight representations can greatly reduce storage and memory demand and also enable efficient network inference. To achieve this goal, we propose a novel approach named BWNH to train Binary Weight Networks via Hashing. In this paper, we first reveal the strong connection between inner-product preserving hashing and binary weight networks, and show that training binary weight networks can be intrinsically regarded as a hashing problem. Based on this perspective, we propose an alternating optimization method to learn the hash codes instead of directly learning binary weights. Extensive experiments on CIFAR10, CIFAR100 and ImageNet demonstrate that our proposed BWNH outperforms the current state of the art by a large margin.
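
As a rough illustration of the idea in this abstract, the sketch below fits a scaled binary vector `alpha * b` to a full-precision weight vector `w` so that inner products with a set of inputs `X` are preserved as well as possible. It alternates between a closed-form update of the scale and greedy sign flips of the code. This is not the BWNH algorithm from the paper: the function names, the single-scale formulation and the greedy flip rule are all assumptions chosen to keep the example short.

```python
def dot(u, v):
    """Plain inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def layer_outputs(X, w):
    """Inner product of every input row in X with the weight vector w."""
    return [dot(row, w) for row in X]

def error(target, proj, alpha):
    """Squared gap between full-precision outputs and alpha-scaled binary outputs."""
    return sum((t - alpha * p) ** 2 for t, p in zip(target, proj))

def fit_binary_weights(X, w, n_iters=5):
    """Hypothetical helper: alternate between the scale alpha and the binary code b."""
    target = layer_outputs(X, w)
    # Start from the sign of the full-precision weights.
    b = [1.0 if wi >= 0 else -1.0 for wi in w]
    alpha = 0.0
    for _ in range(n_iters):
        # Step 1: with b fixed, alpha is a one-dimensional least-squares fit.
        proj = layer_outputs(X, b)
        denom = dot(proj, proj)
        alpha = dot(target, proj) / denom if denom > 0 else 0.0
        # Step 2: with alpha fixed, keep a sign flip only if it lowers the error.
        for j in range(len(b)):
            before = error(target, layer_outputs(X, b), alpha)
            b[j] = -b[j]
            if error(target, layer_outputs(X, b), alpha) >= before:
                b[j] = -b[j]  # revert the flip
    return alpha, b
```

Neither step can increase the inner-product error, so the result is never worse than plain sign binarization with its best scale. The method in the paper may differ substantially in both objective and update rule.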

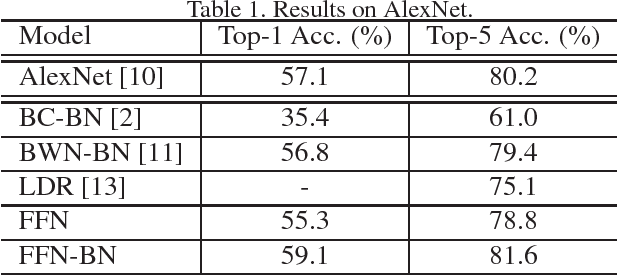

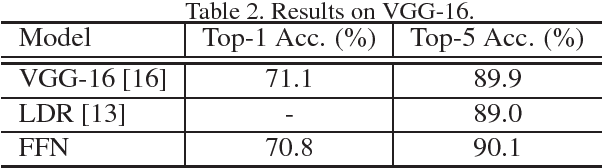

Fixed-point Factorized Networks

Aug 29, 2017

Abstract: In recent years, Deep Neural Network (DNN) based methods have achieved remarkable performance in a wide range of tasks and have been among the most powerful and widely used techniques in computer vision. However, DNN-based methods are both computationally intensive and resource-consuming, which hinders their application on embedded systems such as smartphones. To alleviate this problem, we introduce novel Fixed-point Factorized Networks (FFN) for pretrained models to reduce the computational complexity as well as the storage requirement of networks. The resulting networks have only weights of -1, 0 and 1, which largely eliminates multiply-accumulate operations (MACs), the most resource-consuming operations. Extensive experiments on the large-scale ImageNet classification task show that the proposed FFN requires only one-thousandth of the multiply operations with comparable accuracy.
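
To see why weights restricted to -1, 0 and 1 remove multiplications, consider a single inner product. The sketch below is a minimal illustration, not the FFN implementation: `ternary_dot` is a hypothetical name, and the factorization that produces the ternary weights is not shown.

```python
def ternary_dot(weights, activations):
    """Inner product with weights in {-1, 0, 1}, using only additions and subtractions."""
    acc = 0.0
    for w, x in zip(weights, activations):
        if w == 1:
            acc += x      # weight +1: add the activation
        elif w == -1:
            acc -= x      # weight -1: subtract the activation
        elif w != 0:
            raise ValueError("weights must be -1, 0 or 1")
        # weight 0: the activation is skipped entirely
    return acc
```

For example, `ternary_dot([1, 0, -1, 1], [2.0, 5.0, 3.0, 0.5])` computes `2.0 - 3.0 + 0.5` without a single multiply.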
