Pedro Antonio Alarcon Granadeno

Cognitive Guardrails for Open-World Decision Making in Autonomous Drone Swarms

May 29, 2025

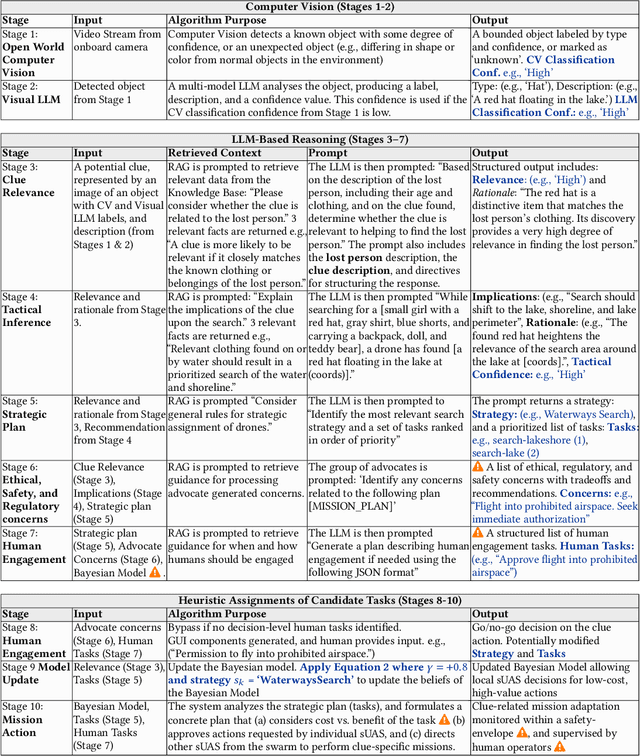

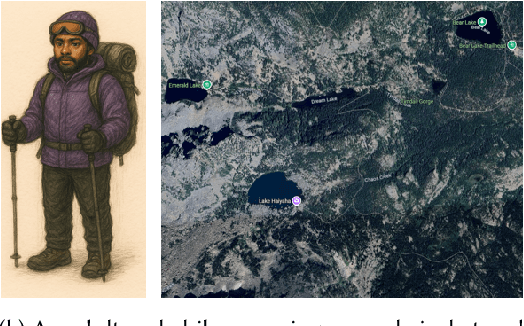

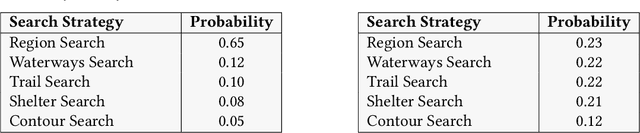

Abstract: Small Uncrewed Aerial Systems (sUAS) are increasingly deployed as autonomous swarms in search-and-rescue and other disaster-response scenarios. In these settings, they use computer vision (CV) to detect objects of interest and autonomously adapt their missions. However, traditional CV systems often struggle to recognize unfamiliar objects in open-world environments or to infer their relevance for mission planning. To address this, we incorporate large language models (LLMs) to reason about detected objects and their implications. While LLMs can offer valuable insights, they are also prone to hallucinations and may produce incorrect, misleading, or unsafe recommendations. To ensure safe and sensible decision-making under uncertainty, high-level decisions must be governed by cognitive guardrails. This article presents the design, simulation, and real-world integration of these guardrails for sUAS swarms in search-and-rescue missions.

Land-Coverage Aware Path-Planning for Multi-UAV Swarms in Search and Rescue Scenarios

May 12, 2025

Abstract: Unmanned Aerial Vehicles (UAVs) have become vital in search-and-rescue (SAR) missions, with autonomous mission planning improving response times and coverage efficiency. Early approaches primarily used path-planning techniques such as A*, potential fields, or Dijkstra's algorithm, while recent approaches have incorporated meta-heuristic frameworks like genetic algorithms and particle swarm optimization to balance competing objectives such as network connectivity, energy efficiency, and strategic placement of charging stations. However, terrain-aware path planning remains under-explored, despite its critical role in optimizing UAV SAR deployments. To address this gap, we present a computer-vision-based, terrain-aware mission planner that autonomously extracts and analyzes terrain topology to enhance SAR pre-flight planning. Our framework uses a deep segmentation network fine-tuned on our own collection of landcover datasets to transform satellite imagery into a structured, grid-based representation of the operational area. This classification enables terrain-specific UAV-task allocation, improving deployment strategies in complex environments. We address the challenge of irregular terrain partitions by introducing a two-stage partitioning scheme that first evaluates terrain monotonicity along coordinate axes before applying a cost-based recursive partitioning process, minimizing unnecessary splits and optimizing path efficiency. Empirical validation in a high-fidelity simulation environment demonstrates that our approach improves search and dispatch time over multiple meta-heuristic techniques and against a competing state-of-the-art method. These results highlight its potential for large-scale SAR operations, where rapid response and efficient UAV coordination are critical.

Multi-source Plume Tracing via Multi-Agent Reinforcement Learning

May 12, 2025

Abstract: Industrial catastrophes like the Bhopal disaster (1984) and the Aliso Canyon gas leak (2015) demonstrate the urgent need for rapid and reliable plume tracing algorithms to protect public health and the environment. Traditional methods, such as gradient-based or biologically inspired approaches, often fail in realistic, turbulent conditions. To address these challenges, we present a Multi-Agent Reinforcement Learning (MARL) algorithm designed for localizing multiple airborne pollution sources using a swarm of small uncrewed aerial systems (sUAS). Our method models the problem as a Partially Observable Markov Game (POMG), employing a Long Short-Term Memory (LSTM)-based Action-specific Double Deep Recurrent Q-Network (ADDRQN) that uses full sequences of historical action-observation pairs, effectively approximating latent states. Unlike prior work, we use a general-purpose simulation environment based on the Gaussian Plume Model (GPM), incorporating realistic elements such as a three-dimensional environment, sensor noise, multiple interacting agents, and multiple plume sources. The incorporation of action histories as part of the inputs further enhances the adaptability of our model in complex, partially observable environments. Extensive simulations show that our algorithm significantly outperforms conventional approaches. Specifically, agents using our model need to explore only 1.29% of the environment to successfully locate pollution sources.
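For readers unfamiliar with the Gaussian Plume Model mentioned in the abstract, the following is a minimal sketch of the standard textbook steady-state form with ground reflection. It is not the authors' simulation environment. The function name `gaussian_plume`, the default parameter values, and the linear dispersion growth (`sigma = a * x`) are all illustrative assumptions; practical models usually take dispersion coefficients from atmospheric stability classes.

```python
import math


def gaussian_plume(x, y, z, q=1.0, u=2.0, h=10.0, a_y=0.08, a_z=0.06):
    """Concentration at (x, y, z) from a point source at the origin.

    x: downwind distance (m), y: crosswind offset (m), z: height (m).
    q: emission rate (g/s), u: wind speed along +x (m/s),
    h: effective release height (m).
    a_y, a_z: hypothetical linear dispersion growth rates (assumption).
    """
    if x <= 0.0:
        # The steady-state model predicts no concentration upwind of the source.
        return 0.0
    sigma_y = a_y * x  # crosswind spread (assumed linear in x)
    sigma_z = a_z * x  # vertical spread (assumed linear in x)
    lateral = math.exp(-(y ** 2) / (2.0 * sigma_y ** 2))
    # The second term is the image source that models reflection at the ground.
    vertical = (
        math.exp(-((z - h) ** 2) / (2.0 * sigma_z ** 2))
        + math.exp(-((z + h) ** 2) / (2.0 * sigma_z ** 2))
    )
    return q / (2.0 * math.pi * u * sigma_y * sigma_z) * lateral * vertical


if __name__ == "__main__":
    # Concentration on the plume centerline versus 20 m off-axis.
    print(gaussian_plume(100.0, 0.0, 10.0))
    print(gaussian_plume(100.0, 20.0, 10.0))
```

Under this model, a multi-source field can be approximated by summing the contributions of each source after shifting coordinates to that source's location. Sensor noise, which the abstract also mentions, would be added on top of these values.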
