Paolo Gibertini

An Asynchronous Mixed-Signal Resonate-and-Fire Neuron

Dec 08, 2025

Abstract: Analog computing at the edge is an emerging strategy to limit data storage and transmission requirements, as well as energy consumption, and its practical implementation is in its initial stages of development. Translating properties of biological neurons into hardware offers a pathway towards low-power, real-time edge processing. Specifically, resonator neurons offer selectivity to specific frequencies as a potential solution for temporal signal processing. Here, we show a fabricated Complementary Metal-Oxide-Semiconductor (CMOS) mixed-signal Resonate-and-Fire (R&F) neuron circuit implementation that emulates the behavior of these neural cells responsible for controlling oscillations within the central nervous system. We integrate the design with asynchronous handshake capabilities, perform comprehensive variability analyses, and characterize its frequency detection functionality. Our results demonstrate the feasibility of large-scale integration within neuromorphic systems, thereby advancing the exploitation of bio-inspired circuits for efficient edge temporal signal processing.

Bruno: Backpropagation Running Undersampled for Novel device Optimization

May 23, 2025

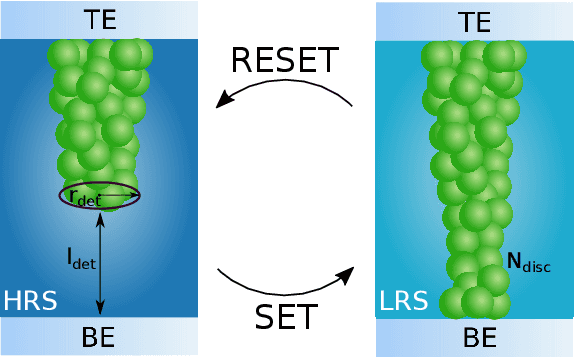

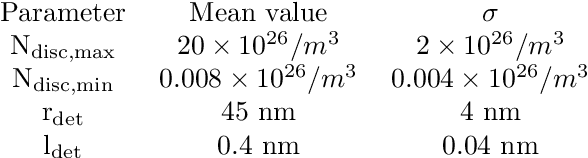

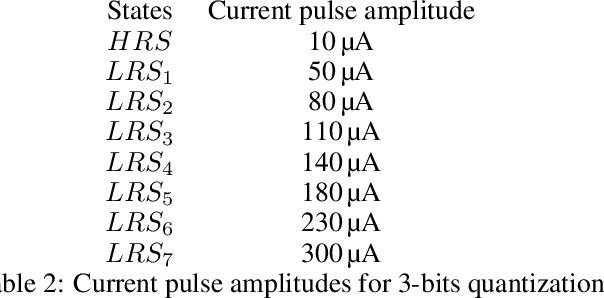

Abstract: Recent efforts to improve the efficiency of neuromorphic and machine learning systems have focused on the development of application-specific integrated circuits (ASICs), which provide hardware specialized for the deployment of neural networks, leading to potential gains in efficiency and performance. These systems typically feature an architecture that goes beyond the von Neumann architecture employed in general-purpose hardware such as GPUs. Neural networks developed for this specialized hardware then need to take into account the specifics of the hardware platform, which requires novel training algorithms and accurate models of the hardware, since it cannot be abstracted as a general-purpose computing platform. In this work, we present a bottom-up approach to train neural networks for hardware based on spiking neurons and synapses built on ferroelectric capacitors (FeCap) and resistive switching non-volatile devices (RRAM), respectively. In contrast to the more common approach of designing hardware to fit existing abstract neuron or synapse models, this approach starts with compact models of the physical device to model the computational primitive of the neurons. Based on these models, a training algorithm is developed that can reliably backpropagate through these physical models, even when applying common hardware limitations, such as stochasticity, variability, and low bit precision. The training algorithm is then tested on a spatio-temporal dataset with a network composed of quantized synapses based on RRAM and ferroelectric leaky integrate-and-fire (FeLIF) neurons. The performance of the network is compared with different networks composed of LIF neurons. The results of the experiments show the potential advantage of using BRUNO to train networks with FeLIF neurons, by achieving a reduction in both time and memory for detecting spatio-temporal patterns with quantized synapses.

A Ferroelectric Tunnel Junction-based Integrate-and-Fire Neuron

Nov 04, 2022

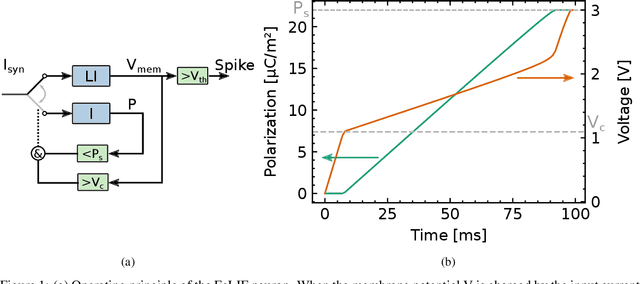

Abstract: Event-based neuromorphic systems provide a low-power solution by using artificial neurons and synapses to process data asynchronously in the form of spikes. Ferroelectric Tunnel Junctions (FTJs) are ultra-low-power memory devices and are well suited for integration into these systems. Here, we present a hybrid FTJ-CMOS Integrate-and-Fire neuron, which constitutes a fundamental building block for new-generation neuromorphic networks for edge computing. We demonstrate electrically tunable neural dynamics achievable by tuning the switching of the FTJ device.
