Paolo Cabras

ICube

A Skull-Adaptive Framework for AI-Based 3D Transcranial Focused Ultrasound Simulation

May 19, 2025

Abstract: Transcranial focused ultrasound (tFUS) is an emerging modality for non-invasive brain stimulation and therapeutic intervention, offering millimeter-scale spatial precision and the ability to target deep brain structures. However, the heterogeneous and anisotropic nature of the human skull introduces significant distortions to the propagating ultrasound wavefront, which require time-consuming patient-specific planning and corrections using numerical solvers for accurate targeting. To enable data-driven approaches in this domain, we introduce TFUScapes, the first large-scale, high-resolution dataset of tFUS simulations through anatomically realistic human skulls derived from T1-weighted MRI images. We have developed a scalable simulation engine pipeline using the k-Wave pseudo-spectral solver, where each simulation returns a steady-state pressure field generated by a focused ultrasound transducer placed at realistic scalp locations. In addition to the dataset, we present DeepTFUS, a deep learning model that estimates normalized pressure fields directly from input 3D CT volumes and transducer position. The model extends a U-Net backbone with transducer-aware conditioning, incorporating Fourier-encoded position embeddings and MLP layers to create global transducer embeddings. These embeddings are fused with U-Net encoder features via feature-wise modulation, dynamic convolutions, and cross-attention mechanisms. The model is trained using a combination of spatially weighted and gradient-sensitive loss functions, enabling it to approximate high-fidelity wavefields. The TFUScapes dataset is publicly released to accelerate research at the intersection of computational acoustics, neurotechnology, and deep learning. The project page is available at https://github.com/CAMMA-public/TFUScapes.
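
As an illustration of the "Fourier-encoded position embeddings" mentioned in the abstract, the sketch below shows one common form of Fourier feature encoding for a 3D position. It is not taken from DeepTFUS: the function name `fourier_encode`, the power-of-two frequency schedule, the number of frequencies, and the assumption that coordinates are normalized to [0, 1] are all hypothetical choices made for this example.

```python
import math


def fourier_encode(position, num_frequencies=4):
    """Encode each coordinate x as sin(2^k * pi * x) and cos(2^k * pi * x)
    for k = 0 .. num_frequencies - 1 (an assumed frequency schedule)."""
    features = []
    for x in position:
        for k in range(num_frequencies):
            angle = (2.0 ** k) * math.pi * x
            features.append(math.sin(angle))
            features.append(math.cos(angle))
    return features


# Hypothetical transducer position, normalized to [0, 1] along each axis.
embedding = fourier_encode((0.25, 0.5, 0.75), num_frequencies=4)
print(len(embedding))  # 3 coordinates * 4 frequencies * 2 functions = 24
```

In a model of the kind the abstract describes, a vector like this would typically be passed through MLP layers to form a global embedding before being fused with image features; the details of that fusion are not specified here.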

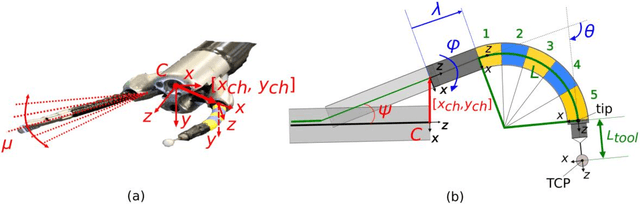

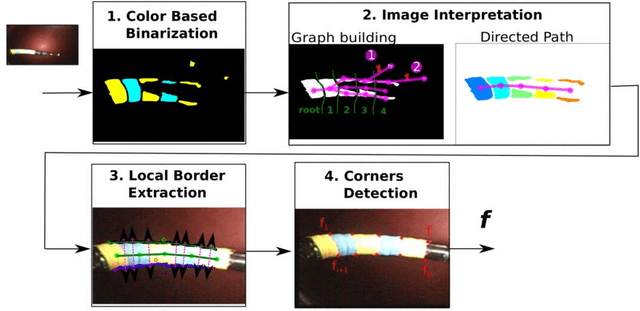

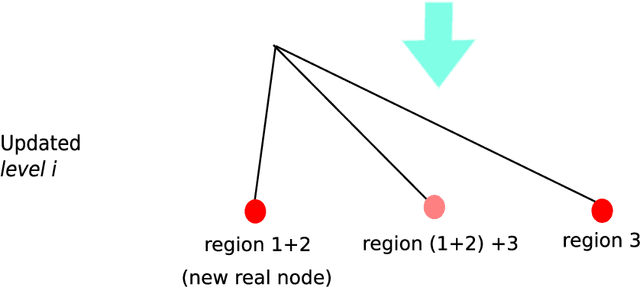

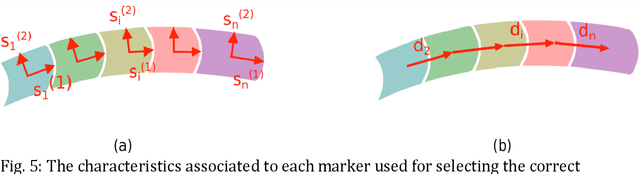

An adaptive and fully automatic method for estimating the 3D position of bendable instruments using endoscopic images

Nov 29, 2019

Abstract: Background. Flexible bendable instruments are key tools for performing surgical endoscopy. Being able to measure the 3D position of such instruments can be useful for various tasks, such as automatically controlling robotized instruments and analyzing motions. Methods. We propose an automatic method to infer the 3D pose of a single-bending-section instrument, using only the images provided by a monocular camera embedded at the tip of the endoscope. The proposed method relies on colored markers attached to the bending section. The image of the instrument is segmented using a graph-based method, and the corners of the markers are extracted by detecting the color transition along Bézier curves fitted on edge points. These features are accurately located and then used to estimate the 3D pose of the instrument using an adaptive model that accounts for the mechanical play between the instrument and its housing channel. Results. The feature extraction method provides good localization of marker corners in images of in vivo environments despite sensor saturation due to strong lighting. The RMS error on the estimation of the tip position of the instrument for laboratory experiments was 2.1, 1.96, and 3.18 mm in the x, y, and z directions, respectively. Qualitative analysis in the case of in vivo images shows the ability to correctly estimate the 3D position of the instrument tip during real motions. Conclusions. The proposed method provides an automatic and accurate estimation of the 3D position of the tip of a bendable instrument in realistic conditions, where standard approaches fail.
