Panos Trahanias

INDOOR-LiDAR: Bridging Simulation and Reality for Robot-Centric 360 degree Indoor LiDAR Perception -- A Robot-Centric Hybrid Dataset

Dec 13, 2025

Abstract: We present INDOOR-LIDAR, a comprehensive hybrid dataset of indoor 3D LiDAR point clouds designed to advance research in robot perception. Existing indoor LiDAR datasets often suffer from limited scale, inconsistent annotation formats, and human-induced variability during data collection. INDOOR-LIDAR addresses these limitations by integrating simulated environments with real-world scans acquired using autonomous ground robots, providing consistent coverage and realistic sensor behavior under controlled variations. Each sample consists of dense point cloud data enriched with intensity measurements and KITTI-style annotations. The annotation schema encompasses common indoor object categories within various scenes. The simulated subset enables flexible configuration of layouts, point densities, and occlusions, while the real-world subset captures authentic sensor noise, clutter, and domain-specific artifacts characteristic of real indoor settings. INDOOR-LIDAR supports a wide range of applications including 3D object detection, bird's-eye-view (BEV) perception, SLAM, semantic scene understanding, and domain adaptation between simulated and real indoor domains. By bridging the gap between synthetic and real-world data, INDOOR-LIDAR establishes a scalable, realistic, and reproducible benchmark for advancing robotic perception in complex indoor environments.

A control scheme for collaborative object transportation between a human and a quadruped robot using the MIGHTY suction cup

Aug 01, 2025

Abstract: In this work, a control scheme for human-robot collaborative object transportation is proposed, considering a quadruped robot equipped with the MIGHTY suction cup that serves both as a gripper for holding the object and a force/torque sensor. The proposed control scheme is based on the notion of admittance control, and incorporates a variable damping term aiming towards increasing the controllability of the human and, at the same time, decreasing her/his effort. Furthermore, to ensure that the object is not detached from the suction cup during the collaboration, an additional control signal is proposed, which is based on a barrier artificial potential. The proposed control scheme is proven to be passive and its performance is demonstrated through experimental evaluations conducted using the Unitree Go1 robot equipped with the MIGHTY suction cup.

* Please find the citation info at Zenodo or arXiv, as the proceedings of ICRA are no longer sent to IEEE Xplore

IndoorBEV: Joint Detection and Footprint Completion of Objects via Mask-based Prediction in Indoor Scenarios for Bird's-Eye View Perception

Jul 23, 2025

Abstract: Detecting diverse objects within complex indoor 3D point clouds presents significant challenges for robotic perception, particularly with varied object shapes, clutter, and the co-existence of static and dynamic elements where traditional bounding box methods falter. To address these limitations, we propose IndoorBEV, a novel mask-based Bird's-Eye View (BEV) method for indoor mobile robots. In a BEV method, a 3D scene is projected into a 2D BEV grid, which naturally handles occlusions and provides a consistent top-down view that helps distinguish static obstacles from dynamic agents. The resulting 2D BEV output is directly usable by downstream robotic tasks such as navigation, motion prediction, and planning. Our architecture utilizes an axis compact encoder and a window-based backbone to extract rich spatial features from this BEV map. A query-based decoder head then employs learned object queries to concurrently predict object classes and instance masks in the BEV space. This mask-centric formulation effectively captures the footprint of both static and dynamic objects regardless of their shape, offering a robust alternative to bounding box regression. We demonstrate the effectiveness of IndoorBEV on a custom indoor dataset featuring diverse object classes including static objects and dynamic elements like robots and miscellaneous items, showcasing its potential for robust indoor scene understanding.
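The projection step mentioned in the abstract above, from a 3D point cloud to a 2D top-down grid, can be illustrated with a minimal sketch. This is not the IndoorBEV pipeline: the function name `points_to_bev`, the per-cell point count as the cell value, and the grid extents are all assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple


def points_to_bev(
    points: Sequence[Tuple[float, float, float]],
    x_range: Tuple[float, float],
    y_range: Tuple[float, float],
    cell_size: float,
) -> List[List[int]]:
    """Project (x, y, z) points onto a top-down grid of per-cell point counts.

    Hypothetical helper: the height (z) is simply dropped, so every point
    above a cell contributes to that cell.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    n_cols = int(round((x_max - x_min) / cell_size))
    n_rows = int(round((y_max - y_min) / cell_size))
    grid = [[0] * n_cols for _ in range(n_rows)]

    for x, y, _z in points:
        # Skip points that fall outside the chosen grid extent.
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue
        # Clamp to guard against floating-point rounding at the upper edge.
        col = min(int((x - x_min) / cell_size), n_cols - 1)
        row = min(int((y - y_min) / cell_size), n_rows - 1)
        grid[row][col] += 1
    return grid


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.0), (0.1, 0.1, 1.5), (1.9, 0.1, 0.3), (5.0, 5.0, 0.0)]
    for row in points_to_bev(cloud, (0.0, 2.0), (0.0, 2.0), 1.0):
        print(row)
```

Two points stacked at different heights land in the same cell, which shows how the top-down view collapses the vertical axis. A learned model would typically consume richer per-cell features (for example height or intensity statistics) rather than raw counts.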

Two-layer adaptive trajectory tracking controller for quadruped robots on slippery terrains

Apr 03, 2023

Abstract: Task space trajectory tracking for quadruped robots plays a crucial role in achieving dexterous maneuvers in unstructured environments. To fulfill the control objective, the robot should apply forces through the contact of the legs with the supporting surface, while maintaining its stability and controllability. In order to ensure the operation of the robot under these conditions, one has to account for the possibility of unstable contact of the legs that arises when the robot operates on partially or globally slippery terrains. In this work, we propose an adaptive trajectory tracking controller for quadruped robots, which involves two prioritized layers of adaptation for avoiding possible slippage of one or multiple legs. The adaptive framework is evaluated through simulations and validated through experiments.

Probabilistic Contact State Estimation for Legged Robots using Inertial Information

Mar 04, 2023

Abstract: Legged robot navigation in unstructured and slippery terrains depends heavily on the ability to accurately identify the quality of contact between the robot's feet and the ground. Contact state estimation is regarded as a challenging problem and is typically addressed by exploiting force measurements, joint encoders and/or robot kinematics and dynamics. In contrast to most state-of-the-art approaches, the current work introduces a novel probabilistic method for estimating the contact state based solely on proprioceptive sensing, as it is readily available from Inertial Measurement Units (IMUs) mounted on the robot's end effectors. Capitalizing on the uncertainty of IMU measurements, our method estimates the probability of stable contact. This is accomplished by approximating the multimodal probability density function over a batch of data points for each axis of the IMU with Kernel Density Estimation. The proposed method has been extensively assessed against both real and simulated scenarios on bipedal and quadrupedal robotic platforms such as ATLAS, TALOS and Unitree's GO1.
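As a rough illustration of the idea of per-axis Kernel Density Estimation over a batch of IMU samples, the sketch below fits a Gaussian KDE to each axis and converts it into a probability. The abstract does not state how the density is mapped to a contact probability, so the rule used here is an assumption: the probability mass inside a tolerance band around zero is taken per axis, and the axes are multiplied as if independent. The names `mass_near_zero` and `stable_contact_probability`, the bandwidth, and the tolerance are all hypothetical.

```python
import math
from typing import Sequence


def _normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mass_near_zero(samples: Sequence[float], bandwidth: float, tol: float) -> float:
    """Probability mass of a Gaussian KDE inside [-tol, tol].

    A Gaussian KDE is an equal-weight mixture of normals centred on the
    samples, so its integral over an interval has a closed form.
    """
    total = 0.0
    for s in samples:
        total += _normal_cdf((tol - s) / bandwidth) - _normal_cdf((-tol - s) / bandwidth)
    return total / len(samples)


def stable_contact_probability(
    batch_per_axis: Sequence[Sequence[float]], bandwidth: float, tol: float
) -> float:
    """Combine per-axis masses into one probability.

    Assumption: a foot in stable contact yields readings near zero on every
    axis, and the axes are treated as independent (simple product).
    """
    prob = 1.0
    for samples in batch_per_axis:
        prob *= mass_near_zero(samples, bandwidth, tol)
    return prob


if __name__ == "__main__":
    still = [[0.0, 0.01, -0.01]] * 3      # readings clustered around zero
    slipping = [[2.0, 2.1, 1.9]] * 3      # readings far from zero
    print(stable_contact_probability(still, bandwidth=0.05, tol=0.5))
    print(stable_contact_probability(slipping, bandwidth=0.05, tol=0.5))
```

The batch clustered around zero yields a probability close to one, while the batch far from zero yields a value close to zero. In practice the bandwidth and tolerance would need to be tuned to the sensor's noise characteristics.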

Robust Contact State Estimation in Humanoid Walking Gaits

Jul 30, 2022

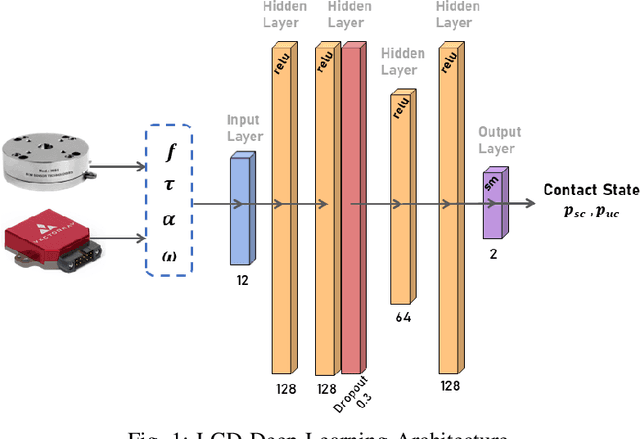

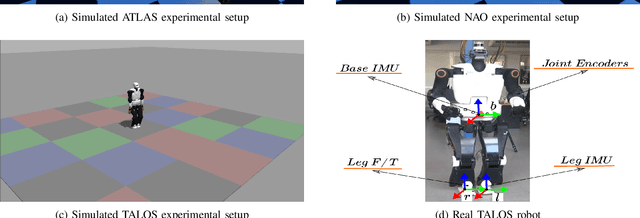

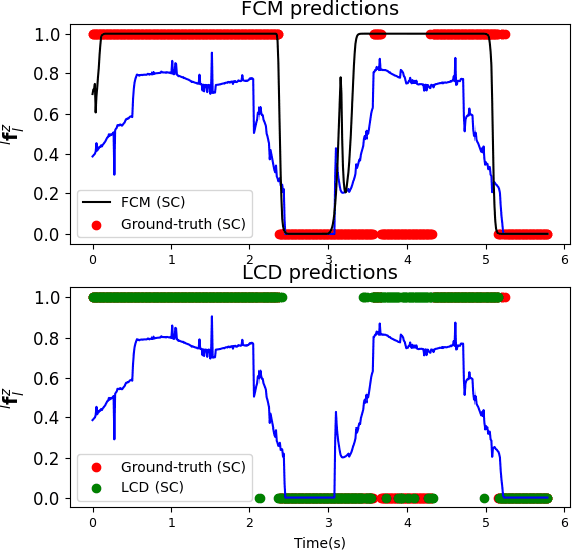

Abstract: In this article, we propose a deep learning framework that provides a unified approach to the problem of leg contact detection in humanoid robot walking gaits. Our formulation accurately and robustly estimates the contact state probability for each leg (i.e., stable or slip/no contact). The proposed framework employs solely proprioceptive sensing and, although it relies on simulated ground-truth contact data for the classification process, we demonstrate that it generalizes across varying friction surfaces and different legged robotic platforms and, at the same time, is readily transferred from simulation to practice. The framework is quantitatively and qualitatively assessed in simulation via the use of ground-truth contact data and is contrasted against state-of-the-art methods with an ATLAS, a NAO, and a TALOS humanoid robot. Furthermore, its efficacy is demonstrated in base estimation with a real TALOS humanoid. To reinforce further research endeavors, our implementation is offered as an open-source ROS/Python package, coined Legged Contact Detection (LCD).